OpenVINO 2022.3之八:OpenVINO Async API

OpenVINO Async API 是由 OpenVINO 工具包提供的编程接口,用于实现深度学习模型的异步推理。它允许开发人员并发执行多个推理请求,并优化硬件资源的利用率。

OpenVINO 推理请求的API提供了同步和异步执行。ov::InferRequest::infer() 本质上是同步的并且易于操作。异步将infer()“拆分”成ov::InferRequest::start_async() 和 ov::InferRequest::wait() (或回调函数)。虽然同步API可能更容易入手,但在生产代码中推荐使用异步API。因为异步API实现任意可能数量请求的流程控制。

c++示例代码:

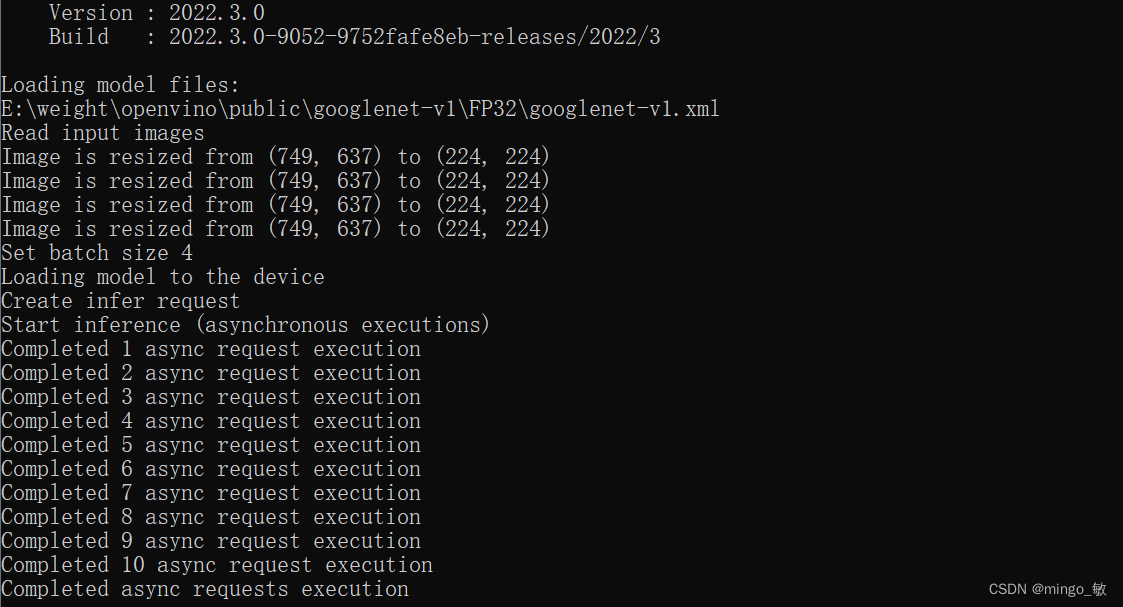

图像分类代码,代码来自openvino 示例

#include #include

#include

#include

python示例代码:

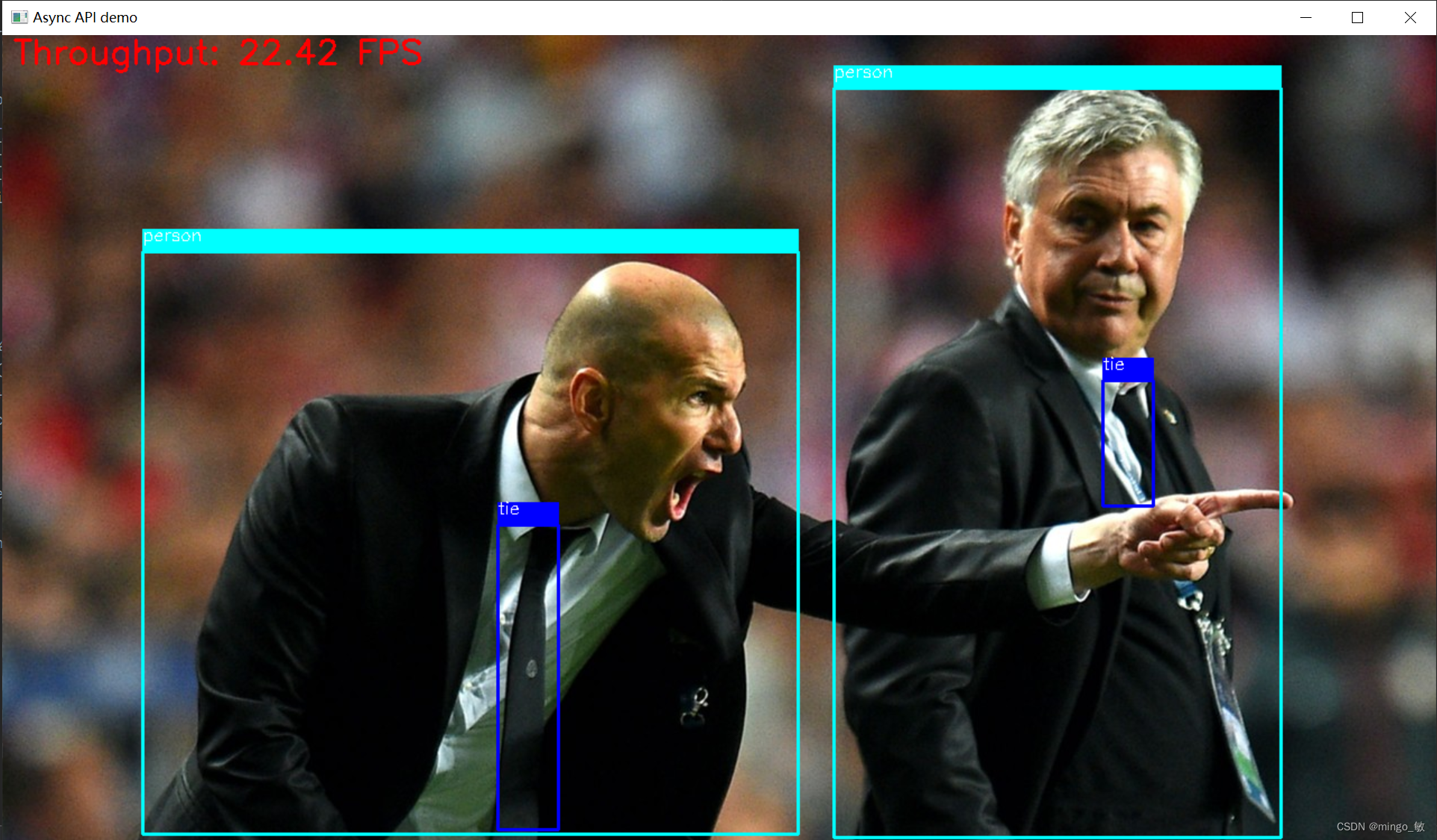

yolov5 目标检测,缺失权重文件或者代码请去ultralytics/yolov5

import cv2

import math

import numpy as np

import time

from typing import Tupleimport torchvision

import yaml

import torch

from openvino.runtime import Core, Tensor# Load COCO Label from yolov5/data/coco.yaml

with open('./data/coco.yaml', 'r', encoding='utf-8') as f:result = yaml.load(f.read(), Loader=yaml.FullLoader)

class_list = result['names']# Step1: Create OpenVINO Runtime Core

core = Core()

# Step2: Compile the Model for the dedicated device: CPU/GPU.0/GPU.1...

net = core.compile_model("./weights/yolov5s_openvino_model/yolov5s.xml", "CPU")# get input node and output node

input_node = net.inputs[0]

output_node = net.outputs[0]# Step 3. Create 1 Infer_request for current frame, 1 for next frame

infer_request_curr = net.create_infer_request()

infer_request_next = net.create_infer_request()# color palette

colors = [(255, 255, 0), (0, 255, 0), (0, 255, 255), (255, 0, 0)]

# import the letterbox for preprocess the frame

# from utils.augmentations import letterboximage_paths = ["./images/bus.jpg", "./images/zidane.jpg"]

# Get the current frame

frame_curr = cv2.imread(image_paths[0])

# Preprocess the frame

letterbox_img_curr, _, _ = letterbox(frame_curr, auto=False)

# Normalization + Swap RB + Layout from HWC to NCHW

blob = Tensor(cv2.dnn.blobFromImage(letterbox_img_curr, 1 / 255.0, swapRB=True))

# Transfer the blob into the model

infer_request_curr.set_tensor(input_node, blob)

# Start the current frame Async Inference

infer_request_curr.start_async()for idx in range(100):# Calculate the end-to-end process throughput.start = time.time()# Get the next frameframe_next = cv2.imread(image_paths[idx%len(image_paths)])# Preprocess the frameletterbox_img_next, _, _ = letterbox(frame_next, auto=False)# Normalization + Swap RB + Layout from HWC to NCHWblob = Tensor(cv2.dnn.blobFromImage(letterbox_img_next, 1 / 255.0, swapRB=True))# Transfer the blob into the modelinfer_request_next.set_tensor(input_node, blob)# Start the next frame Async Inferenceinfer_request_next.start_async()# wait for the current frame inference resultinfer_request_curr.wait()# Get the inference result from the output_nodeinfer_result = infer_request_curr.get_tensor(output_node)# Postprocess the inference resultdata = torch.tensor(infer_result.data)# Postprocess of YOLOv5:NMSdets = non_max_suppression(data)[0].numpy()bboxes, scores, class_ids = dets[:, :4], dets[:, 4], dets[:, 5]# rescale the coordinatesbboxes = scale_coords(letterbox_img_curr.shape[:-1], bboxes, frame_curr.shape[:-1]).astype(int)# show bbox of detectionsfor bbox, score, class_id in zip(bboxes, scores, class_ids):color = colors[int(class_id) % len(colors)]cv2.rectangle(frame_curr, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)cv2.rectangle(frame_curr, (bbox[0], bbox[1] - 20), (bbox[2], bbox[1]), color, -1)cv2.putText(frame_curr, class_list[class_id], (bbox[0], bbox[1] - 10), cv2.FONT_HERSHEY_SIMPLEX, .5,(255, 255, 255))end = time.time()# show FPSfps = (1 / (end - start))fps_label = "Throughput: %.2f FPS" % fpscv2.putText(frame_curr, fps_label, (10, 25), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)print(fps_label + "; Detections: " + str(len(class_ids)))cv2.imshow("Async API demo", frame_curr)# Swap the infer requestinfer_request_curr, infer_request_next = infer_request_next, infer_request_currframe_curr = frame_nextletterbox_img_curr = letterbox_img_next# wait key for endingif cv2.waitKey(1) > -1:print("finished by user")cv2.destroyAllWindows()break

本文来自互联网用户投稿,文章观点仅代表作者本人,不代表本站立场,不承担相关法律责任。如若转载,请注明出处。 如若内容造成侵权/违法违规/事实不符,请点击【内容举报】进行投诉反馈!