day32:特征点匹配

特征点匹配就是在不同的图像中寻找同一个物体的同一个特征点.因为每个特征点都具有标志着唯一身份和特点的描述子,因此特征点匹配其实就是在两个图像中寻找具有相似描述子的两个特征点.根据描述子特点的不同 ,寻 找两个相似描述子的方法也不尽相同,总体上可以总结为两类:

第一类是计算两个描述子之间的欧氏距离,这种匹配方式的特征点有 SIFT 特征点、 SURF 特征点

等.

第二类是计算两个描述子之间的汉明距离 这种匹配方式的特征点有 ORB 特征点、 BRISK特征点等.

特征点匹配是图像处理领域寻找不同图像间信息关联的重要方法.由于相机移动导致成像视场发生改变,因此同一个物体会出现在图像中不同的位置,通过特征点匹配可以快速定位物体在新图像中的位置 为后续对图像的进一 步处理提供数据支持。特征点匹配由于数据量小、匹配精确而被 广泛应用在三维重建、视觉定位、运动估计、图像配准等领域. 1.DescriptorMatcher类:

2.暴力匹配

暴力匹配就是计算 训练描述子集合中每个描述子与查询描述 子之间的距离,之后将所有距离排 序,选择距离最小或者距离满足阈值要求的描述子作为匹配结果.

3.显示特征点匹配结果

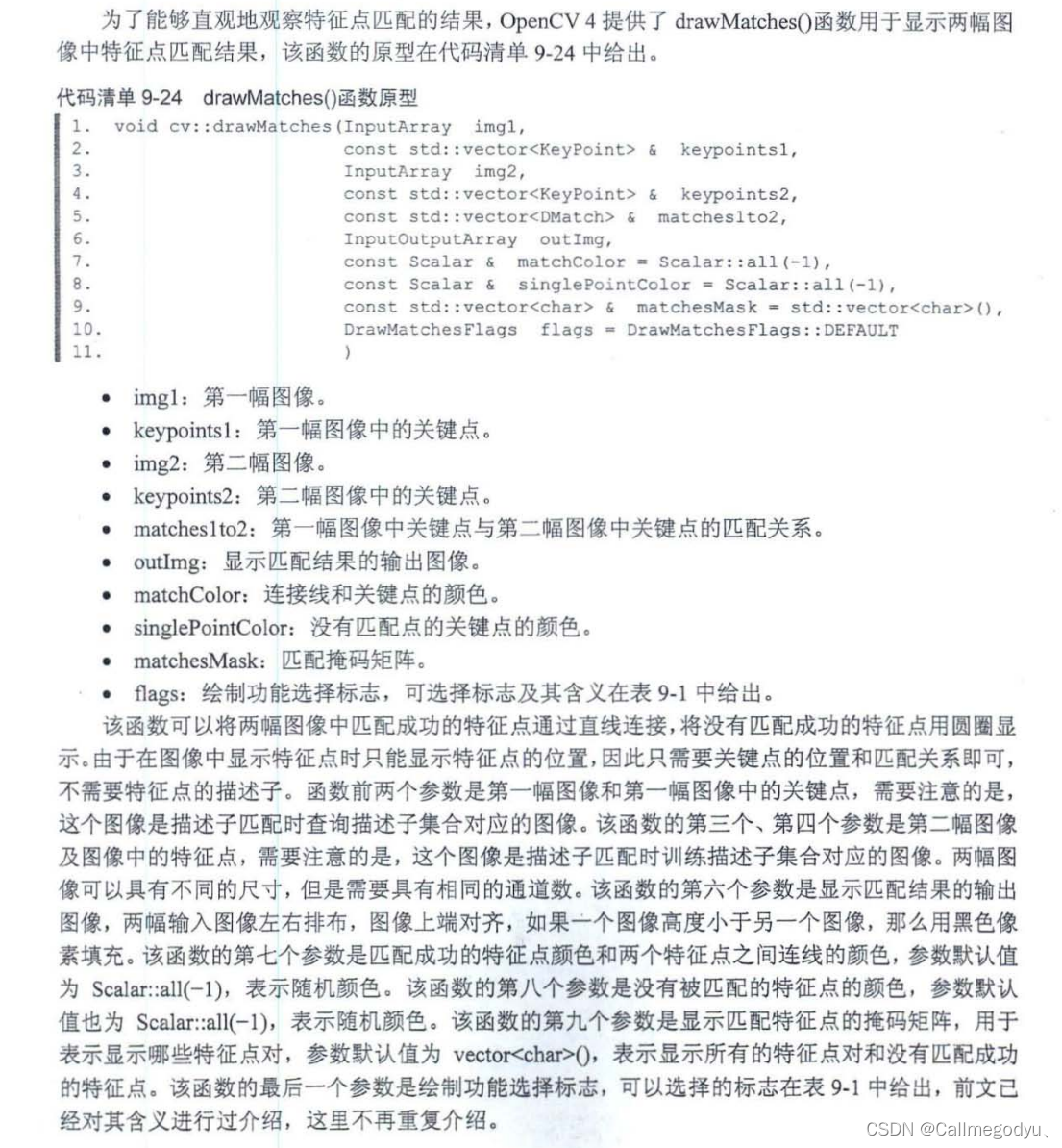

void visionagin:: MyORBmatch()

{Mat imgquery = imread("C:\\Users\\86176\\Downloads\\visionimage\\box.png");Mat imgtrain = imread("C:\\Users\\86176\\Downloads\\visionimage\\box_in_scene.png");if (imgquery.empty() == true||imgtrain.empty()==true){cout << "读取失败!" << endl;}Ptr orb = ORB::create(100);vector querykeypoints, trainkeypoints;//计算两幅图的关键点orb->detect(imgquery, querykeypoints);orb->detect(imgtrain, trainkeypoints);Mat querydes, traindes;//计算两幅图的描述子orb->compute(imgquery, querykeypoints, querydes);orb->compute(imgtrain, trainkeypoints, traindes);//特征点匹配vector matchout;//存放特征点匹配的结果BFMatcher matcher(NORM_HAMMING);//定义暴力匹配类,orb特征点用hanming距离matcher.match(querydes, traindes, matchout);//进行匹配cout << "匹配的特征点:" << matchout.size() << "个" << endl;//通过汉明距离筛选特征点double min_dist = 1000;double max_dist = 0;//筛选出匹配结果中得最大和最小汉明距离for (int i = 0; i < matchout.size(); ++i){double dist = matchout[i].distance;if (dist <= min_dist){min_dist = dist;}if (dist >= max_dist){max_dist = dist;}}cout << "max hanming dist is :" << max_dist << endl;cout << "min hanming dist is :" << min_dist << endl;//创建合适的匹配结果vector goodmatches;for (int j = 0; j < matchout.size(); ++j){if (matchout[j].distance <=max(2*min_dist,20.0))//汉明距离大于最小距离的两倍属于误匹配{goodmatches.push_back(matchout[j]);}}cout << "剩余的合适的特征点数目:" << goodmatches.size() << endl;//绘制匹配的结果Mat outbad, outok;drawMatches(imgquery, querykeypoints, imgtrain, trainkeypoints, matchout,outbad);drawMatches(imgquery, querykeypoints, imgtrain, trainkeypoints, goodmatches, outok);imshow("outbad", outbad);imshow("outok", outok);

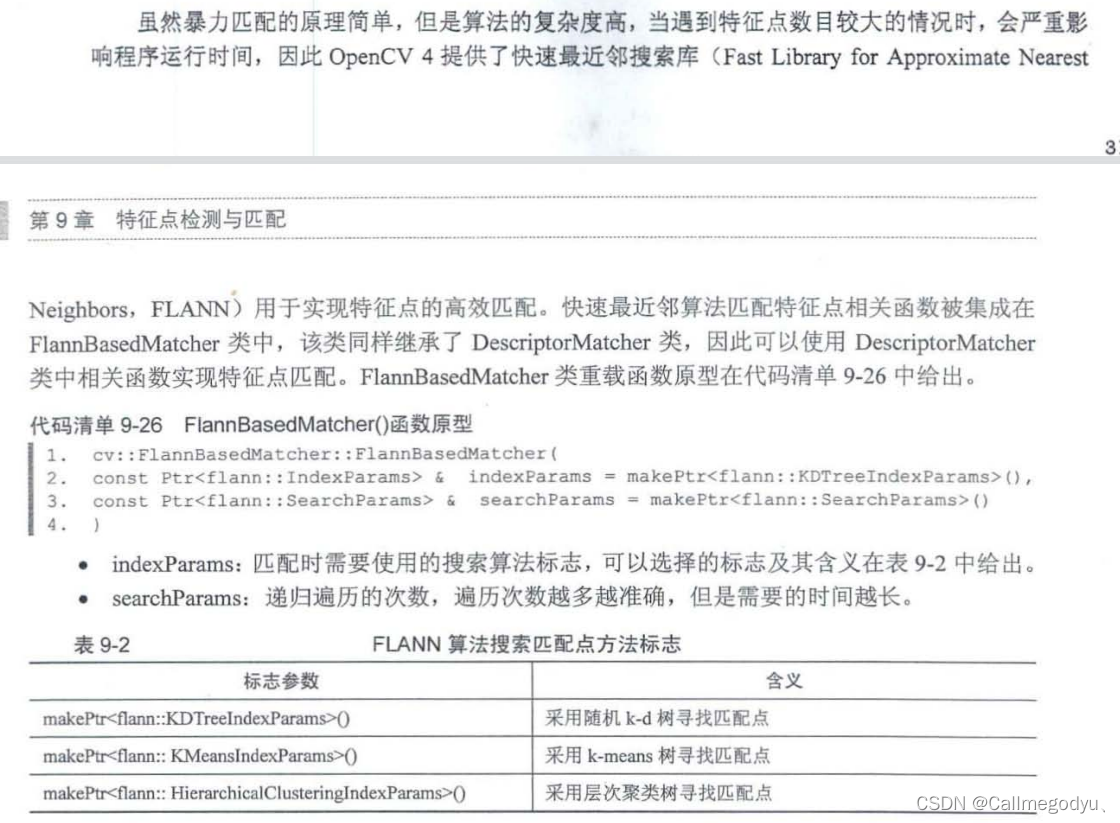

}  4.FLANN匹配

4.FLANN匹配

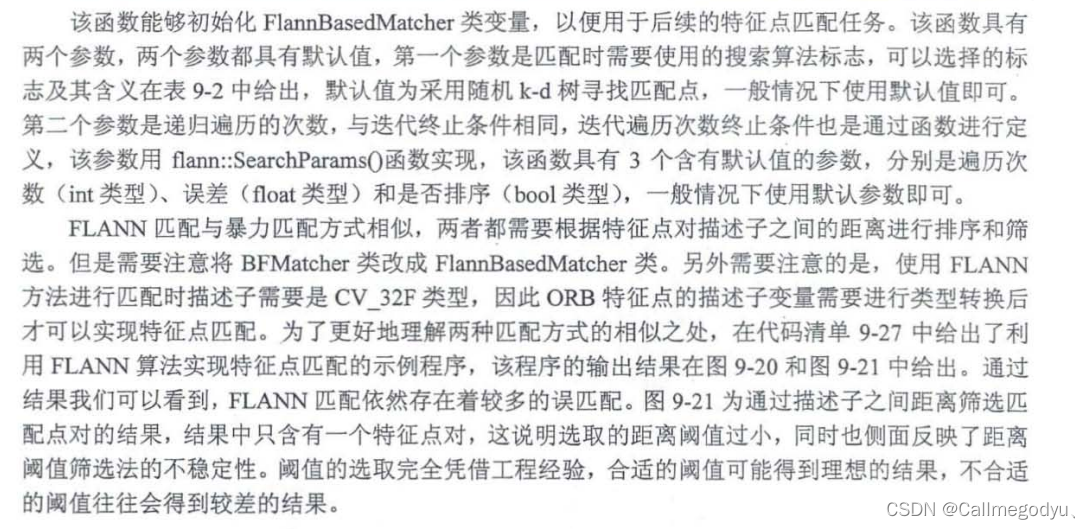

void visionagin:: Myflannmatch()

{Mat imgquery = imread("C:\\Users\\86176\\Downloads\\visionimage\\box.png");Mat imgtrain = imread("C:\\Users\\86176\\Downloads\\visionimage\\box_in_scene.png");if (imgquery.empty() == true || imgtrain.empty() == true){cout << "读取失败!" << endl;}Ptr orb = ORB::create(1000);vector trainkeypoints, querykeypoints;orb->detect(imgquery, querykeypoints);orb->detect(imgtrain, trainkeypoints);Mat querydescriptions, traindescriptions;orb->compute(imgquery, querykeypoints, querydescriptions);orb->compute(imgtrain, trainkeypoints, traindescriptions);//flann中的描述子需32fquerydescriptions.convertTo(querydescriptions, CV_32F);traindescriptions.convertTo(traindescriptions, CV_32F);vectorout;FlannBasedMatcher flannmatch;flannmatch.match(querydescriptions, traindescriptions, out);double min_dist = 100, max_dist = 0;for (int i = 0; i < out.size(); i++)//out.rows{double dist = out[i].distance;if (dist max_dist){max_dist = dist;}}cout << "max_dist is :" << max_dist << endl;cout << "min_dist is :" << min_dist << endl;vector goodout;for (int j = 0; j < out.size(); j++){if (out[j].distance < 0.4 * max_dist){goodout.push_back(out[j]);}}Mat outimg, goodimg;drawMatches(imgquery, querykeypoints, imgtrain, trainkeypoints,out,outimg);drawMatches(imgquery, querykeypoints, imgtrain, trainkeypoints, goodout, goodimg);imshow("outimg", outimg);imshow("goodimg", goodimg);

}

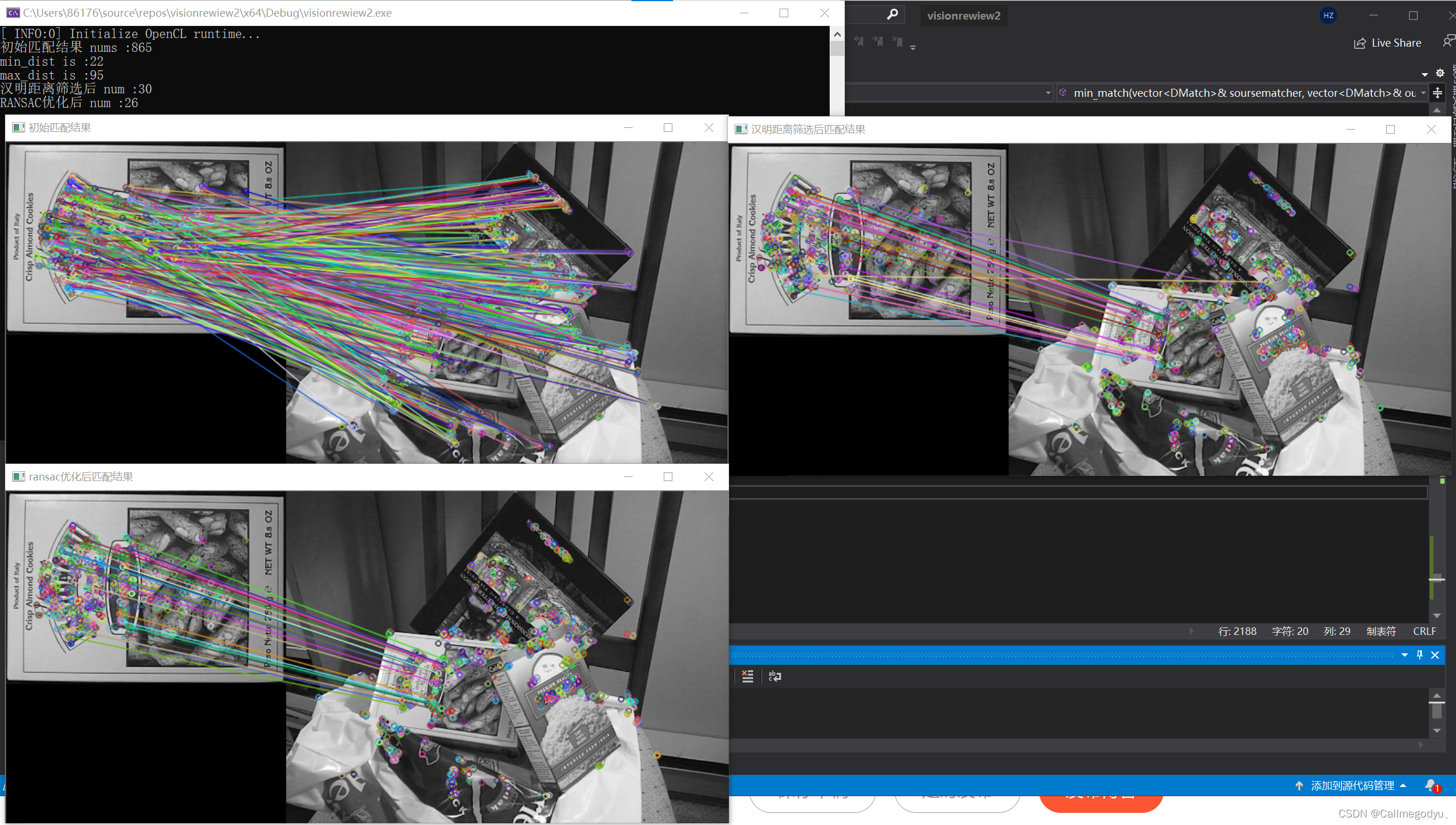

5.RANSAC优化特征点匹配

//以下皆为RANSAC算法优化特征点匹配程序

void orb_det_comp(Mat& image, vector& pts1, Mat& out)

{Ptr orb = ORB::create(1000, 1.2);orb->detect(image, pts1);orb->compute(image, pts1, out);

}

void min_match(vector& soursematcher, vector& outmatcher)

{double min_dist = 1000, max_dist = 0;for (int i = 0; i < soursematcher.size(); ++i){double dist = soursematcher[i].distance;if (dist < min_dist){min_dist = dist;}if (dist > max_dist){max_dist = dist;}}cout << "min_dist is :" << min_dist << endl;cout << "max_dist is :" << max_dist << endl;for (int j = 0; j < soursematcher.size(); ++j){if (soursematcher[j].distance <=max(2 * min_dist, 20.0)){outmatcher.push_back(soursematcher[j]);}}

}

void ransacmatch(vector& querykpt, vector& trainkpt, vector& match1, vector& matchransac)

{vectorqueryp(match1.size()), trainp(match1.size());//定义匹配点对坐标//保存从关键点中提取的点对for (int i = 0; i < match1.size(); ++i){queryp[i] = querykpt[match1[i].queryIdx].pt;//match1[i].queryIdx 是结果中对应的query的indextrainp[i] = trainkpt[match1[i].trainIdx].pt;}//匹配点对进行RANSAC过滤vector mask(queryp.size());findHomography(queryp, trainp, RANSAC, 3, mask);for (int j = 0; j < mask.size(); ++j){if (mask[j]){matchransac.push_back(match1[j]);}}}void visionagin:: MyRANSAC()

{Mat imgquery = imread("C:\\Users\\86176\\Downloads\\visionimage\\box.png");Mat imgtrain = imread("C:\\Users\\86176\\Downloads\\visionimage\\box_in_scene.png");if (imgquery.empty() == true || imgtrain.empty() == true){cout << "读取失败!" << endl;}vector trainkeypoints, querykeypoints;Mat querydescriptions, traindescriptions;//计算关键点和特征点orb_det_comp(imgquery, querykeypoints, querydescriptions);orb_det_comp(imgtrain, trainkeypoints, traindescriptions);//建立暴力匹配类BFMatcher bfmatch(NORM_HAMMING);// 存放结果的Dmatch vectorvector matchers, min_matchers, ransac_matchers;//分别为初始结果,最小汉明距离筛选后的结果以及RANSAC优化后的结果bfmatch.match(querydescriptions, traindescriptions, matchers);cout << "初始匹配结果 nums :" << matchers.size()<

本文来自互联网用户投稿,文章观点仅代表作者本人,不代表本站立场,不承担相关法律责任。如若转载,请注明出处。 如若内容造成侵权/违法违规/事实不符,请点击【内容举报】进行投诉反馈!