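Scraping Baidu Images with Python

Updated 2019.10.3

Suppose you want to download a batch of images from a Baidu image search.

The URL of the search results page is: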

https://image.baidu.com/search/index?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fm=result&fr=&sf=1&fmq=1570110921604_R&pv=&ic=&nc=1&z=&hd=&latest=&copyright=&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&ie=utf-8&sid=&word=%E5%8A%A8%E6%BC%AB%E4%BA%BA%E7%89%A9

On this page, new images are loaded as you scroll down.

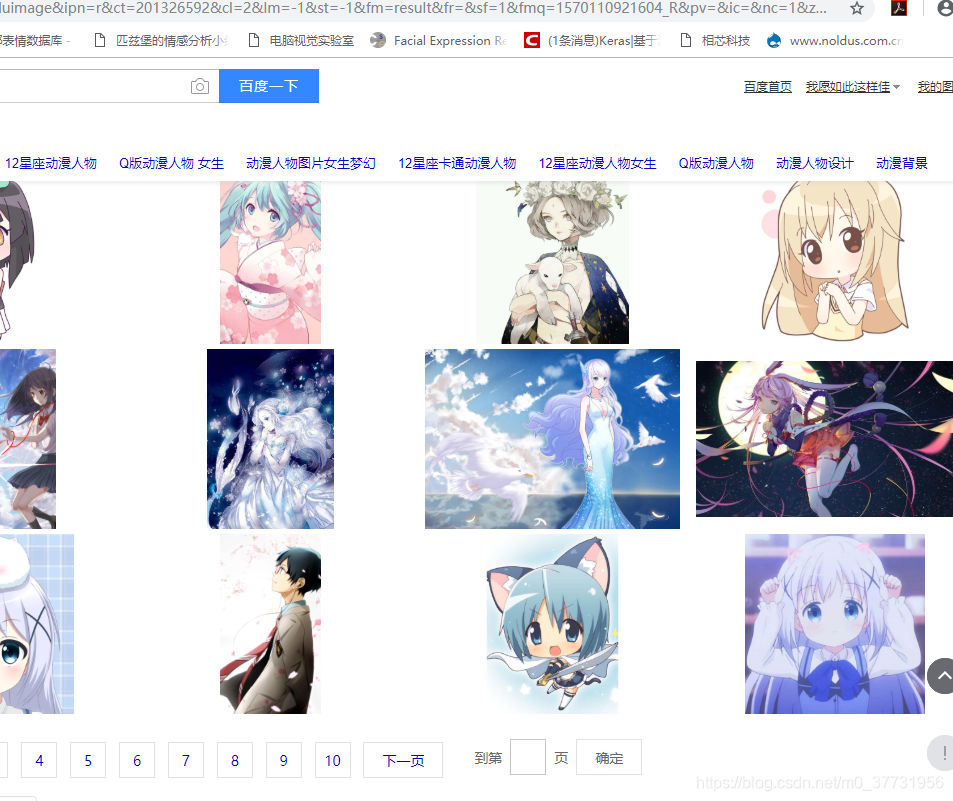

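What we want instead is pagination.

Change `index` in the URL to `flip`.

Page numbers then appear, and you can click them to switch pages.

Click page two, then page three, and you will see that the URL changes: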

https://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=%E5%8A%A8%E6%BC%AB%E4%BA%BA%E7%89%A9&pn=40&gsm=&ct=&ic=0&lm=-1&width=0&height=0

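pn takes the values 0, 20, 40, 60 and so on, increasing by 20 each time, which shows that the page is selected through pn.

word = the keyword of the images you are searching for (percent-encoded in the URL)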
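These two parameters are enough to build a page URL in code. The following is a minimal sketch, assuming the other query parameters can be copied unchanged from the flip URL shown above. The helper name `build_flip_url` is made up for illustration; `urllib.parse.quote` does the percent-encoding of the keyword.

```python
from urllib.parse import quote

# Base of the paginated ("flip") search URL observed above
FLIP_BASE = 'https://image.baidu.com/search/flip'


def build_flip_url(word, pn):
    """Return the flip-page URL for a keyword and a pn offset (hypothetical helper)."""
    # quote() percent-encodes the UTF-8 bytes of the keyword
    return (FLIP_BASE
            + '?tn=baiduimage&ie=utf-8&word=' + quote(word)
            + '&pn=' + str(pn)
            + '&gsm=&ct=&ic=0&lm=-1&width=0&height=0')


if __name__ == '__main__':
    # pn = 0, 20, 40 correspond to pages 1, 2, 3
    for pn in (0, 20, 40):
        print(build_flip_url('动漫人物', pn))
```

With the keyword 动漫人物 and pn=40, this reproduces the page-three URL quoted above.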

Full code:

    import re

    import requests

    image = 0  # running count of downloaded images, also used as the file name


    def dowmloadPic(html):
        global image
        # Every image address appears in the page source as "objURL":"...",
        pic_url = re.findall('"objURL":"(.*?)",', html, re.S)
        for each in pic_url:
            print('Downloading image ' + str(image) + ', URL: ' + str(each))
            try:
                # Send the request; the server returns the image as a binary stream
                pic = requests.get(each, timeout=10)
            except requests.exceptions.ConnectionError:
                print('[Error] This image could not be downloaded')
                continue
            dir = 'E:/1code/Fer/dataset/smile/' + str(image) + '.jpg'
            fp = open(dir, 'wb')
            fp.write(pic.content)  # write the binary data to a local file, i.e. save the image
            fp.close()
            image += 1


    if __name__ == '__main__':
        n = 10  # number of pages to fetch
        for i in range(n):
            i_page = i * 20
            # '人的微笑' ("a person's smile") is the search keyword
            url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + '人的微笑' + '&pn=' + str(i_page) + '&gsm=&ct=&ic=0&lm=-1&width=0&height=0'
            print(url)
            result = requests.get(url)
            dowmloadPic(result.text)
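The script writes into a hard-coded directory and assumes that directory already exists. Below is a sketch of the save step alone, under the assumption that you would rather have the directory created on demand. `save_image` and its parameters are illustrative names, not part of the original script.

```python
from pathlib import Path


def save_image(content, directory, index):
    """Write image bytes to <directory>/<index>.jpg and return the path (hypothetical helper)."""
    target_dir = Path(directory)
    # Create the directory (and any missing parents) if it does not exist yet
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / (str(index) + '.jpg')
    # write_bytes opens the file, writes the data, and closes it in one call
    target.write_bytes(content)
    return target
```

Inside the download loop this could be called as `save_image(pic.content, 'E:/1code/Fer/dataset/smile', image)` in place of the `open` / `write` / `close` lines.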

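Updated 2019.10.4

The earlier version downloaded duplicate images. Although pn advances by 20 per page, the page source shows that each request loads 60 images and only 20 of them are displayed.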
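One way to guard against such repeats, independent of how pn is stepped, is to deduplicate the extracted addresses before downloading. This is a sketch only: it reuses the `objURL` pattern from the script, `extract_unique_urls` is a hypothetical helper, and the sample fragments are made up to stand in for two overlapping page sources.

```python
import re

# Same pattern the script uses to pull image addresses out of the page source
OBJ_URL_PATTERN = re.compile(r'"objURL":"(.*?)",', re.S)


def extract_unique_urls(pages):
    """Collect objURL values from several page sources, dropping repeats (hypothetical helper)."""
    seen = {}
    for html in pages:
        for url in OBJ_URL_PATTERN.findall(html):
            # dict keys keep insertion order, so the first occurrence wins
            seen.setdefault(url, None)
    return list(seen)


if __name__ == '__main__':
    # Made-up fragments standing in for two overlapping page sources
    page_a = '{"objURL":"http://example.com/1.jpg","x":1},{"objURL":"http://example.com/2.jpg","x":2}'
    page_b = '{"objURL":"http://example.com/2.jpg","x":2},{"objURL":"http://example.com/3.jpg","x":3}'
    print(extract_unique_urls([page_a, page_b]))
```

Unlike `list(set(...))`, this keeps the addresses in the order they were first seen.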

This version steps pn by 60 and adds a function that gets the number of the last page.

    import re
    import time

    import requests

    image = 0     # running count of downloaded images, also used as the file name
    now_page = 0  # last page number reported by the most recent request


    def dowmloadPic(html):
        global image
        pic_url = re.findall('"objURL":"(.*?)",', html, re.S)
        # print(str(len(pic_url)))
        for each in pic_url:
            # print('Downloading image ' + str(image) + ', URL: ' + str(each))
            try:
                # Send the request; the server returns the image as a binary stream
                pic = requests.get(each, timeout=10)
            except requests.exceptions.ConnectionError:
                print('[Error] This image could not be downloaded')
                continue
            dir = '../dataset/smile2/' + str(image) + '.jpg'
            with open(dir, 'wb') as f:
                f.write(pic.content)  # write the binary data to a local file, i.e. save the image
            image += 1


    def getLastPage(url):
        global now_page
        result = requests.get(url).text
        # print(result)
        # The capture group should be wrapped in the HTML tags of the page-number
        # element; those tags are missing here, so as written the pattern matches
        # only empty strings.
        lastpage_num = re.findall('(.*?)', result)
        return_str = lastpage_num[-1] if lastpage_num else str(now_page)
        return return_str


    if __name__ == '__main__':
        i_page = 0
        url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + 'your search term' + '&pn=' + str(i_page) + '&gsm=&ct=&ic=0&lm=-1&width=0&height=0'
        now_page = int(getLastPage(url))
        while now_page > i_page // 20 + 1:
            print('Current page: ' + str(i_page // 20 + 1))
            i_page += 60  # each request loads 60 images, so skip three displayed pages
            result = requests.get(url)
            dowmloadPic(result.text)
            url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + 'your search term' + '&pn=' + str(i_page) + '&gsm=&ct=&ic=0&lm=-1&width=0&height=0'
            now_page = int(getLastPage(url))
            time.sleep(20)  # pause between requests
        print('Downloaded ' + str(image) + ' images in total')
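The relationship between pn, displayed pages, and the 60 images per request can be written down as plain arithmetic. The sketch below lists the pn offsets to request for a known last page number. It assumes 20 displayed images per page and 60 loaded per request, as described above; `pn_offsets` is a hypothetical helper and does not reproduce the exact stopping rule of the script's loop.

```python
def pn_offsets(last_page, per_page=20, per_request=60):
    """List pn values to request so every displayed page up to last_page is covered.

    Hypothetical helper: per_page and per_request are the values observed
    in this post, not documented constants.
    """
    # An offset pn starts at displayed page pn // per_page + 1, so it is
    # needed exactly when pn < last_page * per_page.
    return list(range(0, last_page * per_page, per_request))


if __name__ == '__main__':
    print(pn_offsets(7))
```

For a last page of 7 this yields the offsets 0, 60, and 120, which start at displayed pages 1, 4, and 7.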

Someone else's blog post:

Source: python爬取百度图片 (Scraping Baidu Images with Python), https://blog.csdn.net/qq_37482202/article/details/82312599

    import re

    import requests


    def get_onepage_urls(onepage_url):
        # Get all image URLs on one flip page, plus the URL of the next flip page
        if not onepage_url:
            print("Reached the last page, stopping")
            return [], ''
        try:
            html = requests.get(onepage_url).text
        except Exception as e:
            print(e)
            pic_url = []
            fanye_url = ''
            return pic_url, fanye_url
        # Use regular expressions to get all image URLs and the next-page URL
        pic_urls = re.findall('"objURL":"(.*?)",', html, re.S)
        # '下一页' is the "next page" link text. The pattern has no capture group
        # for the link address here, so as written it returns the link text
        # itself rather than a URL path.
        fanye_urls = re.findall(re.compile(r'下一页'), html, flags=0)
        fanye_url = 'http://image.baidu.com' + fanye_urls[0] if fanye_urls else ''
        return pic_urls, fanye_url


    def down_pic(pic_urls):
        # Given a list of image links, download all the images
        for i, pic_url in enumerate(pic_urls):
            try:
                pic = requests.get(pic_url, timeout=15)
                dir = 'E:/1code/Fer/dataset/smile/' + str(i) + '.jpg'
                with open(dir, 'wb') as f:
                    f.write(pic.content)
                print("Downloaded image %s: %s" % (str(i + 1), str(pic_url)))
            except Exception as e:
                print("Failed to download image %s: %s" % (str(i + 1), str(pic_url)))
                print(e)
                continue


    if __name__ == '__main__':
        url_init = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=%E4%BA%BA%E7%9A%84%E5%BE%AE%E7%AC%91&pn=0&gsm=&ct=&ic=0&lm=-1&width=0&height=0'
        # A list that holds the image URLs
        all_pic_urls = []
        onepage_urls, fanye_url = get_onepage_urls(url_init)
        all_pic_urls.extend(onepage_urls)
        fanye_count = 0  # running count of pages turned
        # Keep adding image URLs page by page
        while 1:
            onepage_urls, fanye_url = get_onepage_urls(fanye_url)
            print("XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX")
            print(fanye_url)
            fanye_count += 1
            print('Page %s' % fanye_count)
            if fanye_url == '' and onepage_urls == []:
                break
            all_pic_urls.extend(onepage_urls)
        # Download the images, with duplicates removed
        down_pic(list(set(all_pic_urls)))
