【Kubernetes】Building a k8s Cluster on CentOS 7 (Part 1) - Environment Setup and Installation

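Contents

- I. Environment preparation

- 1. Check the CentOS version

- 2. Disable SELinux

- 2. Disable swap

- 3. Configure ip_forward

- 4. Update the yum repositories

- 5. Install Docker

- 6. Install the k8s components

- 7. Kernel parameter changes

- Common problems when changing kernel parameters

- 8. Other notes

- II. Master node configuration

- 1. Master node initialization

- 2. Add the flannel network

- 3. Check the cluster

- III. Worker node initialization

- 1. Set the machine name

- 2. Join the cluster

- 3. Set up work2

- Related articles

- Attachment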

I. Environment preparation

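This tutorial uses Docker 18.09.9 and kubelet 1.16.4 and requires CentOS 7.6 or later. The cluster built here uses three servers (one master and two workers), each with at least 2 GB of memory; kubeadm's preflight checks also require at least 2 CPUs on the master.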

After installing the virtual machines, you can follow this article for the basic system setup:

https://moonce.blog.csdn.net/article/details/124817683

1. Check the CentOS version

cat /etc/centos-release

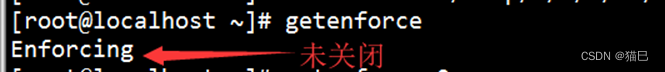
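Because the tutorial needs CentOS 7.6 or later, the check can also be scripted. This is a minimal sketch, not part of the original steps: the function name is hypothetical, and it assumes the usual release string format such as "CentOS Linux release 7.9.2009 (Core)".

```shell
# Hypothetical helper: succeed if the CentOS release is 7.6 or later.
# Reads /etc/centos-release unless a release string is passed in.
centos_at_least_7_6() {
  local release="${1:-$(cat /etc/centos-release)}"
  local ver major minor
  # First dotted version number in the string, e.g. "7.9.2009"
  ver=$(printf '%s\n' "$release" | grep -oE '[0-9]+\.[0-9]+(\.[0-9]+)?' | head -n 1)
  major=${ver%%.*}
  minor=${ver#*.}
  minor=${minor%%.*}
  [ -n "$major" ] || return 2   # no version number found
  [ "$major" -gt 7 ] || { [ "$major" -eq 7 ] && [ "$minor" -ge 6 ]; }
}

# centos_at_least_7_6 && echo "OK" || echo "CentOS 7.6 or later is required"
```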

2. Disable SELinux

Check whether SELinux is disabled:

getenforce

Disable it temporarily (until the next reboot):

setenforce 0

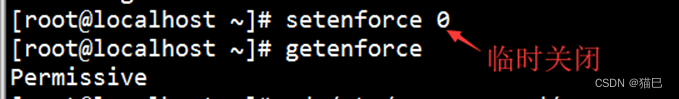

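Disable it permanently by editing the SELinux configuration file:

vi /etc/sysconfig/selinux

Change the SELINUX line to the following (this takes effect after a reboot):

SELINUX=disabled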

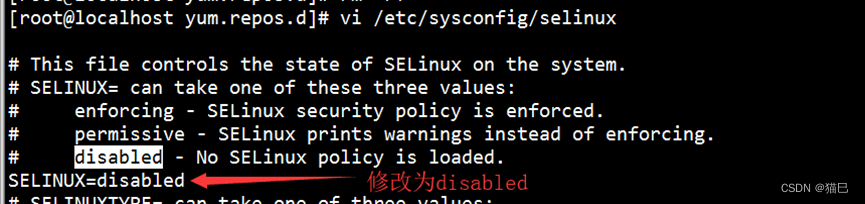

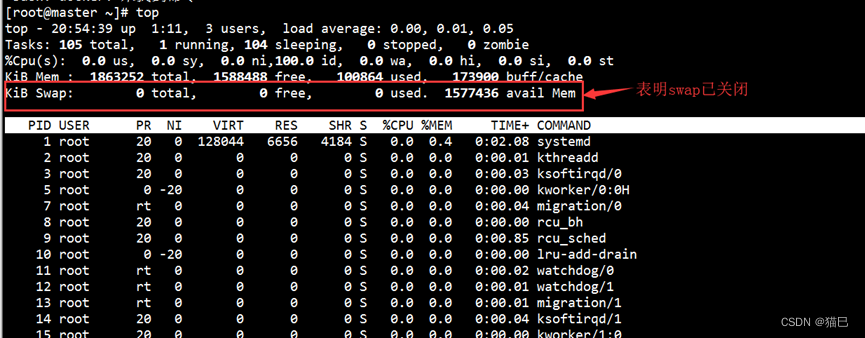

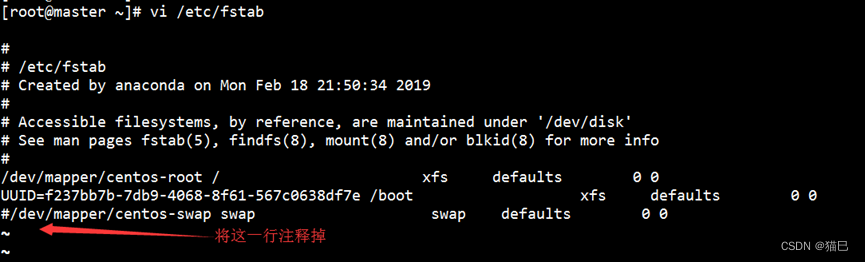
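The same permanent change can be made without an editor. A hedged sketch with a hypothetical function name: on CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and GNU `sed -i` run on the symlink would replace the link with a plain file, so this edits the real file instead (the path is a parameter so it can be tried on a scratch copy).

```shell
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# Edits /etc/selinux/config directly, because "sed -i" on the
# /etc/sysconfig/selinux symlink would break the link.
disable_selinux_permanently() {
  local cfg="${1:-/etc/selinux/config}"
  # Matches "SELINUX=..." only; "SELINUXTYPE=..." is left untouched
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# disable_selinux_permanently && grep '^SELINUX=' /etc/selinux/config
```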

2. Disable swap

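Kubernetes requires swap to be disabled; otherwise the installation reports an error.

Check whether swap is off (in the top header the Swap total should be 0; free -h shows the same figure):

top

Disable it temporarily:

swapoff -a

Disable it permanently:

sed -i.bak '/swap/s/^/#/' /etc/fstab

This comments out the swap line in /etc/fstab, the same as editing the file by hand; -i.bak keeps the original as /etc/fstab.bak.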
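For a scriptable check instead of reading the top header, /proc/swaps lists one active swap area per line after a header line, and any fstab swap entry that is not commented out would re-enable swap at boot. A minimal sketch; both function names are hypothetical and the file paths are parameters for testing.

```shell
# Hypothetical helper: succeed if no swap area is active.
# /proc/swaps contains only its header line when swap is off.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}

# Hypothetical helper: print fstab swap entries that are NOT commented out.
# Exits non-zero (like grep) when there are none.
active_fstab_swap() {
  local fstab="${1:-/etc/fstab}"
  grep -E '^[^#].*[[:space:]]swap[[:space:]]' "$fstab"
}

# swap_is_off && ! active_fstab_swap && echo "swap is disabled now and at boot"
```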

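3. Configure ip_forward

The ip_forward setting is currently 0, which disables packet forwarding; set it to 1 to allow it. A value written to /proc lasts only until the next reboot.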

echo "1" > /proc/sys/net/ipv4/ip_forward

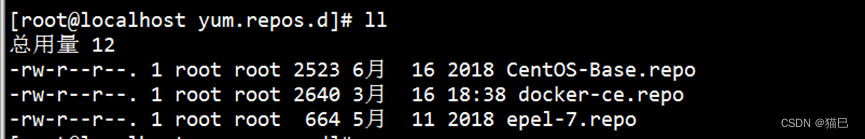
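To keep forwarding enabled after a reboot, the setting can be stored in a sysctl drop-in file. This is an illustration rather than one of the original steps: the helper name and the file /etc/sysctl.d/ip_forward.conf are assumptions. Any existing assignment of the same key in the file is replaced rather than duplicated.

```shell
# Hypothetical helper: store "key = value" in a sysctl drop-in file,
# replacing any earlier assignment of the same key.
persist_sysctl() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  if [ -f "$file" ]; then
    # Copy every line except an existing assignment of this key
    awk -v k="$key" '{
      name = $0
      sub(/[ \t]*=.*/, "", name)   # drop "= value" and the spaces before it
      sub(/^[ \t]+/, "", name)     # drop leading whitespace
      if (name != k) print
    }' "$file" > "$tmp"
  fi
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  # Write back through the original path (an existing file keeps its permissions)
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# On the node, as root (file name is an assumption):
# persist_sysctl /etc/sysctl.d/ip_forward.conf net.ipv4.ip_forward 1
# sysctl --system   # reload all sysctl configuration files
```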

4. Update the yum repositories

First remove the system's default repo files (back them up first if you need them):

rm -rf /etc/yum.repos.d/*

Download the CentOS 7 base, EPEL and Docker repo files into /etc/yum.repos.d/:

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo

wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

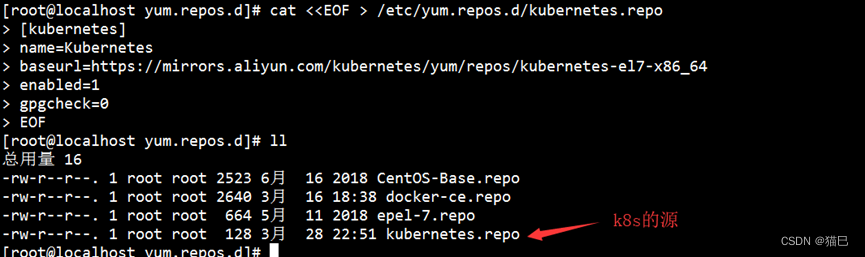

Configure the Kubernetes repo:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
EOF

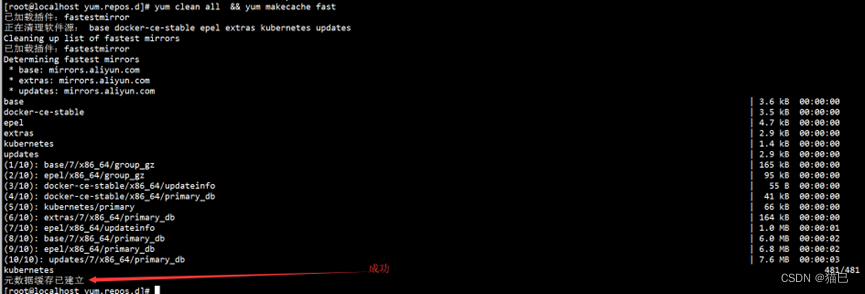

Refresh the yum cache:

yum clean all && yum makecache fast

5. Install Docker

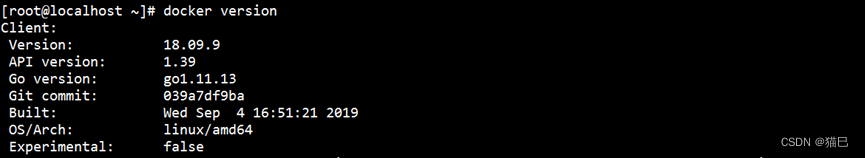

This tutorial uses Docker 18.09.9.

Install:

yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

Verify:

docker version

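Configure:

For k8s, Docker should use the systemd cgroup driver, set through the exec-opt native.cgroupdriver=systemd (kubeadm prints a preflight warning if Docker uses cgroupfs).

Create the directory:

sudo mkdir -p /etc/docker

Write the configuration: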

sudo tee /etc/docker/daemon.json <<-'EOF'

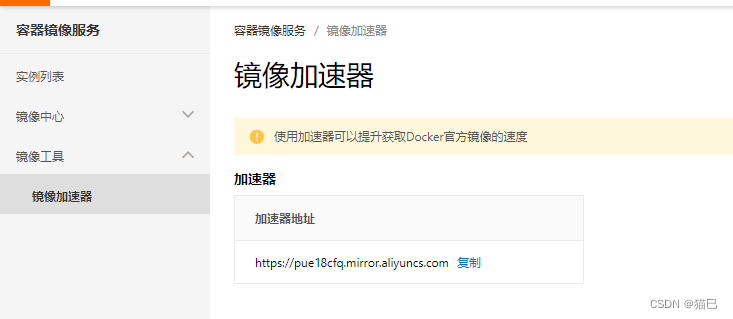

{"registry-mirrors": ["https://pue18cfq.mirror.aliyuncs.com"],"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

Reload systemd and restart Docker:

sudo systemctl daemon-reload

sudo systemctl restart docker

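https://pue18cfq.mirror.aliyuncs.com is the author's Alibaba Cloud registry mirror (pull accelerator). To get your own, register at https://cr.console.aliyun.com/cn-shanghai/instances/mirrors; you can also simply use the author's.

Enable Docker at boot and start it: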

systemctl enable docker && systemctl start docker
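To confirm that Docker actually picked up daemon.json after the restart, `docker info --format` can print just the cgroup driver through a Go template. A small hedged sketch; the function name is hypothetical, and the optional argument exists only so the comparison can be tested without Docker.

```shell
# Hypothetical helper: succeed if Docker reports the systemd cgroup driver.
docker_uses_systemd_cgroups() {
  local driver="$1"
  if [ -z "$driver" ]; then
    # Ask the running daemon for its CgroupDriver field
    driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)
  fi
  [ "$driver" = "systemd" ]
}

# docker_uses_systemd_cgroups && echo "OK" || echo "not systemd; check /etc/docker/daemon.json"
```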

6.安装k8s组件

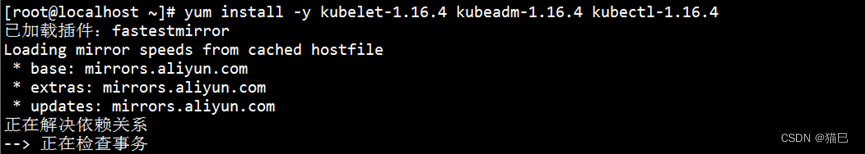

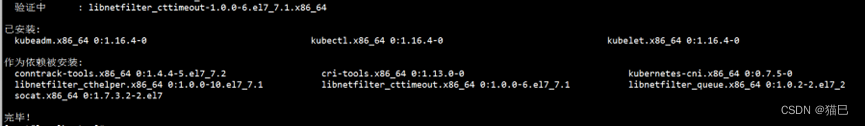

安装

yum install -y kubelet-1.16.4 kubeadm-1.16.4 kubectl-1.16.4

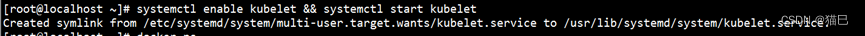

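Enable the kubelet at boot and start it. Until kubeadm init or kubeadm join has run, the kubelet restarts every few seconds; that is expected: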

systemctl enable kubelet && systemctl start kubelet

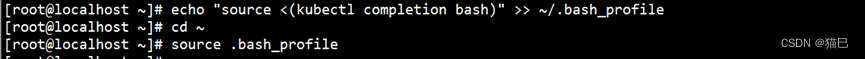

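Enable kubectl bash completion and reload ~/.bash_profile from your home directory so it takes effect (the completion script relies on the bash-completion package; install it with yum install -y bash-completion if it is missing):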

echo "source <(kubectl completion bash)" >> ~/.bash_profile

cd ~

source .bash_profile

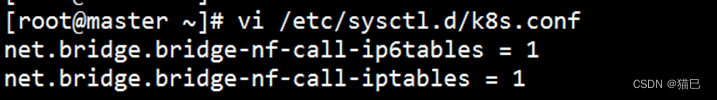

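7. Kernel parameter changes

This tutorial uses flannel for the pod network, which requires the kernel parameter net.bridge.bridge-nf-call-iptables = 1 so that traffic crossing the Linux bridge is seen by iptables.

Create a parameter configuration file:

vi /etc/sysctl.d/k8s.conf

with the following content: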

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

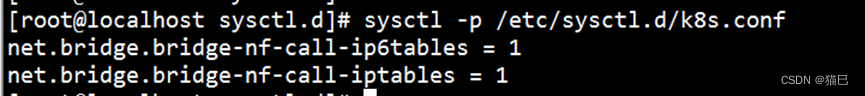

Apply it:

sysctl -p /etc/sysctl.d/k8s.conf
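If you prefer not to use vi, the same file can be written with a heredoc. A minimal sketch: the function name is hypothetical, and the target path is a parameter so it can be tried on a scratch file first. Note that it overwrites the file.

```shell
# Hypothetical helper: write the two bridge settings from step 7
# into a sysctl drop-in file (default: /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# On the node, as root (the bridge keys only exist once br_netfilter is loaded):
# modprobe br_netfilter
# write_k8s_sysctl
# sysctl -p /etc/sysctl.d/k8s.conf
```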

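Common problems when changing kernel parameters

On some systems, running sysctl -p /etc/sysctl.d/k8s.conf reports an error. This is usually because setting these parameters requires the br_netfilter kernel module to be loaded.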

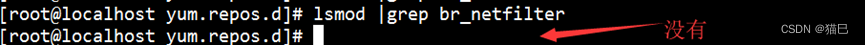

Check whether the br_netfilter module is loaded:

lsmod |grep br_netfilter

Load the br_netfilter module:

modprobe br_netfilter

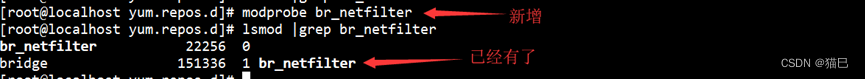

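A module loaded this way is not loaded again after a reboot. To make it permanent, configure a script that loads the module at boot.

Edit the boot script:

vi /etc/rc.sysinit

and add the following module-loading content: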

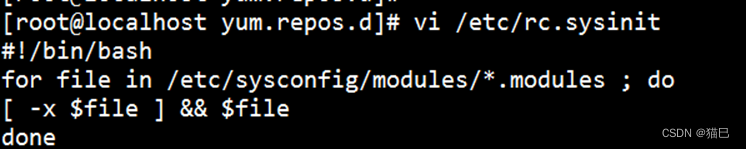

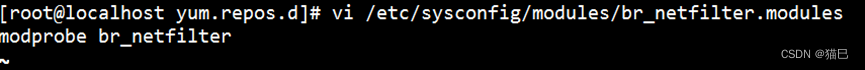

Then create the module script:

vi /etc/sysconfig/modules/br_netfilter.modules

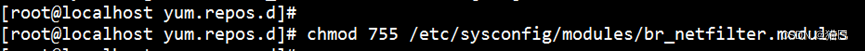

Make the script executable:

chmod 755 /etc/sysconfig/modules/br_netfilter.modules
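An alternative to the script above, not the author's method: on systemd-based CentOS 7, systemd-modules-load.service loads at boot every module listed (one name per line) in /etc/modules-load.d/*.conf. A hedged sketch; the helper name is hypothetical and the directory is a parameter so it can be tested elsewhere.

```shell
# Hypothetical helper: register a kernel module for loading at boot
# via systemd-modules-load (one module name per line in a .conf file).
persist_module() {
  local module="$1" dir="${2:-/etc/modules-load.d}"
  mkdir -p "$dir"
  printf '%s\n' "$module" > "$dir/$module.conf"
}

# On the node, as root:
# persist_module br_netfilter && modprobe br_netfilter && lsmod | grep br_netfilter
```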

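8. Other notes

If you are using virtual machines, once this preparation is finished you can clone the VM twice to serve as the worker servers, which saves repeating the configuration. kubeadm expects every node to have a unique hostname, MAC address and product_uuid, so check these on the clones.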

二、Master节点配置

1.Master节点初始化

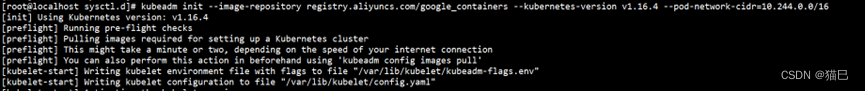

第一次需要拉取镜像比较慢,耐心等待

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.16.4 --pod-network-cidr=10.244.0.0/16

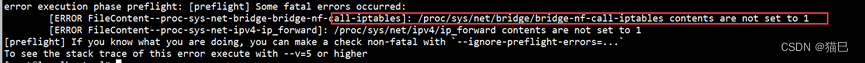
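The same init flags can also be kept in a kubeadm configuration file, which is easier to reuse. A sketch assuming kubeadm 1.16, whose config API version is kubeadm.k8s.io/v1beta2; the function name and the file name kubeadm-config.yaml are illustrative. Running kubeadm config images pull first downloads the images separately, so init itself finishes faster.

```shell
# Hypothetical helper: write a ClusterConfiguration equivalent to
# --image-repository, --kubernetes-version and --pod-network-cidr.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# On the master:
# write_kubeadm_config
# kubeadm config images pull --config kubeadm-config.yaml   # optional pre-pull
# kubeadm init --config kubeadm-config.yaml
```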

If the error below appears, the "7. Kernel parameter changes" step of the environment preparation was not done.

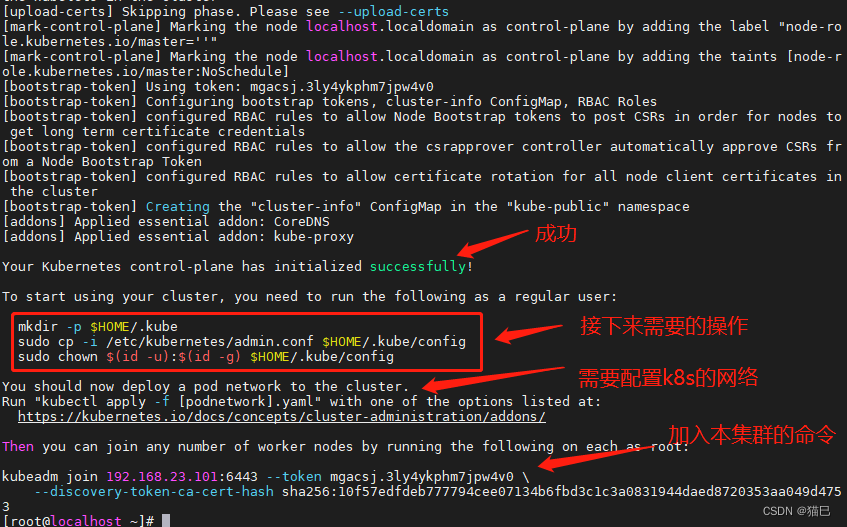

Normally, the output looks like this:

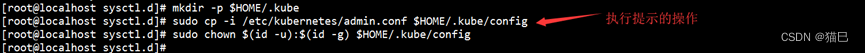

Next, run the commands it prints:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
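A quick way to confirm the copy worked: the kubeconfig should exist, be readable, and belong to the current user. This is a hypothetical helper; after it passes, kubectl cluster-info should reach the API server. When working as root, exporting KUBECONFIG=/etc/kubernetes/admin.conf is an alternative to copying the file.

```shell
# Hypothetical helper: succeed if the kubeconfig is readable and owned
# by the current user (uses GNU stat).
kubeconfig_ready() {
  local cfg="${1:-$HOME/.kube/config}"
  [ -r "$cfg" ] && [ "$(stat -c %u "$cfg")" = "$(id -u)" ]
}

# kubeconfig_ready && kubectl cluster-info
```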

2.添加flannel的网络

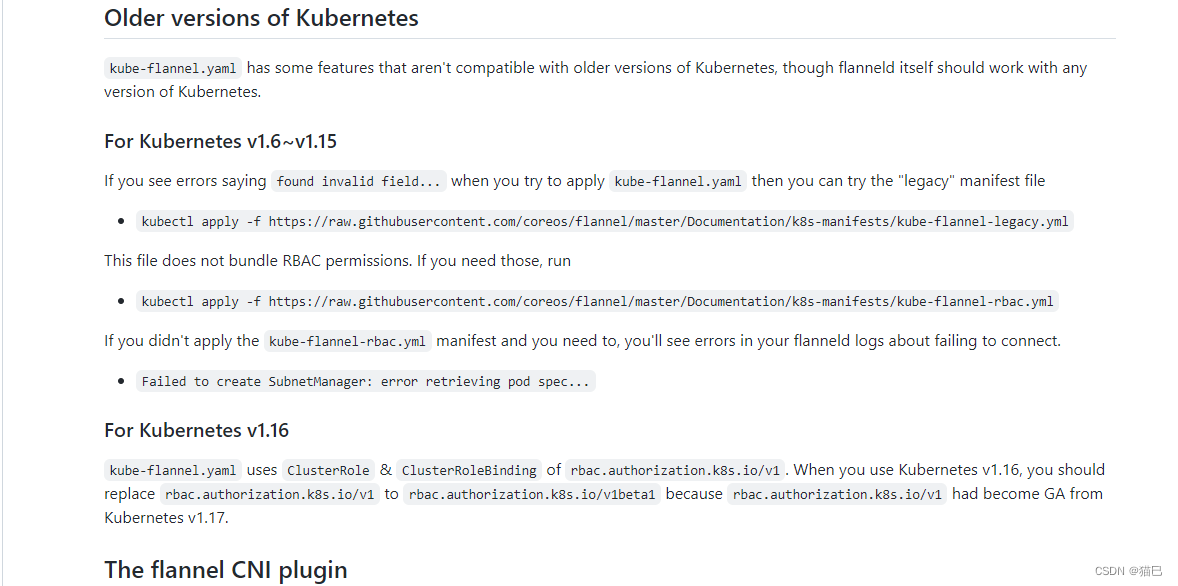

按照master的提示,我们接下来应该配置一个pod network。

按照提示,打开网址,并找到Flannel

https://kubernetes.io/docs/concepts/cluster-administration/addons/

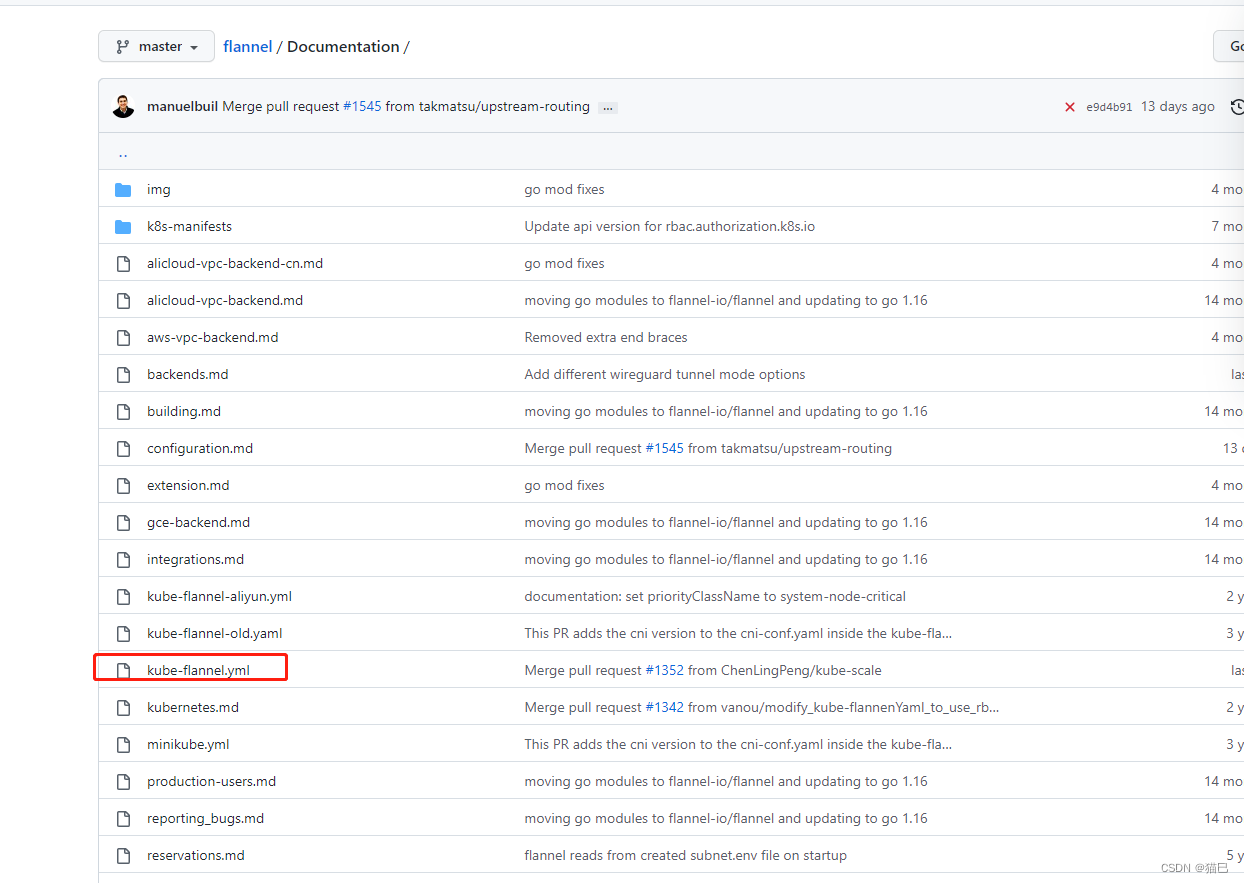

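Because we are installing an older version (1.16), the official instructions say to change v1 to v1beta1 after downloading the manifest; in the attachment this applies to the RBAC apiVersion (rbac.authorization.k8s.io).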

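Find the corresponding file (copy its contents directly, or download the source and locate it). The author has put the file contents in the attachment at the end of this article, so you can copy them and create the file yourself.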

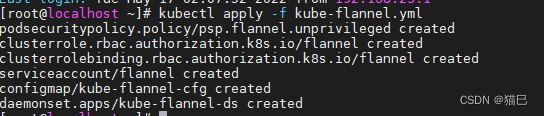
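The v1 to v1beta1 change can be made with sed, restricted to the RBAC apiVersion lines (ClusterRole and ClusterRoleBinding) so that apps/v1 and plain v1 objects stay untouched. A hedged sketch with a hypothetical function name; note that rbac.authorization.k8s.io/v1 has been GA since Kubernetes 1.8, so 1.16 should accept either version.

```shell
# Hypothetical helper: rewrite "rbac.authorization.k8s.io/v1" to v1beta1
# in a manifest; other apiVersion lines are left alone. Safe to rerun.
rbac_to_v1beta1() {
  local manifest="${1:-kube-flannel.yml}"
  sed -i 's#^apiVersion: rbac.authorization.k8s.io/v1$#apiVersion: rbac.authorization.k8s.io/v1beta1#' "$manifest"
}

# rbac_to_v1beta1 kube-flannel.yml && grep -n 'apiVersion' kube-flannel.yml
```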

Upload the file to the master server and run:

kubectl apply -f kube-flannel.yml

And that's it.

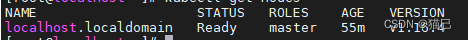

3. Check the cluster

kubectl get nodes
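To spot nodes that are not ready yet, the STATUS column of kubectl get nodes can be filtered with awk. A minimal sketch with a hypothetical function name; it reads the command's output on stdin and assumes the default column layout (NAME first, STATUS second). kubectl wait, available in 1.16, can instead block until every node reports Ready.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not
# exactly "Ready" (e.g. "NotReady" or "Ready,SchedulingDisabled").
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# kubectl get nodes | not_ready_nodes
# kubectl wait --for=condition=Ready nodes --all --timeout=120s
```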

III. Worker node initialization

Configuring a worker node is much simpler than the master: you mainly need to plan the node names.

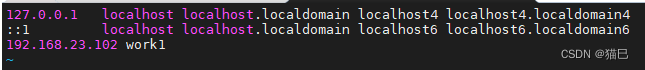

1. Set the machine name

Set the hostname to work1:

hostnamectl set-hostname work1

Map it to its IP address:

vi /etc/hosts

Add the following line (192.168.23.102 is work1's IP address):

192.168.23.102 work1

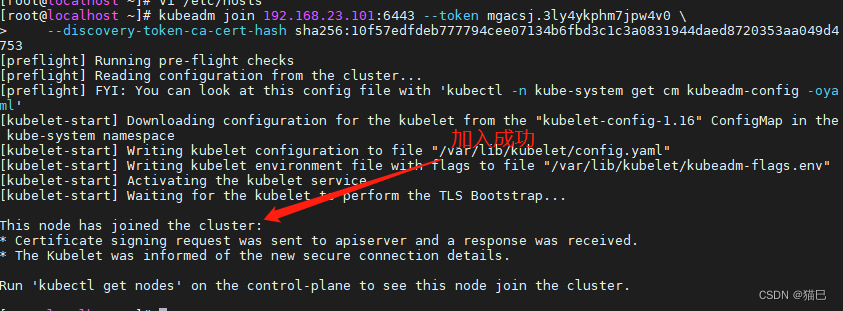
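When several machines need the same host entries, an idempotent append avoids duplicate lines. A sketch with a hypothetical helper; the IP 192.168.23.101 is the master address from the join command below, but the hostname "master" is an assumption, and work2's IP is not given in this article, so substitute your own.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless some
# non-comment line already maps NAME.
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$hosts"; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}

# add_host_entry 192.168.23.101 master   # hostname "master" is an assumption
# add_host_entry 192.168.23.102 work1
```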

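2. Join the cluster

On the worker node, run the join command that the master printed when it was initialized; this places the node under the master's management. (This is the author's join command; run the one printed for your own cluster.)

kubeadm join 192.168.23.101:6443 --token mgacsj.3ly4ykphm7jpw4v0 \
    --discovery-token-ca-cert-hash sha256:10f57edfdeb777794cee07134b6fbd3c1c3a0831944daed8720353aa049d4753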

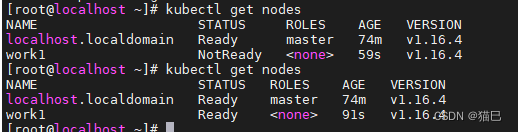
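If the join command has been lost, its hash can be recomputed on the master with the openssl pipeline given in the kubeadm join documentation. Bootstrap tokens expire after 24 hours by default, in which case kubeadm token create --print-join-command prints a complete new join command. The function wrapper below is hypothetical.

```shell
# Hypothetical helper: print the sha256 hash of the cluster CA's public key,
# the value used in --discovery-token-ca-cert-hash sha256:<hash>.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master:
# echo "sha256:$(ca_cert_hash)"
# kubeadm token create --print-join-command
```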

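Back on the master, check the cluster again (don't worry if a node shows NotReady; it may need a few seconds):

kubectl get nodes

At this point work1 is deployed. Repeat the same steps to deploy work2.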

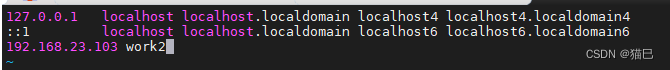

3. Set up work2

hostnamectl set-hostname work2

vi /etc/hosts

kubeadm join 192.168.23.101:6443 --token mgacsj.3ly4ykphm7jpw4v0 \
    --discovery-token-ca-cert-hash sha256:10f57edfdeb777794cee07134b6fbd3c1c3a0831944daed8720353aa049d4753

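Related articles

【Kubernetes】Building a k8s Cluster on CentOS 7 (Part 1) - Environment Setup and Installation

【Kubernetes】Building a k8s Cluster on CentOS 7 (Part 2) - Commands and Explanations

【Kubernetes】Building a k8s Cluster on CentOS 7 (Part 3) - Using Storage

【Kubernetes】Building a k8s Cluster on CentOS 7 (Part 4) - How Networking Works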

Attachment

kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.0.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel:v0.17.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel:v0.17.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
