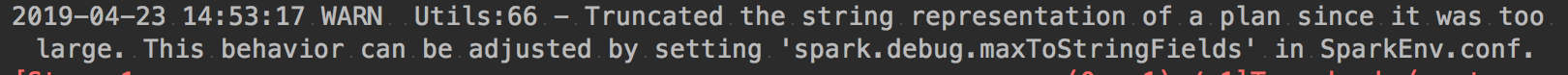

This behavior can be adjusted by setting 'spark.debug.maxToStringFields' in SparkEnv.conf.

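    from pyspark.sql import SparkSession

    def create_spark():
        # Build a local SparkSession with default settings.
        spark = SparkSession. \
            builder.master('local'). \
            appName('pipelinedemo'). \
            getOrCreate()
        return spark

Error output: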

WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Setting default log level to "WARN".

Fix:

    def create_spark():
        spark = SparkSession. \
            builder.master('local'). \
            appName('pipelinedemo'). \
            config("spark.debug.maxToStringFields", "100"). \
            getOrCreate()  # the config(...) line above is the added one
        return spark
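The fix above can also be factored into a small reusable helper. This is only a sketch: the name `with_plan_field_limit`, its `key` parameter, and the default of 100 are illustrative assumptions, not part of PySpark. It relies only on the builder exposing `.config(key, value)`, which `SparkSession.builder` does. The `key` is left overridable so it can be matched to whichever setting name the warning prints in your Spark version.

```python
def with_plan_field_limit(builder, max_fields=100,
                          key="spark.debug.maxToStringFields"):
    """Return `builder` with the plan-string field limit applied.

    Hypothetical helper: `builder` is expected to be SparkSession.builder,
    but any object with a chainable .config(key, value) method works.
    """
    if max_fields <= 0:
        raise ValueError("max_fields must be positive")
    # The value is passed as a string, as in the fix above.
    return builder.config(key, str(max_fields))


def create_spark(max_fields=100):
    # Imported lazily so the helper above stays usable without pyspark.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.master("local").appName("pipelinedemo")
    return with_plan_field_limit(builder, max_fields).getOrCreate()
```

After `spark = create_spark()`, calling `spark.conf.get("spark.debug.maxToStringFields")` is one way to check that the value was picked up by the session.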
