【Hadoop】MapReduce Example: Word Frequency Counting

Table of Contents

- I. Preparation

- II. WordCount Example

- III. Word Frequency Example: The Legend of the Condor Heroes (射雕英雄传)

I. Preparation

1. Create a new Maven project

2. Add the project dependencies to pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.hdtrain</groupId>
  <artifactId>wordcount</artifactId>
  <version>1.0-SNAPSHOT</version>

  <name>wordcount</name>
  <url>http://www.example.com</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
  </properties>

  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.11</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>log4j</groupId>
      <artifactId>log4j</artifactId>
      <version>1.2.17</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>2.7.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>2.7.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>2.7.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-mapreduce-client-core</artifactId>
      <version>2.7.1</version>
    </dependency>
    <dependency>
      <groupId>com.janeluo</groupId>
      <artifactId>ikanalyzer</artifactId>
      <version>2012_u6</version>
    </dependency>
  </dependencies>

  <build>
    <pluginManagement>
      <plugins>
        <plugin>
          <artifactId>maven-clean-plugin</artifactId>
          <version>3.1.0</version>
        </plugin>
        <plugin>
          <artifactId>maven-resources-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>3.8.0</version>
        </plugin>
        <plugin>
          <artifactId>maven-surefire-plugin</artifactId>
          <version>2.22.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-jar-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-install-plugin</artifactId>
          <version>2.5.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-deploy-plugin</artifactId>
          <version>2.8.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-site-plugin</artifactId>
          <version>3.7.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-project-info-reports-plugin</artifactId>
          <version>3.0.0</version>
        </plugin>
      </plugins>
    </pluginManagement>
  </build>
</project>

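3. Add a resources folder to the project

Put the configuration files core-site.xml, hdfs-site.xml and mapred-site.xml in it.

Mark the folder as a resources folder (`src/main/resources` in the standard Maven layout) so that the files end up on the classpath, where Hadoop's `Configuration` picks them up.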

II. WordCount Example

The English edition of "Harry Potter" is used as the input data.

1. WordCountJob.java

package com.hdtrain;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

// Defines the wordcount job
public class WordCountJob {
    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        //System.setProperty("HADOOP_USER_NAME", "root");
        // Load the configuration (core-site.xml etc. from the classpath)
        Configuration configuration = new Configuration(true);
        // Run with the local job runner instead of submitting to YARN
        configuration.set("mapreduce.framework.name", "local");
        // Create the job
        Job job = Job.getInstance(configuration);
        // Job parameters
        job.setJobName("wordcount-" + System.currentTimeMillis()); // job name
        job.setJarByClass(WordCountJob.class); // main class of the job
        job.setNumReduceTasks(2);
        // Input file
        FileInputFormat.setInputPaths(job, "/data/harry.txt");
        // Output directory (must not exist yet, hence the timestamp suffix)
        FileOutputFormat.setOutputPath(job, new Path("/results/wordcount-" + System.currentTimeMillis()));
        // Types emitted by the mapper
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        // Types emitted by the reducer
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        // Mapper class
        job.setMapperClass(WordCountMapper.class);
        // Reducer class
        job.setReducerClass(WordCountReducer.class);
        // Submit the job and wait for it to finish
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
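With `job.setNumReduceTasks(2)` the job runs two reducers and writes two output files, `part-r-00000` and `part-r-00001`. Each key is routed to a reducer by the partitioner; Hadoop's default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The minimal sketch below reproduces that formula in plain Java. As an assumption for illustration it uses `String.hashCode()`, while Hadoop's `Text.hashCode()` hashes the UTF-8 bytes, so the concrete reducer chosen for a given word will usually differ from a real run; the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class HashPartitionSketch {

    // Same formula as Hadoop's default HashPartitioner;
    // String.hashCode() stands in for Text.hashCode() (an assumption)
    static int partition(String key, int numReduceTasks) {
        // Masking with Integer.MAX_VALUE clears the sign bit, so the result is never negative
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int numReduceTasks = 2; // mirrors job.setNumReduceTasks(2)
        String[] words = {"Harry", "Potter", "Hermione", "Ron", "Harry"};

        // Group the words by the reducer (and thus the output file) they would go to
        Map<Integer, List<String>> byReducer = new TreeMap<>();
        for (String w : words) {
            byReducer.computeIfAbsent(partition(w, numReduceTasks), p -> new ArrayList<>()).add(w);
        }
        byReducer.forEach((p, ws) -> System.out.printf("part-r-%05d <- %s%n", p, ws));
    }
}
```

Because the partition depends only on the key, both occurrences of "Harry" land in the same reducer, which is what lets each reducer produce a complete count for the words it owns.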

2. WordCountMapper.java

package com.hdtrain;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Extract the words: drop everything except letters, digits, whitespace and apostrophes,
        // then split on runs of whitespace
        String[] words = value.toString().replaceAll("[^a-zA-Z0-9\\s']", "").trim().split("\\s+");
        // Emit (word, 1) for each word; the reducer adds up the counts
        for (String word : words) {
            if (!word.isEmpty()) { // a blank line yields a single empty token
                context.write(new Text(word), new IntWritable(1));
            }
        }
    }
}
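The mapper's tokenization can be tried outside Hadoop. This minimal sketch (the class `TokenizeSketch` and its methods are hypothetical) applies the same cleanup regex and contrasts `split(" ")`, which yields empty strings wherever two spaces meet or a line starts with a space, with trimming and splitting on `\\s+`. Every empty string a mapper emits would otherwise be counted as a "word" of its own.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class TokenizeSketch {

    // Remove everything except letters, digits, whitespace and apostrophes (same regex as the mapper)
    static String clean(String line) {
        return line.replaceAll("[^a-zA-Z0-9\\s']", "");
    }

    // Split on runs of whitespace and drop empty tokens
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        for (String w : clean(line).trim().split("\\s+")) {
            if (!w.isEmpty()) {
                out.add(w);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String line = "  \"Harry,\" said Ron.  It's  Hogwarts!";
        // Splitting on a single space keeps empty strings
        System.out.println(Arrays.toString(clean(line).split(" ")));
        // Splitting on whitespace runs keeps only real words
        System.out.println(tokens(line)); // [Harry, said, Ron, It's, Hogwarts]
    }
}
```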

3. WordCountReducer.java

package com.hdtrain;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.util.Iterator;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, LongWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Running total for this word
        long count = 0;
        // Iterate over all the 1s emitted for this word
        Iterator<IntWritable> iterator = values.iterator();
        while (iterator.hasNext()) {
            int value = iterator.next().get();
            count += value;
        }
        // Write (word, total)
        context.write(key, new LongWritable(count));
    }
}
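To see how the mapper and reducer cooperate, the following plain-Java sketch simulates the three phases in memory: the map step emits `(word, 1)` pairs, the shuffle groups the values by key in sorted order (a `TreeMap` here, reflecting that a reducer receives its keys sorted), and the reduce step sums each group into a `long`, as `WordCountReducer` does. It is a single-process model for illustration only: it does not model input splits, partitioning, spilling or fault tolerance, and the class name is hypothetical.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCount {

    static Map<String, Long> run(List<String> lines) {
        // Map phase: emit (word, 1) for every token
        List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
        for (String line : lines) {
            for (String w : line.trim().split("\\s+")) {
                if (!w.isEmpty()) {
                    mapped.add(new AbstractMap.SimpleEntry<>(w, 1));
                }
            }
        }
        // Shuffle phase: group the values by key, keys kept in sorted order
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> e : mapped) {
            grouped.computeIfAbsent(e.getKey(), k -> new ArrayList<>()).add(e.getValue());
        }
        // Reduce phase: sum the values of each key into a long
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            long count = 0;
            for (int v : e.getValue()) {
                count += v;
            }
            counts.put(e.getKey(), count);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("the boy who lived", "the boy");
        // Print "word<TAB>count", one per line, in key order
        run(input).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```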

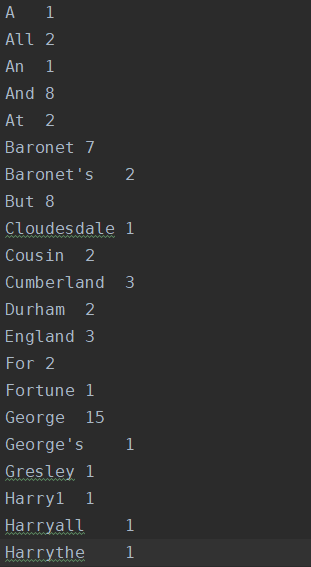

4. Results
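The results are written by the default `TextOutputFormat` as one `word<TAB>count` line per word, spread over `part-r-00000` and `part-r-00001` because the job uses two reducers; each file is sorted by word, not by count. A minimal sketch for listing the most frequent words from such lines, assuming they have already been copied off HDFS (for example with `hdfs dfs -get`) and read into a list; the `TopWords` class and `topN` helper are hypothetical.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

public class TopWords {

    // Parse "word<TAB>count" lines and return the n entries with the highest counts
    static List<Map.Entry<String, Long>> topN(List<String> lines, int n) {
        List<Map.Entry<String, Long>> entries = new ArrayList<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip lines without a tab separator
            }
            entries.add(new SimpleEntry<>(line.substring(0, tab), Long.parseLong(line.substring(tab + 1).trim())));
        }
        // Highest count first; ties broken alphabetically by word
        entries.sort((a, b) -> {
            int byCount = Long.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return new ArrayList<>(entries.subList(0, Math.min(n, entries.size())));
    }

    public static void main(String[] args) {
        // Lines as they might appear in part-r-00000 / part-r-00001 (made-up values)
        List<String> lines = Arrays.asList("Harry\t3", "Ron\t1", "the\t5", "Hermione\t1");
        topN(lines, 2).forEach(e -> System.out.println(e.getKey() + " " + e.getValue()));
    }
}
```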

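III. Word Frequency Example: The Legend of the Condor Heroes (射雕英雄传)

The Chinese text of the novel "The Legend of the Condor Heroes" (射雕英雄传) is used as the input data.

1. SdyxzJob.java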

package com.hdtrain;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class SdyxzJob {
    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // 1. Load the configuration
        Configuration configuration = new Configuration(true);
        configuration.set("mapreduce.framework.name", "local");
        // 2. Create the job
        Job job = Job.getInstance(configuration);
        // 3. Job parameters
        job.setJobName("射雕英雄传-" + System.currentTimeMillis());
        job.setJarByClass(SdyxzJob.class);
        job.setNumReduceTasks(2);
        // 4. Input data file
        FileInputFormat.setInputPaths(job, new Path("/data/sdyxz.txt"));
        // 5. Output directory
        FileOutputFormat.setOutputPath(job, new Path("/results/sdyxz-" + System.currentTimeMillis()));
        // 6. Types emitted by the mapper and by the reducer
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        // 7. Mapper class
        job.setMapperClass(SdyxzMapper.class);
        // 8. Reducer class
        job.setReducerClass(SdyxzReducer.class);
        // 9. Submit the job
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
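Hadoop resolves the map output key type with a fallback: if `mapreduce.map.output.key.class` is not set (through `setMapOutputKeyClass`), the job output key class set with `setOutputKeyClass` is used instead, and that in turn defaults to `LongWritable`. This is why a job that calls only `setOutputKeyClass(Text.class)` still ships `Text` keys out of the mapper. The sketch below models that lookup with a plain `Map` of property names; it is a simplified illustration of the rule, not Hadoop's `JobConf` code, and the `TypeFallbackModel` class is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

public class TypeFallbackModel {

    // Simplified model: the map output key class falls back to the job output key class
    static String mapOutputKeyClass(Map<String, String> conf) {
        String mapKey = conf.get("mapreduce.map.output.key.class");
        if (mapKey != null) {
            return mapKey;
        }
        // Job output key class, defaulting to LongWritable when unset
        return conf.getOrDefault("mapreduce.job.output.key.class", "org.apache.hadoop.io.LongWritable");
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        conf.put("mapreduce.job.output.key.class", "org.apache.hadoop.io.Text"); // like setOutputKeyClass(Text.class)
        System.out.println(mapOutputKeyClass(conf)); // org.apache.hadoop.io.Text

        conf.put("mapreduce.map.output.key.class", "org.apache.hadoop.io.IntWritable"); // explicit map key type wins
        System.out.println(mapOutputKeyClass(conf)); // org.apache.hadoop.io.IntWritable
    }
}
```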

2. SdyxzMapper.java

package com.hdtrain;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

import java.io.IOException;
import java.io.StringReader;

public class SdyxzMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Segment the line into Chinese words with IK Analyzer (true = smart mode)
        StringReader stringReader = new StringReader(value.toString());
        IKSegmenter ikSegmenter = new IKSegmenter(stringReader, true);
        Lexeme lexeme = null;
        // Emit (word, 1) for each segmented word
        while ((lexeme = ikSegmenter.next()) != null) {
            context.write(new Text(lexeme.getLexemeText()), new IntWritable(1));
        }
    }
}
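Chinese text has no spaces between words, so the whitespace split used in the English example would turn a whole clause into a single "word"; that is why this mapper hands each line to IK Analyzer's `IKSegmenter`. To make the idea concrete, the sketch below implements forward maximum matching, a simple dictionary-based segmentation technique. It is a swapped-in toy algorithm with a hypothetical three-word dictionary, not IK Analyzer's actual implementation, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class ForwardMaxMatch {

    // At each position take the longest dictionary word (up to maxLen chars);
    // fall back to a single character when nothing matches
    static List<String> segment(String text, Set<String> dict, int maxLen) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int end = Math.min(text.length(), i + maxLen);
            // Shrink the window until it is a dictionary word or a single character
            while (end > i + 1 && !dict.contains(text.substring(i, end))) {
                end--;
            }
            tokens.add(text.substring(i, end));
            i = end;
        }
        return tokens;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>(Arrays.asList("正是", "八月", "天时")); // toy dictionary
        String sentence = "正是八月天时";
        System.out.println(Arrays.toString(sentence.split("\\s+"))); // [正是八月天时]
        System.out.println(segment(sentence, dict, 4)); // [正是, 八月, 天时]
    }
}
```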

3. SdyxzReducer.java

package com.hdtrain;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.util.Iterator;

public class SdyxzReducer extends Reducer<Text, IntWritable, Text, LongWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Sum all the 1s emitted for this word
        long count = 0;
        Iterator<IntWritable> iterator = values.iterator();
        while (iterator.hasNext()) {
            int value = iterator.next().get();
            count += value;
        }
        // Write (word, total)
        context.write(key, new LongWritable(count));
    }
}

4. IK Analyzer example

package com.hdtrain;

import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

import java.io.IOException;
import java.io.StringReader;

public class IKword {
    public static void main(String[] args) throws IOException {
        // Sample passage from the novel
        StringReader stringReader = new StringReader("畔一排数十株乌柏树,叶子似火烧般红,正是八月天时。村前村后的野草刚起始变黄,一抹斜阳映照之下,更增了几分萧索。两株大松树下围着一堆村民,男男女女和十几个小孩,正自聚精会神的听着一个瘦削的老者说话。");
        // true = smart segmentation mode
        IKSegmenter ikSegmenter = new IKSegmenter(stringReader, true);
        Lexeme lexeme = null;
        // Print one segmented word per line
        while ((lexeme = ikSegmenter.next()) != null) {
            System.out.println(lexeme.getLexemeText());
        }
    }
}

5. Results
