批量发送消息mysql模型_rocketmq-批量发送消息

参考:

https://blog.csdn.net/u010277958/article/details/88647281

https://blog.csdn.net/u010634288/article/details/56049305

https://blog.csdn.net/u014004279/article/details/99644995

RocketMQ-批量发送消息

批量发送消息可提高传递小消息的性能。同时也需要满足以下特征:

批量消息要求必要具有同一topic、相同消息配置

不支持延时消息

建议一个批量消息最好不要超过1MB大小

示例:

String topic = "BatchTest";

List messages = new ArrayList<>();

messages.add(new Message(topic, "TagA", "Order1", "Hello world 0".getBytes()));

messages.add(new Message(topic, "TagA", "Order2", "Hello world 1".getBytes()));

messages.add(new Message(topic, "TagA", "Order3", "Hello world 2".getBytes()));try{

producer.send(messages);

}catch(Exception e) {

e.printStackTrace();

}

在发送批量消息时先构建一个消息对象集合,然后调用send(Collection msg)方法即可。由于批量消息的1M限制,所以一般情况下在集合中添加消息需要先计算当前集合中消息对象的大小是否超过限制,如果超过限制也可以使用分割消息的方式进行多次批量发送。

RocketMQ之批量消息发送源码解析

DefaultProducer.send

RocketMQ提供了批量发送消息的API,同样在DefaultProducer.java中

@OverridepublicSendResult send(

Collection msgs) throwsMQClientException, RemotingException,

MQBrokerException, InterruptedException {return this.defaultMQProducerImpl.send(batch(msgs));

}

它的参数为Message集合,也就是一批消息。它的另外一个重载方法提供了发送超时时间参数

@Overridepublic SendResult send(Collectionmsgs,long timeout) throwsMQClientException, RemotingException,

MQBrokerException, InterruptedException {return this.defaultMQProducerImpl.send(batch(msgs), timeout);

}

可以看到是将消息通过batch()方法打包为单条消息,我们看一下batch方法的逻辑

DefaultProducer.batch

private MessageBatch batch(Collection msgs) throws MQClientException {

// 声明批量消息体

MessageBatch msgBatch;

try {

//从Message的list生成批量消息体MessageBatch

msgBatch =MessageBatch.generateFromList(msgs);for(Message message : msgBatch) {

Validators.checkMessage(message,this);

MessageClientIDSetter.setUniqID(message);

message.setTopic(withNamespace(message.getTopic()));

}//设置消息体,此时的消息体已经是处理过后的批量消息体

msgBatch.setBody(msgBatch.encode());

}catch(Exception e) {throw new MQClientException("Failed to initiate the MessageBatch", e);

}//设置topic

msgBatch.setTopic(withNamespace(msgBatch.getTopic()));returnmsgBatch;

}

从代码可以看到,核心思想是将一批消息(Collection msgs)打包为MessageBatch对象,我们看下MessageBatch的声明

public class MessageBatch extends Message implements Iterable {

private final List messages;

private MessageBatch(Listmessages) {this.messages =messages;

}

可以看到MessageBatch继承自Message,持有List 引用。

我们接着看一下generateFromList方法

MessageBatch.generateFromList

public static MessageBatch generateFromList(Collectionmessages) {assert messages != null;assert messages.size() > 0;

//首先实例化一个Message的list

List messageList = new ArrayList(messages.size());

Message first= null;

//对messages集合进行遍历

for (Message message : messages) {

//判断延时级别,如果大于0抛出异常,原因为:批量消息发送不支持延时

if (message.getDelayTimeLevel() > 0) {throw newUnsupportedOperationException

("TimeDelayLevel in not supported for batching");

}

//判断topic是否以 **"%RETRY%"** 开头,如果是,//则抛出异常,原因为:批量发送消息不支持消息重试

if(message.getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {throw new UnsupportedOperationException("Retry Group is not supported for batching");

}

//判断集合中的每个Message的topic与批量发送topic是否一致,//如果不一致则抛出异常,原因为://批量消息中的每个消息实体的Topic要和批量消息整体的topic保持一致。

if (first == null) {

first=message;

}else{if (!first.getTopic().equals(message.getTopic())) {throw new UnsupportedOperationException("The topic of the messages in one batch should be the same");

}

//判断批量消息的首个Message与其他的每个Message实体的等待消息存储状态是否相同,//如果不同则报错,原因为:批量消息中每个消息的waitStoreMsgOK状态均应该相同。

if (first.isWaitStoreMsgOK() !=message.isWaitStoreMsgOK()) {throw new UnsupportedOperationException("The waitStoreMsgOK of the messages in one batch should the same");

}

}

//校验通过后,将message实体添加到messageList中

messageList.add(message);

}

//将处理完成的messageList作为构造方法,//初始化MessageBatch实体,并设置topic以及isWaitStoreMsgOK状态。

MessageBatch messageBatch = new MessageBatch(messageList);

messageBatch.setTopic(first.getTopic());

messageBatch.setWaitStoreMsgOK(first.isWaitStoreMsgOK());returnmessageBatch;

}

总结一下,generateFromList方法对调用方设置的Collection 集合进行遍历,经过前置校验之后,转换为MessageBatch对象并返回给DefaultProducer.batch方法中,我们接着看DefaultProducer.batch的逻辑。

到此,通过MessageBatch.generateFromList方法,将发送端传入的一批消息集合转换为了MessageBatch实体。

DefaultProducer.batch

private MessageBatch batch(Collection msgs) throws MQClientException {

//声明批量消息体

MessageBatch msgBatch;try{//从Message的list生成批量消息体MessageBatch

msgBatch =MessageBatch.generateFromList(msgs);for(Message message : msgBatch) {

Validators.checkMessage(message,this);

MessageClientIDSetter.setUniqID(message);

message.setTopic(withNamespace(message.getTopic()));

}//设置消息体,此时的消息体已经是处理过后的批量消息体

msgBatch.setBody(msgBatch.encode());

}catch(Exception e) {throw new MQClientException("Failed to initiate the MessageBatch", e);

}//设置topic

msgBatch.setTopic(withNamespace(msgBatch.getTopic()));returnmsgBatch;

}

注意下面这行代码:

//设置消息体,此时的消息体已经是处理过后的批量消息体

msgBatch.setBody(msgBatch.encode());

这里对MessageBatch进行消息编码处理,通过调用MessageBatch的encode方法实现,代码逻辑如下:

public byte[] encode() {returnMessageDecoder.encodeMessages(messages);

}

可以看到是通过静态方法 encodeMessages(List messages) 实现的。

我们看一下encodeMessages方法的逻辑:

public static byte[] encodeMessages(Listmessages) {//TO DO refactor, accumulate in one buffer, avoid copies

List encodedMessages = new ArrayList(messages.size());int allSize = 0;for (Message message : messages) {

//遍历messages集合,分别对每个Message实体进行编码操作,转换为byte[]

byte[] tmp =encodeMessage(message);//将转换后的单个Message的byte[]设置到encodedMessages中

encodedMessages.add(tmp);//批量消息的二进制数据长度随实际消息体递增

allSize +=tmp.length;

}byte[] allBytes = new byte[allSize];int pos = 0;for (byte[] bytes : encodedMessages) {//遍历encodedMessages,按序复制每个Message的二进制格式消息体

System.arraycopy(bytes, 0, allBytes, pos, bytes.length);

pos+=bytes.length;

}//返回批量消息整体的消息体二进制数组

returnallBytes;

}

encodeMessages的逻辑在注释中分析的已经比较清楚了,其实就是遍历messages,并按序拼接每个Message实体的二进制数组格式消息体并返回。

我们可以继续看一下单个Message是如何进行编码的,调用了 MessageDecoder.encodeMessage(message) 方法,逻辑如下:

public static byte[] encodeMessage(Message message) {//only need flag, body, properties

byte[] body =message.getBody();int bodyLen =body.length;

String properties=messageProperties2String(message.getProperties());byte[] propertiesBytes =properties.getBytes(CHARSET_UTF8);//note properties length must not more than Short.MAX

short propertiesLength = (short) propertiesBytes.length;int sysFlag =message.getFlag();int storeSize = 4 //1 TOTALSIZE

+ 4 //2 MAGICCOD

+ 4 //3 BODYCRC

+ 4 //4 FLAG

+ 4 + bodyLen //4 BODY

+ 2 +propertiesLength;

ByteBuffer byteBuffer=ByteBuffer.allocate(storeSize);//1 TOTALSIZE

byteBuffer.putInt(storeSize);

//2 MAGICCODE

byteBuffer.putInt(0);

//3 BODYCRC

byteBuffer.putInt(0);

//4 FLAG

int flag =message.getFlag();

byteBuffer.putInt(flag);

//5 BODY

byteBuffer.putInt(bodyLen);

byteBuffer.put(body);

//6 properties

byteBuffer.putShort(propertiesLength);

byteBuffer.put(propertiesBytes);

returnbyteBuffer.array();

}

这里其实就是将消息按照RocektMQ的消息协议进行编码,格式为:

消息总长度 ---4字节

魔数---4字节

bodyCRC校验码---4字节

flag标识---4字节

body长度---4字节

消息体---消息体实际长度N字节

属性长度---2字节

扩展属性--- N字节

通过encodeMessage方法处理之后,消息便会被编码为固定格式,最终会被Broker端进行处理并持久化。

其他

到此便是批量消息发送的源码分析,实际上RocketMQ在处理批量消息的时候是将其解析为单个消息再发送的,这样就在底层统一了单条消息、批量消息发送的逻辑,让整个框架的设计更加健壮,也便于我们进行理解学习。

RocketMQ批量消费、消息重试、消费模式、刷盘方式

一、Consumer 批量消费

可以通过consumer.setConsumeMessageBatchMaxSize(10);//每次拉取10条

这里需要分为2种情况1、Consumer端先启动 2、Consumer端后启动. 正常情况下:应该是Consumer需要先启动

1、Consumer端先启动

Consumer代码如下

packagequickstart;importjava.util.List;importcom.alibaba.rocketmq.client.consumer.DefaultMQPushConsumer;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;importcom.alibaba.rocketmq.client.consumer.listener.MessageListenerConcurrently;importcom.alibaba.rocketmq.client.exception.MQClientException;importcom.alibaba.rocketmq.common.consumer.ConsumeFromWhere;importcom.alibaba.rocketmq.common.message.MessageExt;/*** Consumer,订阅消息*/

public classConsumer {public static void main(String[] args) throwsInterruptedException, MQClientException {

DefaultMQPushConsumer consumer= new DefaultMQPushConsumer("please_rename_unique_group_name_4");

consumer.setNamesrvAddr("192.168.100.145:9876;192.168.100.146:9876");

consumer.setConsumeMessageBatchMaxSize(10);/*** 设置Consumer第一次启动是从队列头部开始消费还是队列尾部开始消费

* 如果非第一次启动,那么按照上次消费的位置继续消费*/consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

consumer.subscribe("TopicTest", "*");

consumer.registerMessageListener(newMessageListenerConcurrently() {public ConsumeConcurrentlyStatus consumeMessage(Listmsgs, ConsumeConcurrentlyContext context) {try{

System.out.println("msgs的长度" +msgs.size());

System.out.println(Thread.currentThread().getName()+ " Receive New Messages: " +msgs);

}catch(Exception e) {

e.printStackTrace();returnConsumeConcurrentlyStatus.RECONSUME_LATER;

}returnConsumeConcurrentlyStatus.CONSUME_SUCCESS;

}

});

consumer.start();

System.out.println("Consumer Started.");

}

}

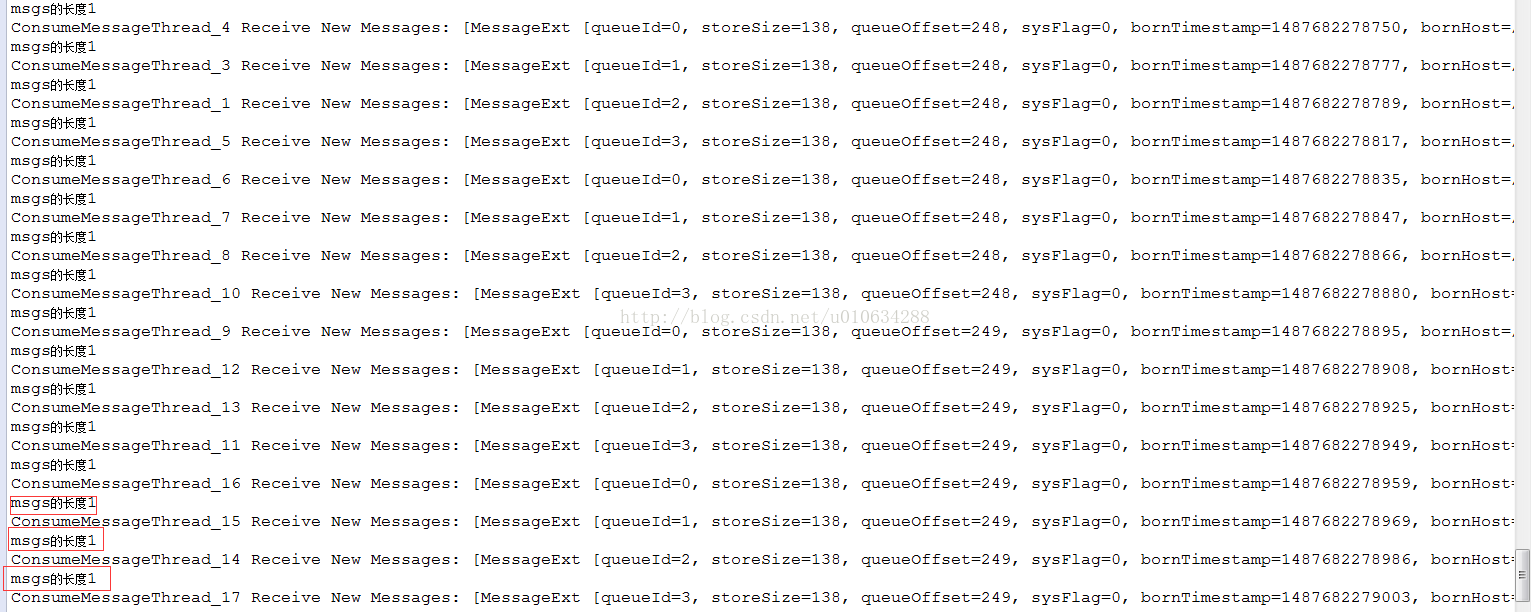

由于这里是Consumer先启动,所以他会去轮询MQ上是否有订阅队列的消息,由于每次producer插入一条,Consumer就拿一条所以测试结果如下(每次size都是1):

2、Consumer端后启动,也就是Producer先启动

由于这里是Consumer后启动,所以MQ上也就堆积了一堆数据,Consumer的

consumer.setConsumeMessageBatchMaxSize(10);//每次拉取10条

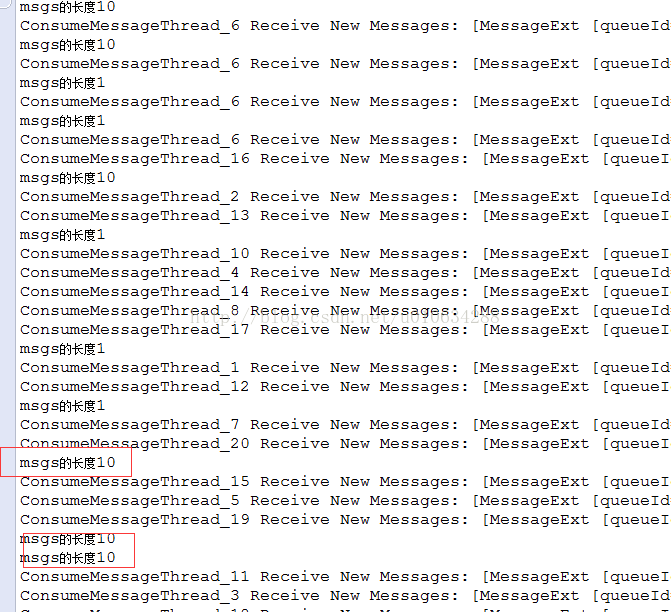

所以这段代码就生效了测试结果如下(每次size最多是10):

二、消息重试机制:消息重试分为2种1、Producer端重试 2、Consumer端重试

1、Producer端重试

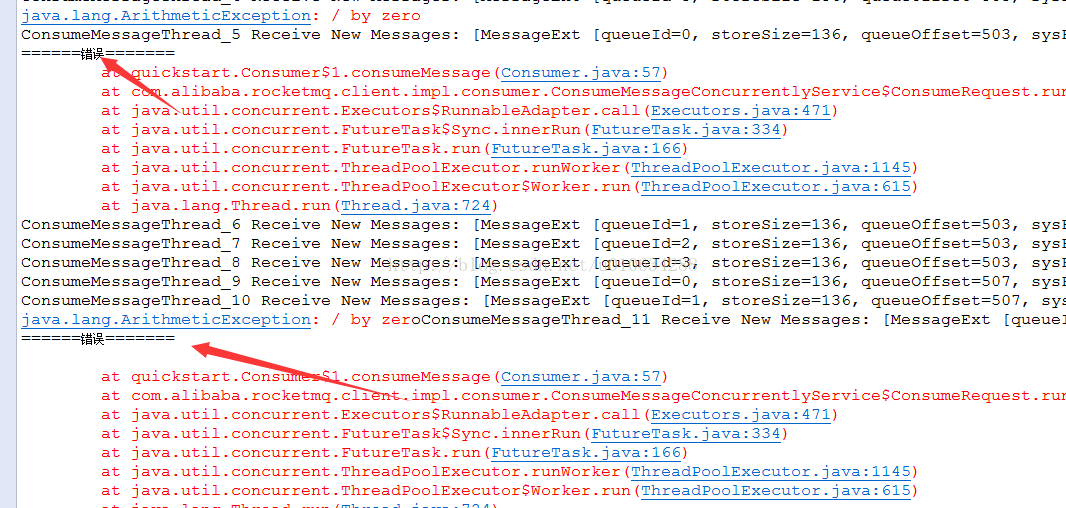

也就是Producer往MQ上发消息没有发送成功,我们可以设置发送失败重试的次数

packagequickstart;importcom.alibaba.rocketmq.client.exception.MQClientException;importcom.alibaba.rocketmq.client.producer.DefaultMQProducer;importcom.alibaba.rocketmq.client.producer.SendResult;importcom.alibaba.rocketmq.common.message.Message;/*** Producer,发送消息

**/

public classProducer {public static void main(String[] args) throwsMQClientException, InterruptedException {

DefaultMQProducer producer= new DefaultMQProducer("please_rename_unique_group_name");

producer.setNamesrvAddr("192.168.100.145:9876;192.168.100.146:9876");

producer.setRetryTimesWhenSendFailed(10);//失败的 情况发送10次

producer.start();for (int i = 0; i < 1000; i++) {try{

Message msg= new Message("TopicTest",//topic

"TagA",//tag

("Hello RocketMQ " + i).getBytes()//body

);

SendResult sendResult=producer.send(msg);

System.out.println(sendResult);

}catch(Exception e) {

e.printStackTrace();

Thread.sleep(1000);

}

}

producer.shutdown();

}

}

2、Consumer端重试

2.1、exception的情况,一般重复16次 10s、30s、1分钟、2分钟、3分钟等等

上面的代码中消费异常的情况返回

return ConsumeConcurrentlyStatus.RECONSUME_LATER;//重试

正常则返回:

return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;//成功

packagequickstart;importjava.util.List;importcom.alibaba.rocketmq.client.consumer.DefaultMQPushConsumer;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;importcom.alibaba.rocketmq.client.consumer.listener.MessageListenerConcurrently;importcom.alibaba.rocketmq.client.exception.MQClientException;importcom.alibaba.rocketmq.common.consumer.ConsumeFromWhere;importcom.alibaba.rocketmq.common.message.MessageExt;/*** Consumer,订阅消息*/

public classConsumer {public static void main(String[] args) throwsInterruptedException, MQClientException {

DefaultMQPushConsumer consumer= new DefaultMQPushConsumer("please_rename_unique_group_name_4");

consumer.setNamesrvAddr("192.168.100.145:9876;192.168.100.146:9876");

consumer.setConsumeMessageBatchMaxSize(10);/*** 设置Consumer第一次启动是从队列头部开始消费还是队列尾部开始消费

* 如果非第一次启动,那么按照上次消费的位置继续消费*/consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

consumer.subscribe("TopicTest", "*");

consumer.registerMessageListener(newMessageListenerConcurrently() {public ConsumeConcurrentlyStatus consumeMessage(Listmsgs, ConsumeConcurrentlyContext context) {try{//System.out.println("msgs的长度" + msgs.size());

System.out.println(Thread.currentThread().getName() + " Receive New Messages: " +msgs);for(MessageExt msg : msgs) {

String msgbody= new String(msg.getBody(), "utf-8");if (msgbody.equals("Hello RocketMQ 4")) {

System.out.println("======错误=======");int a = 1 / 0;

}

}

}catch(Exception e) {

e.printStackTrace();if(msgs.get(0).getReconsumeTimes()==3){//记录日志

return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;//成功

}else{return ConsumeConcurrentlyStatus.RECONSUME_LATER;//重试

}

}return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;//成功

}

});

consumer.start();

System.out.println("Consumer Started.");

}

}

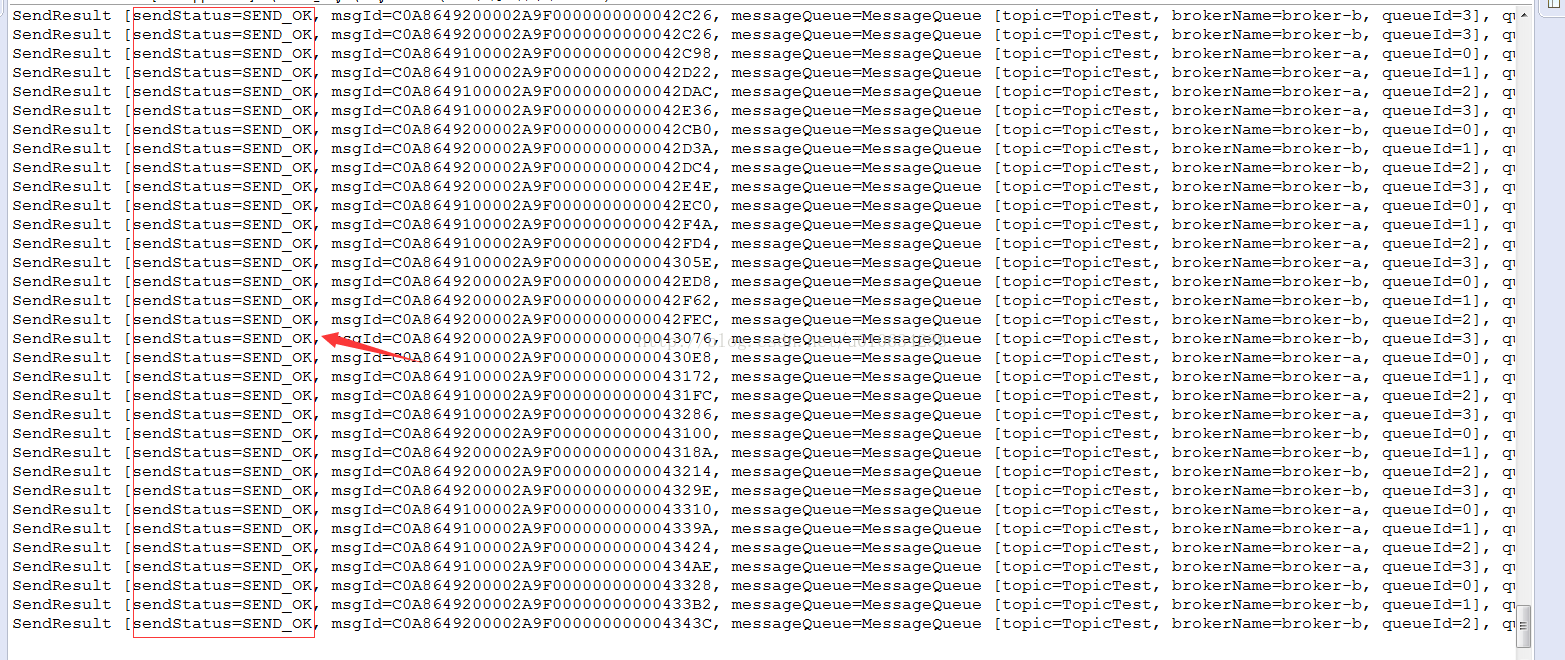

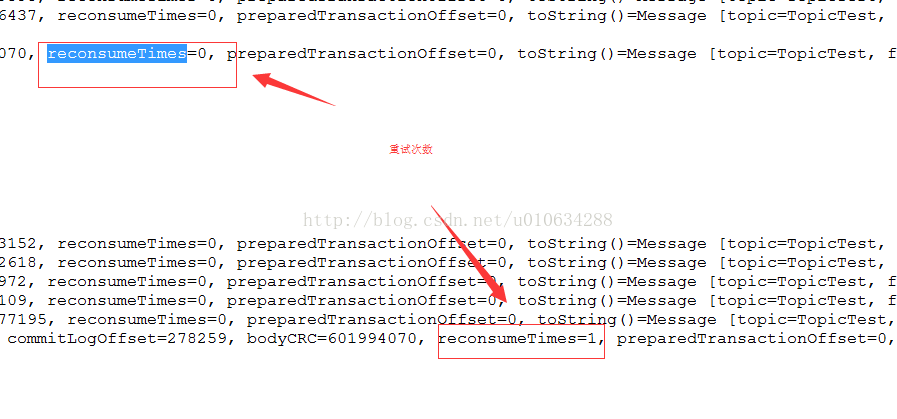

打印结果:

假如超过了多少次之后我们可以让他不再重试记录 日志。

if(msgs.get(0).getReconsumeTimes()==3){

//记录日志

return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;// 成功

}

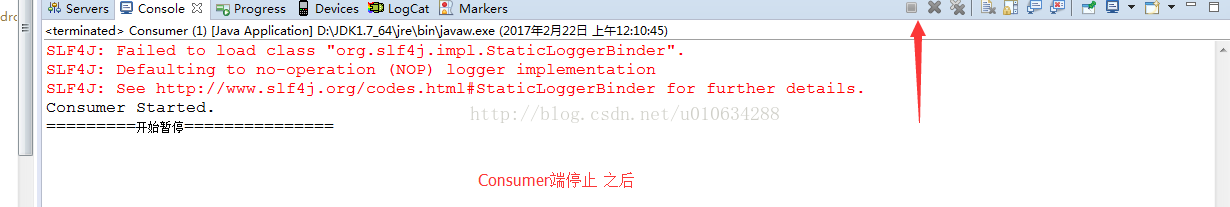

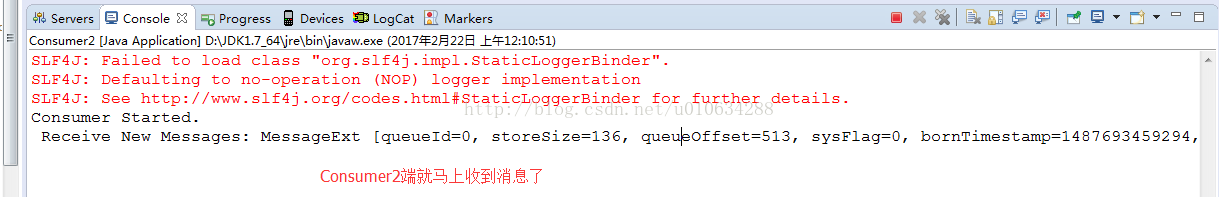

2.2超时的情况,这种情况MQ会无限制的发送给消费端。

就是由于网络的情况,MQ发送数据之后,Consumer端并没有收到导致超时。也就是消费端没有给我返回return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;这样的就认为没有到达Consumer端。

这里模拟Producer只发送一条数据。consumer端暂停1分钟并且不发送接收状态给MQ

packagemodel;importjava.util.List;importcom.alibaba.rocketmq.client.consumer.DefaultMQPushConsumer;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;importcom.alibaba.rocketmq.client.consumer.listener.MessageListenerConcurrently;importcom.alibaba.rocketmq.client.exception.MQClientException;importcom.alibaba.rocketmq.common.consumer.ConsumeFromWhere;importcom.alibaba.rocketmq.common.message.MessageExt;/*** Consumer,订阅消息*/

public classConsumer {public static void main(String[] args) throwsInterruptedException, MQClientException {

DefaultMQPushConsumer consumer= new DefaultMQPushConsumer("message_consumer");

consumer.setNamesrvAddr("192.168.100.145:9876;192.168.100.146:9876");

consumer.setConsumeMessageBatchMaxSize(10);/*** 设置Consumer第一次启动是从队列头部开始消费还是队列尾部开始消费

* 如果非第一次启动,那么按照上次消费的位置继续消费*/consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

consumer.subscribe("TopicTest", "*");

consumer.registerMessageListener(newMessageListenerConcurrently() {public ConsumeConcurrentlyStatus consumeMessage(Listmsgs, ConsumeConcurrentlyContext context) {try{//表示业务处理时间

System.out.println("=========开始暂停===============");

Thread.sleep(60000);for(MessageExt msg : msgs) {

System.out.println(" Receive New Messages: " +msg);

}

}catch(Exception e) {

e.printStackTrace();return ConsumeConcurrentlyStatus.RECONSUME_LATER;//重试

}return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;//成功

}

});

consumer.start();

System.out.println("Consumer Started.");

}

}

三、消费模式

广播消费:rocketMQ默认是集群消费,我们可以通过在Consumer来支持广播消费

consumer.setMessageModel(MessageModel.BROADCASTING);// 广播消费

packagemodel;importjava.util.List;importcom.alibaba.rocketmq.client.consumer.DefaultMQPushConsumer;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;importcom.alibaba.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;importcom.alibaba.rocketmq.client.consumer.listener.MessageListenerConcurrently;importcom.alibaba.rocketmq.client.exception.MQClientException;importcom.alibaba.rocketmq.common.consumer.ConsumeFromWhere;importcom.alibaba.rocketmq.common.message.MessageExt;importcom.alibaba.rocketmq.common.protocol.heartbeat.MessageModel;/*** Consumer,订阅消息*/

public classConsumer2 {public static void main(String[] args) throwsInterruptedException, MQClientException {

DefaultMQPushConsumer consumer= new DefaultMQPushConsumer("message_consumer");

consumer.setNamesrvAddr("192.168.100.145:9876;192.168.100.146:9876");

consumer.setConsumeMessageBatchMaxSize(10);

consumer.setMessageModel(MessageModel.BROADCASTING);//广播消费

consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

consumer.subscribe("TopicTest", "*");

consumer.registerMessageListener(newMessageListenerConcurrently() {public ConsumeConcurrentlyStatus consumeMessage(Listmsgs, ConsumeConcurrentlyContext context) {try{for(MessageExt msg : msgs) {

System.out.println(" Receive New Messages: " +msg);

}

}catch(Exception e) {

e.printStackTrace();return ConsumeConcurrentlyStatus.RECONSUME_LATER;//重试

}return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;//成功

}

});

consumer.start();

System.out.println("Consumer Started.");

}

}

如果我们有2台节点(非主关系),2个节点物理上是分开的,Producer往MQ上写入20条数据 其中broker1中拉取了12条 。broker2中拉取了8 条,这种情况下,假如broker1宕机,那么我们消费数据的时候,只能消费到broker2中的8条,broker1中的12条已经持久化到中。需要broker1回复之后这12条数据才能继续被消费。

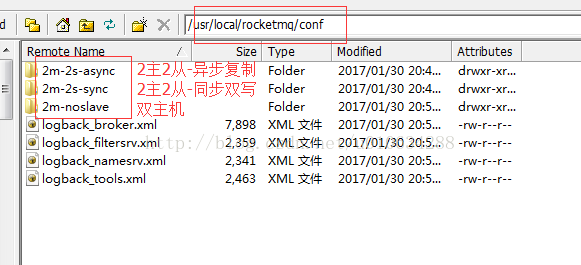

异步复制和同步双写主要是主和从的关系。消息需要实时消费的,就需要采用主从模式部署

异步复制:比如这里有一主一从,我们发送一条消息到主节点之后,这样消息就算从producer端发送成功了,然后通过异步复制的方法将数据复制到从节点

同步双写:比如这里有一主一从,我们发送一条消息到主节点之后,这样消息就并不算从producer端发送成功了,需要通过同步双写的方法将数据同步到从节点后, 才算数据发送成功。

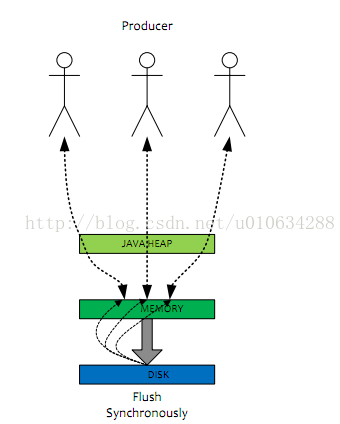

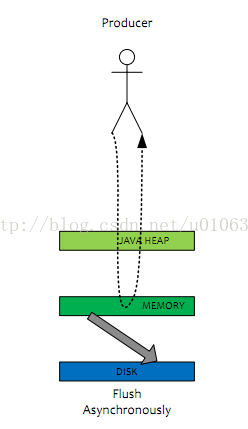

四、刷盘方式

同步刷盘:在消息到达MQ后,RocketMQ需要将数据持久化,同步刷盘是指数据到达内存之后,必须刷到commitlog日志之后才算成功,然后返回producer数据已经发送成功。

异步刷盘:是指数据到达内存之后,返回producer说数据已经发送成功。,然后再写入commitlog日志。

commitlog:

commitlog就是来存储所有的元信息,包含消息体,类似于Mysql、Oracle的redolog,所以主要有CommitLog在,Consume Queue即使数据丢失,仍然可以恢复出来。

consumequeue:记录数据的位置,以便Consume快速通过consumequeue找到commitlog中的数据

本文来自互联网用户投稿,文章观点仅代表作者本人,不代表本站立场,不承担相关法律责任。如若转载,请注明出处。 如若内容造成侵权/违法违规/事实不符,请点击【内容举报】进行投诉反馈!