Text similarity computation based on the Cilin synonym thesaurus, HowNet, fingerprints, character vectors, and word vectors

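Original link: GitHub - liuhuanyong/SentenceSimilarity: self complement of Sentence Similarity compute based on cilin, hownet, simhash, wordvector,vsm models (sentence similarity based on the Cilin synonym thesaurus, HowNet, fingerprints, character and word vectors, and the vector space model).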

Several code snippets in the original do not run as-is, so I modified them.

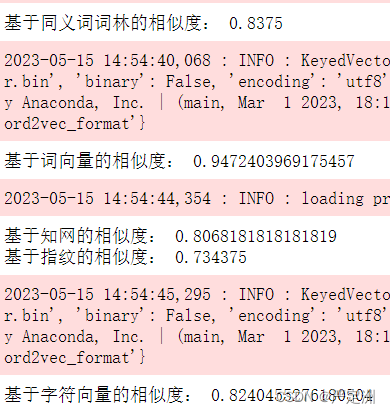

First, here is the end result:

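```python
# import_ipynb lets Jupyter notebooks be imported like ordinary modules;
# each class below is kept in its own notebook, named after its method
import import_ipynb

from 字符向量 import *  # character vectors: SimTokenVec
from 词向量 import *    # word vectors: SimWordVec
from 词林 import *      # Cilin: SimCilin
from 知网 import *      # HowNet: SimHownet
from 指纹 import *      # fingerprints (SimHash): SimHaming

text1 = "我是中国人,我深爱着我的祖国"  # "I am Chinese, and I deeply love my motherland"
text2 = "中国是我的母亲,我热爱她"      # "China is my mother, and I love her"

print("Cilin-based similarity:", SimCilin().distance(text1, text2))
print("Word-vector similarity:", SimWordVec().distance(text1, text2))
print("HowNet-based similarity:", SimHownet().distance(text1, text2))
print("Fingerprint (SimHash) similarity:", SimHaming().distance(text1, text2))
print("Character-vector similarity:", SimTokenVec().distance(text1, text2))
```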

Without further ado, here is the code:

```python
# Sentence similarity based on the Cilin synonym thesaurus
import codecs

import jieba.posseg as pseg


class SimCilin:
    def __init__(self):
        self.cilin_path = 'model/cilin.txt'
        self.sem_dict = self.load_semantic()

    def load_semantic(self):
        '''Load the semantic dictionary: word -> list of Cilin codes.'''
        sem_dict = {}
        with codecs.open(self.cilin_path, 'r', 'utf-8') as f:
            for line in f:
                parts = line.strip().split(' ')
                sem_type, words = parts[0], parts[1:]
                for word in words:
                    # a word can belong to several Cilin categories
                    sem_dict.setdefault(word, []).append(sem_type)
        return sem_dict

    def compute_word_sim(self, word1, word2):
        '''Word similarity: the maximum score over all pairs of codes.'''
        sems_word1 = self.sem_dict.get(word1, [])
        sems_word2 = self.sem_dict.get(word2, [])
        score_list = [self.compute_sem(sem_word1, sem_word2)
                      for sem_word1 in sems_word1 for sem_word2 in sems_word2]
        return max(score_list) if score_list else 0

    def compute_sem(self, sem1, sem2):
        '''Score two 8-character Cilin codes (e.g. "Aa01A01=") level by level.'''
        # levels: major class, middle class, minor class, word group, atomic group, flag (=, #, @)
        sem1 = [sem1[0], sem1[1], sem1[2:4], sem1[4], sem1[5:7], sem1[-1]]
        sem2 = [sem2[0], sem2[1], sem2[2:4], sem2[4], sem2[5:7], sem2[-1]]
        score = 0
        for index in range(len(sem1)):
            if sem1[index] == sem2[index]:
                if index in [0, 1]:
                    score += 3
                elif index == 2:
                    score += 2
                elif index in [3, 4]:
                    score += 1
        # the maximum is 3 + 3 + 2 + 1 + 1 = 10, so the score lies in [0, 1]
        return score / 10

    def distance(self, text1, text2):
        '''Sentence similarity computed from word similarities.'''
        # drop auxiliary words (u), non-words (x) and punctuation (w)
        words1 = [word.word for word in pseg.cut(text1) if word.flag[0] not in ['u', 'x', 'w']]
        words2 = [word.word for word in pseg.cut(text2) if word.flag[0] not in ['u', 'x', 'w']]
        if not words1 or not words2:
            # avoid max() over an empty sequence and division by zero
            return 0.0
        # for each word, keep its best match in the other sentence
        score_words1 = [max(self.compute_word_sim(word1, word2) for word2 in words2) for word1 in words1]
        score_words2 = [max(self.compute_word_sim(word2, word1) for word1 in words1) for word2 in words2]
        # average in each direction and keep the larger value
        return max(sum(score_words1) / len(words1), sum(score_words2) / len(words2))
```
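To get a feel for the Cilin scoring, the sketch below re-implements the level-by-level code comparison of `compute_sem` and the two-way averaging of `distance` as standalone functions. The dictionary `TOY_CILIN` and its codes are hypothetical, made up for illustration rather than taken from `model/cilin.txt`, and the helper names are my own.

```python
# Hypothetical toy data: made-up Cilin codes, not entries from model/cilin.txt
TOY_CILIN = {
    "w1": ["Aa01A01="],
    "w2": ["Aa01A02="],
    "w3": ["Ba01A01="],
}

WEIGHTS = [3, 3, 2, 1, 1, 0]  # points per matching level; the trailing flag scores 0


def cilin_levels(code):
    """Split an 8-character Cilin code into its levels, as compute_sem does."""
    return [code[0], code[1], code[2:4], code[4], code[5:7], code[-1]]


def cilin_code_score(code1, code2):
    """Sum the weights of the matching levels, scaled to [0, 1]."""
    pairs = zip(cilin_levels(code1), cilin_levels(code2), WEIGHTS)
    return sum(weight for a, b, weight in pairs if a == b) / 10


def toy_word_sim(word1, word2):
    """Best score over all code pairs; 0 for words missing from the toy dictionary."""
    scores = [cilin_code_score(c1, c2)
              for c1 in TOY_CILIN.get(word1, [])
              for c2 in TOY_CILIN.get(word2, [])]
    return max(scores, default=0)


def sentence_score(words1, words2, word_sim):
    """Average the best-match scores in each direction and keep the larger one."""
    if not words1 or not words2:
        return 0.0
    s1 = sum(max(word_sim(a, b) for b in words2) for a in words1) / len(words1)
    s2 = sum(max(word_sim(b, a) for a in words1) for b in words2) / len(words2)
    return max(s1, s2)


print(cilin_code_score("Aa01A01=", "Aa01A02="))  # 0.9 (differs only at the atomic group)
print(cilin_code_score("Aa01A01=", "Ab01A01="))  # 0.7 (differs at the middle class)
print(sentence_score(["w1", "w3"], ["w2"], toy_word_sim))  # 0.9
```

The second call shows a property of the scheme as written: lower levels still earn points after a higher level differs, so "Ab01A01=" scores 0.7 against "Aa01A01=". A stricter variant would stop counting at the first mismatching level.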

```python
# Sentence similarity based on word vectors
import logging

import gensim
import jieba.posseg as pseg
import numpy as np

logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)


class SimWordVec:
    def __init__(self):
        self.embedding_path = 'model/token_vector.bin'
        # read in word2vec text format (binary=False), despite the .bin extension
        self.model = gensim.models.KeyedVectors.load_word2vec_format(self.embedding_path, binary=False)

    def get_wordvector(self, word):
        '''Return a word's vector, or a zero vector for out-of-vocabulary words.'''
        try:
            return self.model[word]
        except KeyError:
            return np.zeros(self.model.vector_size)

    def similarity_cosine(self, word_list1, word_list2):
        '''Cosine similarity of two sentences; a sentence vector is the mean of its word vectors.'''
        vector1 = np.mean([self.get_wordvector(word) for word in word_list1], axis=0)
        vector2 = np.mean([self.get_wordvector(word) for word in word_list2], axis=0)
        norm = np.linalg.norm(vector1) * np.linalg.norm(vector2)
        if norm == 0:
            # every word was out of vocabulary, so the cosine is undefined
            return 0.0
        return float(np.dot(vector1, vector2) / norm)

    def distance(self, text1, text2):
        '''Main entry point: segment both sentences, then compare them.'''
        # drop punctuation (w), non-words (x) and auxiliary words (u)
        word_list1 = [word.word for word in pseg.cut(text1) if word.flag[0] not in ['w', 'x', 'u']]
        word_list2 = [word.word for word in pseg.cut(text2) if word.flag[0] not in ['w', 'x', 'u']]
        if not word_list1 or not word_list2:
            return 0.0
        return self.similarity_cosine(word_list1, word_list2)

# def test():
#     text1 = '我喜歡你'
#     text2 = '我愛你'
#     simer = SimWordVec()
#     print(simer.distance(text1, text2))
#
# test()
```

```python
# Sentence similarity based on HowNet
import chardet
import jieba.posseg as pseg


class SimHownet:
    def __init__(self):
        self.semantic_path = 'model/hownet.dat'
        self.semantic_dict = self.load_semanticwords()

    def load_semanticwords(self):
        '''Load the semantic dictionary: word -> list of DEFs, each DEF a list of sememes.'''
        # read the raw bytes so chardet can detect the file encoding
        with open(self.semantic_path, 'rb') as f:
            result = chardet.detect(f.read())
        semantic_dict = {}
        # reopen the file as text using the detected encoding
        with open(self.semantic_path, 'r', encoding=result['encoding']) as f:
            for line in f:
                # fields are separated by spaces, tabs or '>'
                words = [word for word in line.strip().replace(' ', '>').replace('\t', '>').split('>') if word != '']
                if len(words) < 3:
                    continue  # skip blank or malformed lines
                word, word_def = words[0], words[2]
                # a word can have several senses, so keep every DEF as its own sememe list
                semantic_dict.setdefault(word, []).append(word_def.split(','))
        return semantic_dict

    def calculate_semantic(self, DEF1, DEF2):
        '''Jaccard similarity of two sememe lists.'''
        DEF_INTERSECTION = set(DEF1) & set(DEF2)
        DEF_UNION = set(DEF1) | set(DEF2)
        return len(DEF_INTERSECTION) / len(DEF_UNION) if DEF_UNION else 0.0

    def compute_similarity(self, word1, word2):
        '''Word similarity: the best score over all pairs of senses.'''
        DEFS_word1 = self.semantic_dict.get(word1, [])
        DEFS_word2 = self.semantic_dict.get(word2, [])
        scores = [self.calculate_semantic(DEF_word1, DEF_word2)
                  for DEF_word1 in DEFS_word1 for DEF_word2 in DEFS_word2]
        return max(scores) if scores else 0

    def distance(self, text1, text2):
        '''Sentence similarity computed from word similarities.'''
        # drop auxiliary words (u), non-words (x) and punctuation (w)
        words1 = [word.word for word in pseg.cut(text1) if word.flag[0] not in ['u', 'x', 'w']]
        words2 = [word.word for word in pseg.cut(text2) if word.flag[0] not in ['u', 'x', 'w']]
        if not words1 or not words2:
            return 0.0
        # for each word, keep its best match in the other sentence
        score_words1 = [max(self.compute_similarity(word1, word2) for word2 in words2) for word1 in words1]
        score_words2 = [max(self.compute_similarity(word2, word1) for word1 in words1) for word2 in words2]
        # average in each direction and keep the larger value
        return max(sum(score_words1) / len(words1), sum(score_words2) / len(words2))

# def test():
#     text1 = '周杰伦是一个歌手'
#     text2 = '刘若英是个演员'
#     simer = SimHownet()
#     sim = simer.distance(text1, text2)
#     print(sim)
#
# test()
```

```python
# Sentence similarity based on fingerprints (SimHash)
import jieba.posseg as pseg
from simhash import Simhash


class SimHaming:
    def haming_distance(self, code_s1, code_s2):
        '''Hamming distance between two 64-bit fingerprints.'''
        # XOR sets a bit wherever the two fingerprints differ
        x = (code_s1 ^ code_s2) & ((1 << 64) - 1)
        ans = 0
        while x:
            ans += 1
            x &= x - 1  # clear the lowest set bit
        return ans

    def get_similarity(self, a, b):
        '''Ratio of the smaller code to the larger one (not used by distance()).'''
        if a > b:
            return b / a
        else:
            return a / b

    def get_features(self, string):
        '''Segment the text and keep content words as features.'''
        # drop auxiliary words (u), non-words (x), punctuation (w), onomatopoeia (o),
        # prepositions (p), conjunctions (c), numerals (m) and measure words (q)
        word_list = [word.word for word in pseg.cut(string)
                     if word.flag[0] not in ['u', 'x', 'w', 'o', 'p', 'c', 'm', 'q']]
        return word_list

    def get_distance(self, code_s1, code_s2):
        '''Distance between two fingerprints.'''
        return self.haming_distance(code_s1, code_s2)

    def get_code(self, string):
        '''Encode the whole text as a 64-bit SimHash fingerprint.'''
        return Simhash(self.get_features(string)).value

    def distance(self, s1, s2):
        '''Similarity of s1 and s2: 1 - (number of differing bits) / 64.'''
        code_s1 = self.get_code(s1)
        code_s2 = self.get_code(s2)
        return 1 - self.haming_distance(code_s1, code_s2) / 64

# def test():
#     text1 = '我喜欢你'
#     text2 = '我讨厌你'
#     simer = SimHaming()
#     sim = simer.distance(text1, text2)
#     print(sim)
#
# test()
```

```python
# Sentence similarity based on character vectors
import logging

import gensim
import numpy as np

logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)


class SimTokenVec:
    def __init__(self):
        self.embedding_path = 'model/token_vector.bin'
        # read in word2vec text format (binary=False), despite the .bin extension
        self.model = gensim.models.KeyedVectors.load_word2vec_format(self.embedding_path, binary=False)

    def get_wordvector(self, word):
        '''Return a character's vector, or a zero vector if it is out of vocabulary.'''
        try:
            return self.model[word]
        except KeyError:
            return np.zeros(self.model.vector_size)

    def similarity_cosine(self, word_list1, word_list2):
        '''Cosine similarity of two sentences; a sentence vector is the mean of its character vectors.'''
        vector1 = np.mean([self.get_wordvector(word) for word in word_list1], axis=0)
        vector2 = np.mean([self.get_wordvector(word) for word in word_list2], axis=0)
        norm = np.linalg.norm(vector1) * np.linalg.norm(vector2)
        if norm == 0:
            # every character was out of vocabulary, so the cosine is undefined
            return 0.0
        return float(np.dot(vector1, vector2) / norm)

    def distance(self, text1, text2):
        '''Main entry point: split both sentences into characters, then compare them.'''
        word_list1 = list(text1)
        word_list2 = list(text2)
        if not word_list1 or not word_list2:
            return 0.0
        return self.similarity_cosine(word_list1, word_list2)

# def test():
#     text1 = '我喜欢你'
#     text2 = '我讨厌你'
#     simer = SimTokenVec()
#     sim = simer.distance(text1, text2)
#     print(sim)
#
# test()
```

The code relies on several model files, which it expects under `model/` (`cilin.txt`, `hownet.dat` and `token_vector.bin`). They are available from the original repository linked above; if that link no longer works, they can also be downloaded here:

https://download.csdn.net/download/qq_53962537/87785392
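The HowNet, fingerprint and vector classes above each reduce to a small formula, so they can be checked without downloading any models. The sketch below reimplements those formulas on toy inputs: Jaccard overlap of two sememe sets, an unweighted SimHash with the `1 - d/64` Hamming similarity, and the cosine of averaged vectors. The function names, sememes and vectors are hypothetical, and `simple_simhash` (MD5 per feature, one vote per bit) is my own minimal version; it is not guaranteed to reproduce the `.value` produced by the `simhash` package.

```python
import hashlib

import numpy as np


def jaccard(def1, def2):
    """HowNet-style score: |A & B| / |A | B| over two collections of sememes."""
    a, b = set(def1), set(def2)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def simple_simhash(features, bits=64):
    """Minimal unweighted SimHash: every feature's MD5 hash votes on each bit."""
    counts = [0] * bits
    for feature in features:
        h = int(hashlib.md5(feature.encode("utf-8")).hexdigest(), 16)
        for i in range(bits):
            # +1 if bit i of this feature's hash is set, otherwise -1
            counts[i] += 1 if (h >> i) & 1 else -1
    # the fingerprint keeps bit i when its vote total is positive
    return sum(1 << i for i in range(bits) if counts[i] > 0)


def hamming_similarity(code1, code2, bits=64):
    """SimHaming-style score: 1 - (number of differing bits) / bits."""
    differing = bin((code1 ^ code2) & ((1 << bits) - 1)).count("1")
    return 1 - differing / bits


def mean_vector_cosine(vectors1, vectors2):
    """SimWordVec/SimTokenVec-style score: cosine of the two averaged vectors."""
    v1 = np.mean(np.asarray(vectors1, dtype=float), axis=0)
    v2 = np.mean(np.asarray(vectors2, dtype=float), axis=0)
    denom = np.linalg.norm(v1) * np.linalg.norm(v2)
    return float(np.dot(v1, v2) / denom) if denom else 0.0


# made-up sememes and vectors, for illustration only
print(jaccard(["human|人", "occupation|职位", "sing|唱"],
              ["human|人", "occupation|职位", "perform|表演"]))  # 0.5
print(hamming_similarity(0b1011, 0b0011))  # 0.984375 (one differing bit)
fp = simple_simhash(["中国", "祖国", "热爱"])
print(hamming_similarity(fp, fp))  # 1.0
print(mean_vector_cosine([[1, 0], [0, 1]], [[2, 2]]))  # ≈ 1.0 (same direction)
print(mean_vector_cosine([[1, 0]], [[0, 1]]))  # 0.0 (orthogonal)
```

Because SimHash maps similar feature sets to fingerprints that differ in only a few bits, the fingerprint similarity is cheap to compute but coarse for short sentences, where a single changed word can flip many bits.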
