Deploying Kubernetes 1.27 on CentOS 7.9

1. Basic environment setup

Kubernetes cluster IP plan

| Hostname | IP address | Role |
|---|---|---|
| k8s-master | 192.168.5.57 | master node |
| k8s-node01 | 192.168.5.62 | worker node |

The Pod CIDR, the Service CIDR and the host network must not overlap!

| Setting | Value |
|---|---|
| OS version | CentOS 7.9 |
| Docker version (runtime: containerd) | 20.10.x |
| Pod CIDR | 172.16.0.0/16 |
| Service CIDR | 10.96.0.0/12 |
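Overlapping ranges are one of the most common reasons `kubeadm init` fails, so it helps to check the plan before installing anything. The bash sketch below is not part of the original procedure. `ip_to_int`, `cidr_range` and `cidrs_overlap` are hypothetical helper names. The host network is assumed to be 192.168.5.0/24, because the document only lists individual host IPs.

```bash
#!/usr/bin/env bash
# Sketch: test whether two IPv4 CIDR blocks overlap, using bash arithmetic only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "start end" (inclusive integer range) for a CIDR such as 10.96.0.0/12.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask
  base=$(ip_to_int "$ip")
  # Shifting in 64-bit arithmetic and masking to 32 bits also yields 0 for /0.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local start=$(( base & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Exit status 0 if the two CIDR blocks overlap, 1 otherwise.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

With this guide's values, `cidrs_overlap 172.16.0.0/16 10.96.0.0/12`, `cidrs_overlap 172.16.0.0/16 192.168.5.0/24` and `cidrs_overlap 10.96.0.0/12 192.168.5.0/24` should each return a non-zero status, meaning there is no overlap.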

1.1 修改主机名

k8s-master

# hostnamectl set-hostname k8s-master

k8s-node01

# hostnamectl set-hostname k8s-node01

Press Ctrl+D to log out, then log back in so the shell picks up the new hostname.

1.2 Edit /etc/hosts

On all nodes

# cat << EOF >> /etc/hosts

192.168.5.57 k8s-master

192.168.5.62 k8s-node01

EOF

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.5.57 k8s-master

192.168.5.62 k8s-node01
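Appending with `cat >> /etc/hosts` creates duplicate lines if the step is run twice. Below is a minimal idempotent variant, assuming bash and awk. `host_mapped` and `add_host_entry` are hypothetical helper names. The target file is a parameter, so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# Sketch: add "IP hostname" to a hosts file only if the hostname is not mapped yet.

# Exit 0 if hostname $2 already appears (as an exact field) in hosts file $1.
host_mapped() {
  awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                 END { exit !found }' "$1"
}

# Append "IP name" to file $1 unless the name is already present.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if ! host_mapped "$file" "$name"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage on every node: `add_host_entry /etc/hosts 192.168.5.57 k8s-master`, then the same call for `192.168.5.62 k8s-node01`.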

1.3 Configure the Aliyun yum mirrors

On all nodes

# Download CentOS-Base.repo from the Aliyun mirror into /etc/yum.repos.d/

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# Install the yum-utils, device-mapper-persistent-data and lvm2 packages

yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker CE repository to yum

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Create kubernetes.repo with the following settings

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Remove the lines containing 'mirrors.cloud.aliyuncs.com' and 'mirrors.aliyuncs.com' from CentOS-Base.repo

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

1.4 Install tools

On all nodes

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

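1.5 Disable firewalld, NetworkManager, SELinux and swap

On all nodes

# Disable and stop firewalld

systemctl disable --now firewalld

# Disable and stop NetworkManager

systemctl disable --now NetworkManager

# Put SELinux into permissive mode for the current boot

setenforce 0

# Disable SELinux permanently. Edit /etc/selinux/config directly: /etc/sysconfig/selinux is only a symlink to it, and sed -i would replace the symlink with a plain file

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

# Turn off swap for the current boot

swapoff -a

# Disable swap permanently by commenting out the line that mounts the swap partition (the device name may differ on your system)

vi /etc/fstab

#/dev/mapper/cl-swap swap swap defaults 0 0

1.6 Time synchronization

On all nodes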

# Add the wlnmp repository, then install ntpdate with yum

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

# Set the system time zone to Asia/Shanghai

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# Record the time zone name in /etc/timezone

echo 'Asia/Shanghai' >/etc/timezone

# Synchronize the system clock now

ntpdate time2.aliyun.com

# Add ntpdate to root's crontab so it runs every 5 minutes

echo "*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com" >> /var/spool/cron/root

1.7 Configure resource limits

On all nodes

# Raise the open-file limit for the current shell

ulimit -SHn 65535

# Append the following to the end of /etc/security/limits.conf

cat << EOF >> /etc/security/limits.conf

# Max open file descriptors: soft 65536, hard 131072

* soft nofile 65536

* hard nofile 131072

# Max processes: soft 65535, hard 655350

* soft nproc 65535

* hard nproc 655350

# Max locked memory: unlimited

* soft memlock unlimited

* hard memlock unlimited

EOF

1.8 Update the system (kernel excluded)

On all nodes

yum update -y --exclude='kernel*' && reboot

1.9 Set up passwordless SSH

On k8s-master

ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ""

ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node01

2. Kernel configuration

2.1 Download the kernel packages

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

2.2 Install the kernel

On all nodes

# Install the downloaded kernel-ml packages with yum localinstall

yum localinstall -y kernel-ml*

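2.3 Change the default boot kernel

On all nodes

# Make the first menu entry (index 0, normally the newly installed kernel) the default, then regenerate the grub config

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# Add user_namespace.enable=1 to the default kernel's boot arguments

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Show the default kernel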

grubby --default-kernel
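`grub2-set-default 0` assumes the new kernel is the first menu entry. Here is a small sketch for confirming that before you reboot. `list_grub_entries` is a hypothetical helper. `/etc/grub2.cfg` is the BIOS path; UEFI systems use `/etc/grub2-efi.cfg` instead.

```bash
#!/usr/bin/env bash
# Sketch: print "index : title" for each top-level menuentry in a grub2 config.
list_grub_entries() {
  local cfg=${1:-/etc/grub2.cfg}
  # Split on single quotes: field 1 is "menuentry ", field 2 is the entry title.
  # Indented entries (inside submenus) do not match and are skipped.
  awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}
```

Run `list_grub_entries` and check that line `0 :` names the 4.19.12 elrepo kernel.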

2.4 Install ipvsadm

On all nodes

# Install ipvsadm, ipset, sysstat, conntrack and libseccomp

yum install ipvsadm ipset sysstat conntrack libseccomp -y

# Load the IPVS kernel modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# Edit /etc/modules-load.d/ipvs.conf and list the IPVS-related modules

vim /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

# Load the listed modules now and at every boot (an error from this command can be ignored)

systemctl enable --now systemd-modules-load.service
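Because errors from the command above are ignored, it is worth checking which modules from the list actually loaded. The sketch below is hedged. `missing_modules` is a hypothetical helper that compares the list file with `/proc/modules`. Modules built into the kernel never appear in `/proc/modules`, so they may be reported even though they are available.

```bash
#!/usr/bin/env bash
# Sketch: print module names from a modules-load.d file that are not loaded.
missing_modules() {
  local list=$1 loaded=${2:-/proc/modules}
  local mod
  # Read only the first word of each line; skip blank lines and comments.
  while read -r mod _; do
    [[ -z $mod || $mod == \#* ]] && continue
    # /proc/modules lines start with "<name> <size> ...".
    grep -q "^${mod} " "$loaded" || echo "$mod"
  done < "$list"
}
```

`missing_modules /etc/modules-load.d/ipvs.conf` prints nothing when every listed module is loaded.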

2.5 Configure kernel parameters for Kubernetes

On all nodes

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

net.ipv4.conf.all.route_localnet = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

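EOF

# Load the new sysctl settings

sysctl --system

# Reboot the machine

reboot

# After the reboot, check that the IPVS and conntrack modules are loaded

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

# Check that the running kernel is 4.19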

uname -a
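The kernel version check above relies on reading `uname -a` by eye, but it can also be scripted. Below is a minimal sketch, assuming GNU `sort -V` for version ordering. `version_ge` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: verify that the running kernel is at least 4.19.

# Exit 0 if version $1 >= version $2 (GNU sort -V orders version strings).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

kernel=$(uname -r)
# Drop the release suffix (e.g. "-1.el7.elrepo.x86_64") before comparing.
if version_ge "${kernel%%-*}" 4.19; then
  echo "kernel ${kernel} OK"
else
  echo "kernel ${kernel} is older than 4.19; check the default grub entry" >&2
fi
```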

3. Install the Kubernetes components and the container runtime

3.1 Install Docker (containerd)

On all nodes

yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

# Write the following into /etc/modules-load.d/containerd.conf

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

# Load the modules

modprobe -- overlay

modprobe -- br_netfilter

# Write the following into /etc/sysctl.d/99-kubernetes-cri.conf

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the sysctl settings

sysctl --system

# Create the /etc/containerd directory

mkdir -p /etc/containerd

# Write containerd's default configuration to /etc/containerd/config.toml and echo it to the terminal

containerd config default | tee /etc/containerd/config.toml

# Edit /etc/containerd/config.toml: set the runc cgroup driver to systemd (SystemdCgroup) and point sandbox_image at the Aliyun mirror. Only the lines marked "# changed" are edited; the other keys are shown for context. Leave systemd_cgroup = false, which is a different, deprecated setting

vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

  BinaryName = ""

  CriuImagePath = ""

  CriuPath = ""

  CriuWorkPath = ""

  IoGid = 0

  IoUid = 0

  NoNewKeyring = false

  NoPivotRoot = false

  Root = ""

  ShimCgroup = ""

  SystemdCgroup = true # changed

[plugins."io.containerd.grpc.v1.cri"]

  device_ownership_from_security_context = false

  disable_apparmor = false

  disable_cgroup = false

  disable_hugetlb_controller = true

  disable_proc_mount = false

  disable_tcp_service = true

  enable_selinux = false

  enable_tls_streaming = false

  enable_unprivileged_icmp = false

  enable_unprivileged_ports = false

  ignore_image_defined_volumes = false

  max_concurrent_downloads = 3

  max_container_log_line_size = 16384

  netns_mounts_under_state_dir = false

  restrict_oom_score_adj = false

  sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6" # changed

  selinux_category_range = 1024

  stats_collect_period = 10

  stream_idle_timeout = "4h0m0s"

  stream_server_address = "127.0.0.1"

  stream_server_port = "0"

  systemd_cgroup = false

  tolerate_missing_hugetlb_controller = true

  unset_seccomp_profile = ""

# Start containerd and enable it at boot:

systemctl daemon-reload

systemctl enable --now containerd

# Configure the runtime endpoint that the crictl client connects to:

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

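EOF

3.2 Install the Kubernetes components

On all nodes

# List the available kubeadm versions

yum list kubeadm.x86_64 --showduplicates | sort -r

# Install Kubernetes 1.27

yum install kubeadm-1.27* kubelet-1.27* kubectl-1.27* -y

# Enable kubelet at boot. Until the cluster is initialized there is no kubelet config file, so kubelet errors at this point are expected

systemctl daemon-reload

systemctl enable --now kubelet

4. Cluster initialization

4.1 Initialize the control plane

On k8s-master

# Export the default configuration

kubeadm config print init-defaults > kubeadm.yaml

# Edit the file as follows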

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.5.57 # change to this host's IP

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///var/run/containerd/containerd.sock

  imagePullPolicy: IfNotPresent

  name: k8s-master # change to this node's name

  taints: null

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # use the Aliyun image mirror

kind: ClusterConfiguration

kubernetesVersion: 1.27.4 # must match the output of kubeadm version

networking:

  dnsDomain: cluster.local

  podSubnet: 172.16.0.0/16 # Pod CIDR

  serviceSubnet: 10.96.0.0/12 # Service CIDR

scheduler: {}
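The edits above can also be applied non-interactively to a freshly generated `kubeadm.yaml`. This sketch assumes GNU sed and the layout that `kubeadm config print init-defaults` produces for 1.27: an `advertiseAddress` line, `name: node`, a `serviceSubnet` line and no `podSubnet`. `patch_kubeadm_config` is a hypothetical helper, so diff its result against the listing above before using it.

```bash
#!/usr/bin/env bash
# Sketch: apply this guide's kubeadm.yaml edits with sed instead of vim.
# Assumes GNU sed (\s and \n in the replacement) and the stock init-defaults layout.
patch_kubeadm_config() {
  local file=$1 ip=$2 node=$3 version=$4 pod_cidr=$5
  sed -i \
    -e "s#^\(\s*advertiseAddress:\).*#\1 ${ip}#" \
    -e "s#^\(\s*name:\) node\$#\1 ${node}#" \
    -e "s#^\(\s*imageRepository:\).*#\1 registry.cn-hangzhou.aliyuncs.com/google_containers#" \
    -e "s#^\(\s*kubernetesVersion:\).*#\1 ${version}#" \
    -e "s#^\(\s*\)serviceSubnet:.*#&\n\1podSubnet: ${pod_cidr}#" \
    "$file"
}
```

Usage with this guide's values: `patch_kubeadm_config kubeadm.yaml 192.168.5.57 k8s-master 1.27.4 172.16.0.0/16`.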

# Pull the images

kubeadm config images pull --config kubeadm.yaml

# Initialize the control plane

kubeadm init --config kubeadm.yaml --upload-certs

# Set up the kubeconfig file for kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

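4.2 If initialization fails

# Reset the node before trying again

kubeadm reset -f ; ipvsadm --clear ; rm -rf ~/.kube

# Check the logs

tail -f /var/log/messages | grep -v "not found"

Common causes of failure:

The containerd configuration file was edited incorrectly.

A configuration problem: the three network ranges (Pod, Service, host) overlap, causing IP address conflicts.

5. Join the worker node

On k8s-node01

# kubeadm init prints this join command, including the token, when it completes

kubeadm join 192.168.5.57:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0a8921e4a84cdc52d17928432b0e38dae99fba3750a791e2e5e05ff00354c830

If the token has expired (the default TTL is 24h), generate a new join command on k8s-master: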

kubeadm token create --print-join-command

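# Only needed when joining an additional control-plane node: re-upload the control-plane certificates and print a new certificate key

kubeadm init phase upload-certs --upload-certs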

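6. Install Calico

On k8s-master

Download the release archive (v3.26.1, the latest at the time of writing):

https://github.com/projectcalico/calico/releases/tag/v3.26.1

# Extract the archive

tar xf release-v3.26.1.tgz && mv release-v3.26.1 Calico-v3.26.1

# Import the images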

cd /root/Calico-v3.26.1/images

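# Import into the k8s.io namespace, which is the one the Kubernetes CRI uses; images imported without -n k8s.io are invisible to kubelet. Repeat the import on k8s-node01, otherwise that node has to pull the images from the registry

ctr -n k8s.io image import calico-cni.tar

ctr -n k8s.io image import calico-dikastes.tar

ctr -n k8s.io image import calico-flannel-migration-controller.tar

ctr -n k8s.io image import calico-kube-controllers.tar

ctr -n k8s.io image import calico-node.tar

ctr -n k8s.io image import calico-pod2daemon.tar

ctr -n k8s.io image import calico-typha.tar

# Deploy tigera-operator.yaml and custom-resources.yaml

cd /root/Calico-v3.26.1/manifests

kubectl create -f tigera-operator.yaml

# Look up the Pod CIDR configured for the controller manager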

cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'
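Instead of editing `custom-resources.yaml` in vim, you can substitute the CIDR found by the command above with sed. This sketch assumes GNU sed. `pod_cidr_from_manifest` and `set_calico_cidr` are hypothetical helpers. Every `cidr:` line in the file is rewritten; the stock file has only one.

```bash
#!/usr/bin/env bash
# Sketch: copy the cluster Pod CIDR into Calico's custom-resources.yaml.

# Print the value of --cluster-cidr= from the controller-manager manifest.
pod_cidr_from_manifest() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-controller-manager.yaml}
  sed -n 's/.*--cluster-cidr=\([^[:space:]]*\).*/\1/p' "$manifest"
}

# Replace the value of every "cidr:" key in the given file, keeping indentation.
set_calico_cidr() {
  local resources=$1 cidr=$2
  sed -i "s#^\(\s*cidr:\).*#\1 ${cidr}#" "$resources"
}
```

Usage from the manifests directory: `set_calico_cidr custom-resources.yaml "$(pod_cidr_from_manifest)"`.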

# Set the Pod CIDR in custom-resources.yaml

vim custom-resources.yaml

apiVersion: operator.tigera.io/v1

kind: Installation

metadata:

  name: default

spec:

  # Configures Calico networking.

  calicoNetwork:

    # Note: The ipPools section cannot be modified post-install.

    ipPools:

    - blockSize: 26

      cidr: 172.16.0.0/16 # changed

      encapsulation: VXLANCrossSubnet

      natOutgoing: Enabled

      nodeSelector: all()

---

apiVersion: operator.tigera.io/v1

kind: APIServer

metadata:

  name: default

spec: {}

# Create the Calico resources

kubectl create -f custom-resources.yaml

Wait for the deployment to finish

# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 15h v1.27.4

k8s-node01 Ready <none> 15h v1.27.4

Wait until all Pods are Running

# kubectl get pod -A -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-apiserver calico-apiserver-59bfd5d6fd-2bmks 1/1 Running 0 58m 172.16.85.194 k8s-node01 <none> <none>

calico-apiserver calico-apiserver-59bfd5d6fd-wkklj 1/1 Running 0 58m 172.16.235.197 k8s-master <none> <none>

calico-system calico-kube-controllers-668f76f65-cwddb 1/1 Running 0 61m 172.16.235.193 k8s-master <none> <none>

calico-system calico-node-cdrw8 1/1 Running 0 61m 192.168.5.62 k8s-node01 <none> <none>

calico-system calico-node-hkqvz 1/1 Running 0 61m 192.168.5.57 k8s-master <none> <none>

calico-system calico-typha-667dd89665-5jffc 1/1 Running 0 61m 192.168.5.62 k8s-node01 <none> <none>

calico-system csi-node-driver-grwtd 2/2 Running 0 61m 172.16.235.194 k8s-master <none> <none>

calico-system csi-node-driver-hr4zq 2/2 Running 0 61m 172.16.85.193 k8s-node01 <none> <none>

kube-system coredns-65dcc469f7-8mktj 1/1 Running 0 16h 172.16.235.195 k8s-master <none> <none>

kube-system coredns-65dcc469f7-xbbgp 1/1 Running 0 16h 172.16.235.196 k8s-master <none> <none>

kube-system etcd-k8s-master 1/1 Running 0 16h 192.168.5.57 k8s-master <none> <none>

kube-system kube-apiserver-k8s-master 1/1 Running 0 16h 192.168.5.57 k8s-master <none> <none>

kube-system kube-controller-manager-k8s-master 1/1 Running 0 16h 192.168.5.57 k8s-master <none> <none>

kube-system kube-proxy-9dqh9 1/1 Running 0 16h 192.168.5.62 k8s-node01 <none> <none>

kube-system kube-proxy-l6rc7 1/1 Running 0 16h 192.168.5.57 k8s-master <none> <none>

kube-system kube-scheduler-k8s-master 1/1 Running 0 16h 192.168.5.57 k8s-master <none> <none>

tigera-operator tigera-operator-5f4668786-g8jpk 1/1 Running 1 (64m ago) 67m 192.168.5.62 k8s-node01 <none> <none>
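Instead of scanning the listing by eye, you can filter out the Pods that still need attention. Below is a minimal sketch. `not_ready_pods` is a hypothetical awk filter over `kubectl get pod -A --no-headers`. It flags any Pod whose STATUS is not Running or whose READY count is incomplete, so Pods of completed Jobs would be listed as well.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get pod -A --no-headers` on stdin and print
# namespace/name of every Pod that is not Running with all containers ready.
not_ready_pods() {
  # Columns: $1 NAMESPACE, $2 NAME, $3 READY (e.g. 1/2), $4 STATUS.
  awk '{ split($3, r, "/"); if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 }'
}
```

`kubectl get pod -A --no-headers | not_ready_pods` prints nothing once every Pod is up.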

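7. Deploy metrics-server

In recent Kubernetes versions, resource metrics are collected by metrics-server, which reports CPU and memory usage for nodes and Pods (this is what kubectl top reads).

On k8s-master

# Download the manifest (pinned to v0.6.3 so that it matches the image below)

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.3/components.yaml

# Replace the image with a copy on a domestic mirror so that it can be pulled

vim components.yaml

image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.3

# Deploy metrics-server; its Pod becomes Ready once the kubelet serving certificates below are approved

kubectl apply -f components.yaml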

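Enable kubelet serving certificates (on all nodes) so that metrics-server can verify each kubelet's TLS certificate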

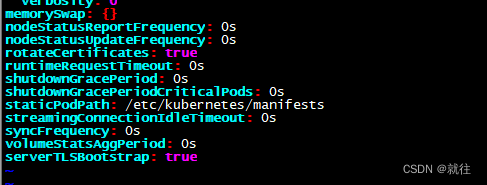

vim /var/lib/kubelet/config.yaml

# Append at the end of the file

serverTLSBootstrap: true

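# Restart kubelet

systemctl restart kubelet

# Approve the certificate signing requests

kubectl get csr # approve every Pending CSR listed here (the names below are examples)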

kubectl certificate approve csr-nkn79

kubectl certificate approve csr-tk7tz
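With several nodes, you can approve the pending kubelet serving CSRs in one pass. This sketch is hedged. `pending_serving_csrs` is a hypothetical filter that assumes the default `kubectl get csr` columns (NAME, AGE, SIGNERNAME, REQUESTOR, REQUESTEDDURATION, CONDITION) and selects only `kubernetes.io/kubelet-serving` requests. Check the REQUESTOR column first, because approving blindly also approves requests you did not expect.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get csr --no-headers` on stdin and print the names of
# kubelet serving CSRs that are still Pending.
pending_serving_csrs() {
  # $3 is SIGNERNAME; the last field is CONDITION (e.g. Pending, Approved,Issued).
  awk '$3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr --no-headers | pending_serving_csrs | xargs -r kubectl certificate approve`.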

8. Deploy the Dashboard

On k8s-master

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

kubectl apply -f recommended.yaml

# Change the Service type to NodePort

kubectl edit svc -n kubernetes-dashboard kubernetes-dashboard

apiVersion: v1

kind: Service

metadata:

  creationTimestamp: "2023-07-25T03:26:48Z"

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

  resourceVersion: "95886"

  uid: 72e702f8-c19c-4bdc-b8ff-251deb1fa419

spec:

  clusterIP: 10.106.23.99

  clusterIPs:

  - 10.106.23.99

  externalTrafficPolicy: Cluster

  internalTrafficPolicy: Cluster

  ipFamilies:

  - IPv4

  ipFamilyPolicy: SingleStack

  ports:

  - nodePort: 31061

    port: 443

    protocol: TCP

    targetPort: 8443

  selector:

    k8s-app: kubernetes-dashboard

  sessionAffinity: None

  type: NodePort # changed

status:

  loadBalancer: {}

# Look up the NodePort for browser access

kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.102.249.83 <none> 8000/TCP 82m

kubernetes-dashboard NodePort 10.106.23.99 <none> 443:31061/TCP 82m

Log in from a browser:

https://192.168.5.57:31061/#/login

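Grant permissions (this binds the cluster-admin role to the dashboard's service account, giving it full control of the cluster)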

kubectl create clusterrolebinding dashboardcrbinding --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard

Get a login token

kubectl create token -n kubernetes-dashboard kubernetes-dashboard

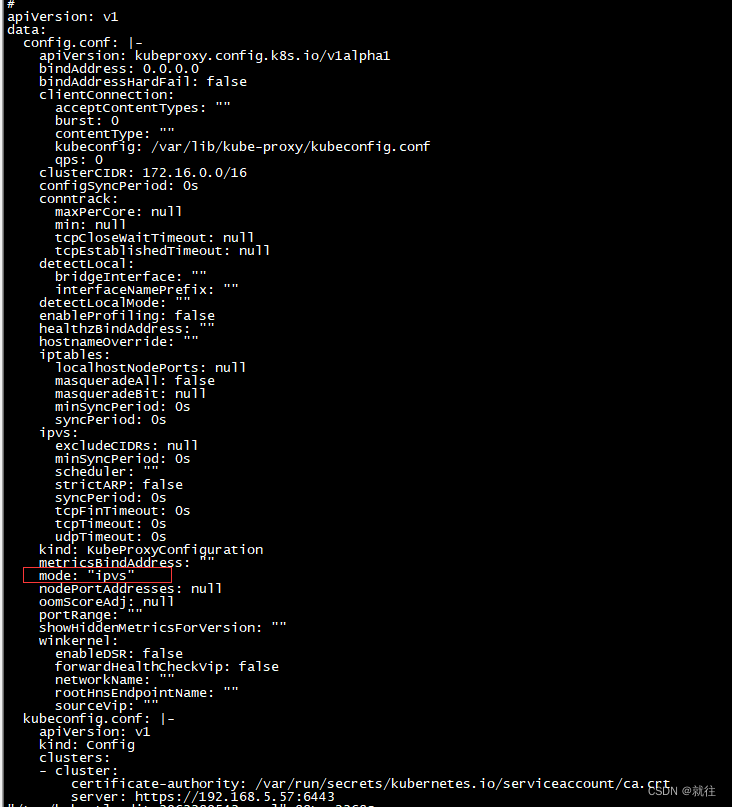

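9. Switch kube-proxy to IPVS mode

Edit the kube-proxy ConfigMap and set mode: "ipvs" (kubeadm leaves it as "", which means iptables)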

kubectl edit cm kube-proxy -n kube-system

Restart the kube-proxy Pods by adding a timestamp annotation to the DaemonSet's Pod template

kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

Verify (the response should be ipvs)

curl 127.0.0.1:10249/proxyMode
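The interactive `kubectl edit` step can also be scripted. This sketch assumes GNU sed. `set_ipvs_mode` is a hypothetical filter that rewrites the `mode:` line of the kube-proxy configuration read on stdin. It leaves other keys, such as `detectLocalMode`, untouched.

```bash
#!/usr/bin/env bash
# Sketch: set kube-proxy's proxy mode to "ipvs" in YAML read on stdin.
set_ipvs_mode() {
  # Matches only a key named exactly "mode:" (any indentation), not detectLocalMode.
  sed 's/^\(\s*mode:\) .*/\1 "ipvs"/'
}
```

Usage: `kubectl get cm kube-proxy -n kube-system -o yaml | set_ipvs_mode | kubectl replace -f -`. After that, run the DaemonSet patch above so the Pods restart with the new mode.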
