Attack Reproduction


1. Object Detection

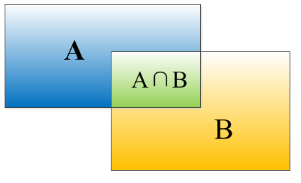

Intersection over Union (IoU)

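Object detection ultimately localizes each object with a bounding box, the box drawn around the target. The standard metric for how precisely a bounding box is localized is IoU.

IoU is the area of A ∩ B divided by the area of A ∪ B, where A is the predicted box and B is the ground-truth box: IoU = area(A ∩ B) / area(A ∪ B). It measures how far our prediction deviates from the ground truth (the manual annotation): 1 means a perfect match and 0 means no overlap at all.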
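A minimal Python sketch of this formula, assuming boxes are given as corner coordinates (x1, y1, x2, y2). The name `iou_xyxy` is hypothetical; the post's own code later calls a `bbox_iou` helper that is not shown.

```python
def iou_xyxy(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    # Corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at 0 so that disjoint boxes get zero intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou_xyxy((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / 7 ≈ 0.142857
```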

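Positive and negative samples:

For example, if we want to detect the letter A, the samples that are A are positive samples and the samples that are not A are negative samples. We must not only recognize the positive samples but also correctly identify the negative ones.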

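TP (True Positives): classified as positive, and correctly so

TN (True Negatives): classified as negative, and correctly so

FP (False Positives): classified as positive, but wrongly (in detection: a predicted box that matches no real object)

FN (False Negatives): classified as negative, but wrongly (in detection: a real object that no predicted box found)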

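How do we decide whether a detection is correct? If its IoU with the ground-truth box exceeds 0.5 it counts as correct; otherwise it is wrong (0.5 is the commonly used threshold).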

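Precision and recall are computed from these counts: Precision = TP / (TP + FP) is the fraction of detections that are correct, and Recall = TP / (TP + FN) is the fraction of ground-truth objects that are found.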
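The two formulas in a few lines of Python (`precision_recall` is a hypothetical helper; returning 0.0 when a denominator is zero is a convention chosen here, not something the post specifies):

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


# e.g. 8 correct detections, 2 false alarms, 4 missed objects
p, r = precision_recall(tp=8, fp=2, fn=4)
print(p, r)  # 0.8 and roughly 0.667
```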

Plotting the PR curve

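First, suppose we have many images in which the cats are annotated; in other words, we have many annotated boxes, the GT (ground truth) boxes.

Each annotated box is given its own ID. Each predicted box output by the network then records the ID of its corresponding GT box (i.e., which object the box is predicting) and its confidence score (Confidence). In addition, if the IoU between a predicted box and its GT box is > 0.5, the prediction is marked True.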
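The procedure above can be sketched in Python. This is a minimal sketch under assumptions: `pr_curve` is a hypothetical name, each prediction is reduced to a (confidence, is_true) pair where is_true already encodes the IoU > 0.5 match with its GT box, and each GT box is matched by at most one True prediction (duplicate detections of the same object count as False, as in the usual evaluation protocols).

```python
def pr_curve(predictions, num_gt):
    """Precision/recall points from (confidence, is_true) pairs.

    predictions: one (confidence, is_true) tuple per predicted box.
    num_gt: total number of ground-truth boxes.
    Returns a list of (recall, precision) points, one per prediction.
    """
    # Rank predictions from most to least confident
    ranked = sorted(predictions, key=lambda p: p[0], reverse=True)
    tp = fp = 0
    points = []
    for _, is_true in ranked:
        if is_true:
            tp += 1
        else:
            fp += 1
        # Each prefix of the ranking (i.e. each confidence threshold) gives one point
        points.append((tp / num_gt, tp / (tp + fp)))
    return points


preds = [(0.9, True), (0.8, False), (0.7, True), (0.6, True)]
for recall, precision in pr_curve(preds, num_gt=4):
    print(f"recall={recall:.2f} precision={precision:.2f}")
```

Plotting precision against recall (for example with matplotlib, which is in the package list) gives the PR curve; AP summarizes the area under it.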


FPS (Frames Per Second)

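FPS is the frame rate, i.e. the number of frames processed per second. Besides accuracy, the other important metric for an object detector is speed: only a fast detector can run in real time. FPS measures detection speed as the number of images processed per second, and FPS figures are only comparable when they are measured on the same hardware. Speed can also be reported as the time needed to process one image; the shorter the time, the faster the detector.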
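A minimal timing sketch under stated assumptions: `measure_fps` is a hypothetical helper, and `detect` stands for any callable that processes one image (for example a wrapper around do_detect). A few warm-up calls are excluded because the first calls often pay one-off setup costs. If the model runs on a GPU, CUDA kernels execute asynchronously, so a real benchmark should also call `torch.cuda.synchronize()` before reading the clock.

```python
import time


def measure_fps(detect, images, warmup=2):
    """Return (frames per second, seconds per image) for `detect` over `images`."""
    # Warm-up calls are not timed
    for img in images[:warmup]:
        detect(img)
    start = time.perf_counter()
    for img in images:
        detect(img)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed, elapsed / len(images)


# Dummy "detector" that just sleeps 10 ms per image
fps, sec_per_img = measure_fps(lambda img: time.sleep(0.01), list(range(20)))
print(f"{fps:.1f} FPS, {sec_per_img * 1000:.1f} ms/image")
```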

3. Code Reproduction

1. Required packages

matplotlib==3.5.2
mmcv==2.0.0rc4
mmdet==3.0.0
numpy==1.24.3
opencv_python==4.7.0.72
Pillow==9.5.0
scikit_image==0.19.3
scipy==1.6.0
torch==1.12.1
torchvision==0.13.1
tqdm==4.64.1
opencv_contrib_python==4.7.0.72

2. Constant definitions

device = "cpu"
device1 = "cpu"

3. Hyperparameter settings

line_interval = 40
box_scale = 1.0
# Directory where the output images are saved
save_image_dir = r"D:WP\images_p_yolo_momentum_lineinterval_{}".format(line_interval)

4. Detection model setup

yolov4_helper = YoLov4Helper()
model_helpers = [yolov4_helper]

5. Initialize the count of successfully attacked images

success_count = 0
# os.makedirs works on Windows as well; "mkdir -p" is not understood by cmd.exe
os.makedirs(save_image_dir, exist_ok=True)

6. Run the attack on every image in images1

import os

# Loop over all images
for i, img_path in enumerate(os.listdir("images1")):
    # If the image already exists in the save folder, it was attacked successfully before: count it and skip it
    # If "<name>_fail.<ext>" exists in the save folder, the attack failed before: skip it without counting
    img_path_ps = os.listdir(save_image_dir)
    if img_path in img_path_ps:
        success_count += 1
        continue
    if img_path.replace(".", "_fail.") in img_path_ps:
        continue
    print("img_path", img_path)
    img_path = os.path.join("images1", img_path)
    # Create the mask
    # Mask over the objects detected by YOLO
    mask = create_yolo_object_mask(yolov4_helper.darknet_model, img_path)
    # mask = create_strip_mask()
    # mask = create_center_mask()
    # Attack the image within the mask and check whether the attack succeeded
    success_attack = specific_attack(model_helpers, img_path, mask)
    if success_attack:
        success_count += 1
    # i is 0-based, so i + 1 images have been processed
    print("success: {}/{}".format(success_count, i + 1))
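One detail of the resume logic above: specific_attack always saves failures as `<stem>_fail.png`, so `img_path.replace(".", "_fail.")` only finds them for `.png` inputs (a failed `x.jpg` is saved as `x_fail.png` but looked up as `x_fail.jpg`). A small helper keyed on the file stem avoids this; `attack_status` is a hypothetical name, not part of the original code.

```python
import os


def attack_status(file_name, saved_files):
    """Return "success", "fail" or None for one input image.

    Assumes the naming used in specific_attack: a successful result keeps the
    original file name, a failed one is saved as "<stem>_fail.png".
    """
    saved = set(saved_files)
    if file_name in saved:
        return "success"
    stem = os.path.splitext(file_name)[0]
    if stem + "_fail.png" in saved:
        return "fail"
    return None  # not processed yet


print(attack_status("b.jpg", ["a.png", "b_fail.png"]))  # fail
```

In the main loop, `continue` would then be taken whenever the status is not None, incrementing success_count only for "success".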

6.1. Creating the mask

1. Mask over the objects detected by YOLO

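(First use darknet2pytorch to get the main detection-box regions of each image, then draw the adversarial patch inside each box as a grid of lines that splits the box into roughly 5×5 cells.)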

The result is shown in the figure:

[Figure: line-grid mask drawn inside the YOLO detection boxes]

mask = create_yolo_object_mask(yolov4_helper.darknet_model, img_path)

The create_yolo_object_mask function

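import numpy as np
import torch
from PIL import Image
from torchvision import transforms


def create_yolo_object_mask(darknet_model, image_path, shape=(500, 500), size=50):
    # Start from an all-zero mask (H x W x 3)
    mask = torch.zeros(*shape, 3)

    # Load the input image and resize a copy to the 608x608 YOLOv4 input size
    img = Image.open(image_path).convert('RGB')
    resize_small = transforms.Compose([
        transforms.Resize((608, 608)),
    ])
    img1 = resize_small(img)
    h, w = np.array(img).shape[:2]

    # Detect boxes with do_detect (confidence threshold 0.5, NMS threshold 0.4)
    boxes = do_detect(darknet_model, img1, 0.5, 0.4, True)

    # Convert each box from normalized (cx, cy, w, h) to pixel corners (x1, y1, x2, y2)
    grids = []
    for i, box in enumerate(boxes):
        x1 = (box[0] - box[2] / 2.0) * w
        y1 = (box[1] - box[3] / 2.0) * h
        x2 = (box[0] + box[2] / 2.0) * w
        y2 = (box[1] + box[3] / 2.0) * h
        print(x1, y1, x2, y2)
        grids += [[int(x1), int(y1), int(x2), int(y2)]]

    for x1, y1, x2, y2 in grids:
        # Clip to the image (the hard-coded 499 assumes 500x500 inputs)
        x1 = np.clip(x1, 0, 499)
        x2 = np.clip(x2, 0, 499)
        y1 = np.clip(y1, 0, 499)
        y2 = np.clip(y2, 0, 499)
        print("x1, y1, x2, y2", x1, y1, x2, y2)

        # Line-grid mask: up to 4 horizontal and 4 vertical lines per box,
        # spaced a fifth of the box side apart (at least 24 px)
        y_interval = max(24, (y2 - y1) // 5)
        x_interval = max(24, (x2 - x1) // 5)
        for i in range(1, 5):
            if mask.sum() > 4500 * 3:  # stop once more than 4500 pixels (x3 channels) are masked
                break
            if y1 + i * y_interval > y2:
                break
            mask[np.clip(y1 + i * y_interval, 0, 499), x1:x2, :] = 1
        for i in range(1, 5):
            if mask.sum() > 4500 * 3:
                break
            if x1 + i * x_interval > x2:
                break
            mask[y1:y2, np.clip(x1 + i * x_interval, 0, 499), :] = 1

    print("mask sum", mask.sum())
    return mask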
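To see how many lines a given box receives, the spacing logic can be isolated in a small pure-Python sketch. `grid_line_offsets` is a hypothetical name; it mirrors one axis of the loops in create_yolo_object_mask but leaves out the `mask.sum() > 4500*3` pixel budget.

```python
def grid_line_offsets(lo, hi, min_interval=24, max_lines=4):
    """Coordinates of the interior grid lines drawn inside [lo, hi] along one axis."""
    # Spacing: a fifth of the box side, but at least min_interval pixels
    interval = max(min_interval, (hi - lo) // 5)
    positions = []
    for i in range(1, max_lines + 1):
        pos = lo + i * interval
        if pos > hi:  # stop once a line would fall outside the box
            break
        positions.append(pos)
    return positions


print(grid_line_offsets(100, 350))  # [150, 200, 250, 300]
print(grid_line_offsets(100, 160))  # [124, 148]
```

Large boxes get the full 4 lines per axis (a 5×5 grid), while boxes narrower than about 5 × 24 px get fewer, more widely spaced lines.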

Generating the boxes: the do_detect function

boxes = do_detect(darknet_model, img1, 0.5, 0.4, True)

import torch
from PIL import Image


def do_detect(model, img, conf_thresh, nms_thresh, use_cuda=1):
    model.eval()

    # Convert a PIL image into a 1 x 3 x H x W float tensor in [0, 1]
    if isinstance(img, Image.Image):
        width = img.width
        height = img.height
        img = torch.ByteTensor(torch.ByteStorage.from_buffer(img.tobytes()))
        img = img.view(height, width, 3).transpose(0, 1).transpose(0, 2).contiguous()
        img = img.view(1, 3, height, width)
        img = img.float().div(255.0)

    # Raw outputs of the three YOLO heads
    list_boxes = model(img)

    # YOLOv4 anchors (w, h pairs in pixels), which anchors each head uses, and head strides
    anchors = [12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401]
    num_anchors = 9
    anchor_masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    strides = [8, 16, 32]
    anchor_step = len(anchors) // num_anchors

    # Decode the boxes of each head
    boxes = []
    for i in range(3):
        # Anchors of this head, converted to grid units
        masked_anchors = []
        for m in anchor_masks[i]:
            masked_anchors += anchors[m * anchor_step:(m + 1) * anchor_step]
        masked_anchors = [anchor / strides[i] for anchor in masked_anchors]
        # Decode with get_region_boxes1 (80 COCO classes)
        boxes.append(get_region_boxes1(list_boxes[i].data.cpu().numpy(), conf_thresh, 80, masked_anchors, len(anchor_masks[i])))

    # Merge the boxes of the three heads (batch index 0) and apply NMS
    boxes = boxes[0][0] + boxes[1][0] + boxes[2][0]
    boxes = nms(boxes, nms_thresh)

    return boxes
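The anchor bookkeeping in do_detect can be checked in isolation. This pure-Python sketch (`masked_anchors_for_head` is a hypothetical name) reproduces how each of the three heads picks its three (w, h) anchor pairs and divides them by the head's stride, turning pixel sizes into grid-cell units. With the 608×608 input used here, the heads with strides 8, 16 and 32 have 76×76, 38×38 and 19×19 grids.

```python
ANCHORS = [12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401]
ANCHOR_MASKS = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
STRIDES = [8, 16, 32]


def masked_anchors_for_head(head, anchors=ANCHORS, anchor_masks=ANCHOR_MASKS,
                            strides=STRIDES, num_anchors=9):
    """Flat [w0, h0, w1, h1, w2, h2] anchor list of one head, in grid-cell units."""
    anchor_step = len(anchors) // num_anchors  # 2 values (w, h) per anchor
    out = []
    for m in anchor_masks[head]:
        out += anchors[m * anchor_step:(m + 1) * anchor_step]
    return [a / strides[head] for a in out]


print(masked_anchors_for_head(0))  # [1.5, 2.0, 2.375, 4.5, 5.0, 3.5]
```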

list_boxes: the Darknet forward pass

import torch
import torch.nn as nn
import torch.nn.functional as F


# parse_cfg() and self.create_network() come from darknet2pytorch (not shown in this post)
class Darknet(nn.Module):
    def __init__(self, cfgfile):
        super(Darknet, self).__init__()
        self.blocks = parse_cfg(cfgfile)
        self.models = self.create_network(self.blocks)  # merge conv, bn, leaky
        self.loss = self.models[len(self.models) - 1]
        self.width = int(self.blocks[0]['width'])
        self.height = int(self.blocks[0]['height'])
        if self.blocks[(len(self.blocks) - 1)]['type'] == 'region':
            self.anchors = self.loss.anchors
            self.num_anchors = self.loss.num_anchors
            self.anchor_step = self.loss.anchor_step
            self.num_classes = self.loss.num_classes
        self.header = torch.IntTensor([0, 0, 0, 0])
        self.seen = 0

    def forward(self, x):
        ind = -2
        self.loss = None
        outputs = dict()  # output of every layer, keyed by layer index
        out_boxes = []    # raw outputs of the YOLO heads
        for block in self.blocks:
            ind = ind + 1
            if block['type'] == 'net':
                continue
            elif block['type'] in ['convolutional', 'maxpool', 'reorg', 'upsample', 'avgpool', 'softmax', 'connected']:
                x = self.models[ind](x)
                outputs[ind] = x
            elif block['type'] == 'route':
                # Concatenate (along channels) the outputs of the referenced layers
                layers = block['layers'].split(',')
                layers = [int(i) if int(i) > 0 else int(i) + ind for i in layers]
                if len(layers) == 1:
                    x = outputs[layers[0]]
                    outputs[ind] = x
                elif len(layers) == 2:
                    x1 = outputs[layers[0]]
                    x2 = outputs[layers[1]]
                    x = torch.cat((x1, x2), 1)
                    outputs[ind] = x
                elif len(layers) == 4:
                    x1 = outputs[layers[0]]
                    x2 = outputs[layers[1]]
                    x3 = outputs[layers[2]]
                    x4 = outputs[layers[3]]
                    x = torch.cat((x1, x2, x3, x4), 1)
                    outputs[ind] = x
                else:
                    print("route number > 2, is {}".format(len(layers)))
            elif block['type'] == 'shortcut':
                # Residual connection: add the output of layer `from` to the previous layer's output
                from_layer = int(block['from'])
                activation = block['activation']
                from_layer = from_layer if from_layer > 0 else from_layer + ind
                x1 = outputs[from_layer]
                x2 = outputs[ind - 1]
                x = x1 + x2
                if activation == 'leaky':
                    x = F.leaky_relu(x, 0.1, inplace=True)
                elif activation == 'relu':
                    x = F.relu(x, inplace=True)
                outputs[ind] = x
            elif block['type'] == 'region':
                continue  # region (YOLOv2) layers are skipped
            elif block['type'] == 'yolo':
                self.features = x
                boxes = self.models[ind](x)
                out_boxes.append(boxes)
            elif block['type'] == 'cost':
                continue
            else:
                print('unknown type %s' % (block['type']))
        # The same list is returned in training and in evaluation mode
        return out_boxes
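The index arithmetic of `route` blocks is the least obvious part of the forward pass. This pure-Python sketch (`resolve_route_layers` is a hypothetical name) applies the same rule as Darknet.forward: positive values in the cfg's `layers` field are absolute layer indices, zero or negative values are offsets from the current block.

```python
def resolve_route_layers(layers_field, ind):
    """Absolute indices of the layers referenced by a 'route' block at position ind."""
    return [int(i) if int(i) > 0 else int(i) + ind for i in layers_field.split(',')]


print(resolve_route_layers("-1, 61", 85))  # [84, 61]
print(resolve_route_layers("-4", 90))      # [86]
```

With one index the route simply forwards that layer's output; with two or four, the outputs are concatenated along dimension 1 (channels) by `torch.cat`.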

The get_region_boxes1 function

get_region_boxes1(list_boxes[i].data.cpu().numpy(), conf_thresh, 80, masked_anchors, len(anchor_masks[i]))

import numpy as np


# sigmoid() and softmax() are NumPy helpers from the same utils module (not shown in this post)
def get_region_boxes1(output, conf_thresh, num_classes, anchors, num_anchors, only_objectness=1, validation=False):
    anchor_step = len(anchors) // num_anchors
    if len(output.shape) == 3:
        output = np.expand_dims(output, axis=0)
    batch = output.shape[0]
    assert (output.shape[1] == (5 + num_classes) * num_anchors)
    h = output.shape[2]
    w = output.shape[3]
    all_boxes = []
    # (batch, A*(5+C), h, w) -> (5+C, batch*A*h*w): one row per predicted quantity
    output = output.reshape(batch * num_anchors, 5 + num_classes, h * w).transpose((1, 0, 2)).reshape(
        5 + num_classes, batch * num_anchors * h * w)
    # Column (grid_x) and row (grid_y) index of the cell each prediction belongs to
    grid_x = np.expand_dims(np.expand_dims(np.linspace(0, w - 1, w), axis=0).repeat(h, 0), axis=0).repeat(
        batch * num_anchors, axis=0).reshape(batch * num_anchors * h * w)
    grid_y = np.expand_dims(np.expand_dims(np.linspace(0, h - 1, h), axis=0).repeat(w, 0).T, axis=0).repeat(
        batch * num_anchors, axis=0).reshape(batch * num_anchors * h * w)
    # Box centers in grid units: sigmoid offset + cell index
    xs = sigmoid(output[0]) + grid_x
    ys = sigmoid(output[1]) + grid_y
    # Anchor sizes broadcast to every prediction
    anchor_w = np.array(anchors).reshape((num_anchors, anchor_step))[:, 0]
    anchor_h = np.array(anchors).reshape((num_anchors, anchor_step))[:, 1]
    anchor_w = np.expand_dims(np.expand_dims(anchor_w, axis=1).repeat(batch, 1), axis=2) \
        .repeat(h * w, axis=2).transpose(1, 0, 2).reshape(batch * num_anchors * h * w)
    anchor_h = np.expand_dims(np.expand_dims(anchor_h, axis=1).repeat(batch, 1), axis=2) \
        .repeat(h * w, axis=2).transpose(1, 0, 2).reshape(batch * num_anchors * h * w)
    # Box sizes in grid units: exp(t) * anchor
    ws = np.exp(output[2]) * anchor_w
    hs = np.exp(output[3]) * anchor_h
    det_confs = sigmoid(output[4])                                  # objectness
    cls_confs = softmax(output[5:5 + num_classes].transpose(1, 0))  # class probabilities
    cls_max_confs = np.max(cls_confs, 1)
    cls_max_ids = np.argmax(cls_confs, 1)
    sz_hw = h * w
    sz_hwa = sz_hw * num_anchors
    for b in range(batch):
        boxes = []
        for cy in range(h):
            for cx in range(w):
                for i in range(num_anchors):
                    ind = b * sz_hwa + i * sz_hw + cy * w + cx
                    det_conf = det_confs[ind]
                    if only_objectness:
                        conf = det_confs[ind]
                    else:
                        conf = det_confs[ind] * cls_max_confs[ind]
                    if conf > conf_thresh:
                        bcx = xs[ind]
                        bcy = ys[ind]
                        bw = ws[ind]
                        bh = hs[ind]
                        cls_max_conf = cls_max_confs[ind]
                        cls_max_id = cls_max_ids[ind]
                        # Normalize to [0, 1] by the grid size
                        box = [bcx / w, bcy / h, bw / w, bh / h, det_conf, cls_max_conf, cls_max_id]
                        if (not only_objectness) and validation:
                            for c in range(num_classes):
                                tmp_conf = cls_confs[ind][c]
                                if c != cls_max_id and det_confs[ind] * tmp_conf > conf_thresh:
                                    box.append(tmp_conf)
                                    box.append(c)
                        boxes.append(box)
        all_boxes.append(boxes)
    return all_boxes
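For a single anchor in a single cell, the vectorized code above reduces to four scalar formulas. This sketch (`decode_cell` is a hypothetical name) applies them with the standard library; as in get_region_boxes1, anchor sizes are in grid units (pixel anchor / stride) and the results are normalized by the grid size. create_yolo_object_mask then turns such a normalized (cx, cy, w, h) box into pixel corners via x1 = (cx - w/2) × image width, and so on.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(tx, ty, tw, th, cx, cy, anchor_w, anchor_h, grid_w, grid_h):
    """Normalized (center_x, center_y, width, height) for one anchor in cell (cx, cy)."""
    bx = (sigmoid(tx) + cx) / grid_w
    by = (sigmoid(ty) + cy) / grid_h
    bw = math.exp(tw) * anchor_w / grid_w
    bh = math.exp(th) * anchor_h / grid_h
    return bx, by, bw, bh


# Raw outputs of 0 put the center in the middle of cell (3, 5) and keep the anchor size
# (4.4375 x 3.4375 grid cells is the first anchor of the stride-32 head, 19 x 19 grid)
print(decode_cell(0, 0, 0, 0, cx=3, cy=5, anchor_w=4.4375, anchor_h=3.4375, grid_w=19, grid_h=19))
```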

nms (non-maximum suppression)

import torch


def nms(boxes, nms_thresh):
    if len(boxes) == 0:
        return boxes
    # Sort by objectness confidence box[4], highest first (ascending sort of 1 - conf)
    det_confs = torch.zeros(len(boxes))
    for i in range(len(boxes)):
        det_confs[i] = 1 - boxes[i][4]
    _, sortIds = torch.sort(det_confs)
    out_boxes = []
    for i in range(len(boxes)):
        box_i = boxes[sortIds[i]]
        if box_i[4] > 0:
            out_boxes.append(box_i)
            # Suppress (set confidence to 0) lower-ranked boxes that overlap box_i too much
            for j in range(i + 1, len(boxes)):
                box_j = boxes[sortIds[j]]
                if bbox_iou(box_i, box_j, x1y1x2y2=False) > nms_thresh:
                    box_j[4] = 0
    return out_boxes
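The `bbox_iou` helper called above is not shown in the post. Below is a self-contained, pure-Python sketch of the same greedy, class-agnostic NMS on boxes in the (cx, cy, w, h, conf, ...) format produced by get_region_boxes1. `iou_cxcywh` is a hypothetical stand-in for `bbox_iou(..., x1y1x2y2=False)`, and `greedy_nms` is a non-mutating variant: instead of setting suppressed confidences to 0, it filters them out of a list.

```python
def iou_cxcywh(a, b):
    """IoU of two boxes given as (cx, cy, w, h, ...); extra fields are ignored."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, nms_thresh):
    """Keep the most confident box, drop boxes overlapping it by more than nms_thresh, repeat."""
    remaining = sorted(boxes, key=lambda box: box[4], reverse=True)  # box[4] = confidence
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [box for box in remaining if iou_cxcywh(best, box) <= nms_thresh]
    return kept


boxes = [
    [0.50, 0.50, 0.20, 0.20, 0.9],  # box A
    [0.52, 0.50, 0.20, 0.20, 0.8],  # nearly identical to A, so it is suppressed
    [0.10, 0.10, 0.10, 0.10, 0.7],  # far from A, so it is kept
]
print(len(greedy_nms(boxes, 0.4)))  # 2
```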

2. strip_mask

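(Draws horizontal and vertical lines at fixed positions in the image: 18 horizontal lines at rows 140, 155, ..., 395, each spanning columns 125 to 374, and 2 vertical lines at columns 200 and 300, spanning rows 125 to 374.)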

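import torch


def create_strip_mask(shape=(500, 500)):
    mask = torch.zeros(*shape, 3)
    # 18 horizontal lines: rows 140, 155, ..., 395, across columns 125-374
    rows = [15 * i + 125 for i in range(1, 19)]
    for row in rows:
        mask[row, 125:375, :] = 1
    # 2 vertical lines at columns 200 and 300, across rows 125-374
    mask[125:375, 200, :] = 1
    mask[125:375, 300, :] = 1
    return mask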
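A quick pure-Python recount of the pixels create_strip_mask switches on, useful for comparing its size with the 4500-pixel budget used in create_yolo_object_mask. `strip_mask_pixels` is a hypothetical helper that rebuilds the same rows and columns as a set of (row, col) pairs instead of a tensor.

```python
def strip_mask_pixels():
    """Set of (row, col) pixels that create_strip_mask sets to 1."""
    rows = [15 * i + 125 for i in range(1, 19)]  # 140, 155, ..., 395 (18 rows)
    pixels = set()
    for row in rows:
        pixels.update((row, col) for col in range(125, 375))  # mask[row, 125:375]
    for col in (200, 300):
        pixels.update((row, col) for row in range(125, 375))  # mask[125:375, col]
    return pixels


pixels = strip_mask_pixels()
print(len(pixels))  # 4968 = 18*250 + 2*250 - 32 crossing pixels
```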

[Figures: yolo_target_mask, strip_mask]

3. watermask (watermark-shaped mask)

mask = create_watermask_mask(watermask_path=r'D:\桌面\csdn\watermask.jpg')

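import numpy as np
import torch
from PIL import Image
from torchvision import transforms


def create_watermask_mask(watermask_path, shape=(500, 500)):
    # Load the watermark image and resize it to the mask size
    img = Image.open(watermask_path).convert('RGB')
    resize_small = transforms.Compose([
        transforms.Resize(shape),
    ])
    img = resize_small(img)
    # Channel values below 240 (the dark watermark strokes) become 1, the near-white background 0
    mask = np.array(img).astype(np.float32)
    mask = np.where(mask < 240, 1, 0).astype(np.float32)  # float, like the other masks
    mask = torch.from_numpy(mask)
    return mask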

6.2. Attacking the models within the mask and checking whether the attack succeeds

success_attack = specific_attack(model_helpers, img_path, mask)

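import asyncio
import os

import cv2
import torch


def specific_attack(model_helpers, img_path, mask):
    img = cv2.imread(img_path)
    img = torch.from_numpy(img).float()

    # Parameters
    t, max_iterations = 0, 600  # current iteration, maximum number of iterations
    stop_loss = 1e-6            # loss threshold for stopping
    eps = 1                     # step size
    patch_size = 70
    patch_num = 10

    # Initialize the weights w (every value starts at 127)
    w = torch.zeros(img.shape).float() + 127
    # w = torch.zeros(img.shape).float()
    w.requires_grad = True

    # The attack has not succeeded yet
    success_attack = False

    # Fewest objects YOLO has detected so far
    min_object_num = 1000

    # Image that produced min_object_num
    min_img = img
    grads = 0
    loop = asyncio.get_event_loop()
    # Iteration t
    while t < max_iterations:
        ...  # (part of the loop body is missing from the original post)
        if min_object_num > object_nums:
            min_object_num = object_nums
            min_img = patch_img

        # If no object is detected in this round, stop and mark the attack as successful
        if object_nums == 0:
            success_attack = True
            break

        # Log progress
        print("t: {}, attack_loss:{}, object_nums:{}".format(t, attack_loss, object_nums))
        # Backpropagate and update the weights w
        attack_loss.backward()
        # positions = get_patch_positions(w.grad, patch_size, patch_num)
        # mask = create_mask_by_positions(positions, patch_size)
        # optimizer.step()
        # Momentum: accumulate the L1-normalized gradients
        grads = grads + w.grad / (torch.abs(w.grad)).sum()
        # w = w - eps * w.grad.sign()
        w = w - eps * grads.sign()
        w = w.detach()
        w.requires_grad = True

    min_img = min_img.detach().cpu().numpy()
    # print(img_patch)
    # A successful result keeps the original file name; a failed one is saved as "<stem>_fail.png"
    file_name = os.path.basename(img_path)
    if success_attack:
        cv2.imwrite(os.path.join(save_image_dir, file_name), min_img)
    else:
        cv2.imwrite(os.path.join(save_image_dir, "{}_fail.png".format(os.path.splitext(file_name)[0])), min_img)
    return success_attack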
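The update in specific_attack accumulates L1-normalized gradients in `grads` and steps along their sign, which is an MI-FGSM-style momentum update with decay factor 1 (the "momentum" in the output directory name points the same way). The sketch below replays that update rule on a toy quadratic loss in pure Python. The loss, its target values and the helper names are hypothetical; only the two update lines mirror the original code.

```python
def sign(v):
    """-1, 0 or 1, like torch.sign for a single number."""
    return (v > 0) - (v < 0)


def momentum_sign_descent(w, grad_fn, eps=1.0, steps=27):
    """Sign-gradient descent on accumulated L1-normalized gradients (decay factor 1)."""
    grads = [0.0] * len(w)
    for _ in range(steps):
        g = grad_fn(w)
        l1 = sum(abs(x) for x in g)
        if l1 == 0:  # gradient vanished: nothing to normalize
            break
        # grads = grads + w.grad / (torch.abs(w.grad)).sum()
        grads = [acc + x / l1 for acc, x in zip(grads, g)]
        # w = w - eps * grads.sign()
        w = [wi - eps * sign(acc) for wi, acc in zip(w, grads)]
    return w


TARGET = [100.0, 150.0]  # hypothetical optimum of the toy loss


def toy_loss(w):
    return sum((wi - ti) ** 2 for wi, ti in zip(w, TARGET))


def toy_grad(w):
    return [2 * (wi - ti) for wi, ti in zip(w, TARGET)]


w_start = [127.0, 127.0]  # same starting value as w in specific_attack
w_end = momentum_sign_descent(w_start, toy_grad)
print(toy_loss(w_start), toy_loss(w_end), w_end)  # 1258.0 16.0 [100.0, 154.0]
```

The second coordinate overshoots its target by 4: once it passes 150, the accumulated momentum still points the same way for several steps. That persistence is what lets the momentum update move past small local bumps in the attack loss.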

