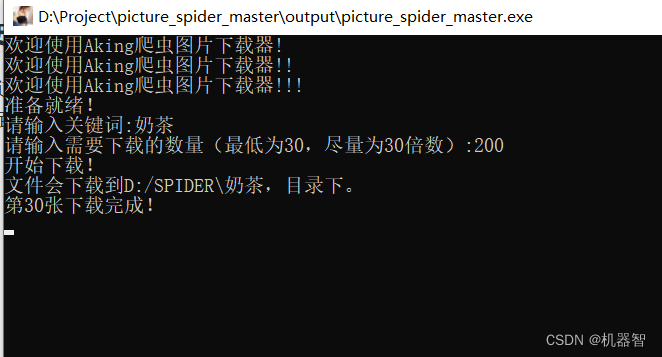

[Web Scraping Practice] Batch-download web images by keyword with Python! Full code and exe included

Tutorial:

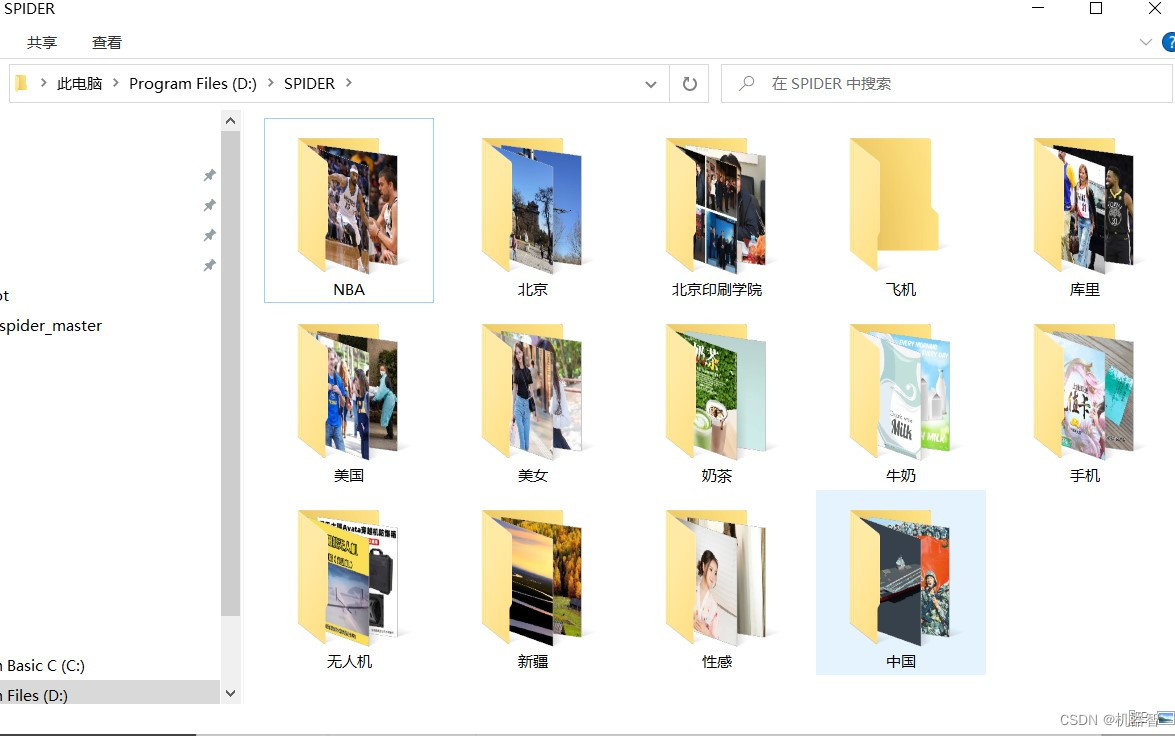

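Enter a short keyword (ideally 2 to 3 Chinese characters, or a single English word) and the script searches Baidu Images and downloads the results to a local directory. The default download path is D:/SPIDER, and you can change it manually. The script only uses the requests library together with urllib, os, and time.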
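As a rough sketch of how the two inputs map to work for the downloader: each request returns 30 results, so the requested count becomes a number of pages, and the keyword becomes a subfolder of the download path. The helper name `plan_download` and the constants below are hypothetical, introduced only for illustration. The script itself rounds down with `int(sum_old / 30)`; this sketch rounds up instead, which is an assumption about what a user asking for, say, 45 images would expect.

```python
import os

DEFAULT_FOLDER = 'D:/SPIDER'  # default download path from the article
PAGE_SIZE = 30                # results per request (rn=30 in the query string)


def plan_download(keyword, count, folder=DEFAULT_FOLDER):
    """Return (pages, target_dir) for a keyword and a requested image count.

    Hypothetical helper: rounds the page count up and never returns
    fewer than one page.
    """
    pages = max(1, -(-count // PAGE_SIZE))      # ceiling division
    target_dir = os.path.join(folder, keyword)  # one subfolder per keyword
    return pages, target_dir


pages, target_dir = plan_download('cat', 45)
print(pages, target_dir)
```

To use a different download location, pass it as `folder`, for example `plan_download('cat', 60, folder='E:/images')`.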

Core code walkthrough:

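    # Import the required libraries
    import os
    import time
    import urllib.parse

    import requests

    keyword = input('Enter a keyword: ')
    folder = 'D:/SPIDER'
    sum_old = int(input('Enter the number of images to download (minimum 30, preferably a multiple of 30): '))
    pages = sum_old // 30  # each request returns 30 images

    # Create the download folder if it does not exist yet
    if not os.path.exists(folder):
        print('Creating folder...')
        os.makedirs(folder)
        print('Folder created!')

    # Check whether the keyword contains Chinese characters
    def is_chinese(string):
        for ch in string:
            if '\u4e00' <= ch <= '\u9fff':
                return True
        return False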
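The Chinese-character check exists so that such keywords can be percent-encoded before they are placed in the query string. Here is a minimal sketch of that step using the standard library's `urllib.parse.quote`; `encode_keyword` is a hypothetical helper name, not part of the original script.

```python
from urllib.parse import quote


def is_chinese(string):
    """Return True if any character is in the CJK range U+4E00..U+9FFF."""
    return any('\u4e00' <= ch <= '\u9fff' for ch in string)


def encode_keyword(keyword):
    """Percent-encode the keyword (as UTF-8) only when it contains Chinese."""
    return quote(keyword) if is_chinese(keyword) else keyword


print(encode_keyword('猫'))   # %E7%8C%AB
print(encode_keyword('cat'))  # cat
```

`quote` leaves plain ASCII letters and digits unchanged, so calling it on every keyword would give the same result for a single English word. The explicit check mainly documents the intent.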

Full code:

    import os
    import time
    import urllib.parse

    import requests

    # Startup banner
    print('Welcome to the Aking spider image downloader!')
    time.sleep(0.5)
    print('Welcome to the Aking spider image downloader!!')
    time.sleep(0.5)
    print('Welcome to the Aking spider image downloader!!!')
    time.sleep(0.5)
    print('Ready!')
    time.sleep(0.5)

    keyword = input('Enter a keyword: ')
    folder = 'D:/SPIDER'
    sum_old = int(input('Enter the number of images to download (minimum 30, preferably a multiple of 30): '))
    pages = sum_old // 30  # each request returns 30 images

    if not os.path.exists(folder):
        print('Creating folder...')
        os.makedirs(folder)
        print('Folder created!')


    def is_chinese(string):
        """Return True if the string contains a Chinese character."""
        for ch in string:
            if '\u4e00' <= ch <= '\u9fff':
                return True
        return False


    class GetImage():
        def __init__(self, keyword='', paginator=1):
            self.url = "http://image.baidu.com/search/acjson?"
            self.headers = {
                'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36'}
            self.keyword = keyword      # search keyword
            self.paginator = paginator  # number of pages to crawl

        def get_param(self):
            # Percent-encode the keyword if it contains Chinese characters
            if is_chinese(self.keyword):
                keyword = urllib.parse.quote(self.keyword)
            else:
                keyword = self.keyword
            params = []
            for i in range(1, self.paginator + 1):
                params.append(
                    'tn=resultjson_com&ipn=rj&ct=201326592&is=&fp=result&queryWord={}&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=-1&z=&ic=&hd=1&latest=0&copyright=0&word={}&s=&se=&tab=&width=&height=&face=0&istype=2&qc=&nc=1&fr=&expermode=&force=&cg=star&pn={}&rn=30&gsm=78&1557125391211='.format(
                        keyword, keyword, 30 * i))
            return params

        def get_urls(self, params):
            # Prepend the endpoint to every query string
            return [self.url + param for param in params]

        def get_image_url(self, urls):
            image_url = []
            for url in urls:
                json_data = requests.get(url, headers=self.headers).json()
                # Skip empty entries and entries without a thumbURL
                for item in json_data.get('data') or []:
                    if item and item.get('thumbURL'):
                        image_url.append(item['thumbURL'])
            return image_url

        def get_image(self, image_url):
            file_name = os.path.join(folder, self.keyword)
            print('Starting download!')
            print('Files will be saved to the directory ' + file_name)
            os.makedirs(file_name, exist_ok=True)
            for index, url in enumerate(image_url, start=1):
                with open(os.path.join(file_name, '{}.jpg'.format(index)), 'wb') as f:
                    f.write(requests.get(url, headers=self.headers).content)
                # Report progress once per page of 30 images
                if index % 30 == 0:
                    print('Image {} downloaded!'.format(index))

        def __call__(self, *args, **kwargs):
            params = self.get_param()
            urls = self.get_urls(params)
            image_url = self.get_image_url(urls)
            self.get_image(image_url)


    if __name__ == '__main__':
        spider = GetImage(keyword, pages)
        spider()
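The query string in `get_param` is assembled by hand, which is why the keyword has to be percent-encoded separately. As an alternative sketch, the standard library's `urllib.parse.urlencode` can build the query from a dict and handle the encoding in one step. The function name `build_page_url` is hypothetical, and the dict below keeps only a subset of the original parameters for readability; whether the endpoint accepts this reduced set has not been verified here.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

BASE_URL = 'http://image.baidu.com/search/acjson?'  # endpoint used by the script


def build_page_url(keyword, page, page_size=30):
    """Build the request URL for one result page (page numbering starts at 1).

    Hypothetical helper; parameter names are copied from the script's
    query string, but only a subset is included.
    """
    params = {
        'tn': 'resultjson_com',
        'ipn': 'rj',
        'queryWord': keyword,
        'word': keyword,
        'ie': 'utf-8',
        'oe': 'utf-8',
        'pn': page_size * page,  # result offset, same as 30 * i in the script
        'rn': page_size,         # results per request
    }
    # urlencode percent-encodes non-ASCII values as UTF-8 automatically
    return BASE_URL + urlencode(params)


url = build_page_url('猫', 2)
query = parse_qs(urlsplit(url).query)
print(url)
print(query['word'], query['pn'])
```

With this approach the `is_chinese` check is no longer needed, since every value goes through the same encoding step regardless of language.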

Windows executable:

Link: https://pan.baidu.com/s/1tAfJc1Moziwb25mNC3r7Jw?pwd=kx9h

Extraction code: kx9h
