PyTorch Study Notes 3 -- The autograd Automatic Differentiation System

This post introduces the two most commonly used functions in PyTorch's automatic differentiation system: torch.autograd.backward and torch.autograd.grad.

torch.autograd.backward(tensors,grad_tensors=None,retain_graph=None,create_graph=False)

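- tensors: the tensors to differentiate, e.g. loss

- retain_graph: keep the computation graph after the backward pass so that it can be traversed again

- create_graph: build a graph of the derivative computation itself, needed for higher-order derivatives

- grad_tensors: weights applied to each element when tensors is not a scalar (e.g. the relative weights of several losses)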

    import torch

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        a = torch.add(w, x)
        b = torch.add(w, 1)
        y = torch.mul(a, b)      # y = (w + x) * (w + 1)

        # retain_graph=True keeps the graph so that backward() can run a second time
        y.backward(retain_graph=True)
        print(w.grad)
        y.backward()

Here the tensor method y.backward() calls torch.autograd.backward() internally.
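The second y.backward() above only works because the first call passed retain_graph=True. As a minimal sketch of what happens otherwise (same w, x, and y as in the example; the variable names first_grad and second_pass_failed are introduced here for illustration), a second backward pass over a graph that was not retained is expected to raise a RuntimeError:

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
y = torch.mul(torch.add(w, x), torch.add(w, 1))  # y = (w + x) * (w + 1)

# First pass without retain_graph: the tensors saved for backward are freed afterwards.
y.backward()
first_grad = w.grad.clone()  # dy/dw = (w + 1) + (w + x) = 2 + 3 = 5

# Second pass over the same graph: autograd refuses because the buffers are gone.
second_pass_failed = False
try:
    y.backward()
except RuntimeError as err:
    second_pass_failed = True
    print("second backward failed:", type(err).__name__)

print(first_grad)
```

If a graph really has to be traversed twice, passing retain_graph=True on every pass except the last keeps memory usage bounded.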

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        a = torch.add(w, x)      # retain_grad()
        b = torch.add(w, 1)

        y0 = torch.mul(a, b)     # y0 = (x + w) * (w + 1)    dy0/dw = 5
        y1 = torch.add(a, b)     # y1 = (x + w) + (w + 1)    dy1/dw = 2

        loss = torch.cat([y0, y1], dim=0)        # [y0, y1]
        # grad_tensors sets the weight of each gradient
        grad_tensors = torch.tensor([1., 2.])

        # gradient is passed on as grad_tensors to torch.autograd.backward()
        loss.backward(gradient=grad_tensors)

        print(w.grad)

Output:

    tensor([9.])
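The result 9 is the weighted sum 1 * dy0/dw + 2 * dy1/dw = 1 * 5 + 2 * 2. The sketch below checks this by comparing the gradient argument against an explicitly weighted scalar loss; the helper grad_w and its flag use_gradient_arg are hypothetical names introduced only for this comparison:

```python
import torch

def grad_w(use_gradient_arg):
    """Return dloss/dw, computed either via gradient= or via an explicit weighted sum."""
    w = torch.tensor([1.], requires_grad=True)
    x = torch.tensor([2.], requires_grad=True)
    a = torch.add(w, x)
    b = torch.add(w, 1)
    y0 = torch.mul(a, b)                    # dy0/dw = 5
    y1 = torch.add(a, b)                    # dy1/dw = 2
    loss = torch.cat([y0, y1], dim=0)       # non-scalar: [y0, y1]
    weights = torch.tensor([1., 2.])
    if use_gradient_arg:
        loss.backward(gradient=weights)     # weights act as the vector in the vector-Jacobian product
    else:
        (loss * weights).sum().backward()   # same thing written as a scalar loss
    return w.grad

g_arg = grad_w(True)
g_sum = grad_w(False)
print(g_arg, g_sum)
```

Both routes should give the same gradient, which is why grad_tensors can be read as per-loss weights.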

torch.autograd.grad(outputs,inputs,grad_outputs=None,retain_graph=None,create_graph=False)

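- Purpose: compute and return gradients

- outputs: the tensors to differentiate, e.g. loss

- inputs: the tensors with respect to which gradients are computed

- create_graph: build a graph of the derivative computation itself, needed for higher-order derivatives

- retain_graph: keep the computation graph after the call

- grad_outputs: weights applied to each element when outputs is not a scalar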

    flag = True
    # flag = False

    if flag:
        x = torch.tensor([3.], requires_grad=True)
        y = torch.pow(x, 2)      # y = x**2

        # grad_1 = dy/dx = 2x = 2 * 3 = 6
        grad_1 = torch.autograd.grad(y, x, create_graph=True)
        print(grad_1)

        # grad_2 = d(dy/dx)/dx = d(2x)/dx = 2
        grad_2 = torch.autograd.grad(grad_1[0], x)
        print(grad_2)

Output:

    (tensor([6.], grad_fn=<...>),)
    (tensor([2.]),)
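torch.autograd.grad returns the gradients as a tuple (one entry per input) and, unlike backward(), does not write them into .grad. A small sketch with a different function, y = x**3 (chosen here only for illustration), shows both points:

```python
import torch

x = torch.tensor([3.], requires_grad=True)
y = torch.pow(x, 3)  # y = x**3

# First derivative: 3 * x**2 = 27. create_graph=True makes the result differentiable.
(dy_dx,) = torch.autograd.grad(y, x, create_graph=True)

# Second derivative: 6 * x = 18.
(d2y_dx2,) = torch.autograd.grad(dy_dx, x)

print(dy_dx.item(), d2y_dx2.item())
print(x.grad)  # nothing was accumulated into the leaf's .grad
```

Without create_graph=True the first result would carry no grad_fn, and the second call would fail.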

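autograd tips:

- 1. Gradients are not zeroed automatically; they accumulate across backward() calls

- 2. Nodes that depend on a leaf node with requires_grad=True have requires_grad=True by default

- 3. Leaf nodes that require grad cannot be modified in place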

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        for i in range(4):
            a = torch.add(w, x)
            b = torch.add(w, 1)
            y = torch.mul(a, b)

            y.backward()
            print(w.grad)

            # zero the gradient; the trailing underscore marks an in-place operation
            w.grad.zero_()

Output:

    tensor([5.])
    tensor([5.])
    tensor([5.])
    tensor([5.])

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        for i in range(4):
            a = torch.add(w, x)
            b = torch.add(w, 1)
            y = torch.mul(a, b)

            y.backward()
            print(w.grad)

            # w.grad.zero_()   # without zeroing, the gradient keeps accumulating

Output:

    tensor([5.])
    tensor([10.])
    tensor([15.])
    tensor([20.])
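In training code the same reset is usually done through the optimizer rather than on each tensor. The following is a sketch only, assuming a torch.optim.SGD optimizer over w and x with an arbitrary learning rate; optimizer.step() is deliberately left out so that w stays fixed and the gradients remain comparable:

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
optimizer = torch.optim.SGD([w, x], lr=0.1)  # lr is an arbitrary illustrative value

grads = []
for _ in range(3):
    optimizer.zero_grad()  # reset gradients left over from the previous pass
    y = torch.mul(torch.add(w, x), torch.add(w, 1))
    y.backward()
    grads.append(w.grad.item())
    # optimizer.step() would normally follow here; it is omitted so that w stays
    # fixed and every pass yields the same gradient.

print(grads)  # [5.0, 5.0, 5.0]
```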

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        a = torch.add(w, x)
        b = torch.add(w, 1)
        y = torch.mul(a, b)

        print(a.requires_grad, b.requires_grad, y.requires_grad)

Output:

    True True True
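The flag propagates only from leaves that themselves require gradients. As a complementary sketch (the tensors c, d, and e are introduced here for illustration), a result that depends only on tensors with requires_grad=False, or that is computed under torch.no_grad(), does not require gradients:

```python
import torch

w = torch.tensor([1.], requires_grad=True)
c = torch.tensor([2.])            # requires_grad defaults to False

a = torch.add(w, c)               # depends on w, so it requires grad
d = torch.mul(c, 3)               # depends only on c, so it does not

with torch.no_grad():
    e = torch.add(w, 1)           # graph recording is disabled in this block

print(a.requires_grad, a.is_leaf)   # True False
print(d.requires_grad, d.is_leaf)   # False True
print(e.requires_grad)              # False
```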

    flag = True
    # flag = False

    if flag:
        a = torch.ones((1, ))
        print(id(a), a)

        # allocates a new tensor at a new address and rebinds the name a to it
        a = a + torch.ones((1, ))
        print(id(a), a)

        # in-place: the address of a is the same before and after
        a += torch.ones((1, ))
        print(id(a), a)

Output:

    2506753264160 tensor([1.])
    2506753184248 tensor([2.])
    2506753184248 tensor([3.])

    flag = True
    # flag = False

    if flag:
        w = torch.tensor([1.], requires_grad=True)
        x = torch.tensor([2.], requires_grad=True)

        a = torch.add(w, x)
        b = torch.add(w, 1)
        y = torch.mul(a, b)

        w.add_(1)    # in-place operation on a leaf that requires grad

        y.backward()

Output:

    Traceback (most recent call last):
      File "", line 23, in
        w.add_(1)
    RuntimeError: a leaf Variable that requires grad has been used in an in-place operation.

Logistic Regression

Logistic regression is a linear model for binary classification.

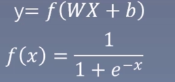

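Model expression:

$$y = f(Wx + b), \qquad f(x) = \frac{1}{1 + e^{-x}}$$

f(x) is called the sigmoid function, also known as the logistic function.

The predicted class is 0 if y < 0.5 and 1 if y ≥ 0.5.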
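The thresholding rule can be sketched in a few lines. This is a minimal illustration, assuming a two-feature input and hand-picked parameters; predict_class, weight, and bias are hypothetical names and values, not part of the original post:

```python
import torch

def predict_class(x, weight, bias):
    """Apply the sigmoid to a linear score and threshold the result at 0.5."""
    z = x @ weight + bias            # linear part: Wx + b
    y = torch.sigmoid(z)             # squashes the score into (0, 1)
    return y, (y >= 0.5).long()      # class 1 if y >= 0.5, else class 0

weight = torch.tensor([1.0, -1.0])   # hypothetical parameters
bias = torch.tensor(0.0)
x = torch.tensor([[2.0, 0.0],        # score  2 -> y > 0.5
                  [0.0, 2.0],        # score -2 -> y < 0.5
                  [1.0, 1.0]])       # score  0 -> y = 0.5, on the boundary

probs, classes = predict_class(x, weight, bias)
print(probs)
print(classes)
```

Because the sigmoid is monotonic, y ≥ 0.5 is equivalent to Wx + b ≥ 0, so the decision boundary is a straight line in the input space.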

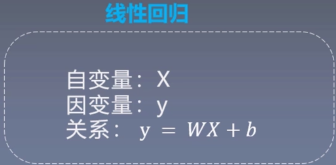

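Linear regression analyzes the relationship between an independent variable x and a dependent variable y (a scalar).

Logistic regression is also known as log-odds regression, since it models the log-odds of the positive class as a linear function of x.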
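The name "log-odds regression" follows from inverting the sigmoid. As a short derivation, assuming the usual form y = 1 / (1 + e^{-(Wx + b)}) with y read as the probability of class 1:

```latex
\begin{aligned}
y &= \frac{1}{1 + e^{-(Wx + b)}}, \qquad
1 - y = \frac{e^{-(Wx + b)}}{1 + e^{-(Wx + b)}} \\[4pt]
\frac{y}{1 - y} &= e^{Wx + b} \\[4pt]
\ln \frac{y}{1 - y} &= Wx + b
\end{aligned}
```

The ratio y / (1 - y) is the odds of class 1, so the linear part Wx + b is exactly the logarithm of the odds.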

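The five steps of training a machine learning model:

- 1. Data: collect, clean, and preprocess the data

- 2. Model: choose a model

- 3. Loss function: choose a loss function

- 4. Optimizer: choose an optimizer

- 5. Iteration: train repeatedly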

    import torch
    import torch.nn as nn
    import matplotlib.pyplot as plt
    import numpy as np

    torch.manual_seed(10)

    # ============================ step 1/5 generate data ============================
    sample_nums = 100
    mean_value = 1.7
    bias = 1
    n_data = torch.ones(sample_nums, 2)
    x0 = torch.normal(mean_value * n_data, 1) + bias      # class 0 data, shape=(100, 2)
    y0 = torch.zeros(sample_nums)                         # class 0 labels, shape=(100,)
    x1 = torch.normal(-mean_value * n_data, 1) + bias     # class 1 data, shape=(100, 2)
    y1 = torch.ones(sample_nums)                          # class 1 labels, shape=(100,)
    train_x = torch.cat((x0, x1), 0)
    train_y = torch.cat((y0, y1), 0)

    # ============================ step 2/5 choose the model ============================
    class LR(nn.Module):
        def __init__(self):
            super(LR, self).__init__()
            self.features = nn.Linear(2, 1)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            x = self.features(x)
            x = self.sigmoid(x)
            return x

    lr_net = LR()   # instantiate the logistic regression model

    # ============================ step 3/5 choose the loss function ============================
    loss_fn = nn.BCELoss()

    # ============================ step 4/5 choose the optimizer ============================
    lr = 0.01   # learning rate
    optimizer = torch.optim.SGD(lr_net.parameters(), lr=lr, momentum=0.9)

    # ============================ step 5/5 train the model ============================
    for iteration in range(1000):

        # forward pass
        y_pred = lr_net(train_x)

        # compute the loss
        loss = loss_fn(y_pred.squeeze(), train_y)

        # backward pass
        loss.backward()

        # update the parameters
        optimizer.step()

        # zero the gradients, since autograd accumulates them across backward() calls
        optimizer.zero_grad()

        # plot
        if iteration % 20 == 0:

            mask = y_pred.ge(0.5).float().squeeze()    # classify with a threshold of 0.5
            correct = (mask == train_y).sum()          # number of correctly predicted samples
            acc = correct.item() / train_y.size(0)     # classification accuracy

            plt.scatter(x0.data.numpy()[:, 0], x0.data.numpy()[:, 1], c='r', label='class 0')
            plt.scatter(x1.data.numpy()[:, 0], x1.data.numpy()[:, 1], c='b', label='class 1')

            # decision boundary: w0 * x + w1 * y + b = 0
            w0, w1 = lr_net.features.weight[0]
            w0, w1 = float(w0.item()), float(w1.item())
            plot_b = float(lr_net.features.bias[0].item())
            plot_x = np.arange(-6, 6, 0.1)
            plot_y = (-w0 * plot_x - plot_b) / w1

            plt.xlim(-5, 7)
            plt.ylim(-7, 7)
            plt.plot(plot_x, plot_y)

            plt.text(-5, 5, 'Loss=%.4f' % loss.data.numpy(), fontdict={'size': 20, 'color': 'red'})
            plt.title("Iteration: {}\nw0:{:.2f} w1:{:.2f} b: {:.2f} accuracy:{:.2%}".format(iteration, w0, w1, plot_b, acc))
            plt.legend()

            plt.show()
            plt.pause(0.5)

            if acc > 0.99:
                break
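Step 3 uses nn.BCELoss, which expects probabilities (the sigmoid outputs) and float targets of the same shape; this is why the training loop calls y_pred.squeeze(). As a sanity check of what the loss computes, the sketch below compares it with the binary cross-entropy formula written out by hand; the sample values in y_pred and target are hypothetical:

```python
import torch
import torch.nn as nn

y_pred = torch.tensor([0.9, 0.2, 0.6])   # hypothetical sigmoid outputs
target = torch.tensor([1.0, 0.0, 1.0])   # hypothetical labels

# Binary cross-entropy by hand: -mean(t * log(p) + (1 - t) * log(1 - p))
manual = -(target * torch.log(y_pred)
           + (1 - target) * torch.log(1 - y_pred)).mean()

builtin = nn.BCELoss()(y_pred, target)   # default reduction is 'mean'

print(manual.item(), builtin.item())
```

The two values should agree up to floating-point error as long as the predictions stay away from exactly 0 and 1.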
