Downloading the CUB-200-2011 bird dataset and loading it with PyTorch

Update, 2021/2/5

Strongly recommended approach: https://github.com/wvinzh/WS_DAN_PyTorch

Update, 2020/7/19

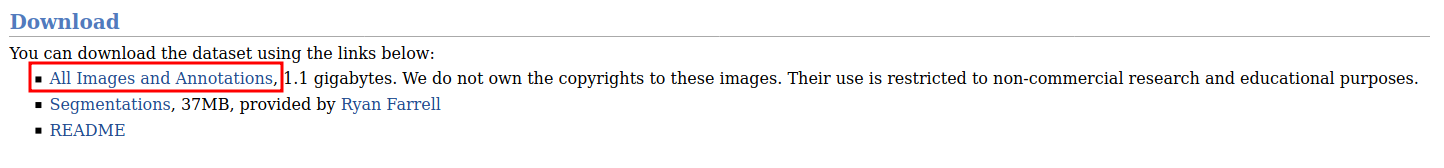

Downloading CUB-200-2011:

https://www.vision.caltech.edu/datasets/cub_200_2011/

The website is sometimes unreachable; in that case you can download the dataset from Baidu Cloud instead.

Baidu Cloud link: https://pan.baidu.com/s/1o60hA0qrupDjtMGPVCke3A (password: u0sr)

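If you only need classification, downloading the first archive is enough: besides the class labels it also contains bounding-box annotations. If you also want segmentation, download Segmentations as well.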

I only downloaded the archive in the red box:

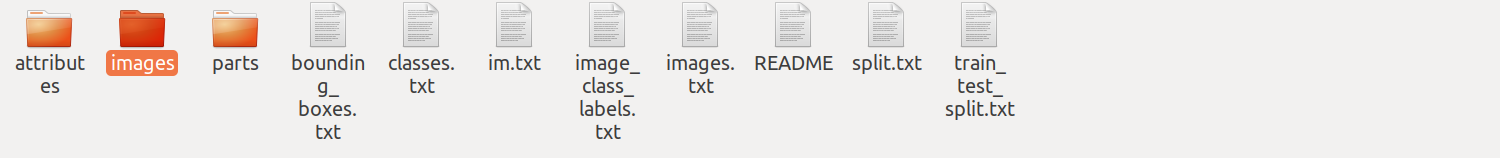
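The bounding boxes mentioned above live in `bounding_boxes.txt` in the extracted `CUB_200_2011` folder; according to the dataset's README, each line is `<image_id> <x> <y> <width> <height>` in pixels. Below is a minimal sketch for reading them. The helpers `load_bboxes` and `bbox_to_corners` are hypothetical names, not part of any library. The second helper converts a box to the `(left, top, right, bottom)` tuple that, for example, PIL's `Image.crop` expects.

```python
import os


def load_bboxes(root):
    """Parse <root>/bounding_boxes.txt into {image_id: (x, y, width, height)}.

    Assumes each line is "<image_id> <x> <y> <width> <height>" with float pixel values.
    """
    boxes = {}
    with open(os.path.join(root, 'bounding_boxes.txt')) as f:
        for line in f:
            parts = line.split()
            if not parts:
                continue  # skip blank lines
            x, y, w, h = (float(v) for v in parts[1:5])
            boxes[parts[0]] = (x, y, w, h)
    return boxes


def bbox_to_corners(box):
    """Convert (x, y, width, height) to (left, top, right, bottom), e.g. for PIL's Image.crop."""
    x, y, w, h = box
    return (x, y, x + w, y + h)
```

Image ids are kept as strings, matching how they appear in the other annotation files, so `boxes['1']` is the box for image 1.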

After downloading and extracting the archive:

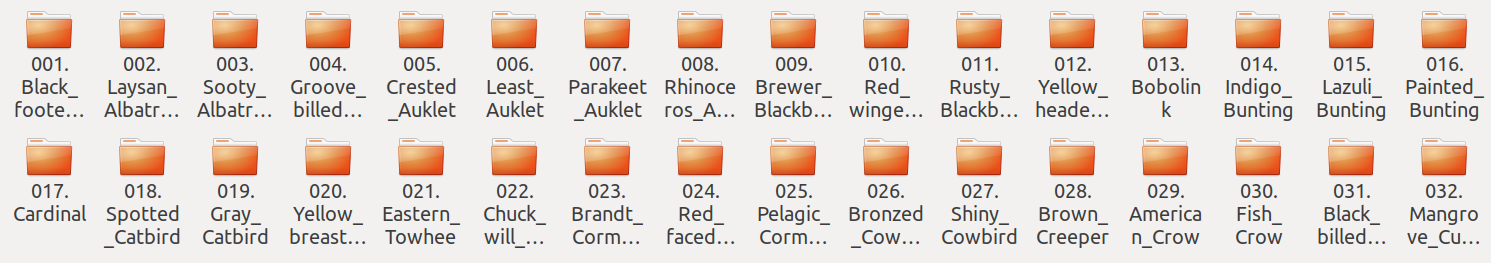
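If you would rather extract the archive from Python than with an archive tool, the standard-library `tarfile` module is enough. This is a minimal sketch. `extract_cub` is a hypothetical helper, and it assumes a gzip-compressed tar such as `CUB_200_2011.tgz`, so adjust it to whatever file you actually downloaded.

```python
import os
import tarfile


def extract_cub(archive_path, dest_dir):
    """Extract a .tgz archive into dest_dir and return the sorted top-level entries."""
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, 'r:gz') as tar:
        # Use the safer 'data' extraction filter when this Python version supports it
        if hasattr(tarfile, 'data_filter'):
            tar.extractall(dest_dir, filter='data')
        else:
            tar.extractall(dest_dir)
    return sorted(os.listdir(dest_dir))
```

For example, `extract_cub('CUB_200_2011.tgz', '.')` unpacks next to the script.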

The `images` folder contains 200 classes in total, with roughly 60 images per class.
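To check that the extraction is complete, you can count the class folders and the images inside each one. Below is a minimal sketch. `count_images_per_class` is a hypothetical helper, and it assumes the `images/<class_folder>/<image>.jpg` layout described above.

```python
import os


def count_images_per_class(images_dir, exts=('.jpg', '.jpeg', '.png')):
    """Return {class_folder_name: number_of_images} for every subfolder of images_dir."""
    counts = {}
    for cls in sorted(os.listdir(images_dir)):
        cls_dir = os.path.join(images_dir, cls)
        if not os.path.isdir(cls_dir):
            continue  # ignore stray files next to the class folders
        counts[cls] = sum(1 for f in os.listdir(cls_dir) if f.lower().endswith(exts))
    return counts
```

On the full dataset you should get 200 keys, and the counts should sum to 11,788. That total is 5,994 training images plus 5,794 test images, which matches the split sizes printed by Method 1.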

Reading images and class labels:
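Both methods build their sample lists from three text files in the `CUB_200_2011` root, each keyed by image id:

- `images.txt` has lines of the form `<image_id> <image_path>`.
- `image_class_labels.txt` has lines of the form `<image_id> <class_id>`, where class ids are 1-based.
- `train_test_split.txt` has lines of the form `<image_id> <is_training_image>`, where 1 means train and 0 means test.

Below is a minimal standard-library sketch of that join. It is keyed by image id rather than by line position. `read_split` is a hypothetical helper.

```python
import os


def _read_pairs(file_path):
    """Read "<image_id> <value>" lines into a dict {image_id: value} (file order is kept)."""
    with open(file_path) as f:
        return dict(line.split() for line in f if line.strip())


def read_split(root, train=True):
    """Return [(relative_image_path, label)] for one split, with labels shifted to start at 0."""
    paths = _read_pairs(os.path.join(root, 'images.txt'))
    labels = _read_pairs(os.path.join(root, 'image_class_labels.txt'))
    split = _read_pairs(os.path.join(root, 'train_test_split.txt'))
    wanted = 1 if train else 0
    return [(paths[i], int(labels[i]) - 1) for i in paths if int(split[i]) == wanted]
```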

Method 1: combining scipy.misc, PIL and numpy

The Method 1 code is adapted from NTS-Net, hahaha! The original author wrote it brilliantly!

```python
import os

import numpy as np
import torch
from PIL import Image
from torchvision import transforms


class CUB():
    def __init__(self, root, is_train=True, data_len=None, transform=None, target_transform=None):
        self.root = root
        self.is_train = is_train
        self.transform = transform
        self.target_transform = target_transform

        # strip() removes the trailing newline (line[:-1] would cut a real character
        # if the last line has no newline)
        # Image paths: each line of images.txt is "<image_id> <relative_path>"
        with open(os.path.join(self.root, 'images.txt')) as img_txt_file:
            img_name_list = [line.strip().split(' ')[-1] for line in img_txt_file if line.strip()]
        # Labels: subtract 1 from each class id so that labels start at 0
        with open(os.path.join(self.root, 'image_class_labels.txt')) as label_txt_file:
            label_list = [int(line.strip().split(' ')[-1]) - 1 for line in label_txt_file if line.strip()]
        # Split flags: 1 = training set, 0 = test set
        with open(os.path.join(self.root, 'train_test_split.txt')) as train_val_file:
            train_test_list = [int(line.strip().split(' ')[-1]) for line in train_val_file if line.strip()]

        # zip() pairs each split flag with the path/label at the same position;
        # flag 1 -> training set, flag 0 -> test set
        train_file_list = [x for i, x in zip(train_test_list, img_name_list) if i]
        test_file_list = [x for i, x in zip(train_test_list, img_name_list) if not i]
        train_label_list = [x for i, x in zip(train_test_list, label_list) if i][:data_len]
        test_label_list = [x for i, x in zip(train_test_list, label_list) if not i][:data_len]

        # Every image of the split is decoded into a numpy array here, up front.
        # (The original used scipy.misc.imread, which was removed in SciPy 1.2; PIL does the same job.)
        if self.is_train:
            self.train_img = [np.array(Image.open(os.path.join(self.root, 'images', train_file)))
                              for train_file in train_file_list[:data_len]]
            self.train_label = train_label_list
        else:
            self.test_img = [np.array(Image.open(os.path.join(self.root, 'images', test_file)))
                             for test_file in test_file_list[:data_len]]
            self.test_label = test_label_list

    def __getitem__(self, index):
        # Fetch one sample and apply the transforms (data augmentation for the training set)
        if self.is_train:
            img, target = self.train_img[index], self.train_label[index]
        else:
            img, target = self.test_img[index], self.test_label[index]
        if len(img.shape) == 2:
            # Convert a grayscale image to three channels
            img = np.stack([img] * 3, 2)
        # Back to a PIL RGB image so torchvision transforms can be applied
        img = Image.fromarray(img).convert('RGB')
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            target = self.target_transform(target)
        return img, target

    def __len__(self):
        if self.is_train:
            return len(self.train_label)
        return len(self.test_label)


if __name__ == '__main__':
    normalize = transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    # Training set: random crop + horizontal flip augmentation
    transform_train = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.RandomCrop(224, padding=4),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        normalize,
    ])
    # Test set: no random augmentation
    transform_test = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        normalize,
    ])

    # Read the dataset through PyTorch's DataLoader
    # (batch_size=16 matches the loader lengths in the output below)
    trainset = CUB(root='./CUB_200_2011', is_train=True, transform=transform_train)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=16, shuffle=True,
                                              num_workers=0, drop_last=True)
    testset = CUB(root='./CUB_200_2011', is_train=False, transform=transform_test)
    testloader = torch.utils.data.DataLoader(testset, batch_size=16, shuffle=False, num_workers=0)
    print('trainset:', len(trainset))
    print('trainloader', len(trainloader))
    print('testset:', len(testset))
    print('testloader', len(testloader))

# Output:
# trainset: 5994
# trainloader 374
# testset: 5794
# testloader 363
```
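The loader lengths above follow directly from the batch size. For a map-style dataset, `len(loader)` is ceil(N / batch_size), or floor(N / batch_size) when `drop_last=True` discards the final partial batch. With a batch size of 16, the 5,994 training images give 374 full batches when the last partial batch is dropped. The 5,794 test images give 363 batches when it is kept. Below is a tiny sketch of that arithmetic. `num_batches` is a hypothetical helper, not a PyTorch function.

```python
import math


def num_batches(n_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields for a map-style dataset of n_samples items."""
    if drop_last:
        return n_samples // batch_size  # the last incomplete batch is discarded
    return math.ceil(n_samples / batch_size)  # the last batch may be smaller
```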

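But it has one fatal flaw: it is far too slow. Loading took 145.11554789543152 s, because `__init__` reads and decodes every image of the split into memory before the first batch is produced.

Two and a half minutes just to read the data: who can put up with that?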
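The slowness comes from decoding everything in `__init__`. A common fix is lazy loading, and below is a minimal sketch of it. It keeps Method 1's PIL + torchvision style but stores only `(path, label)` pairs and decodes each image in `__getitem__`. `LazyCUB` and `pil_rgb_loader` are hypothetical names. The sketch assumes the sample list has already been built from the annotation files, for example with the same `zip` logic as Method 1. PyTorch's `DataLoader` accepts any object with `__len__` and `__getitem__` as a map-style dataset, so subclassing `torch.utils.data.Dataset` is optional here.

```python
import os


def pil_rgb_loader(path):
    """Open an image with PIL and force 3-channel RGB (grayscale CUB images included)."""
    from PIL import Image  # imported lazily so other loaders can be plugged in without PIL
    with Image.open(path) as img:
        return img.convert('RGB')


class LazyCUB:
    """Map-style dataset that keeps only (relative_path, label) pairs in memory."""

    def __init__(self, root, samples, transform=None, target_transform=None, loader=pil_rgb_loader):
        self.root = root
        self.samples = samples  # list of (relative_image_path, 0-based label)
        self.transform = transform
        self.target_transform = target_transform
        self.loader = loader

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        rel_path, target = self.samples[index]
        # Decoding happens here, one image at a time, instead of in __init__
        img = self.loader(os.path.join(self.root, 'images', rel_path))
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            target = self.target_transform(target)
        return img, target
```

Combined with `num_workers > 0` in the `DataLoader`, several worker processes decode images in parallel while the model trains.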

Method 2: combining cv2 and numpy

The Method 2 code is adapted from the hyperparameter-tuning repository of GitHub expert weiaicunzai.

- Create `dataset.py`:

```python
import os

import cv2
from torch.utils.data import Dataset


class CUB(Dataset):
    def __init__(self, path, train=True, transform=None, target_transform=None):
        self.root = path
        self.is_train = train
        self.transform = transform
        self.target_transform = target_transform

        # image_id -> relative image path (images.txt: "<image_id> <path>")
        self.images_path = {}
        with open(os.path.join(self.root, 'images.txt')) as f:
            for line in f:
                image_id, img_path = line.split()
                self.images_path[image_id] = img_path

        # image_id -> 1-based class id string (image_class_labels.txt)
        self.class_ids = {}
        with open(os.path.join(self.root, 'image_class_labels.txt')) as f:
            for line in f:
                image_id, class_id = line.split()
                self.class_ids[image_id] = class_id

        # Keep only the ids of the requested split (1 = train, 0 = test).
        # Only ids are stored; images are read lazily in __getitem__.
        self.data_id = []
        with open(os.path.join(self.root, 'train_test_split.txt')) as f:
            for line in f:
                image_id, is_train = line.split()
                if int(is_train) == int(self.is_train):
                    self.data_id.append(image_id)

    def __len__(self):
        return len(self.data_id)

    def __getitem__(self, index):
        """
        Args:
            index: index into the current split
        Returns:
            image and its corresponding label (0-based)
        """
        image_id = self.data_id[index]
        class_id = int(self._get_class_by_id(image_id)) - 1
        path = self._get_path_by_id(image_id)
        # cv2.imread returns an H x W x C uint8 array in BGR channel order (None on failure)
        image = cv2.imread(os.path.join(self.root, 'images', path))
        if image is None:
            raise FileNotFoundError(os.path.join(self.root, 'images', path))

        if self.transform is not None:
            image = self.transform(image)
        if self.target_transform is not None:
            class_id = self.target_transform(class_id)
        return image, class_id

    def _get_path_by_id(self, image_id):
        return self.images_path[image_id]

    def _get_class_by_id(self, image_id):
        return self.class_ids[image_id]
```

- Create `transforms.py`:

```python
import math
import numbers
import random

import cv2
import numpy as np
import torch


class Compose:
    """Composes several transforms together.

    Args:
        transforms (list of 'Transform' objects): list of transforms to compose
    """

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, img):
        for trans in self.transforms:
            img = trans(img)
        return img

    def __repr__(self):
        format_string = self.__class__.__name__ + '('
        for t in self.transforms:
            format_string += '\n'
            format_string += '    {0}'.format(t)
        format_string += '\n)'
        return format_string


class ToCVImage:
    """Convert an OpenCV image to a 3-channel uint8 image"""

    def __call__(self, image):
        """
        Args:
            image (numpy array): image to be converted
        Returns:
            image (numpy array): 3-channel uint8 image
        """
        if len(image.shape) == 2:
            image = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)
        image = image.astype('uint8')
        return image


class RandomResizedCrop:
    """Randomly crop a rectangle region whose aspect ratio is randomly sampled in
    [3/4, 4/3] and area randomly sampled in [8%, 100%], then resize the cropped
    region into a size-by-size square image.

    Args:
        size: expected output size of each edge
        scale: range of the cropped area relative to the original area
        ratio: range of the aspect ratio (w / h) of the cropped region
        interpolation: 'area', 'nearest', 'linear', 'cubic' or 'lanczos4'. Default: 'linear'
    """

    def __init__(self, size, scale=(0.08, 1.0), ratio=(3.0 / 4.0, 4.0 / 3.0), interpolation='linear'):
        self.methods = {"area": cv2.INTER_AREA, "nearest": cv2.INTER_NEAREST,
                        "linear": cv2.INTER_LINEAR, "cubic": cv2.INTER_CUBIC,
                        "lanczos4": cv2.INTER_LANCZOS4}
        self.size = (size, size)
        self.interpolation = self.methods[interpolation]
        self.scale = scale
        self.ratio = ratio

    def __call__(self, img):
        h, w, _ = img.shape
        area = w * h

        # Up to 10 attempts to sample a crop that fits inside the image
        for attempt in range(10):
            target_area = random.uniform(*self.scale) * area
            target_ratio = random.uniform(*self.ratio)
            # w * h = target_area and w / h = target_ratio
            output_w = int(round(math.sqrt(target_area * target_ratio)))
            output_h = int(round(math.sqrt(target_area / target_ratio)))
            if random.random() < 0.5:
                output_w, output_h = output_h, output_w
            if output_w <= w and output_h <= h:
                topleft_x = random.randint(0, w - output_w)
                topleft_y = random.randint(0, h - output_h)
                break
        else:
            # Fallback: a random square crop with side min(w, h)
            output_w = output_h = min(w, h)
            topleft_x = random.randint(0, w - output_w)
            topleft_y = random.randint(0, h - output_h)

        cropped = img[topleft_y: topleft_y + output_h, topleft_x: topleft_x + output_w]
        resized = cv2.resize(cropped, self.size, interpolation=self.interpolation)
        return resized

    def __repr__(self):
        for name, inter in self.methods.items():
            if inter == self.interpolation:
                inter_name = name
        format_str = self.__class__.__name__ + '(size={0}'.format(self.size)
        format_str += ', scale={0}'.format(tuple(round(s, 4) for s in self.scale))
        format_str += ', ratio={0}'.format(tuple(round(r, 4) for r in self.ratio))
        format_str += ', interpolation={0})'.format(inter_name)
        return format_str


class RandomHorizontalFlip:
    """Horizontally flip the given OpenCV image with probability p.

    Args:
        p: probability of the image being flipped
    """

    def __init__(self, p=0.5):
        self.p = p

    def __call__(self, img):
        if random.random() < self.p:
            img = cv2.flip(img, 1)  # flipCode=1: flip around the vertical axis
        return img


class ToTensor:
    """Convert an OpenCV image, an (H, W, C) uint8 ndarray in [0, 255],
    to a PyTorch float tensor (C, H, W) in [0, 1]"""

    def __call__(self, img):
        # Convert H W C to C H W
        img = np.ascontiguousarray(img.transpose(2, 0, 1))
        img = torch.from_numpy(img)
        img = img.float() / 255.0
        return img


class Normalize:
    """Normalize a (C, H, W) float tensor with a per-channel mean and standard deviation:
    ``input[channel] = (input[channel] - mean[channel]) / std[channel]``
    The channel order of mean/std must match the image (BGR when read with cv2).

    Args:
        mean: sequence of means for each channel
        std: sequence of stds for each channel
        inplace: whether to modify the input tensor in place
    """

    def __init__(self, mean, std, inplace=False):
        self.mean = mean
        self.std = std
        self.inplace = inplace

    def __call__(self, img):
        """
        Args:
            img: (C, H, W) float tensor, typically in [0, 1] (output of ToTensor)
        Returns:
            normalized (C, H, W) float tensor
        """
        assert torch.is_tensor(img) and img.ndimension() == 3, 'not an image tensor'
        if not self.inplace:
            img = img.clone()
        mean = torch.tensor(self.mean, dtype=torch.float32)
        std = torch.tensor(self.std, dtype=torch.float32)
        img.sub_(mean[:, None, None]).div_(std[:, None, None])
        return img


class Resize:
    """Resize an OpenCV image to a fixed size; an int gives a square output."""

    def __init__(self, resized=256, interpolation='linear'):
        methods = {"area": cv2.INTER_AREA, "nearest": cv2.INTER_NEAREST,
                   "linear": cv2.INTER_LINEAR, "cubic": cv2.INTER_CUBIC,
                   "lanczos4": cv2.INTER_LANCZOS4}
        self.interpolation = methods[interpolation]
        if isinstance(resized, numbers.Number):
            resized = (resized, resized)
        self.resized = resized

    def __call__(self, img):
        # cv2.resize takes dsize as (width, height)
        img = cv2.resize(img, self.resized, interpolation=self.interpolation)
        return img
```
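The core of `RandomResizedCrop` is the box sampling. It draws an area fraction from `scale` and an aspect ratio r = w / h from `ratio`. It then solves w = sqrt(A * r) and h = sqrt(A / r), so that w * h = A. If ten draws do not fit, it falls back to a random square. Below is a standalone pure-Python sketch of just that step, so it can be checked without OpenCV. `sample_crop_box` is a hypothetical helper. It mirrors the class's random w/h swap, and it adds a guard against zero-size crops on tiny images.

```python
import math
import random


def sample_crop_box(w, h, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3), attempts=10, rng=random):
    """Return (x, y, crop_w, crop_h) for a random crop inside a w x h image."""
    area = w * h
    for _ in range(attempts):
        target_area = rng.uniform(*scale) * area
        r = rng.uniform(*ratio)
        crop_w = int(round(math.sqrt(target_area * r)))
        crop_h = int(round(math.sqrt(target_area / r)))
        if rng.random() < 0.5:
            crop_w, crop_h = crop_h, crop_w  # same random swap as the class
        # 0 < guard avoids degenerate zero-size crops on tiny images
        if 0 < crop_w <= w and 0 < crop_h <= h:
            return rng.randint(0, w - crop_w), rng.randint(0, h - crop_h), crop_w, crop_h
    # Fallback: random square with side min(w, h)
    side = min(w, h)
    return rng.randint(0, w - side), rng.randint(0, h - side), side, side
```

Every returned box satisfies `x + crop_w <= w` and `y + crop_h <= h`, which is the invariant the slicing in `__call__` relies on.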

- With both files in place, you can load the data for training:

```python
import time

from torch.utils.data import DataLoader

import transforms  # the local transforms.py above (not torchvision.transforms)
from dataset import CUB

time1 = time.time()

IMAGE_SIZE = 448
TRAIN_MEAN = [0.48560741861744905, 0.49941626449353244, 0.43237713785804116]
TRAIN_STD = [0.2321024260764962, 0.22770540015765814, 0.2665100547329813]
TEST_MEAN = [0.4862169586881995, 0.4998156522834164, 0.4311430419332438]
TEST_STD = [0.23264268069040475, 0.22781080253662814, 0.26667253517177186]

# Root of the extracted CUB_200_2011 folder; change this to your own path
path = '/home/jim/project/Fine-Grained/Mutual-Channel-Loss-master/CUB_200_2011'

train_transforms = transforms.Compose([
    transforms.ToCVImage(),
    transforms.RandomResizedCrop(IMAGE_SIZE),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(TRAIN_MEAN, TRAIN_STD),
])
test_transforms = transforms.Compose([
    transforms.ToCVImage(),
    transforms.Resize(IMAGE_SIZE),
    transforms.ToTensor(),
    transforms.Normalize(TEST_MEAN, TEST_STD),
])

train_dataset = CUB(path, train=True, transform=train_transforms, target_transform=None)
# print(len(train_dataset))
train_dataloader = DataLoader(train_dataset, batch_size=16, num_workers=0, shuffle=True)

test_dataset = CUB(path, train=False, transform=test_transforms, target_transform=None)
# No need to shuffle the test set
test_dataloader = DataLoader(test_dataset, batch_size=16, num_workers=0, shuffle=False)

time2 = time.time()
print(time2 - time1)
print(len(train_dataloader))
print(len(test_dataloader))
```

Time taken: 0.027139902114868164 s

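Yes, you read that right: under a second. Surprised? Didn't see that coming? Keep in mind what is being timed, though: only building the id lists and the DataLoaders. Method 2 defers `cv2.imread` to `__getitem__`, so the cost of reading and decoding images is paid batch by batch during iteration instead of up front (and can be spread over worker processes with `num_workers > 0`).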
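The post doesn't say how `TRAIN_MEAN`/`TRAIN_STD` and `TEST_MEAN`/`TEST_STD` were obtained. They are per-channel statistics of pixel values scaled to [0, 1]. One way to compute such values is a single streaming pass over a split that accumulates per-channel sums and sums of squares. Below is a minimal numpy sketch of that approach. `channel_mean_std` is a hypothetical helper. It computes the population std over all pixels, so its results may differ slightly from the author's numbers. The statistics come out in whatever channel order the arrays have, so images read with `cv2.imread` give BGR-ordered values.

```python
import numpy as np


def channel_mean_std(images):
    """Per-channel mean and std of uint8 H x W x C images, after scaling to [0, 1].

    `images` can be any iterable (e.g. a generator reading files one by one),
    so the whole dataset never has to be held in memory.
    """
    total = total_sq = None
    n_pixels = 0
    for img in images:
        x = img.reshape(-1, img.shape[-1]).astype(np.float64) / 255.0
        if total is None:
            total = np.zeros(x.shape[1])
            total_sq = np.zeros(x.shape[1])
        total += x.sum(axis=0)
        total_sq += (x ** 2).sum(axis=0)
        n_pixels += x.shape[0]
    mean = total / n_pixels
    # Var = E[x^2] - E[x]^2; clip tiny negative values caused by rounding
    std = np.sqrt(np.maximum(total_sq / n_pixels - mean ** 2, 0.0))
    return mean.tolist(), std.tolist()
```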
