CUDA multi-GPU programming 11: running a computation on multiple GPUs

With four GPUs, the run was only about twice as fast as with a single GPU.

The result is shown below. Everything used the default stream, with no further CUDA acceleration applied.

Before the change:

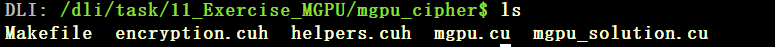
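
The measurement above was taken with all work on the default stream. As a rough sketch of the alternative, the code below splits one array across every visible GPU and gives each GPU its own non-default stream, so that copies and kernels on different devices can be queued without waiting on each other. It is not the contents of mgpu_solution.cu: the kernel `scaleKernel`, the helper `chunkBegin`, the problem size, and the block size of 256 are all assumptions made for illustration, and error checking is mostly omitted for brevity.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: doubles each element in place.
__global__ void scaleKernel(float *data, size_t n) {
    size_t i = (size_t)blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= 2.0f;
}

// Start index of chunk `idx` when `n` elements are split into `parts`
// near-equal chunks; clamped to n, so trailing chunks may be empty.
static size_t chunkBegin(size_t n, size_t parts, size_t idx) {
    size_t chunk = (n + parts - 1) / parts;
    size_t begin = idx * chunk;
    return begin < n ? begin : n;
}

int main() {
    int numGpus = 0;
    if (cudaGetDeviceCount(&numGpus) != cudaSuccess || numGpus == 0) {
        printf("no CUDA device found\n");
        return 0;
    }

    const size_t n = (size_t)1 << 24;  // assumed problem size
    float *host = nullptr;
    // Pinned, portable host memory so async copies to every device can overlap.
    if (cudaHostAlloc((void **)&host, n * sizeof(float),
                      cudaHostAllocPortable) != cudaSuccess) {
        printf("pinned host allocation failed\n");
        return 1;
    }
    for (size_t i = 0; i < n; ++i) host[i] = 1.0f;

    std::vector<cudaStream_t> streams(numGpus);
    std::vector<float *> dev(numGpus, nullptr);

    // One stream and one device buffer per GPU.
    for (int g = 0; g < numGpus; ++g) {
        size_t len = chunkBegin(n, numGpus, g + 1) - chunkBegin(n, numGpus, g);
        cudaSetDevice(g);
        cudaStreamCreate(&streams[g]);
        if (len > 0) cudaMalloc(&dev[g], len * sizeof(float));
    }

    // Queue copy-in, kernel, and copy-out on each GPU's own stream.
    // The host thread does not block here, so all GPUs can work concurrently.
    for (int g = 0; g < numGpus; ++g) {
        size_t lo = chunkBegin(n, numGpus, g);
        size_t len = chunkBegin(n, numGpus, g + 1) - lo;
        if (len == 0) continue;
        size_t bytes = len * sizeof(float);
        cudaSetDevice(g);
        cudaMemcpyAsync(dev[g], host + lo, bytes,
                        cudaMemcpyHostToDevice, streams[g]);
        unsigned blocks = (unsigned)((len + 255) / 256);
        scaleKernel<<<blocks, 256, 0, streams[g]>>>(dev[g], len);
        cudaMemcpyAsync(host + lo, dev[g], bytes,
                        cudaMemcpyDeviceToHost, streams[g]);
    }

    // Wait for every GPU to finish, then release its resources.
    for (int g = 0; g < numGpus; ++g) {
        cudaSetDevice(g);
        cudaStreamSynchronize(streams[g]);
        cudaFree(dev[g]);  // freeing a null pointer is a no-op
        cudaStreamDestroy(streams[g]);
    }

    printf("host[0] = %.1f, host[n-1] = %.1f\n", host[0], host[n - 1]);
    cudaFreeHost(host);
    return 0;
}
```

Whether this closes the gap to a 4x speedup depends on the workload. If host-to-device transfers dominate, the GPUs still share host memory and interconnect bandwidth, so scaling can remain well below linear even with separate streams.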

Result file: mgpu_solution.cu
