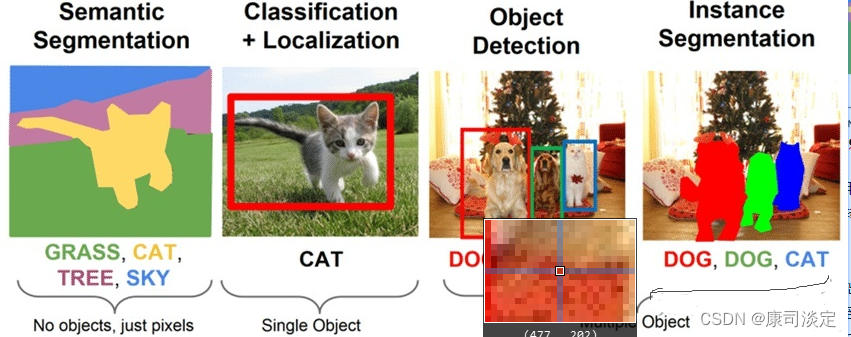

Image segmentation techniques: semantic segmentation and instance segmentation

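- Semantic segmentation assigns a class to every pixel in the image, but it does not distinguish between different objects of the same class.

- Instance segmentation deals only with specific objects of interest and separates each individual object, even when several objects share a class. In this respect it looks similar to object detection.

- The difference is that object detection outputs a bounding box and a class for each object, whereas instance segmentation outputs a mask and a class for each object.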
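To make the distinction concrete, here is a toy sketch in plain Python. The image size, the class names and the pixel sets are all made up for illustration. It builds a semantic label map and a list of per-instance masks from the same two objects of one class.

```python
# Hypothetical toy scene: a 4x6 image containing two "cat" objects.
# Each instance is (class_name, set of (row, col) pixels it covers).
H, W = 4, 6
instances = [
    ("cat", {(1, 0), (1, 1), (2, 0), (2, 1)}),  # first cat
    ("cat", {(1, 4), (1, 5), (2, 4), (2, 5)}),  # second cat
]
CLASS_IDS = {"background": 0, "cat": 1}


def semantic_map(instances, h, w):
    """One class id per pixel; objects of the same class merge into one label."""
    grid = [[CLASS_IDS["background"]] * w for _ in range(h)]
    for cls, pixels in instances:
        for r, c in pixels:
            grid[r][c] = CLASS_IDS[cls]
    return grid


def instance_masks(instances, h, w):
    """One (class, binary mask) pair per object, the kind of output instance segmentation gives."""
    out = []
    for cls, pixels in instances:
        mask = [[1 if (r, c) in pixels else 0 for c in range(w)] for r in range(h)]
        out.append((cls, mask))
    return out


sem = semantic_map(instances, H, W)
inst = instance_masks(instances, H, W)
for row in sem:
    print(row)
print(len(inst), "instance masks")
```

Both cats end up with label `1` in the semantic map, so the map alone cannot tell them apart, while the instance output keeps a separate mask for each cat.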

Mask R-CNN

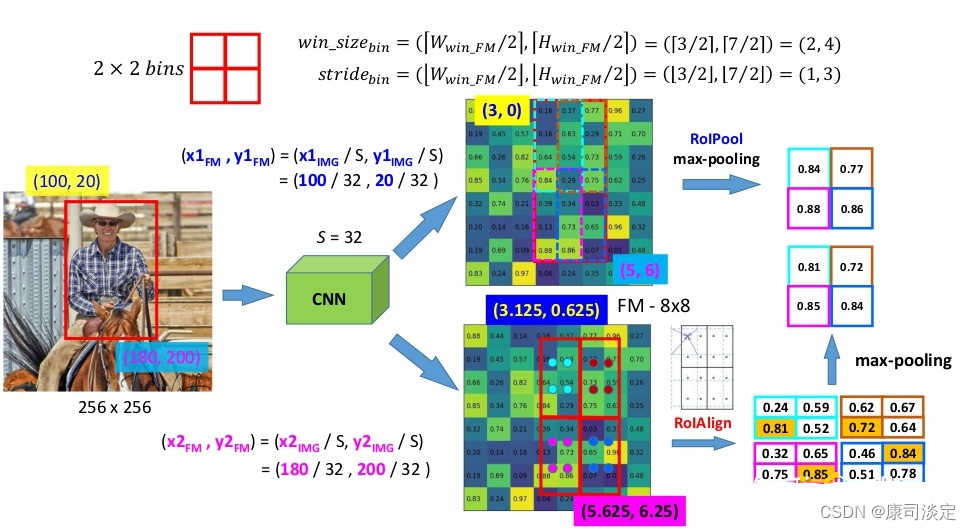

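Mask R-CNN builds on Faster R-CNN. Faster R-CNN uses a CNN feature extractor to extract image features, uses a Region Proposal Network (RPN) to generate regions of interest (RoIs), converts each RoI into a fixed-size feature map with RoI Pooling, and finally feeds these into fully connected layers for classification and bounding-box prediction.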
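A simplified single-channel sketch of RoI max pooling, written in plain Python rather than taken from any library, shows how a box of arbitrary size becomes a fixed `out_size x out_size` grid. It follows the commonly described scheme of rounding the box corners to whole cells and splitting the box into integer-bounded bins; the function name `roi_pool` and the toy feature map are assumptions for illustration.

```python
import math


def roi_pool(feature, box, out_size):
    """Simplified RoI max pooling on a 2-D list (H x W).

    box is (x1, y1, x2, y2) in feature-map coordinates and may be fractional.
    """
    H, W = len(feature), len(feature[0])
    # First quantization: round the box corners (half up) to whole cells.
    x1, y1, x2, y2 = (int(math.floor(v + 0.5)) for v in box)
    roi_h = max(y2 - y1 + 1, 1)
    roi_w = max(x2 - x1 + 1, 1)
    out = []
    for i in range(out_size):
        # Second quantization: bin edges are floored / ceiled to integers.
        hs = min(max(y1 + math.floor(i * roi_h / out_size), 0), H)
        he = min(max(y1 + math.ceil((i + 1) * roi_h / out_size), 0), H)
        row = []
        for j in range(out_size):
            ws = min(max(x1 + math.floor(j * roi_w / out_size), 0), W)
            we = min(max(x1 + math.ceil((j + 1) * roi_w / out_size), 0), W)
            cells = [feature[r][c] for r in range(hs, he) for c in range(ws, we)]
            row.append(max(cells) if cells else 0.0)  # max over the bin
        out.append(row)
    return out


# Toy 6x6 feature map whose value at (r, c) is 6*r + c.
feature = [[float(6 * r + c) for c in range(6)] for r in range(6)]
a = roi_pool(feature, (0.6, 0.6, 4.4, 4.4), out_size=2)
b = roi_pool(feature, (0.9, 0.9, 4.1, 4.1), out_size=2)
print(a)       # [[14.0, 16.0], [26.0, 28.0]]
print(a == b)  # True
```

Because the corners are rounded, the two slightly different boxes produce identical outputs. This loss of sub-pixel alignment is what RoIAlign is meant to fix.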

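Mask R-CNN differs from Faster R-CNN in two ways. First, on top of Faster R-CNN's classification and box-regression branches, it adds a small fully convolutional network (FCN) branch that uses convolutions and deconvolutions (transposed convolutions) to predict a pixel-level segmentation mask for each RoI, trained end to end with the rest of the network. Second, it replaces the RoI Pooling layer with RoIAlign. The figure above shows the Mask R-CNN network architecture built on Faster R-CNN with a ResNet backbone.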
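A minimal PyTorch sketch of such a mask branch might look like the following. The layout (four 3x3 convolutions, one 2x2 stride-2 transposed convolution, a 1x1 convolution giving one mask per class), the 14x14 RoI feature size, the 256 channels and the 80 classes are assumptions loosely modeled on the head described in the Mask R-CNN paper; `MaskHead` is a hypothetical name, not code from this article.

```python
import torch
import torch.nn as nn


class MaskHead(nn.Module):
    """Small FCN applied to each RoI's RoIAlign features (assumed layout)."""

    def __init__(self, in_channels=256, num_classes=80, hidden=256):
        super().__init__()
        layers = []
        for i in range(4):  # four 3x3 convs keep the spatial size
            layers += [nn.Conv2d(in_channels if i == 0 else hidden, hidden, 3, padding=1),
                       nn.ReLU(inplace=True)]
        self.convs = nn.Sequential(*layers)
        # 2x2 stride-2 transposed conv ("deconvolution") doubles H and W
        self.upsample = nn.ConvTranspose2d(hidden, hidden, kernel_size=2, stride=2)
        # 1x1 conv: one mask logit map per class
        self.predictor = nn.Conv2d(hidden, num_classes, kernel_size=1)

    def forward(self, roi_features):
        x = self.convs(roi_features)
        x = torch.relu(self.upsample(x))
        return self.predictor(x)  # (num_rois, num_classes, 2H, 2W) logits


head = MaskHead()
rois = torch.rand(3, 256, 14, 14)  # 3 RoIs after RoIAlign (assumed 14x14)
logits = head(rois)
print(logits.shape)  # torch.Size([3, 80, 28, 28])

# At inference, keep only the mask of each RoI's predicted class and binarize it.
pred_classes = torch.tensor([1, 5, 17])  # hypothetical classifier outputs
masks = torch.sigmoid(logits[torch.arange(3), pred_classes]) > 0.5
print(masks.shape)  # torch.Size([3, 28, 28])
```

Predicting one mask per class and picking the one for the predicted class lets the mask branch focus on shape, while the separate classification branch decides the label.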

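Mask R-CNN first feeds the input image into the backbone (feature) network to obtain feature maps. It then places a fixed number of RoIs/anchors (15 by default) at every pixel location of the feature map and feeds them to the RPN, which performs binary classification (foreground vs. background) and coordinate regression to find the RoIs that contain objects. Next, RoIAlign extracts a feature map for each of these RoIs. Finally, each RoI goes through multi-class classification and bounding-box regression, and the FCN branch generates its mask, completing the segmentation task.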
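The anchor-placement step can be sketched in plain Python. One way to arrive at 15 anchors per location is 5 scales times 3 aspect ratios; the specific scales, ratios, stride and the helper name `make_anchors` below are assumptions for illustration, and the RPN scoring and regression that follow are not shown.

```python
import math


def make_anchors(feat_h, feat_w, stride, scales, ratios):
    """Return anchors as (x1, y1, x2, y2) in image pixels.

    One anchor per (scale, ratio) pair is centred on every feature-map cell,
    so each location gets len(scales) * len(ratios) anchors.
    """
    anchors = []
    for y in range(feat_h):
        for x in range(feat_w):
            # centre of this feature-map cell, mapped back to image pixels
            cx, cy = (x + 0.5) * stride, (y + 0.5) * stride
            for s in scales:
                for r in ratios:  # r = height / width; area stays s * s
                    w = s / math.sqrt(r)
                    h = s * math.sqrt(r)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


# Hypothetical settings: 5 scales x 3 ratios = 15 anchors per location.
SCALES = (32, 64, 128, 256, 512)
RATIOS = (0.5, 1.0, 2.0)
anchors = make_anchors(feat_h=4, feat_w=4, stride=16, scales=SCALES, ratios=RATIOS)
print(len(anchors))  # 4 * 4 * 15 = 240
print(anchors[1])    # (-8.0, -8.0, 24.0, 24.0): scale 32, ratio 1 at cell (0, 0)
```

The RPN then predicts, for each of these anchors, a foreground score and four box offsets, and the highest-scoring refined boxes become the RoIs.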

The figure below compares how RoI Pooling and RoIAlign are computed.

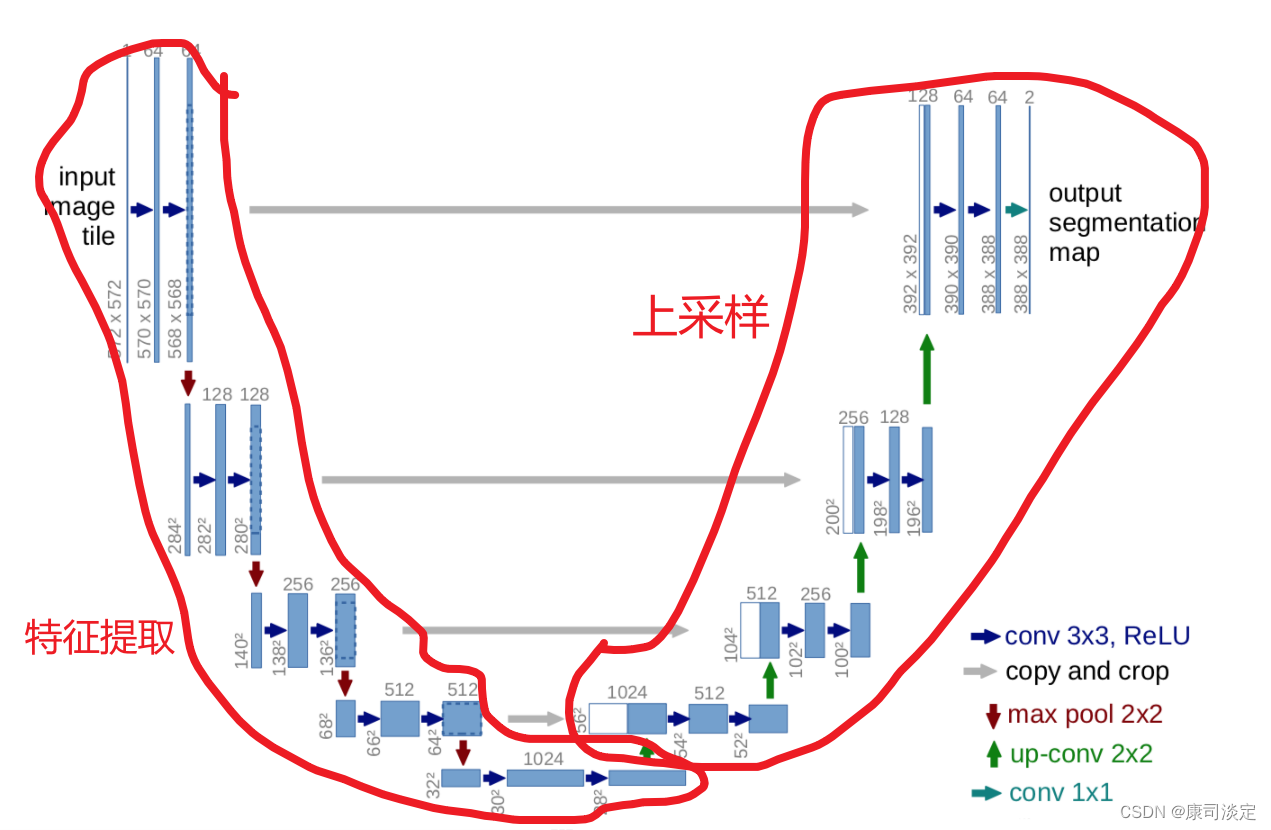
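For comparison with the rounding in RoI Pooling, here is a simplified single-channel RoIAlign sketch in plain Python. It keeps fractional coordinates throughout, samples each bin at a 2x2 grid of points with bilinear interpolation, and averages them (max is also possible). The names `bilinear` and `roi_align`, the sampling count and the convention that `feature[r][c]` sits exactly at coordinate `(r, c)` are assumptions.

```python
import math


def bilinear(feature, y, x):
    """Bilinearly interpolate a 2-D list at fractional (y, x)."""
    H, W = len(feature), len(feature[0])
    y = min(max(y, 0.0), H - 1)
    x = min(max(x, 0.0), W - 1)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    ly, lx = y - y0, x - x0
    return ((1 - ly) * (1 - lx) * feature[y0][x0] + (1 - ly) * lx * feature[y0][x1]
            + ly * (1 - lx) * feature[y1][x0] + ly * lx * feature[y1][x1])


def roi_align(feature, box, out_size, samples=2):
    """RoIAlign without any rounding: bins keep fractional edges."""
    x1, y1, x2, y2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            total = 0.0
            for sy in range(samples):  # regularly spaced sample points inside the bin
                for sx in range(samples):
                    yy = y1 + (i + (sy + 0.5) / samples) * bin_h
                    xx = x1 + (j + (sx + 0.5) / samples) * bin_w
                    total += bilinear(feature, yy, xx)
            row.append(total / samples ** 2)  # average of the samples
        out.append(row)
    return out


# Same toy 6x6 feature map as before: value at (r, c) is 6*r + c.
feature = [[float(6 * r + c) for c in range(6)] for r in range(6)]
a = roi_align(feature, (0.6, 0.6, 4.4, 4.4), out_size=2)
b = roi_align(feature, (0.9, 0.9, 4.1, 4.1), out_size=2)
print([[round(v, 2) for v in row] for row in a])  # [[10.85, 12.75], [22.25, 24.15]]
print(a == b)  # False
```

Unlike RoI Pooling, a sub-pixel shift of the box now changes the output, so the extracted features stay aligned with the object, which matters for pixel-accurate masks.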

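U-Net

Model diagram

7. U-Net code

The upsampling block and the double-convolution block are defined first, so that they can be reused when building the U-Net model. At the end, the model's output has the same shape as its input, which confirms that the model runs end to end.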

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class double_conv2d_bn(nn.Module):
    """Two (3x3 conv -> BatchNorm -> ReLU) layers; padding=1 keeps H and W."""

    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, padding=1):
        super(double_conv2d_bn, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size,
                               stride=stride, padding=padding, bias=True)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=kernel_size,
                               stride=stride, padding=padding, bias=True)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.relu(self.bn2(self.conv2(out)))
        return out


class deconv2d_bn(nn.Module):
    """Upsampling block: a 2x2 stride-2 transposed conv doubles H and W."""

    def __init__(self, in_channels, out_channels, kernel_size=2, stride=2):
        super(deconv2d_bn, self).__init__()
        # transposed convolution
        self.conv1 = nn.ConvTranspose2d(in_channels, out_channels,
                                        kernel_size=kernel_size, stride=stride, bias=True)
        self.bn1 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        return out


class Unet(nn.Module):
    def __init__(self):
        super(Unet, self).__init__()
        # Encoder: channels 1 -> 8 -> 16 -> 32 -> 64 -> 128
        self.layer1_conv = double_conv2d_bn(1, 8)
        self.layer2_conv = double_conv2d_bn(8, 16)
        self.layer3_conv = double_conv2d_bn(16, 32)
        self.layer4_conv = double_conv2d_bn(32, 64)
        self.layer5_conv = double_conv2d_bn(64, 128)
        # Decoder: input channels are doubled by the skip-connection concat
        self.layer6_conv = double_conv2d_bn(128, 64)
        self.layer7_conv = double_conv2d_bn(64, 32)
        self.layer8_conv = double_conv2d_bn(32, 16)
        self.layer9_conv = double_conv2d_bn(16, 8)
        self.layer10_conv = nn.Conv2d(8, 1, kernel_size=3, stride=1, padding=1, bias=True)

        self.deconv1 = deconv2d_bn(128, 64)
        self.deconv2 = deconv2d_bn(64, 32)
        self.deconv3 = deconv2d_bn(32, 16)
        self.deconv4 = deconv2d_bn(16, 8)

        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # Encoder: each max-pool halves H and W
        conv1 = self.layer1_conv(x)
        pool1 = F.max_pool2d(conv1, 2)
        conv2 = self.layer2_conv(pool1)
        pool2 = F.max_pool2d(conv2, 2)
        conv3 = self.layer3_conv(pool2)
        pool3 = F.max_pool2d(conv3, 2)
        conv4 = self.layer4_conv(pool3)
        pool4 = F.max_pool2d(conv4, 2)
        conv5 = self.layer5_conv(pool4)  # bottleneck at 1/16 resolution

        # Decoder: upsample, concatenate the matching encoder map, convolve
        convt1 = self.deconv1(conv5)
        concat1 = torch.cat([convt1, conv4], dim=1)
        conv6 = self.layer6_conv(concat1)

        convt2 = self.deconv2(conv6)
        concat2 = torch.cat([convt2, conv3], dim=1)
        conv7 = self.layer7_conv(concat2)

        convt3 = self.deconv3(conv7)
        concat3 = torch.cat([convt3, conv2], dim=1)
        conv8 = self.layer8_conv(concat3)

        convt4 = self.deconv4(conv8)
        concat4 = torch.cat([convt4, conv1], dim=1)
        conv9 = self.layer9_conv(concat4)

        outp = self.layer10_conv(conv9)
        outp = self.sigmoid(outp)  # per-pixel probability in [0, 1]
        return outp


model = Unet()
inp = torch.rand(10, 1, 224, 224)
outp = model(inp)
print(outp.shape)  # torch.Size([10, 1, 224, 224])
```
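A quick shape trace in plain Python shows why this check succeeds for a 224x224 input. It assumes a square input and mirrors the layers above: padded 3x3 convolutions keep the size, each `F.max_pool2d(x, 2)` halves it with flooring, and each 2x2 stride-2 transposed convolution doubles it. The helper `unet_sizes` is hypothetical.

```python
def unet_sizes(size, depth=4):
    """Trace the spatial size through the encoder and decoder of the U-Net above."""
    down = [size]
    for _ in range(depth):
        down.append(down[-1] // 2)  # encoder: max-pool halves (floor division)
    up = [down[-1]]
    for _ in range(depth):
        up.append(up[-1] * 2)  # decoder: transposed conv doubles
    # torch.cat along channels needs each decoder size to equal its skip's size
    skips_match = all(u == d for u, d in zip(up[1:], reversed(down[:-1])))
    return down, up, skips_match


print(unet_sizes(224))  # ([224, 112, 56, 28, 14], [14, 28, 56, 112, 224], True)
print(unet_sizes(100))  # ([100, 50, 25, 12, 6], [6, 12, 24, 48, 96], False)
```

For a 100x100 input the decoder produces 24x24 where the encoder skip is 25x25, so the `torch.cat` in the model would fail. With four pooling stages, the input height and width need to be multiples of 16.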
