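Object Detection with YOLOv7 [PyTorch] -- Wangzai's Study Notes

Object detection

Note: what follows is the main body of this article. It is purely a record of my own study, written to improve my own understanding.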

I. YOLOv7 model structure

YOLOv7 was formally proposed on July 6, 2022.

Paper:

https://arxiv.org/pdf/2207.02696.pdf

Source code:

https://github.com/WongKinYiu/yolov7

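Model advantages:

1. Model re-parameterization

YOLOv7 introduces model re-parameterization into its network architecture, and uses the concept of gradient propagation paths to analyze which re-parameterization strategy suits the layers of different networks.

2. Label assignment strategy

With dynamic label assignment, training a model with multiple output layers raises a new problem: how to assign dynamic targets to the outputs of the different branches.

YOLOv7's label assignment strategy combines YOLOv5's cross-grid search with YOLOX's matching search.

3. ELAN efficient network architecture

YOLOv7 proposes a new network architecture whose main selling point is efficiency.

4. Training with an auxiliary head

Deep supervision is a technique often used to train deep networks. The main idea is to add extra auxiliary heads at intermediate layers of the network, and to guide the shallow-layer weights with an auxiliary loss. YOLOv7 trains with an auxiliary head: this raises the training cost in exchange for better accuracy, but it does not affect inference time, because the auxiliary head exists only during training.

1. Overall model architecture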

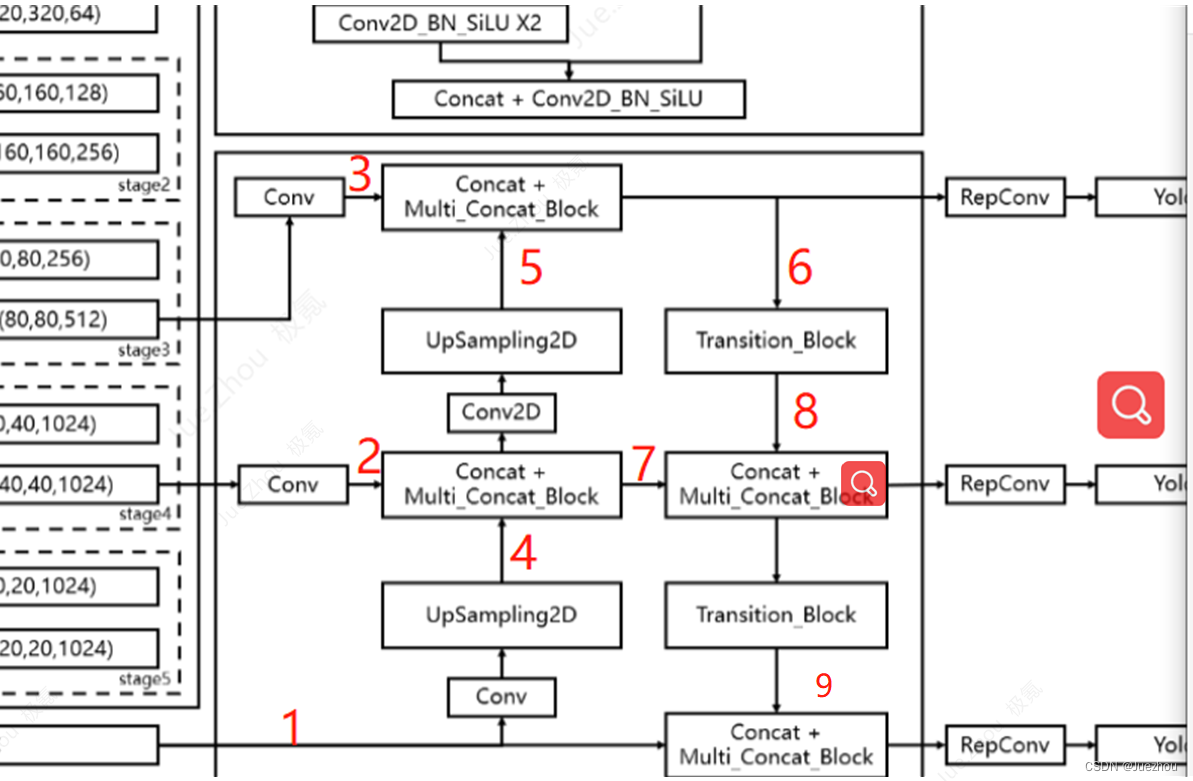

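As the figure above shows, the YOLOv7 network can be divided into three parts: the Backbone, the Neck (FPN) and the Yolo Head.

Backbone: extracts features from the input image. YOLOv7's backbone outputs feature layers at three different sizes;

Neck: strengthens feature extraction. It uses upsampling and downsampling to fuse the three differently sized feature layers extracted by the Backbone;

Yolo Head: classifies and regresses the anchors, and finally outputs the predicted boxes;

2. Backbone

YOLOv7's backbone is mainly composed of Multi_Concat_Block and Transition_Block;

2.1 Multi_Concat_Block

Multi_Concat_Block is an efficient network structure. By controlling the shortest and longest gradient paths, it can learn more features and is more robust;

The structure of Multi_Concat_Block is shown in the figure above. It is built from Conv2D_BN_SiLU units (2D convolution + batch normalization + SiLU activation) and has four branches in total;

- The first branch passes through one 1x1 Conv2D_BN_SiLU, mainly to change the channel count;

- The second branch also passes through one 1x1 Conv2D_BN_SiLU, again to change the channel count;

- The third branch passes through one 1x1 Conv2D_BN_SiLU and then two 3x3 Conv2D_BN_SiLU, for feature extraction;

- The fourth branch passes through one 1x1 Conv2D_BN_SiLU and then four 3x3 Conv2D_BN_SiLU, for feature extraction. Each pair of stacked 3x3 convolutions covers the receptive field of one 5x5 convolution; the code in section 2.1 uses only 3x3 kernels in this chain;

In the code in section 2.1, the third and fourth branches reuse the second branch's 1x1 output instead of applying a 1x1 convolution of their own. The feature layers of the four branches are stacked with Concat, and a final Conv2D_BN_SiLU integrates the features;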
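To make the branch selection concrete, here is a small pure-Python sketch of how the `ids` list in the Multi_Concat_Block code of section 2.1 picks which intermediate outputs are concatenated. The helper names `selected_branches` and `concat_channels` and the string labels are made up for illustration; the channel formula mirrors the input width of `cv4` in that code.

```python
def selected_branches(n, ids):
    """Return labels of the tensors that `ids` selects from x_all.

    x_all is built as [cv1(x), cv2(x), cv3[0], ..., cv3[n-1]], where
    cv3[0] is applied to cv2(x) and each later cv3[i] to the previous one.
    """
    x_all = ["cv1: 1x1", "cv2: 1x1"]
    for i in range(n):
        x_all.append(f"cv2 + {i + 1} x 3x3")
    return [x_all[i] for i in ids]


def concat_channels(c2, e, ids):
    """Input channels of the final 1x1 conv, as in the cv4 definition."""
    c_ = int(c2 * e)
    return c_ * 2 + c2 * (len(ids) - 2)


# Values used by the 'l' backbone in dark2: n=4, c2=64, e=1.
branches = selected_branches(n=4, ids=[-1, -3, -5, -6])
print(branches)
print(concat_channels(c2=64, e=1, ids=[-1, -3, -5, -6]))
```

With these values the selection is the four-conv chain, the two-conv chain and the two 1x1 outputs, and the concatenated tensor has 256 channels.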

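2.2 Transition_Block

The structure of Transition_Block is shown in the figure above. It is a transition module made up of two branches:

- The left branch is a max pooling layer followed by a Conv2D_BN_SiLU. The max pooling does the downsampling, and the 1x1 convolution then changes the channel count;

- The right branch is two Conv2D_BN_SiLU in sequence: first a 1x1 convolution changes the channel count, then a 3x3 convolution with stride 2 does the downsampling;

The two branches are then stacked with Concat, which gives the combined downsampling result;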
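The shape bookkeeping of the two branches can be checked with the usual output-size formula for convolution and pooling layers. This is a minimal sketch; `conv_out_size` and `transition_block_shape` are hypothetical helper names, and the kernel, stride and padding values are the ones the Transition_Block code in section 2.1 ends up with (2x2 pooling with stride 2, and a 3x3 convolution with stride 2 and padding 1).

```python
def conv_out_size(size, kernel, stride, padding):
    """Spatial output size of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def transition_block_shape(h, w, c_branch):
    """(height, width, channels) after a Transition_Block.

    c_branch is the channel count each branch outputs (c2 in the code).
    """
    # Left branch: 2x2 max pool with stride 2; the 1x1 conv keeps the size.
    left = (conv_out_size(h, 2, 2, 0), conv_out_size(w, 2, 2, 0))
    # Right branch: the 1x1 conv keeps the size; 3x3 conv, stride 2, padding 1.
    right = (conv_out_size(h, 3, 2, 1), conv_out_size(w, 3, 2, 1))
    if left != right:
        raise ValueError("branch sizes differ; use an even input size")
    # Concatenating along the channel axis doubles the channel count.
    return left[0], left[1], 2 * c_branch


print(transition_block_shape(160, 160, 128))
```

For a 160x160 input with 128 channels per branch this gives 80x80x256. An odd input size makes the two branches disagree, which is why the sketch raises an error in that case.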

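3. Neck

YOLOv7's Backbone extracts three feature layers of different sizes. With the input fixed at 640x640x3, the three output feature layers are 80x80x512, 40x40x1024 and 20x20x1024. All three enter the FPN for enhanced feature extraction.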
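The three sizes follow from the total stride of each backbone stage. The sketch below assumes the 'l' configuration from the code in section 2 (`transition_channels = 32`, with the three outputs taken at strides 8, 16 and 32); `feature_shapes` is a hypothetical helper name.

```python
def feature_shapes(input_size=640, transition_channels=32):
    """(height, width, channels) of the three backbone outputs."""
    shapes = []
    # dark3, dark4 and dark5 have total strides 8, 16 and 32; their
    # channel counts are transition_channels * 16, * 32 and * 32.
    for stride, multiplier in ((8, 16), (16, 32), (32, 32)):
        side = input_size // stride
        shapes.append((side, side, transition_channels * multiplier))
    return shapes


print(feature_shapes())
```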

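The FPN structure fuses features at different scales, making full use of the feature information extracted by the Backbone.

The Neck consists of SPPCSPC and ELAN-PAN.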

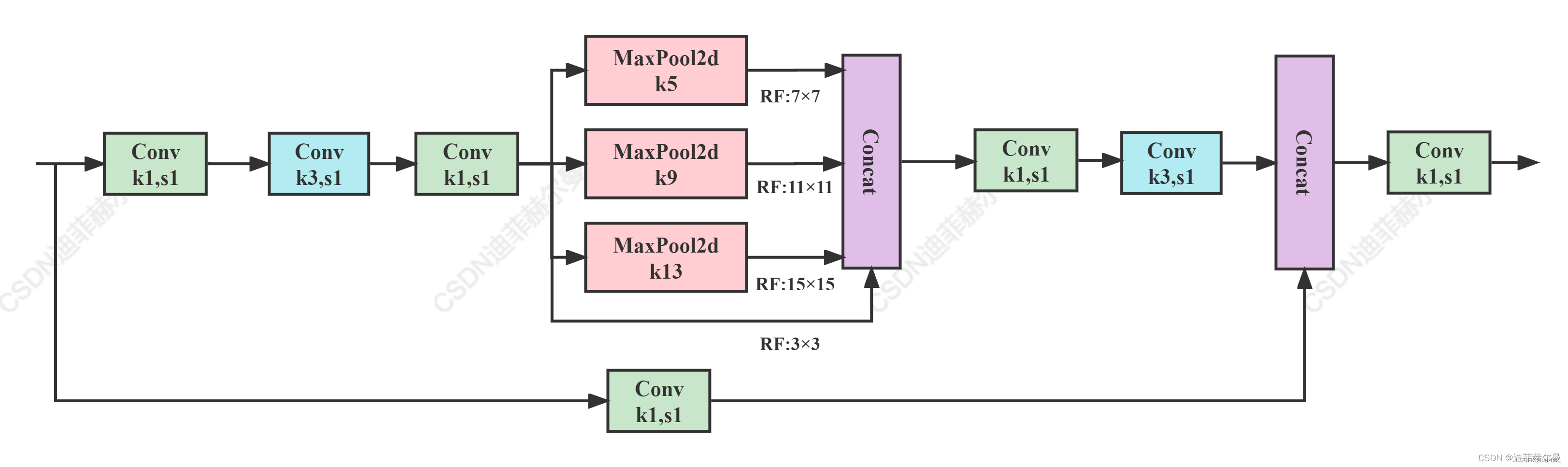

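1. The lowest-level feature has size 20x20x1024. It passes through SPPCSPC for feature extraction; this structure enlarges YOLOv7's receptive field;

The above is the SPPCSPC structure, which further enriches the feature information;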
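SPPCSPC can widen the receptive field without shrinking the feature map because every max pooling layer uses stride 1 and padding `k // 2`. A minimal sketch of that arithmetic, using the kernel sizes (5, 9, 13) and the hidden-channel formula from the SPPCSPC code in section 2.2; the helper names are hypothetical.

```python
def pooled_size(size, kernel, stride=1):
    """Output size of max pooling with padding kernel // 2."""
    padding = kernel // 2
    return (size + 2 * padding - kernel) // stride + 1


def sppcspc_channels(c2, e=0.5, kernels=(5, 9, 13)):
    """Hidden channels and channels after concatenating the pooled maps."""
    c_ = int(2 * c2 * e)               # hidden channels, as in the code
    concat = (1 + len(kernels)) * c_   # x1 plus one pooled copy per kernel
    return c_, concat


print([pooled_size(20, k) for k in (5, 9, 13)])
print(sppcspc_channels(c2=512))
```

Every pooled map keeps the 20x20 size, so the four maps can be concatenated directly, giving `4 * c_` channels as the input to `cv5`.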

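4. Yolo Head

YOLOv7 uses a RepConv structure in front of the Yolo Head. During training it introduces a specially designed residual structure; at actual inference time, that structure is equivalent to a single 3x3 convolution;

The Rep module comes in two forms: one for training (train) and one for deployment (deploy).

The training module has three branches: the top branch is a 3x3 convolution for feature extraction; the middle branch is a 1x1 convolution for smoothing features; the bottom branch is a BN layer with no convolution (in the code in section 2.3 it exists only when the input and output channel counts match and the stride is 1). The outputs of the branches are added together.

The inference module contains a single 3x3 convolution with stride 1, obtained from the training module by re-parameterization.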
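Why the three training branches collapse into one 3x3 convolution can be checked numerically on a single channel. The sketch below is pure Python, not the PyTorch implementation: all weights and BN statistics are hypothetical numbers, BN is used in inference mode with fixed running statistics, and the activation is left out because it is applied after the sum in both forms.

```python
import math

EPS = 1e-3  # same eps as the BatchNorm2d layers in the RepConv code


def conv3x3(x, kernel, bias=0.0):
    """Single-channel 3x3 convolution, stride 1, zero padding 1."""
    h, w = len(x), len(x[0])
    out = [[bias for _ in range(w)] for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(3):
                for dj in range(3):
                    ii, jj = i + di - 1, j + dj - 1
                    if 0 <= ii < h and 0 <= jj < w:
                        out[i][j] += kernel[di][dj] * x[ii][jj]
    return out


def batch_norm(y, gamma, beta, mean, var):
    """Inference-mode batch norm with fixed running statistics."""
    std = math.sqrt(var + EPS)
    return [[gamma * (v - mean) / std + beta for v in row] for row in y]


def fold_bn(kernel, gamma, beta, mean, var):
    """Fold a BN layer into the bias-free conv before it: (kernel, bias)."""
    scale = gamma / math.sqrt(var + EPS)
    return [[k * scale for k in row] for row in kernel], beta - mean * scale


def centre_kernel(value):
    """Embed a 1x1 kernel (or the identity, value=1) in a 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, value, 0.0], [0.0, 0.0, 0.0]]


def add_maps(*maps):
    """Element-wise sum of equally sized 2D lists."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*maps)]


# Hypothetical weights and BN statistics (gamma, beta, mean, var) per branch.
k3 = [[0.2, -0.1, 0.4], [0.0, 0.5, -0.3], [0.1, 0.3, -0.2]]
k1 = 0.7
bn3, bn1, bn_id = (1.1, 0.2, 0.05, 0.9), (0.8, -0.1, 0.3, 1.5), (1.3, 0.4, -0.2, 0.6)
x = [[1.0, 2.0, 0.5], [-1.0, 0.0, 3.0], [2.5, -0.5, 1.5]]

# Training form: three branches, each ending in BN, then summed.
train_out = add_maps(
    batch_norm(conv3x3(x, k3), *bn3),
    batch_norm(conv3x3(x, centre_kernel(k1)), *bn1),
    batch_norm(x, *bn_id),
)

# Deploy form: one fused 3x3 kernel and one fused bias.
folded = [fold_bn(k3, *bn3), fold_bn(centre_kernel(k1), *bn1),
          fold_bn(centre_kernel(1.0), *bn_id)]
fused_kernel = add_maps(*[k for k, _ in folded])
fused_bias = sum(b for _, b in folded)
deploy_out = conv3x3(x, fused_kernel, fused_bias)

max_diff = max(abs(a - b) for ra, rb in zip(train_out, deploy_out)
               for a, b in zip(ra, rb))
print(max_diff)  # expected to be at floating-point rounding level
```

The same three steps (fold each BN into its convolution, pad the 1x1 kernel to 3x3, add kernels and biases) are what `get_equivalent_kernel_bias` does per channel in the RepConv code.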

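After the Neck (the FPN feature pyramid) we have three enhanced feature layers. Passing them through the Yolo Head gives three prediction tensors of shape (N, 20, 20, 255), (N, 40, 40, 255) and (N, 80, 80, 255), where N is the batch size and 255 is the channel count (PyTorch itself stores them channels-first, as (N, 255, H, W)).

The 255 channels of each output decompose into 3 x 85, that is, 85 parameters for each of the 3 anchor boxes (prior boxes).

The 85 parameters of an anchor box break down as 4 + 1 + 80: the 4 are the regression parameters of each feature point, used to obtain the adjusted predicted box; the 1 indicates whether the feature point contains an object; the 80 are used to determine the class of the object at each feature point (for an 80-class dataset such as COCO);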
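The 3 x (4 + 1 + 80) layout can be illustrated by slicing the channel vector of one feature point. This is a small sketch with a hypothetical helper `split_prediction`; it assumes the anchors are stored one after another along the channel axis, which is the layout implied by `len(anchors_mask[i]) * (5 + num_classes)` in the head code.

```python
def split_prediction(vector, num_anchors=3, num_classes=80):
    """Split one feature point's channel vector into per-anchor parts."""
    per_anchor = 4 + 1 + num_classes
    if len(vector) != num_anchors * per_anchor:
        raise ValueError("unexpected channel count")
    parts = []
    for a in range(num_anchors):
        chunk = vector[a * per_anchor:(a + 1) * per_anchor]
        parts.append({
            "box": chunk[:4],         # regression parameters
            "objectness": chunk[4],   # does this point contain an object?
            "classes": chunk[5:],     # one score per class
        })
    return parts


parts = split_prediction(list(range(255)))
print(parts[1]["box"], parts[1]["objectness"], len(parts[1]["classes"]))
```

With 20 classes the same function expects 3 x 25 = 75 channels, which matches the `(batch_size, 75, ...)` shapes in the code comments of section 2.4.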

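Calculation steps:

- Predict the centre point: use the first two regression values to compute the offset of the centre point;

- Predict the box width and height: use the last two regression values to compute the width and height of the predicted box;

- Draw the predicted boxes on the image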
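The first two steps can be sketched for a single anchor at a single grid cell. The text above does not give the formulas, so this sketch assumes the YOLOv5-style decoding (sigmoid, then scale and shift); the function name `decode_box` and the example grid cell, anchor size and stride are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(t, grid_x, grid_y, anchor_w, anchor_h, stride):
    """Turn 4 raw regression values into an (x1, y1, x2, y2) box in pixels.

    Assumed formulas (YOLOv5 style, not stated in the text above):
      centre = (2 * sigmoid(t_xy) - 0.5 + grid_xy) * stride
      size   = (2 * sigmoid(t_wh)) ** 2 * anchor_wh
    """
    tx, ty, tw, th = t
    # Step 1: centre point from the first two regression values.
    cx = (2.0 * sigmoid(tx) - 0.5 + grid_x) * stride
    cy = (2.0 * sigmoid(ty) - 0.5 + grid_y) * stride
    # Step 2: width and height from the last two regression values.
    w = (2.0 * sigmoid(tw)) ** 2 * anchor_w
    h = (2.0 * sigmoid(th)) ** 2 * anchor_h
    # Corner format, ready to be drawn on the image (step 3).
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# All-zero outputs put the centre in the middle of cell (3, 4) on the
# stride-32 map and leave the anchor size unchanged.
box = decode_box((0.0, 0.0, 0.0, 0.0), grid_x=3, grid_y=4,
                 anchor_w=100.0, anchor_h=50.0, stride=32)
print(box)
```

Under this assumption the centre can move at most 1.5 cells from the cell's top-left corner, and the box can be at most 4 times the anchor size. In practice the boxes are also filtered by score and non-maximum suppression before drawing, which this sketch leaves out.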

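Model re-parameterization:

Model re-parameterization merges multiple computational modules into one at the inference stage. It can be seen as an ensemble technique and falls into two categories: module-level ensemble and model-level ensemble. There are two common model-level re-parameterization practices for obtaining the final inference model: one is to train several identical models on different training data and then average the weights of the trained models; the other is to take a weighted average of the model weights at different iteration numbers.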
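Both model-level practices come down to averaging parameters that share the same name. The sketch below uses plain dictionaries of lists in place of real checkpoints, so it only illustrates the arithmetic; `average_weights` is a hypothetical helper, not a function from any library.

```python
def average_weights(state_dicts, coefficients=None):
    """Weighted average of several models' parameters.

    state_dicts: list of {parameter name: list of floats}, same keys/shapes.
    coefficients: one weight per model; uniform if omitted.
    """
    if coefficients is None:
        coefficients = [1.0] * len(state_dicts)
    if len(coefficients) != len(state_dicts):
        raise ValueError("need one coefficient per model")
    total = sum(coefficients)
    averaged = {}
    for name, values in state_dicts[0].items():
        averaged[name] = [
            sum(c * sd[name][i] for c, sd in zip(coefficients, state_dicts)) / total
            for i in range(len(values))
        ]
    return averaged


model_a = {"conv.weight": [1.0, 2.0]}
model_b = {"conv.weight": [3.0, 6.0]}

# Practice 1: plain average of models trained on different data.
print(average_weights([model_a, model_b]))
# Practice 2: weighted average of checkpoints from different iterations.
print(average_weights([model_a, model_b], coefficients=[1.0, 3.0]))
```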

II. Usage steps

1. Import libraries

The code is as follows (example):

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

2. Module code

2.1 backbone

import torch
import torch.nn as nn


def autopad(k, p=None):
    # "Same" padding: half the kernel size unless p is given explicitly
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


class SiLU(nn.Module):
    @staticmethod
    def forward(x):
        return x * torch.sigmoid(x)


class Conv(nn.Module):
    # Conv2d + BatchNorm2d + activation (SiLU by default)
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=SiLU()):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Conv, self).__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)
        self.bn = nn.BatchNorm2d(c2, eps=0.001, momentum=0.03)
        self.act = nn.LeakyReLU(0.1, inplace=True) if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after the BN layer has been fused into the conv
        return self.act(self.conv(x))


class Multi_Concat_Block(nn.Module):
    def __init__(self, c1, c2, c3, n=4, e=1, ids=[0]):
        super(Multi_Concat_Block, self).__init__()
        c_ = int(c2 * e)

        self.ids = ids
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = nn.ModuleList(
            [Conv(c_ if i == 0 else c2, c2, 3, 1) for i in range(n)]
        )
        self.cv4 = Conv(c_ * 2 + c2 * (len(ids) - 2), c3, 1, 1)

    def forward(self, x):
        x_1 = self.cv1(x)
        x_2 = self.cv2(x)

        x_all = [x_1, x_2]
        # [-1, -3, -5, -6] => [5, 3, 1, 0]
        for i in range(len(self.cv3)):
            x_2 = self.cv3[i](x_2)
            x_all.append(x_2)

        out = self.cv4(torch.cat([x_all[id] for id in self.ids], 1))
        return out


class MP(nn.Module):
    def __init__(self, k=2):
        super(MP, self).__init__()
        self.m = nn.MaxPool2d(kernel_size=k, stride=k)

    def forward(self, x):
        return self.m(x)


class Transition_Block(nn.Module):
    def __init__(self, c1, c2):
        super(Transition_Block, self).__init__()
        self.cv1 = Conv(c1, c2, 1, 1)
        self.cv2 = Conv(c1, c2, 1, 1)
        self.cv3 = Conv(c2, c2, 3, 2)

        self.mp = MP()

    def forward(self, x):
        # 160, 160, 256 => 80, 80, 256 => 80, 80, 128
        x_1 = self.mp(x)
        x_1 = self.cv1(x_1)

        # 160, 160, 256 => 160, 160, 128 => 80, 80, 128
        x_2 = self.cv2(x)
        x_2 = self.cv3(x_2)

        # 80, 80, 128 cat 80, 80, 128 => 80, 80, 256
        return torch.cat([x_2, x_1], 1)


class Backbone(nn.Module):
    def __init__(self, transition_channels, block_channels, n, phi, pretrained=False):
        super().__init__()
        # The input image is 640, 640, 3
        ids = {
            'l' : [-1, -3, -5, -6],
            'x' : [-1, -3, -5, -7, -8],
        }[phi]
        # 640, 640, 3 => 640, 640, 32 => 320, 320, 64
        self.stem = nn.Sequential(
            Conv(3, transition_channels, 3, 1),
            Conv(transition_channels, transition_channels * 2, 3, 2),
            Conv(transition_channels * 2, transition_channels * 2, 3, 1),
        )
        # 320, 320, 64 => 160, 160, 128 => 160, 160, 256
        self.dark2 = nn.Sequential(
            Conv(transition_channels * 2, transition_channels * 4, 3, 2),
            Multi_Concat_Block(transition_channels * 4, block_channels * 2, transition_channels * 8, n=n, ids=ids),
        )
        # 160, 160, 256 => 80, 80, 256 => 80, 80, 512
        self.dark3 = nn.Sequential(
            Transition_Block(transition_channels * 8, transition_channels * 4),
            Multi_Concat_Block(transition_channels * 8, block_channels * 4, transition_channels * 16, n=n, ids=ids),
        )
        # 80, 80, 512 => 40, 40, 512 => 40, 40, 1024
        self.dark4 = nn.Sequential(
            Transition_Block(transition_channels * 16, transition_channels * 8),
            Multi_Concat_Block(transition_channels * 16, block_channels * 8, transition_channels * 32, n=n, ids=ids),
        )
        # 40, 40, 1024 => 20, 20, 1024 => 20, 20, 1024
        self.dark5 = nn.Sequential(
            Transition_Block(transition_channels * 32, transition_channels * 16),
            Multi_Concat_Block(transition_channels * 32, block_channels * 8, transition_channels * 32, n=n, ids=ids),
        )

        if pretrained:
            url = {
                "l" : 'https://github.com/bubbliiiing/yolov7-pytorch/releases/download/v1.0/yolov7_backbone_weights.pth',
                "x" : 'https://github.com/bubbliiiing/yolov7-pytorch/releases/download/v1.0/yolov7_x_backbone_weights.pth',
            }[phi]
            checkpoint = torch.hub.load_state_dict_from_url(url=url, map_location="cpu", model_dir="./model_data")
            self.load_state_dict(checkpoint, strict=False)
            print("Load weights from " + url.split('/')[-1])

    def forward(self, x):
        x = self.stem(x)
        x = self.dark2(x)
        # dark3 outputs 80, 80, 512: an effective feature layer
        x = self.dark3(x)
        feat1 = x
        # dark4 outputs 40, 40, 1024: an effective feature layer
        x = self.dark4(x)
        feat2 = x
        # dark5 outputs 20, 20, 1024: an effective feature layer
        x = self.dark5(x)
        feat3 = x
        return feat1, feat2, feat3

2.2 SPPCSPC

class SPPCSPC(nn.Module):
    # CSP https://github.com/WongKinYiu/CrossStagePartialNetworks
    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):
        super(SPPCSPC, self).__init__()
        c_ = int(2 * c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(c_, c_, 3, 1)
        self.cv4 = Conv(c_, c_, 1, 1)
        # Stride-1 max pooling with padding x // 2 keeps the spatial size
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])
        self.cv5 = Conv(4 * c_, c_, 1, 1)
        self.cv6 = Conv(c_, c_, 3, 1)
        # The output channel count is c2
        self.cv7 = Conv(2 * c_, c2, 1, 1)

    def forward(self, x):
        x1 = self.cv4(self.cv3(self.cv1(x)))
        y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))
        y2 = self.cv2(x)
        return self.cv7(torch.cat((y1, y2), dim=1))

2.3 Head

import numpy as np


class RepConv(nn.Module):
    # Represented convolution
    # https://arxiv.org/abs/2101.03697
    def __init__(self, c1, c2, k=3, s=1, p=None, g=1, act=SiLU(), deploy=False):
        super(RepConv, self).__init__()
        self.deploy = deploy
        self.groups = g
        self.in_channels = c1
        self.out_channels = c2

        assert k == 3
        assert autopad(k, p) == 1

        padding_11 = autopad(k, p) - k // 2
        self.act = nn.LeakyReLU(0.1, inplace=True) if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

        if deploy:
            # Inference form: a single 3x3 conv with bias
            self.rbr_reparam = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=True)
        else:
            # Training form: BN-only identity branch (when shapes allow), 3x3 branch, 1x1 branch
            self.rbr_identity = (nn.BatchNorm2d(num_features=c1, eps=0.001, momentum=0.03) if c2 == c1 and s == 1 else None)
            self.rbr_dense = nn.Sequential(
                nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False),
                nn.BatchNorm2d(num_features=c2, eps=0.001, momentum=0.03),
            )
            self.rbr_1x1 = nn.Sequential(
                nn.Conv2d(c1, c2, 1, s, padding_11, groups=g, bias=False),
                nn.BatchNorm2d(num_features=c2, eps=0.001, momentum=0.03),
            )

    def forward(self, inputs):
        if hasattr(self, "rbr_reparam"):
            return self.act(self.rbr_reparam(inputs))
        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)
        return self.act(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

    def get_equivalent_kernel_bias(self):
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
        return (
            kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid,
            bias3x3 + bias1x1 + biasid,
        )

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return nn.functional.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        if branch is None:
            return 0, 0
        if isinstance(branch, nn.Sequential):
            kernel = branch[0].weight
            running_mean = branch[1].running_mean
            running_var = branch[1].running_var
            gamma = branch[1].weight
            beta = branch[1].bias
            eps = branch[1].eps
        else:
            assert isinstance(branch, nn.BatchNorm2d)
            if not hasattr(self, "id_tensor"):
                # Identity expressed as a 3x3 kernel with a 1 at the centre
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def repvgg_convert(self):
        kernel, bias = self.get_equivalent_kernel_bias()
        return (
            kernel.detach().cpu().numpy(),
            bias.detach().cpu().numpy(),
        )

    def fuse_conv_bn(self, conv, bn):
        std = (bn.running_var + bn.eps).sqrt()
        bias = bn.bias - bn.running_mean * bn.weight / std

        t = (bn.weight / std).reshape(-1, 1, 1, 1)
        weights = conv.weight * t

        bn = nn.Identity()
        conv = nn.Conv2d(in_channels=conv.in_channels,
                         out_channels=conv.out_channels,
                         kernel_size=conv.kernel_size,
                         stride=conv.stride,
                         padding=conv.padding,
                         dilation=conv.dilation,
                         groups=conv.groups,
                         bias=True,
                         padding_mode=conv.padding_mode)

        conv.weight = torch.nn.Parameter(weights)
        conv.bias = torch.nn.Parameter(bias)
        return conv

    def fuse_repvgg_block(self):
        if self.deploy:
            return
        print("RepConv.fuse_repvgg_block")
        self.rbr_dense = self.fuse_conv_bn(self.rbr_dense[0], self.rbr_dense[1])

        self.rbr_1x1 = self.fuse_conv_bn(self.rbr_1x1[0], self.rbr_1x1[1])
        rbr_1x1_bias = self.rbr_1x1.bias
        weight_1x1_expanded = torch.nn.functional.pad(self.rbr_1x1.weight, [1, 1, 1, 1])

        # Fuse self.rbr_identity
        if (isinstance(self.rbr_identity, nn.BatchNorm2d) or isinstance(self.rbr_identity, nn.modules.batchnorm.SyncBatchNorm)):
            identity_conv_1x1 = nn.Conv2d(
                in_channels=self.in_channels,
                out_channels=self.out_channels,
                kernel_size=1,
                stride=1,
                padding=0,
                groups=self.groups,
                bias=False)
            identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.to(self.rbr_1x1.weight.data.device)
            identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.squeeze().squeeze()
            identity_conv_1x1.weight.data.fill_(0.0)
            identity_conv_1x1.weight.data.fill_diagonal_(1.0)
            identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.unsqueeze(2).unsqueeze(3)

            identity_conv_1x1 = self.fuse_conv_bn(identity_conv_1x1, self.rbr_identity)
            bias_identity_expanded = identity_conv_1x1.bias
            weight_identity_expanded = torch.nn.functional.pad(identity_conv_1x1.weight, [1, 1, 1, 1])
        else:
            bias_identity_expanded = torch.nn.Parameter(torch.zeros_like(rbr_1x1_bias))
            weight_identity_expanded = torch.nn.Parameter(torch.zeros_like(weight_1x1_expanded))

        self.rbr_dense.weight = torch.nn.Parameter(self.rbr_dense.weight + weight_1x1_expanded + weight_identity_expanded)
        self.rbr_dense.bias = torch.nn.Parameter(self.rbr_dense.bias + rbr_1x1_bias + bias_identity_expanded)

        self.rbr_reparam = self.rbr_dense
        self.deploy = True

        if self.rbr_identity is not None:
            del self.rbr_identity
            self.rbr_identity = None

        if self.rbr_1x1 is not None:
            del self.rbr_1x1
            self.rbr_1x1 = None

        if self.rbr_dense is not None:
            del self.rbr_dense
            self.rbr_dense = None


def fuse_conv_and_bn(conv, bn):
    # Fold a BatchNorm2d layer into the preceding Conv2d for inference
    fusedconv = nn.Conv2d(conv.in_channels,
                          conv.out_channels,
                          kernel_size=conv.kernel_size,
                          stride=conv.stride,
                          padding=conv.padding,
                          groups=conv.groups,
                          bias=True).requires_grad_(False).to(conv.weight.device)

    w_conv = conv.weight.clone().view(conv.out_channels, -1)
    w_bn = torch.diag(bn.weight.div(torch.sqrt(bn.eps + bn.running_var)))
    # fusedconv.weight.copy_(torch.mm(w_bn, w_conv).view(fusedconv.weight.shape))
    fusedconv.weight.copy_(torch.mm(w_bn, w_conv).view(fusedconv.weight.shape).detach())

    b_conv = torch.zeros(conv.weight.size(0), device=conv.weight.device) if conv.bias is None else conv.bias
    b_bn = bn.bias - bn.weight.mul(bn.running_mean).div(torch.sqrt(bn.running_var + bn.eps))
    # fusedconv.bias.copy_(torch.mm(w_bn, b_conv.reshape(-1, 1)).reshape(-1) + b_bn)
    fusedconv.bias.copy_((torch.mm(w_bn, b_conv.reshape(-1, 1)).reshape(-1) + b_bn).detach())
    return fusedconv

2.4 YOLO body

class YoloBody(nn.Module):
    def __init__(self, anchors_mask, num_classes, phi, pretrained=False):
        super(YoloBody, self).__init__()
        #-----------------------------------------------#
        #   Parameters for the different YOLOv7 versions
        #-----------------------------------------------#
        transition_channels = {'l' : 32, 'x' : 40}[phi]
        block_channels = 32
        panet_channels = {'l' : 32, 'x' : 64}[phi]
        e = {'l' : 2, 'x' : 1}[phi]
        n = {'l' : 4, 'x' : 6}[phi]
        ids = {'l' : [-1, -2, -3, -4, -5, -6], 'x' : [-1, -3, -5, -7, -8]}[phi]
        conv = {'l' : RepConv, 'x' : Conv}[phi]
        #-----------------------------------------------#
        #   The input image is 640, 640, 3
        #-----------------------------------------------#

        #---------------------------------------------------#
        #   Build the backbone.
        #   It yields three effective feature layers with shapes:
        #   80, 80, 512
        #   40, 40, 1024
        #   20, 20, 1024
        #---------------------------------------------------#
        self.backbone = Backbone(transition_channels, block_channels, n, phi, pretrained=pretrained)

        #------------------------ Enhanced feature extraction network ------------------------#
        self.upsample = nn.Upsample(scale_factor=2, mode="nearest")

        # 20, 20, 1024 => 20, 20, 512
        self.sppcspc = SPPCSPC(transition_channels * 32, transition_channels * 16)
        # 20, 20, 512 => 20, 20, 256 => 40, 40, 256
        self.conv_for_P5 = Conv(transition_channels * 16, transition_channels * 8)
        # 40, 40, 1024 => 40, 40, 256
        self.conv_for_feat2 = Conv(transition_channels * 32, transition_channels * 8)
        # 40, 40, 512 => 40, 40, 256
        self.conv3_for_upsample1 = Multi_Concat_Block(transition_channels * 16, panet_channels * 4, transition_channels * 8, e=e, n=n, ids=ids)

        # 40, 40, 256 => 40, 40, 128 => 80, 80, 128
        self.conv_for_P4 = Conv(transition_channels * 8, transition_channels * 4)
        # 80, 80, 512 => 80, 80, 128
        self.conv_for_feat1 = Conv(transition_channels * 16, transition_channels * 4)
        # 80, 80, 256 => 80, 80, 128
        self.conv3_for_upsample2 = Multi_Concat_Block(transition_channels * 8, panet_channels * 2, transition_channels * 4, e=e, n=n, ids=ids)

        # 80, 80, 128 => 40, 40, 256
        self.down_sample1 = Transition_Block(transition_channels * 4, transition_channels * 4)
        # 40, 40, 512 => 40, 40, 256
        self.conv3_for_downsample1 = Multi_Concat_Block(transition_channels * 16, panet_channels * 4, transition_channels * 8, e=e, n=n, ids=ids)

        # 40, 40, 256 => 20, 20, 512
        self.down_sample2 = Transition_Block(transition_channels * 8, transition_channels * 8)
        # 20, 20, 1024 => 20, 20, 512
        self.conv3_for_downsample2 = Multi_Concat_Block(transition_channels * 32, panet_channels * 8, transition_channels * 16, e=e, n=n, ids=ids)
        #------------------------ Enhanced feature extraction network ------------------------#

        # 80, 80, 128 => 80, 80, 256
        self.rep_conv_1 = conv(transition_channels * 4, transition_channels * 8, 3, 1)
        # 40, 40, 256 => 40, 40, 512
        self.rep_conv_2 = conv(transition_channels * 8, transition_channels * 16, 3, 1)
        # 20, 20, 512 => 20, 20, 1024
        self.rep_conv_3 = conv(transition_channels * 16, transition_channels * 32, 3, 1)

        # Channels per anchor: 4 + 1 + num_classes
        # 80, 80, 256 => 80, 80, 3 * 25 (4 + 1 + 20 classes) or 3 * 85 (4 + 1 + 80 classes)
        self.yolo_head_P3 = nn.Conv2d(transition_channels * 8, len(anchors_mask[2]) * (5 + num_classes), 1)
        # 40, 40, 512 => 40, 40, 3 * 25 or 3 * 85
        self.yolo_head_P4 = nn.Conv2d(transition_channels * 16, len(anchors_mask[1]) * (5 + num_classes), 1)
        # 20, 20, 1024 => 20, 20, 3 * 25 or 3 * 85
        self.yolo_head_P5 = nn.Conv2d(transition_channels * 32, len(anchors_mask[0]) * (5 + num_classes), 1)

    def fuse(self):
        # Switch every RepConv to its deploy form and fold BN into every Conv
        print('Fusing layers... ')
        for m in self.modules():
            if isinstance(m, RepConv):
                m.fuse_repvgg_block()
            elif type(m) is Conv and hasattr(m, 'bn'):
                m.conv = fuse_conv_and_bn(m.conv, m.bn)
                delattr(m, 'bn')
                m.forward = m.fuseforward
        return self

    def forward(self, x):
        # backbone
        feat1, feat2, feat3 = self.backbone.forward(x)

        #------------------------ Enhanced feature extraction network ------------------------#
        # 20, 20, 1024 => 20, 20, 512
        P5 = self.sppcspc(feat3)
        # 20, 20, 512 => 20, 20, 256
        P5_conv = self.conv_for_P5(P5)
        # 20, 20, 256 => 40, 40, 256
        P5_upsample = self.upsample(P5_conv)
        # 40, 40, 256 cat 40, 40, 256 => 40, 40, 512
        P4 = torch.cat([self.conv_for_feat2(feat2), P5_upsample], 1)
        # 40, 40, 512 => 40, 40, 256
        P4 = self.conv3_for_upsample1(P4)

        # 40, 40, 256 => 40, 40, 128
        P4_conv = self.conv_for_P4(P4)
        # 40, 40, 128 => 80, 80, 128
        P4_upsample = self.upsample(P4_conv)
        # 80, 80, 128 cat 80, 80, 128 => 80, 80, 256
        P3 = torch.cat([self.conv_for_feat1(feat1), P4_upsample], 1)
        # 80, 80, 256 => 80, 80, 128
        P3 = self.conv3_for_upsample2(P3)

        # 80, 80, 128 => 40, 40, 256
        P3_downsample = self.down_sample1(P3)
        # 40, 40, 256 cat 40, 40, 256 => 40, 40, 512
        P4 = torch.cat([P3_downsample, P4], 1)
        # 40, 40, 512 => 40, 40, 256
        P4 = self.conv3_for_downsample1(P4)

        # 40, 40, 256 => 20, 20, 512
        P4_downsample = self.down_sample2(P4)
        # 20, 20, 512 cat 20, 20, 512 => 20, 20, 1024
        P5 = torch.cat([P4_downsample, P5], 1)
        # 20, 20, 1024 => 20, 20, 512
        P5 = self.conv3_for_downsample2(P5)
        #------------------------ Enhanced feature extraction network ------------------------#
        # P3 80, 80, 128
        # P4 40, 40, 256
        # P5 20, 20, 512

        P3 = self.rep_conv_1(P3)
        P4 = self.rep_conv_2(P4)
        P5 = self.rep_conv_3(P5)
        #---------------------------------------------------#
        #   Third feature layer
        #   y3=(batch_size, 75, 80, 80)
        #---------------------------------------------------#
        out2 = self.yolo_head_P3(P3)
        #---------------------------------------------------#
        #   Second feature layer
        #   y2=(batch_size, 75, 40, 40)
        #---------------------------------------------------#
        out1 = self.yolo_head_P4(P4)
        #---------------------------------------------------#
        #   First feature layer
        #   y1=(batch_size, 75, 20, 20)
        #---------------------------------------------------#
        out0 = self.yolo_head_P5(P5)

        return [out0, out1, out2]