Redis vs Memcached

from http://blog.haohtml.com/archives/9991

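When we start using a new product, one thing we always do is run performance tests. But do you really know how to do performance testing? Saying this takes me back to my early days; I'm sure I disappointed my manager a bit back then.

Back to the topic: today's subject is a debate about Redis performance testing.

It all started with an article called "Redis vs Memcached". After hearing about Redis, its author ran a set of performance comparisons between Redis and Memcached, and Redis lost across the board. However, the test methodology sparked considerable controversy, and the very first reply under the post came from antirez, the author of Redis. antirez graciously thanked him for the results and then pointed out the problems with his tests.

Later, in a blog post (On Redis, Memcached, Speed, Benchmarks and The Toilet), antirez shared some lessons about performance testing and ran his own comparison of Redis and Memcached. The results were the opposite of the above: Redis won every test. The post reads like a tutorial telling people to stop running benchmarks they don't really understand.

Two days after that post, however, antirez published another one (An update on the Memcached/Redis benchmark) that added to what he had written in the previous article.

And as Alex Popescu put it, a performance test must be based on your own actual situation: testing with your own business data is the only truly reliable approach.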

MongoDB vs Redis vs Tokyo Tyrant:http://blog.haohtml.com/archives/9995

----------------------------------------------

antirez weblog

On Redis, Memcached, Speed, Benchmarks and The Toilet

Tuesday, 21 September 10

The internet is full of things: trolls, awesome programming threads, p0rn, proofs of how cool and creative human beings can be, and of course crappy benchmarks. In this blog post I want to focus my attention on the latter, and at the same time I'll try to show what a good benchmarking methodology should look like, at least from my point of view.

Why does speed matter?

In the Web Scale era everybody is much more obsessed with scalability than with speed. That is, if I have something that can handle a (not better defined) load of L, I want it to handle a load of L*N if I use N instances of this thing. Automagically, fault-tolerantly, bulletproofly.

Stressing scalability so much has somewhat overshadowed what in the '80s was one of the most interesting measures of software coolness: raw speed. I mean old-fashioned speed, that is, how many operations can this single thing perform? How many triangles can you draw in a second? How many queries can you answer? How many square roots can you compute?

Back in the early days of home computing this was very important, since CPU power was a limited resource. Now computers are way, way faster, but is speed still important, or should we just care about scalability, given that we can handle any load by simply adding more nodes? My bet is that raw speed is very important, and here are a few arguments:

- Raw speed is bound to queries per watt. Energy is a serious problem, not just for the environment but also cost-wise: the more computers you run to serve your 1000 requests per second, the bigger your monthly bill.

- Large systems are hard to handle, and hardware is not free. If I can handle the same workload with 10 servers instead of 100, everything will be cheaper and simpler to manage.

- 95% of all web innovation today is driven by startups. Startups start very, very small, and they don't have the budget for large, powerful computers. A software system that can handle a reasonable amount of load on a cheap Linux box is going to win a lot of acceptance in the most fertile environment for new technology toys: startups.

- Scalable systems often work better with fewer nodes, so even when you have the ability to scale, it's better to run a 10-node cluster than a 100-node cluster. So each single node of a scalable system should be as fast as possible.
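The 10-versus-100-servers argument comes down to simple arithmetic. Below is a minimal sketch in C. It assumes perfectly linear scaling and uses purely illustrative load figures; neither comes from the post. The function name `nodes_needed` is hypothetical.

```c
/* Back-of-the-envelope capacity estimate (illustrative only).
 * Assumes each node sustains per_node_rps requests/sec and that the
 * cluster scales perfectly linearly, which real systems rarely do. */
static unsigned long nodes_needed(unsigned long load_rps, unsigned long per_node_rps)
{
    /* Ceiling division: a partially loaded node still has to be bought and powered. */
    return (load_rps + per_node_rps - 1) / per_node_rps;
}
```

Take a hypothetical load of 100,000 requests/sec. Nodes that each handle 1,000 requests/sec give `nodes_needed(100000, 1000)` = 100 machines. A node ten times faster brings that down to 10.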

Systems for the toilet

Apparently I'm not alone in reconsidering the importance of raw speed. The sys/toilet blog published a benchmark comparing the raw speed of Redis and Memcached. The author of this blog firmly believes that many open source programs (and not only open source ones) belong in the toilet rather than in real systems, since they are not good. I couldn't agree more with this sentiment. Unfortunately, I also think that our dude is part of the problem, since running crappy benchmarks is not going to help our pop culture.

You should check it yourself by reading the original post, but this is why the sys/toilet benchmark is ill-conceived:

- All the tests are run using a single client in a busy loop. I love '80s busy loops, but... well, let's be serious. I left a comment on the blog about this problem, and the author replied that in the real world things are different and threading is hard. Sorry, but I was not thinking about multithreaded programming. A DB under load receives many concurrent queries from different instances of the web server(s); these can be threads, but can just as easily be separate Apache processes. So your web dude does not need to start writing concurrent software to have N queries hitting your DB at the same time. If your DB is under serious load, you can bet that you are serving many requests at the same time. Sorry if this is obvious to most readers.

- Not only that: when you run single-client benchmarks, what you are actually measuring also includes the round-trip time between the client and the server, all the other kinds of latency involved, and of course the speed of the client library implementation.

- The test was performed with very different client libraries. For instance, libmemcached accepted a buffer pointer and a length as input, while credis, the library used in that benchmark, was calling strlen() for every key. So in one benchmark a table of precomputed lengths was passed to the library, while in the other the library was calling strlen() itself. This is just one example. There are much bigger parsing, buffering, and other implementation details affecting the outcome, especially with a single-client approach, which is perfect for measuring these things instead of the actual server performance.
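A simple closed-loop model shows why a single client in a busy loop mostly measures latency. This is a sketch under stated assumptions, not a model taken from the post. Both the fixed round-trip time and the hard server-side ceiling below are hypothetical numbers.

```c
/* Closed-loop model (an assumption, not a measurement): each client sends a
 * request, waits for the reply, then sends the next one. One client can
 * therefore complete at most 1 / rtt_sec requests per second no matter how
 * fast the server is; N clients can offer N / rtt_sec until the server's own
 * ceiling (server_max_ops, hypothetical) is reached. */
static double model_ops_per_sec(int clients, double rtt_sec, double server_max_ops)
{
    double offered = (double)clients / rtt_sec;   /* what the clients can push */
    return offered < server_max_ops ? offered : server_max_ops;
}
```

Assume a 0.1 ms round trip. One client then tops out at about 10,000 requests/sec, whatever the server can do. Fifty clients would offer 500,000 requests/sec and run into the assumed 100,000 requests/sec server ceiling instead. Only the second situation tells you something about the server.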

I checked the implementation more closely and found a problem in the query parsing code: a misplaced strchr() call turned it into a quadratic algorithm. The problem is now fixed in this branch, which is what I'm using to run the benchmarks and which will be merged into Redis master within days. After this fix, large requests are parsed five times faster than before.
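The actual Redis fix is not reproduced here, but the general failure mode is easy to illustrate. If each line search restarts from the beginning of the buffer instead of from the current parse position, total work grows quadratically with request size. The toy C sketch below counts how many bytes each strategy touches. The function `split_lines` and its `restart_from_zero` flag are hypothetical.

```c
#include <stddef.h>

/* Toy illustration, not the Redis parser: count '\n'-terminated lines in
 * buf[0..len) and report in *touched how many bytes the searches examined.
 * restart_from_zero = 1 mimics a misplaced search that starts at the
 * beginning of the buffer for every line instead of at the parse position. */
static size_t split_lines(const char *buf, size_t len, int restart_from_zero,
                          size_t *touched)
{
    size_t lines = 0, pos = 0;
    *touched = 0;
    while (pos < len) {
        size_t i = restart_from_zero ? 0 : pos;
        /* advance until a newline at or after the current parse position */
        while (i < len && !(i >= pos && buf[i] == '\n')) {
            i++;
            (*touched)++;
        }
        if (i == len) break;        /* no complete line left */
        (*touched)++;               /* count the '\n' itself */
        lines++;
        pos = i + 1;                /* next line starts after the newline */
    }
    return lines;
}
```

For `"a\nb\nc\n"` the forward-only scan touches 6 bytes and the restarting scan touches 12. With n lines, the restarting version touches on the order of n² bytes.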

But at this point I started to be curious...

Redis VS Memcached

Even though Redis provides many more features than memcached, including persistence, complex data types, replication, and so forth, it's fair to say that it is an almost strict superset of memcached. There are GET and SET operations, timeouts, a networking layer, check-and-set operations, and so forth. These two systems are very, very similar in their essence, so comparing the two is definitely an apples-to-apples comparison.

Not only that, but memcached is truly mature software that has undergone a lot of real-world stress testing and changes to work well in practice. It's definitely a good yardstick for Redis. Given how similar the two systems are, a huge discrepancy most likely points to a bug somewhere, either in Redis or in memcached. And this was actually the case with MGET! I fixed a bug thanks to comparing the numbers with memcached.

Apple to Apple, with the same mouth

But even comparing apples to apples is not trivial. You really need the very same semantics on the client side, sensible choices about the testing environment, the number of simulated clients, and so forth.

In Redis land we already have redis-benchmark, which has helped us over the last year and a half and has worked as a great advertising tool: we were able to show that our system was performing well.

Memcached and Redis protocols are not so different, both are simple ASCII protocols with similar formats. So I used a few hours of my time to port redis-benchmark to the memcached protocol. The result is mc-benchmark.

If you compare redis-benchmark and mc-benchmark with diff, you'll notice that apart from a lot of code removed since memcached does not support many of the operations that redis-benchmark was running against Redis, the only real difference in the source code is the following:

```diff
@@ -213,8 +206,15 @@
     char *tail = strstr(p,"\r\n");
     if (tail == NULL)
         return 0;
-    *tail = '\0';
-    *len = atoi(p+1);
+
+    if (p[0] == 'E') { /* END -- key not present */
+        *len = -1;
+    } else {
+        char *x = tail;
+        while (*x != ' ') x--;
+        *tail = '\0';
+        *len = atoi(x)+5; /* We add 5 bytes for the final "END\r\n" */
+    }
     return tail+2-p;
 }
```

Basically, in the memcached protocol the payload length is one of the space-separated arguments, while in the Redis protocol it is at a fixed offset (there is just a "$" character before it). So I had to add a short while loop (which will scan just two bytes in our actual benchmark) and an explicit, very fast test for the "END" condition of the memcached protocol. Note that mc-benchmark and redis-benchmark are designed to skip the reply, so the actual processing is the absolute minimum required to read it. Also note that the few lines of code added to turn redis-benchmark into mc-benchmark are inherently required by the memcached protocol design. This means that we have an apples-to-apples comparison, and with the same mouth!
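The header difference can be written out as two small parsers. This is a hedged sketch, not the code of redis-benchmark or mc-benchmark. It assumes a NUL-terminated buffer holding at least the first reply line. Unlike the diff, it returns only the payload length and does not add the 5 bytes of the trailing "END\r\n". The return-code names are hypothetical.

```c
#include <stdlib.h>
#include <string.h>

/* Return codes used by this sketch (hypothetical convention). */
#define REPLY_MISS       (-1L)  /* key not found */
#define REPLY_INCOMPLETE (-2L)  /* header line not fully received yet */
#define REPLY_MALFORMED  (-3L)  /* no length field where one was expected */

/* Redis bulk reply: "$<len>\r\n<data>\r\n", or "$-1\r\n" for nil.
 * Assumes buf[0] == '$'; the length starts at a fixed offset after it. */
static long redis_bulk_len(const char *buf)
{
    if (strstr(buf, "\r\n") == NULL) return REPLY_INCOMPLETE;
    long len = strtol(buf + 1, NULL, 10);
    return len < 0 ? REPLY_MISS : len;
}

/* memcached get reply: "VALUE <key> <flags> <bytes>\r\n<data>\r\nEND\r\n",
 * or just "END\r\n" on a miss. The length is the last space-separated
 * field, so walk backwards from the CRLF to the preceding space. */
static long memcached_value_len(const char *buf)
{
    const char *tail = strstr(buf, "\r\n");
    if (tail == NULL) return REPLY_INCOMPLETE;
    if (buf[0] == 'E') return REPLY_MISS;      /* "END": key not present */
    const char *x = tail;
    while (x > buf && *x != ' ') x--;           /* walks back over the length digits */
    if (*x != ' ') return REPLY_MALFORMED;
    return strtol(x + 1, NULL, 10);
}
```

The Redis side reads the number right after a fixed one-byte prefix. The memcached side needs the short backward scan plus the `END` check, which mirrors what the diff adds.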

Methodology

The following is the methodology used for this test:

- I used Redis master (specifically the "betterparsing" branch) to run the test. The results should be pretty similar to what you'll get with Redis 2.0, excluding the MGET test, which I'm leaving out for now since it's not currently part of redis-benchmark.

- I used the latest stable memcached release, that is, memcached-1.4.5.

- Both systems were compiled with the same GCC and the same optimization level (-O2).

- I disabled persistence in Redis to avoid the overhead of the forking child during the benchmark.

- I ran memcached with -m 5000 to avoid any eviction of old keys. This way both Redis and memcached kept the whole dataset in memory.

- The test was performed on real hardware, an oldish Linux box that was otherwise completely idle.

- Every test was run three times, and the highest figure obtained was used.

- The payload size was 32 bytes. Sizes of 64, 128, and 1024 bytes were also tested without big differences in the results. Once you go beyond the bounds of the MTU, performance degrades a lot in both systems.

```bash
#!/bin/bash

# BIN=./redis-benchmark
BIN=./mc-benchmark
payload=32
iterations=100000
keyspace=100000

for clients in 1 5 10 20 30 40 50 60 70 80 90 100 200 300
do
    SPEED=0
    for dummy in 0 1 2
    do
        S=$($BIN -n $iterations -r $keyspace -d $payload -c $clients | grep 'per second' | tail -1 | cut -f 1 -d'.')
        if [ $(($S > $SPEED)) != "0" ]
        then
            SPEED=$S
        fi
    done
    echo "$clients $SPEED"
done
```

Sorry, the comparison with != "0" is surely lame, but I did not remember the right way to do it in shell scripting :)
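The remark about the MTU can be made concrete by counting TCP segments. This is a minimal sketch. It assumes a standard 1500-byte Ethernet MTU with 20-byte IPv4 and 20-byte TCP headers and no TCP options, which leaves a 1460-byte MSS. It ignores the few bytes of protocol framing around each value. `tcp_segments` and `ASSUMED_MSS` are hypothetical names.

```c
#include <stddef.h>

/* Assumed figures: 1500-byte Ethernet MTU - 20 (IPv4) - 20 (TCP, no options). */
#define ASSUMED_MSS 1460u

/* Number of TCP segments needed to carry `bytes` of application data
 * (ceiling division; an empty payload needs none). mss must be > 0. */
static size_t tcp_segments(size_t bytes, size_t mss)
{
    return bytes == 0 ? 0 : (bytes + mss - 1) / mss;
}
```

Under these assumptions every payload size in the test (32 to 1024 bytes) fits in one segment, while a 16384-byte value needs 12. The jump in packets per reply is one plausible contributor to the slowdown once values outgrow the MTU.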

Results

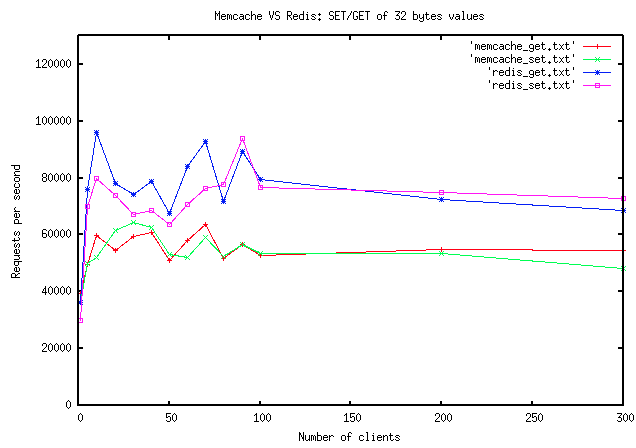

Finally... here are the results, in form of a graph generated with gnuplot:

As you can see, the differences are not huge (well within one order of magnitude), but Redis is clearly faster in this test. If you check the left side of the graph, you'll notice that the speed is about the same with a single client. The more simultaneous clients we add, the more Redis pulls ahead.

It's worth noting that I measured the CPU used by both systems in a 1-million-key GET/SET test, obtaining the following results:

- memcached: user 8.640 seconds, system 19.370 seconds

- redis: user 8.95 seconds, system 20.59 seconds
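Adding user and system time gives the total CPU cost of each server in that run. Below is a small C sketch of the arithmetic; the type and function names are illustrative. Per-operation figures are left out because the post does not state the exact number of operations.

```c
/* CPU time reported for one server: user + system seconds. */
typedef struct {
    double user;
    double sys;
} cpu_time_t;

/* Total CPU seconds consumed. */
static double cpu_total(cpu_time_t t)
{
    return t.user + t.sys;
}

/* How much more b is than a, in percent. */
static double pct_more(double a, double b)
{
    return (b - a) / a * 100.0;
}
```

memcached totals 28.01 s (8.640 + 19.370) and Redis 29.54 s (8.95 + 20.59). So Redis used roughly 5.5% more CPU time in this run.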

Atomic operations can be traded for speed

To really understand the potential of the two systems as caching systems, another aspect must be taken into account. Redis can run complex atomic operations against hashes, lists, sets, and sorted sets at the same speed it runs GET and SET operations (you can check this with redis-benchmark). The atomic operations and more advanced data structures allow you to do much more in a single operation, avoiding check-and-set operations most of the time. So I think that once we drop the limitation of using just GET and SET and uncover the full power of Redis semantics, there is a lot you can do with Redis to serve many concurrent users with very little hardware.

Conclusions

This is a limited test, and it can't be read as "Redis is faster". It is surely faster under the tested conditions, but different real-world workloads can lead to different results. After these tests, my impression is that both systems are well designed, and that in Redis we are not overlooking any obvious optimization.

As a result of this new line of investigation, in the coming days I'll create speed.redis.io. It will be something like continuous speed testing of the different branches of Redis, with history. This way we can make sure that our changes do not degrade performance, or try to improve performance while checking how the numbers change after every commit.

Disclaimer

I used a limited amount of time to set up and run these tests. If you find any kind of flaw or error, please drop me a note or leave a comment on this blog post so that I can fix it and publish updated results.

Posted at 14:41:03


Comments

1 Julien writes: 21 Sep 10, 14:59:00

What do you call an "oldish Linux box"? I'm asking because I'd love to optimize our various Redis instances, and we're not getting the same kind of performance that you get. It would greatly help to have some kind of "checklist" on how to get a faster Redis.

2 antirez writes: 21 Sep 10, 15:05:02

Julien: this is what I get from cat /proc/cpuinfo:

```
processor : 0
vendor_id : GenuineIntel
cpu family : 6
model : 23
model name : Intel(R) Core(TM)2 Quad CPU Q8300 @ 2.50GHz
stepping : 10
cpu MHz : 1998.000
cache size : 2048 KB
```

So it's not so bad actually, but we bought it for 300 euros, so I guess it's not at the level of a decent Linux server.

About optimizations: it seems Redis is mostly CPU-bound, so the faster the CPU the better (compiling with -O3 can help as well). The other obvious area worth investigating is parallelism and the networking stack, such as the network links between the DB and web servers.

3 Pedro Teixeira writes: 21 Sep 10, 15:21:37

@Julien: also, maybe you are not using the "betterparsing" branch of Redis?

4 antirez writes: 21 Sep 10, 15:23:04

@Pedro: unlikely to make any difference; with redis-benchmark the numbers are exactly the same. The only difference is if you send an MGET with many, many keys in the same command.

5 WolfHumble writes: 21 Sep 10, 17:11:38

Semantics -- you saw it coming ;-)

> if [ $(($S > $SPEED)) != "0" ]

If "S" is always a whole number (looks like that from the cut part) I think I would have gone for either:

```bash
if [ $S -ge $SPEED ]
```

OR

```bash
if (($S > $SPEED))
```

For more, see:

http://tldp.org/LDP/abs/html/comparison-ops.html#I...

WolfHumble

6 Effie writes: 21 Sep 10, 18:06:41

http://en.oreilly.com/velocity2010/public/schedule... outlines a methodology to actually quantify scalability, as shown with memcached, and outlines problems in that software. Have a look and see if that would be useful as an approach...

7 Keytweetlouie writes: 21 Sep 10, 19:04:12

I agree with you that lightning-fast speed is important before horizontal scaling. My guess would be that people shard before they have truly optimized all of their code.

8 brianthecoder writes: 21 Sep 10, 19:17:07

@Julien my guess is you don't have persistence turned off like @antirez does, since that's not the default.

9 obs writes: 22 Sep 10, 01:53:33

I like the idea of speed.redis.io; it'll be interesting to see how the performance of Redis changes as features are added.

10 Robert writes: 23 Sep 10, 16:35:24

Just wanted to say thank you for caring so much about speed, and for creating Redis.

To me redis fills a different role than memcached and so comparing the two isn't something that I'm all that interested in.

I'm not using Redis yet in any live projects, but I have used it in two projects I was developing and am likely to use it in future projects :)

11 Asad writes: 29 Sep 10, 06:22:54

Why do you consider the memcached and Redis comparison an apples-to-apples comparison? memcached has no guarantee that if a set was successful, the next get will return successfully too (even if the expiration time has not passed and there is no process restart or network outage). Redis, on the other hand, must support this (if no expiration + no restart + no network outage) (correct me if I am wrong). Because of this difference, memcached can use UDP while Redis can't. For that matter, I wonder if memcached with UDP would be faster than Redis.

13 Mikhail Mikheev writes: 31 Jan 11, 15:03:28

Antirez,

Does release candidate 2.2 contain the MGET performance fix you wrote about? I was not able to find anything about improved MGET performance in the release notes for either release 2.0 or 2.2.

14 Gleb Peregud writes: 15 Mar 11, 10:07:17

Mikhail, I checked it by rebasing the betterparsing branch onto the 2.2.2 tag, and it showed that the changes contained in betterparsing are already in the 2.2.2 tree. Antirez, please correct me if I'm wrong.

15 Steve Crane writes: 16 Mar 11, 06:50:33

I'm investigating how to turn persistence off in Redis. The documentation is not specific about this, but I came across this article and wondered if you could tell me how you turned off persistence? Thanks.

16 Steve Crane writes: 16 Mar 11, 07:37:13

My last comment was a little premature; I have found the comment in the config file that explains how to disable persistence.

17 jjmaestro writes: 07 Apr 11, 00:51:32

First things first: **awesome** article! Kudos!

Next, What happened to speed.redis.io? :) It would be a really cool thing to have.

Cheers!

----------------------------------------------

Redis vs Memcached

- 08/09/2010 – 4:45 pm

- Posted in Benchmarks, Linux, Open Source

This month’s Linux Journal has an article about Redis. I read about it while sitting on the shitter, because that’s about all that Linux Journal is useful for. The article itself was crap, but the introduction to this product was at least tempting.

The basics: take memcached and add a disk backing, replication, virtual memory, and some cool additional data structures. Hashes, lists, sets, sorting, joining, transactions, yay! I instantly got a geek boner scanning the feature list. My boner quickly faded to about half mast when I saw the C clients that are available. No consistent hashing? Wait, no server hashing at all? Yet they have a gay Ruby client, fully featured? C is the lowest common denominator when it comes to language support. Start there, then add high level bindings using this low level library. libmemcached got it right. You start on the bottom and work your way up to higher level bindings. It seems that Redis took the wrong approach to this which will result in every language having a different client implementation. This leads to inconsistencies between languages, which is bad news. That on first glance gave me that headed-for-the-toilet feeling for this project.
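Client-side consistent hashing is the feature the author missed in the C clients. It maps each key to a server through a hash ring, so adding a server only moves the keys that land on the new server's ring positions. The C sketch below is for illustration only. FNV-1a, 100 virtual nodes per server, the `server%d-%d` labels and all function names are assumptions of this example, and libmemcached's own Ketama implementation differs in its details.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define VNODES_PER_SERVER 100   /* assumed; more points give smoother balance */
#define MAX_SERVERS 16

typedef struct { uint32_t point; int server; } vnode_t;
typedef struct { vnode_t nodes[MAX_SERVERS * VNODES_PER_SERVER]; int count; } ring_t;

/* 32-bit FNV-1a: short and adequate for a sketch. */
static uint32_t fnv1a(const char *s, size_t len)
{
    uint32_t h = 2166136261u;
    for (size_t i = 0; i < len; i++) {
        h ^= (unsigned char)s[i];
        h *= 16777619u;
    }
    return h;
}

/* Order ring points; ties broken by server id so the order is deterministic. */
static int cmp_vnode(const void *a, const void *b)
{
    const vnode_t *x = a, *y = b;
    if (x->point != y->point) return x->point < y->point ? -1 : 1;
    return (x->server > y->server) - (x->server < y->server);
}

/* Place VNODES_PER_SERVER points on the ring for each server 0..nservers-1. */
static void ring_build(ring_t *r, int nservers)
{
    char label[32];
    if (nservers > MAX_SERVERS) nservers = MAX_SERVERS;
    r->count = 0;
    for (int s = 0; s < nservers; s++) {
        for (int v = 0; v < VNODES_PER_SERVER; v++) {
            int n = snprintf(label, sizeof label, "server%d-%d", s, v);
            r->nodes[r->count].point = fnv1a(label, (size_t)n);
            r->nodes[r->count].server = s;
            r->count++;
        }
    }
    qsort(r->nodes, (size_t)r->count, sizeof r->nodes[0], cmp_vnode);
}

/* A key belongs to the first ring point at or after its hash, wrapping around. */
static int ring_lookup(const ring_t *r, const char *key)
{
    if (r->count == 0) return -1;
    uint32_t h = fnv1a(key, strlen(key));
    int lo = 0, hi = r->count;              /* binary search: first point >= h */
    while (lo < hi) {
        int mid = lo + (hi - lo) / 2;
        if (r->nodes[mid].point < h) lo = mid + 1;
        else hi = mid;
    }
    return r->nodes[lo == r->count ? 0 : lo].server;
}
```

Build one ring for three servers and another for four. Every key that changes owner moves to the new server 3, and no key shuffles between the existing servers. That is what makes adding a node cheap compared with a plain `hash % nservers` scheme, where most keys move.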

With a half-mast boner, I decided to do some benchmark comparisons. Perhaps I’ll see some real numbers that might arouse me. Stand back, I’m going to do some science!

The Test Setup

I used the same machine as client and server for both tests to avoid network adding a skew to the results. (This should stress the algorithms themselves) The hardware itself doesn’t matter, it’s an apples to apples comparison. The software does however:

- Redis 2.0.0 rc4

- Memcached 1.4.5 (release)

- Credis (libcredis client) 0.2.2

- Libmemcached 0.31 (I know this is a little outdated, but for this test it works fine)

- Memcache client benchmark app: mc_stress.c

- Redis client benchmark app: redis_stress.c

The clients I wrote are just simple iterations of setting and getting keys and timing the results. Since WP sucks for posting source code snippets I had to stuff these into PDF files. Feel free to recreate my tests and post your results, since that’s what science is all about.
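The post's mc_stress.c and redis_stress.c are not reproduced here. The kind of loop it describes (iterate, issue a request, time the total) looks roughly like the sketch below. `time_ops`, `op_fn` and the stand-in `demo_op` are hypothetical, and a real client would issue a SET or GET inside the callback. The sketch uses a monotonic clock so that wall-clock adjustments don't distort the timing. Like the original setup, it runs a single client in a busy loop.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <time.h>

typedef void (*op_fn)(size_t i, void *ctx);

/* Stand-in for a real SET/GET call (hypothetical): does a trivial amount of work. */
static void demo_op(size_t i, void *ctx)
{
    *(size_t *)ctx += i;
}

static double seconds_between(const struct timespec *a, const struct timespec *b)
{
    return (double)(b->tv_sec - a->tv_sec) + (double)(b->tv_nsec - a->tv_nsec) / 1e9;
}

/* Run op n times back to back and return operations per second. */
static double time_ops(op_fn op, void *ctx, size_t n)
{
    struct timespec start, end;
    clock_gettime(CLOCK_MONOTONIC, &start);
    for (size_t i = 0; i < n; i++) op(i, ctx);
    clock_gettime(CLOCK_MONOTONIC, &end);
    double secs = seconds_between(&start, &end);
    return secs > 0.0 ? (double)n / secs : 0.0;   /* guard against a zero interval */
}
```

As antirez argues in his response, a loop like this mostly measures per-request latency plus client-library overhead. Running several such clients concurrently is what exposes server throughput.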

Results

This test varies the key size with a static value of only 3 bytes (using 100k unique keys). I’m guessing this will stress the internal hashing algorithms used for processing the key names. As you can see here, Redis came up 20-26% short of memcache’s speed.

Same test, but showing the Multi-GET performance. Redis clearly has some kind of problem here, and I believe it might be due to requesting a large amount of keys in a single MGET operation. 100,000 keys seemed to be the upper limit… but in every case, Redis came up short.

This test stresses different value sizes. Using a small 10-byte key length, I used value lengths of varying sizes up to around 16KB. Even though memcached advertises a 1MB value limit, performance drops significantly above 8192 bytes. SET speed was reduced by 100 times, and depending on the test, GET speed was either significantly reduced or reduced by 100 times as well. I suspect that playing around with slab sizes might help with the speed here, but that becomes quite the pain in the ass. The main point: if you use memcache, do not stuff large values into a single key. Hash them out into namespaces; you’ll be much better off.
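One way to follow the "do not stuff large values into a single key" advice is to split a big value into chunks stored under derived keys. This is an assumption about what "hash them out into namespaces" means, sketched in C. The `<key>:<n>` naming scheme and the helper names are hypothetical. The 8192-byte chunk size simply mirrors the threshold observed in this test.

```c
#include <stddef.h>
#include <stdio.h>

/* Number of chunks needed for a value of value_len bytes (max_chunk > 0). */
static size_t chunk_count(size_t value_len, size_t max_chunk)
{
    return (value_len + max_chunk - 1) / max_chunk;   /* ceiling division */
}

/* Hypothetical naming scheme: chunk idx of "key" is stored as "key:idx".
 * Returns snprintf's result (the length of the full key). */
static int chunk_key(char *out, size_t out_len, const char *key, size_t idx)
{
    return snprintf(out, out_len, "%s:%zu", key, idx);
}

/* Byte offset and length of chunk idx inside the original value.
 * Precondition: idx < chunk_count(value_len, max_chunk). */
static void chunk_span(size_t value_len, size_t max_chunk, size_t idx,
                       size_t *offset, size_t *len)
{
    *offset = idx * max_chunk;
    size_t left = value_len - *offset;
    *len = left < max_chunk ? left : max_chunk;
}
```

A 16385-byte value at 8192 bytes per chunk becomes three items, `user:42:0` to `user:42:2`, with the last one holding a single byte. Reading it back then requires fetching all chunk keys and concatenating them, which is the price of keeping each item small.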

Adding to this, Redis boasts a 1GB value size. We would need to graph another relationship, the transfer rate, to determine whether there is an inefficiency in handling larger values or whether the slowdown is just due to the size of the value itself. Memcache shows a similar trend as value size increases, but again Redis is 20-40% slower than memcache.
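The transfer-rate check the author proposes amounts to multiplying operations per second by value size. Below is a minimal sketch with hypothetical function names and made-up numbers; it ignores protocol and TCP/IP overhead. If doubling the value size halves ops/sec, payload throughput is unchanged and the slowdown is just the extra bytes. If bytes/sec falls too, something per-value is getting less efficient.

```c
/* Payload throughput implied by an ops/sec measurement, ignoring protocol
 * and TCP/IP overhead. */
static double payload_bytes_per_sec(double ops_per_sec, double value_bytes)
{
    return ops_per_sec * value_bytes;
}

/* Payload throughput of measurement b relative to measurement a:
 * 1.0 means both moved the same number of bytes per second. */
static double throughput_ratio(double ops_a, double size_a, double ops_b, double size_b)
{
    return payload_bytes_per_sec(ops_b, size_b) / payload_bytes_per_sec(ops_a, size_a);
}
```

For example, 20,000 ops/sec at 1024 bytes and 10,000 ops/sec at 2048 bytes both move 20,480,000 payload bytes/sec, a ratio of 1.0. If the second measurement had been 5,000 ops/sec, the ratio would drop to 0.5.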

Here is the same test, showing Multi-GET performance. Similar trend as key length test.

Conclusion

After crunching all of these numbers and screwing around with the annoying intricacies of OpenOffice, I’m giving Redis a big thumbs down. My initial sexual arousal from the feature list is long gone. Granted, Redis might have its place in a large architecture, but certainly not a replacement to memcache. When your site is hammering 20,000 keys per second and memcache latency is heavily dependent on delivery times, it makes no business sense to transparently drop in Redis. The features are neat, and the extra data structures could be used to offload more RDBMS activity… but 20% is just too much to gamble on the heart of your architecture.

Maybe sometime in the future Redis will be up to par with Memcached performance. Or, it could be the extra VM and disk backing is inherently flawed to begin with. In that case, Memcached will always win, and Redis will be only useful in a niche market somewhere between DB caching and NoSQL fanboys.

Addition:

Anyone that would like to post their own benchmarks please do. But remember to test apples with apples. Keep the tests simple and very specific to avoid adding outside variables that would skew results. Try to avoid shit like using VMs as they’re too chaotic for timed tests like this.

At some point in the future when I have time, I’ll try to revisit this test again. (It seems to have become quite popular)



7 Trackbacks/Pingbacks

- By In Which Redis Guy is Unfair To Sys Toilet Guy « A Curious Programmer on 23 Sep 2010 at 10:03 pm

[...] the sys/toilet guy writes a post benchmarking Redis vs Memcached and concludes that Memcached is faster and Redis doesn’t do so well at setup and [...]

- By Redis: what is it and what is it good for? | Степан Орда on 26 Oct 2010 at 6:05 pm

[...] At the same time, in many tests (test, test2) its speed even exceeds Memcached. This is a great idea for highly [...]

- By Redis vs Memcached + PHP Libs for Redis | LDG Tech Blog on 13 May 2011 at 4:31 pm

[...] is one comparison with Memcached, Redis vs Memcached the intro: take memcached and add a disk backing, replication, virtual memory, and some cool [...]

- By Redis Vs Memcached,Slower or Faster? | How to Build the Hign Performance WebSites? on 12 Jul 2011 at 5:30 am

[...] on behalf of two kinds of options,and tell us how to make a benchmark to redis and memcached : 1.Redis vs Memcached 2.On Redis, Memcached, Speed, Benchmarks and The Toilet An update on the Memcached/Redis [...]

- By Redis, the more Powerful NoSql Alternative to Memcached - Christopher J. Noyes on 17 Sep 2011 at 6:14 pm

[...] is also fast. It is comparable in speed to Memcached. A writer did a benchmark comparison, though Salvatore Sanfililipo the lead developer of Redis found the benchmark problematical [...]

- By Install redis with MAMP instead memcache on 17 Jan 2012 at 7:52 pm

[...] easier to install (from my experience) and it has the same functions if not more. Memcache is a lot faster though, but has less features. But I have a php wrapper that checks on which environment it’s [...]

- By Redis vs Memcached « Trần Quang Trung on 19 Jan 2012 at 12:54 pm

[...] http://systoilet.wordpress.com/2010/08/09/redis-vs-memcached/ [...]


Hello, thanks for giving a try to Redis.

I think in Redis master we resolved the issues with multi-bulk transfers that are now an order of magnitude faster, but I’m trying to reproduce your results.

Anyway, I noticed that you did not run the most meaningful test you could run, that is, multiple threads asking for random small data.

Single-threaded tests with big data are still interesting for some rare applications, and I’m trying to understand whether there is still something wrong with this in Redis master. But IMHO, what makes the difference in 90% of real-world applications is the ability of the database/cache to withstand many concurrent *small* requests.

I’ll keep you posted with my results, but I think you have a serious methodology problem here.

Benchmarks are *very* hard.

Well, I’m a little star struck that the Redis creator found my little corner of the Intertubes!

I think I’d disagree with you on the concurrency level and the average use case. Granted, most of the modern web frameworks (Rails, Pylons, etc.) are threaded by nature, which naturally creates a threaded client to whichever data backend you choose. That is a perfect world: connections are pooled and shared, stacks are shared, and there is little overhead for each request.

In the real, dirty world, threading is difficult to implement, and not everyone does it. (I speak for myself, and I might be biased… can I get a whoop whoop from anyone still using CGI?) This is how I use memcache, unfortunately. Lots of single connections hammering the hell out of it. Memcache appears to do a great job at setup and teardown of individual clients, and that is the test I set out to bench Redis against.

Secondly, in regards to key size and value size, I think our sample space is all over the place. You do not know how many times one of our web developers have come to me asking, “Hey admin, what was the limitation on the memcache key size?” … “WHAT? Only 256 bytes? Well I’m going to have to work around that. What is the limit on the value I can do then? … ONLY 1MB!?”

That is the typical conversation. It pains me to think about it, because I already know how memcache performs under large key/val sizes. The point is, we can *never* assume what a real world application is. We can only advertise what a product can do, or can not do.

I realize it’s impossible for Redis to perform with every possible use case, but perhaps it can be *configured* for different use cases? Rather than choose between cache or database for the goals of your project, perhaps you can let the user decide. Drop in memcache replacement with better features? Or speedy (enough) NoSQL solution? Both require a different set of tunables. I believe Redis could really gain popularity if it can do both jobs well.

And yes, benchmarks ARE very hard… but they are a requirement in our field, as scientists. If we make boastful statements like “Redis … is as fast as memcached but with a number of features more.” we should provide the evidence to prove it.

Salvatore has responded to your post here:

http://antirez.com/post/redis-memcached-benchmark.html

I recently tested both memcached and redis using php for my simple application. I found redis to be about 30% faster than memcached in my novice test.

And.. Well played, Salvatore.

Val,

I’d be interested to see your test setup and more details about your results. After all, I’m merely in search of the truth here. If my benchmarks are eventually proven as inaccurate data, then I’ll eat my potty mouth… if that’s even possible.

I’ll be working on a more thorough test setup later. If Redis is indeed faster in my use case, I would switch in a heartbeat. I’m a whore like that.

Not sure I should trust your benchmark. Looking at your source code, I see a bunch of malloc and not a single line of dealloc. I don’t know whether you ran into any segfault issue during your benchmark run.

some projects become successful mostly by using niche keywords for paranoids, like “replication”, “faster”, “much faster”, “much more faster”, “more safety” and by throwing flames at existing software (e.g. the redis devs’ case against libevent). 99% of the people using or wanting to use replicated caches don’t even need it. sell them dreamcatchers for nightmares they will probably never have and you’ll be in business. replicated caches are the poor man’s solution to the speed-and-reliability problem. too lazy to really understand what your data is about? just go replicated caching altogether … here’s the redis string hack review.

@Doug: his code looks fine to me, he’s just being lazy. He doesn’t need to free his keys/randomvals until the bench is done. And when it does there’s no need to free because the program finishes and the kernel will take care of it. i don’t even know why am i explaning this to you but whatever. systoilet is probably a bofh. he loves to curse developers writing code this way but he also loves seeing himself doing the same thing. he also probably touches himself a lot.

I ran a very simple test to set and get 100k unique keys and values against redis-2.2.2 and memcached. Both are running on a Linux VM (CentOS), and my client code (pasted below) runs on a Windows desktop.

Redis

Time taken to store 100000 values is = 18954ms

Time taken to load 100000 values is = 18328ms

Memcached

Time taken to store 100000 values is = 797ms

Time taken to retrieve 100000 values is = 38984ms

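```java
import redis.clients.jedis.Jedis;

public class RedisSetGetTiming {
    public static void main(String[] args) {
        Jedis jed = new Jedis("localhost", 6379);
        int count = 100000;

        // Store phase: one synchronous SET round trip per key
        long startTime = System.currentTimeMillis();
        for (int i = 0; i < count; i++) {
            jed.set("u112-" + i, "v51" + i);
        }
        long endTime = System.currentTimeMillis();
        System.out.println("Time taken to store " + count + " values is = " + (endTime - startTime) + "ms");

        // Retrieve phase: the original paste called client.get(...); jed is the Jedis connection opened above
        startTime = System.currentTimeMillis();
        for (int i = 0; i < count; i++) {
            jed.get("u112-" + i);
        }
        endTime = System.currentTimeMillis();
        System.out.println("Time taken to retrieve " + count + " values is = " + (endTime - startTime) + "ms");

        jed.close();
    }
}
```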

I used the default redis.conf on the server side and tested this with Jedis client. I was surprised to see that redis took ~18 secs to store 100k values, as opposed to 797ms taken by memcached.

Does anyone have better numbers for Redis + Java?

Tested Redis 2.4.0 – rc5 VS. Memcached 1.4.5

100k keys

REDIS done in 7s

MEMC done in 6s

Storage

REDIS 8174544 bytes = 7.79585266 megabytes

MEMC 800056 bytes = 0.762992859 megabytes

REDIS ~10.2x BIGGER (MEMC used ~90.2% less memory) !!!

[note] 100k inserts


hi there.

did you consider that memcache works with all CPUs, but redis uses just 1?

That’s a good point, no I haven’t. Although, I don’t believe that fact would have skewed my test results. I was using a single-threaded client, against a single thread of Redis and a single thread in memcache.

can you do the benchmark against the latest stable redis release (2.4 i think)? i am wondering if your results still hold.

in my mind the biggest drawback of redis is that it isn’t really a distributed cache. although to be fair, they are working on it.

numan salati, can you define “distributed”? Because redis and memcache follow the same idea, and therefore memcache is not distributed either…?

Wouldn’t it have been logical to make the line for Redis RED in the line charts? Maybe that came under the “annoying intricacies of OpenOffice” you’re referring to.

A “gay Ruby client”? Really? Are you 15?

Yeah, pretty much. Nobody uses Ruby, so that’s why it’s gay. So suck it, Tony!

greetings from pluto @theadminblagger…we don’t use ruby here. however, we’ve heard that the entire earth is addicted to ruby! #get-out-of-your-room-once-in-awhile

Well, maybe I’ll write up another article on Perl vs Ruby, and offend all of Japan. Oooooo mee sooo sorrry!

I really enjoyed the commentary when reading this post; even though it is very technical, you add some humor to it, and I like that. This was posted around 08/09/2010, and at the end of the article you said “At some point in the future when I have time, I’ll try to revisit this test again.”

I was just going to ask whether you have run any tests recently, or whether you could; it would be nice to see an updated test.

Thanks Jason. I haven’t had time to revisit the Redis test. It’s very low on my massive TODO list as an administrator. Presently though, I like Mongo. A few preliminary tests show it’s a similar speed to memcache, and it fits our architecture a little better.

Since it’s a document database, it’s not an apples-apples comparison with Redis. But, it works great. It’s redundant, horizontally scalable, and seems pretty speedy.

“Yet they have a gay Ruby client, fully featured?”

how about next time you omit the homophobia you bigoted fuck

butthurt itt

Tony, only a gaywad liberal douche would be offended by that statement. How about you leave your whiny comments in Nova Scotia, and just go back to having butt sex with young boys from Newfoundland.

admin =1 liberal douche = 0

damn I almost lost your blog in one of those windows ass wipes – buy new laptop – wipe again.

You always crack me up and get the point across. To me your benchmark is still realistic; it’s still how my real-world use case looks with PHP (threads? what’s that hehe)