Python Web Crawler: Implementation and Principles (with BeautifulSoup)

I have been learning Python recently. After finishing the basic syntax I practiced by writing a crawler demo, which is summarized below.

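The main logic is:

1) Initialize the URL manager, i.e. add the root URL to it.

2) Get a new URL, new_url, from the URL manager.

3) Download the content of new_url as html_cont (using urllib.request.urlopen(url)).

4) Parse html_cont to extract new child URLs, and save the parsed result (using BeautifulSoup).

5) Add the newly found child URLs to the URL manager.

6) Repeat steps 2-5 until a threshold number of pages has been crawled.

7) Output the crawled results.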
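The seven steps above can be sketched as a single loop. This is a minimal illustration, not the demo's actual code: `crawl`, `fake_fetch`, `fake_links`, and the in-memory `SITE` dictionary are hypothetical names standing in for the downloader, the parser, and real web pages.

```python
def crawl(root_url, fetch, extract_links, max_pages=100):
    """Crawl from root_url.

    fetch(url) returns the page content; extract_links(url, content)
    returns an iterable of child URLs. Both are supplied by the caller.
    """
    new_urls = {root_url}                          # step 1: seed the URL manager
    old_urls = set()
    results = []
    while new_urls and len(results) < max_pages:   # step 6: loop until the threshold
        url = new_urls.pop()                       # step 2: take a pending URL
        old_urls.add(url)
        try:
            content = fetch(url)                   # step 3: download
        except Exception as exc:
            print("craw failed: %s (%s)" % (url, exc))
            continue
        results.append((url, content))             # step 4: keep the result
        for link in extract_links(url, content):   # step 5: queue unseen child URLs
            if link not in new_urls and link not in old_urls:
                new_urls.add(link)
    return results                                 # step 7: hand back what was crawled


# Hypothetical in-memory "site" so the sketch runs without network access.
SITE = {
    "/a": "links: /b /c",
    "/b": "links: /a /c",
    "/c": "links: /d",
    "/d": "links:",
}


def fake_fetch(url):
    return SITE[url]


def fake_links(url, content):
    # Everything after the leading "links:" token is a child URL.
    return content.split()[1:]


pages = crawl("/a", fake_fetch, fake_links, max_pages=3)
all_pages = crawl("/a", fake_fetch, fake_links)
```

Passing the fetch and link-extraction functions in as arguments keeps the loop independent of the network and of any particular HTML parser.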

Watch out for encoding: use UTF-8 consistently, decoding the downloaded bytes with .decode('UTF-8').
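To illustrate why the encoding matters: bytes in another encoding raise UnicodeDecodeError when decoded as UTF-8. The sketch below tries a short list of encodings in order. The helper name `decode_html` and the GBK fallback are assumptions for illustration, not part of the demo.

```python
def decode_html(raw, encodings=("UTF-8", "GBK")):
    """Decode raw bytes with the first encoding that works (hypothetical helper)."""
    for encoding in encodings:
        try:
            return raw.decode(encoding)
        except UnicodeDecodeError:
            continue
    # Last resort: keep going with replacement characters instead of failing.
    return raw.decode(encodings[0], errors="replace")


utf8_text = decode_html("中文".encode("UTF-8"))
gbk_text = decode_html("中文".encode("GBK"))
```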

Installing BeautifulSoup: go to the Scripts directory of the Python 3.x installation (Python3.x\Scripts) and run pip install beautifulsoup4

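Main module, spider_main.py (it imports the other modules from the bike_spider package as url_manager, html_downloader, html_parser and html_outputer, so the files must be saved under those names):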

    '''
    Created on 2016-3-30
    @author: rongyu
    '''
    from bike_spider import url_manager, html_downloader, html_parser, html_outputer


    class SpiderMain(object):

        def __init__(self):
            self.urls = url_manager.UrlManager()
            self.downloader = html_downloader.HtmlDownloader()
            self.parser = html_parser.HtmlParser()
            self.outputer = html_outputer.HtmlOutputer()

        def craw(self, root_url):
            count = 1
            self.urls.add_new_url(root_url)
            while self.urls.has_new_url():
                try:
                    new_url = self.urls.get_new_url()
                    print('craw %d:%s' % (count, new_url))
                    html_cont = self.downloader.download(new_url)
                    new_urls, new_data = self.parser.parse(new_url, html_cont)
                    self.urls.add_new_urls(new_urls)
                    self.outputer.collect_data(new_data)
                    # Stop after 100 successfully crawled pages
                    if count == 100:
                        break
                    count = count + 1
                except Exception as e:
                    # A failed page is skipped and does not count toward the limit
                    print('craw failed: %s' % e)
            self.outputer.output_html()


    # Program entry point
    if __name__ == "__main__":
        root_url = "http://baike.baidu.com/view/21087.htm"
        obj_spider = SpiderMain()
        obj_spider.craw(root_url)  # start crawling from the root URL

URL manager module, UrlManager.py:

    '''
    Created on 2016-3-30
    @author: rongyu
    '''


    class UrlManager(object):

        def __init__(self):
            self.new_urls = set()
            self.old_urls = set()

        def add_new_url(self, url):
            if url is None:
                return
            if url not in self.new_urls and url not in self.old_urls:
                self.new_urls.add(url)

        def has_new_url(self):
            return len(self.new_urls) != 0

        def get_new_url(self):
            new_url = self.new_urls.pop()
            self.old_urls.add(new_url)
            return new_url

        def add_new_urls(self, urls):
            if urls is None or len(urls) == 0:
                return
            for url in urls:
                self.add_new_url(url)
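One property of the set-based manager is that set.pop() returns an arbitrary element, so pages are not visited in discovery order. If the order matters, a possible variation (not the demo's approach) is a deque for pending URLs plus a set of every URL seen so far. The class name `OrderedUrlManager` is hypothetical.

```python
from collections import deque


class OrderedUrlManager:
    """Variation on the URL manager that hands URLs out first-in, first-out."""

    def __init__(self):
        self.queue = deque()   # pending URLs, in discovery order
        self.seen = set()      # every URL ever added, pending or crawled

    def add_new_url(self, url):
        if url is None or url in self.seen:
            return
        self.seen.add(url)
        self.queue.append(url)

    def add_new_urls(self, urls):
        for url in urls or ():
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.queue) != 0

    def get_new_url(self):
        return self.queue.popleft()


manager = OrderedUrlManager()
manager.add_new_urls(["a", "b", "a", "c"])   # the duplicate "a" is ignored
first = manager.get_new_url()
manager.add_new_url("a")                     # already crawled, ignored again
rest = [manager.get_new_url(), manager.get_new_url()]
```

Because the method names match the original class, it could be swapped in without changing the crawl loop, which then walks the site breadth-first.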

Downloader module, HtmlDownloader.py:

    import urllib.request


    class HtmlDownloader(object):

        def download(self, url):
            if url is None:
                return None
            response = urllib.request.urlopen(url)
            return response.read().decode('UTF-8')
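The downloader above has no timeout and assumes every page is UTF-8. A slightly more defensive sketch follows. The function name `download`, the User-Agent string, and the 10-second timeout are illustrative assumptions, and the data: URL at the end only exercises the function without network access.

```python
import urllib.request


def download(url, timeout=10, default_charset="UTF-8"):
    """Fetch url and return its body as str, or None if it cannot be used."""
    if url is None:
        return None
    # Assumed header value; some sites reject the default urllib User-Agent.
    request = urllib.request.Request(
        url, headers={"User-Agent": "Mozilla/5.0 (demo crawler)"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # HTTP responses carry a status code; other URL schemes may not.
        status = getattr(response, "status", None)
        if status is not None and status != 200:
            return None
        # Prefer the charset declared in Content-Type, else fall back.
        charset = response.headers.get_content_charset() or default_charset
        return response.read().decode(charset, errors="replace")


# A data: URL is handled by urllib itself, so this runs offline.
body = download("data:text/html;charset=utf-8,%3Cp%3Ehi%3C/p%3E")
```

Note that urlopen already raises urllib.error.HTTPError for 4xx and 5xx responses, so the caller still needs a try/except around the call, as the crawl loop in spider_main.py has.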

Parser module, HtmlParser.py:

    from bs4 import BeautifulSoup
    import re
    import urllib.parse


    class HtmlParser(object):

        def _get_new_urls(self, page_url, soup):
            new_urls = set()
            # Entry links look like /view/234.htm
            links = soup.find_all('a', href=re.compile(r"/view/\d+\.htm"))
            for link in links:
                new_url = link['href']
                # Resolve the relative href against the current page URL
                new_full_url = urllib.parse.urljoin(page_url, new_url)
                new_urls.add(new_full_url)
            return new_urls

        def _get_new_data(self, page_url, soup):
            res_data = {}
            # url
            res_data['url'] = page_url
            # Title: the <h1> inside <dd class="lemmaWgt-lemmaTitle-title">, e.g. "Python"
            title_node = soup.find('dd', class_="lemmaWgt-lemmaTitle-title").find("h1")
            res_data['title'] = title_node.get_text()
            # Summary: <div class="lemma-summary">
            summary_node = soup.find('div', class_="lemma-summary")
            res_data['summary'] = summary_node.get_text()
            return res_data

        def parse(self, page_url, html_cont):
            if page_url is None or html_cont is None:
                # The caller unpacks two values, so return a pair
                return None, None
            # html_cont is already a decoded str, so from_encoding is dropped:
            # BeautifulSoup ignores it for str input and emits a warning
            soup = BeautifulSoup(html_cont, 'html.parser')
            new_urls = self._get_new_urls(page_url, soup)
            new_data = self._get_new_data(page_url, soup)
            return new_urls, new_data
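To see the link-extraction step in isolation, here is a sketch that uses only the standard library: html.parser.HTMLParser stands in for BeautifulSoup's find_all, while the regular expression and the urljoin call are the same as in the parser. The names `LinkCollector` and `extract_links` and the sample HTML are made up for illustration.

```python
import re
import urllib.parse
from html.parser import HTMLParser

LINK_PATTERN = re.compile(r"/view/\d+\.htm")


class LinkCollector(HTMLParser):
    """Collects absolute URLs of <a> tags whose href matches LINK_PATTERN."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.urls = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and LINK_PATTERN.search(href):
            # urljoin turns /view/234.htm into a full URL on the same host
            self.urls.add(urllib.parse.urljoin(self.page_url, href))


def extract_links(page_url, html_cont):
    collector = LinkCollector(page_url)
    collector.feed(html_cont)
    return collector.urls


sample = ('<a href="/view/234.htm">A</a> '
          '<a href="/help">B</a> '
          '<a href="http://baike.baidu.com/view/5.htm">C</a>')
found = extract_links("http://baike.baidu.com/view/21087.htm", sample)
```

The relative link is resolved against the page URL, the already absolute link is kept unchanged, and /help is skipped because it does not match the pattern.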

Outputer module, HtmlOutputer.py:

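    class HtmlOutputer(object):

        def __init__(self):
            self.datas = []

        def collect_data(self, data):
            if data is None:
                return
            self.datas.append(data)

        def output_html(self):
            # The HTML tags inside the write() calls were lost from this
            # listing; a plain <table> layout is assumed here.
            # The file is opened with an explicit encoding instead of calling
            # .encode() on each field: in Python 3, "%s" % bytes would write
            # the b'...' repr rather than the text.
            with open('output.html', 'w', encoding='UTF-8') as fout:
                fout.write("<html>")
                fout.write("<head><meta charset='UTF-8'></head>")
                fout.write("<body>")
                fout.write("<table>")
                for data in self.datas:
                    fout.write("<tr>")
                    fout.write("<td>%s</td>" % data['url'])
                    fout.write("<td>%s</td>" % data['title'])
                    fout.write("<td>%s</td>" % data['summary'])
                    fout.write("</tr>")
                fout.write("</table>")
                fout.write("</body>")
                fout.write("</html>")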
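Crawled titles and summaries can contain characters such as < or & that would break the output page if written verbatim. The sketch below builds the page as a string and escapes each field with html.escape. The function name `render_table`, the table layout, and the sample row are assumptions for illustration.

```python
import html


def render_table(rows):
    """Return an HTML page with one table row per crawled record."""
    parts = ["<html>", "<head><meta charset='UTF-8'></head>", "<body>", "<table>"]
    for row in rows:
        parts.append("<tr>")
        for key in ("url", "title", "summary"):
            # Escape &, <, > and quotes so the text cannot alter the markup
            parts.append("<td>%s</td>" % html.escape(row[key]))
        parts.append("</tr>")
    parts.extend(["</table>", "</body>", "</html>"])
    return "\n".join(parts)


page = render_table([{
    "url": "http://example.com/?a=1&b=2",
    "title": "<Python>",
    "summary": "一种编程语言",
}])
```

The returned string can then be written out with open('output.html', 'w', encoding='UTF-8').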

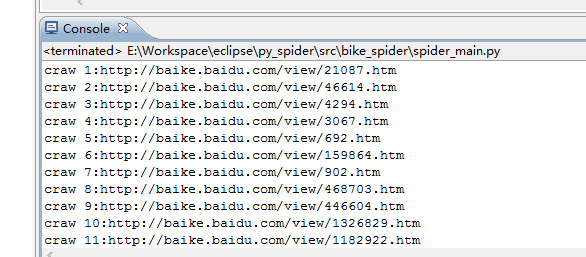

Results:

Console output
