RNA-Seq下机分析的一般流程

RNA-Seq的一般流程

- RNA-Seq数据的下载和转换

- 下载.sra文件并转为fq文件

- 得到fq文件的第一步:质控

- 第二步:比对,mapping到参考基因组上(可以得到bam文件)

- 可选步骤:IGV可视化(这里写的乱七八糟)

- 第三步:定量

- 数据的下游分析

RNA-Seq数据的下载和转换

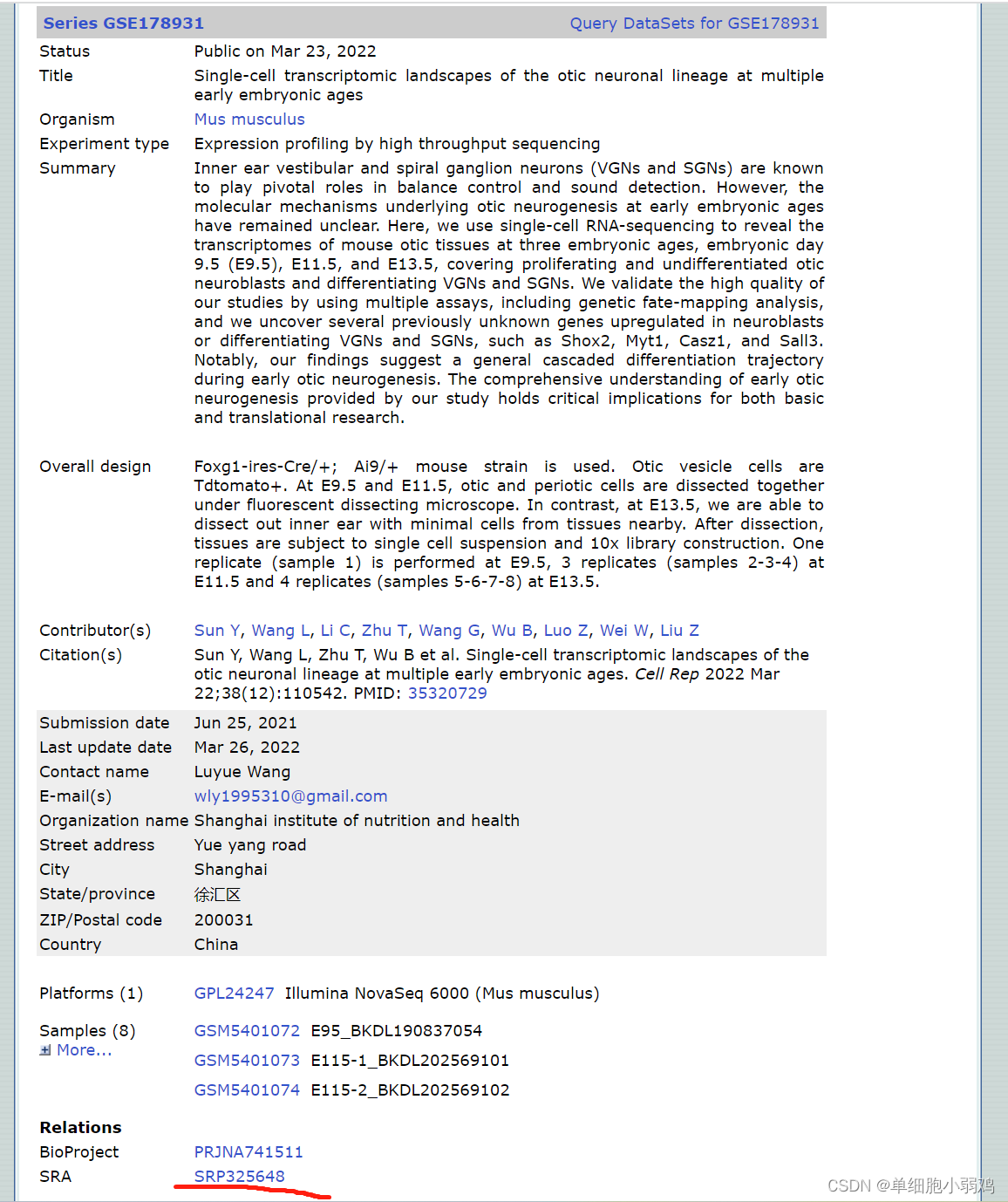

我们现在接触到的有关RNA-Seq的样本其实已经很多了,自己掏钱去做测序的,公司一般直接就可以给到counts、fastq文件等等,如果是想要复现文章里面的结果,就需要用到一个SRA的数据库了,一般文章都会提供一个GEO数据

点击跳转至SRA数据库,就可以批量下载原始测序文件

下载.sra文件并转为fq文件

官方的建议下载软件是sratools,但是因为网络原因,所以大家就自行选择下载数据的方式,这里就简单介绍一下sratools常用的批量下载数据的方法

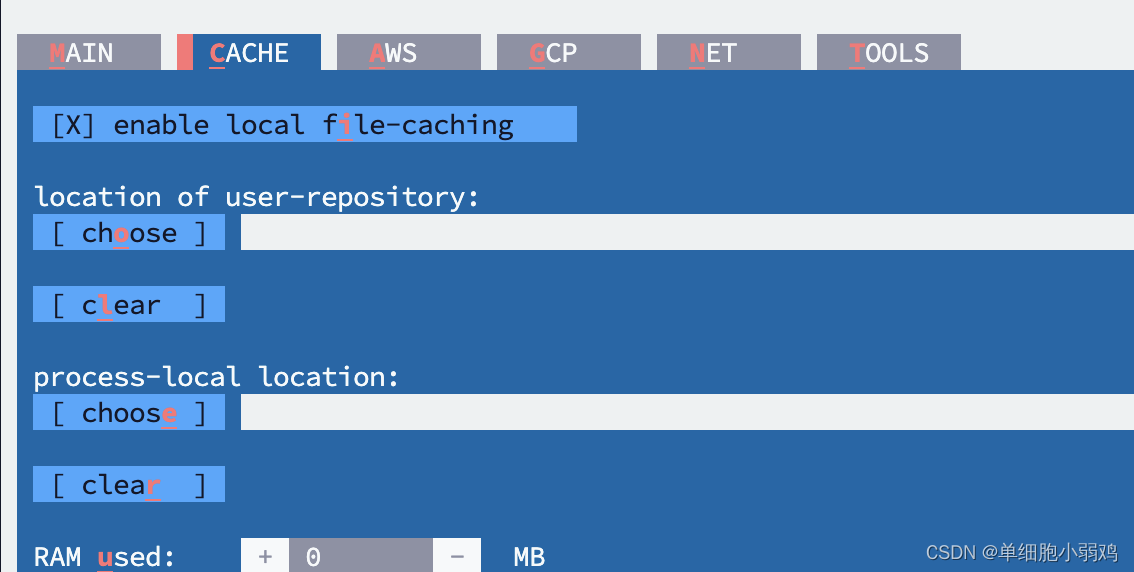

按照官网流程安装对应版本的sratools之后,需要对其进行简单的设置

vdb-config.exe -i

会打开一个利用键盘进行操作的交互窗口,Tab键可以很好的帮助切换选项,当然最重要的就是这个存储地址的选项,建议不要选择已经有文件的文件夹,会直接被覆盖

##批量下载代码

prefetch.exe --option-file SRR_ACC_List.txt

##批量转换为fq文件,split这个参数(1为单端测序,2为双端测序,3自动检测)

cat SRR_ACC_List.txt | while read i;do fastq-dump --split-3 $i

done

得到fq文件的第一步:质控

使用fastqc、multiqc去查看测序的质量

使用cutadapt等软件进行初步质控

##批量下载代码

prefetch.exe --option-file SRR_ACC_List.txt

##批量转换为fq文件,split这个参数(1为单端测序,2为双端测序,3自动检测)

cat SRR_ACC_List.txt | while read i;do fastq-dump --split-3 $i

done

# 一:质控前的初看测序数据质量:fastqc与multiqc

# 1.激活专门用于RNAseq数据处理的小环境rna,进行fastqc与multiqc

conda activate rna #激活转录组测序数据处理环境

cd /public/home/wangxuan/data/zhang/sra ##

# 2.先进行fastqc

nohup fastqc -t 21 -o ./ SRR*.fastq.gz >qc.log &

# 3.对fastqc后的zip数据进行multiqc

nohup multiqc ./*.zip -o ./ > ./multiqc.log &# 二:trimmgalore质控

ls *gz |while read id;do (nohup trim_galore -q 25 --phred33 --length 36 --stringency 3 --paired -o ./ $id & );done## 三:质控后数据也需要用fastqc与multiqc看看质控效果

# 01批量fastqc

nohup fastqc -t 42 -o ./ SRR*_trimmed.fq.gz >qc_trimmed.log &

# 02开始multiqc

nohup multiqc *.zip -o ./ > ./multiqc_t.log &

第二步:比对,mapping到参考基因组上(可以得到bam文件)

这一步可以使用的软件有很多,Hisat,Botwie,STAR等

推荐大家使用STAR

STAR做比对的第一步需要构建参考基因集,从(http://asia.ensembl.org/index.html)网站下载参考基因组及其注释文件

构建STAR索引,参考基因组不同的需要单独构建索引

source /public/apps/anaconda3/bin/activate rna

WorkDIR=/public/home/wangxuan/data/F350007154

McutadaptDIR=/public/home/wangxuan/data/F350007154/Mouse/cutadapt

HcutadaptDIR=/public/home/wangxuan/data/F350007154/Human/cutadapt

fasta=/public/home/wangxuan/data/RNAref/human/Homo_sapiens.GRCh38.dna.primary_assembly.fa

gtf=/public/home/wangxuan/data/RNAref/human/Homo_sapiens.GRCh38.108.gtf

MBamDIR=/public/home/wangxuan/data/F350007154/Mouse/STAR

HBamDIR=/public/home/wangxuan/data/F350007154/Human/STARSTAR --runMode genomeGenerate \

--runThreadN 32 \

--genomeFastaFiles $fasta \

--genomeDir /public/home/wangxuan/data/RNAref/human \

--sjdbGTFfile $gtf \

--genomeSAindexNbases 13 \

--sjdbOverhang 149cd $HcutadaptDIR

ls -1 *.fq.gz | xargs -n 2 | while read {i,j};do

STAR --runThreadN 20 \--twopassMode Basic \--readFilesCommand gunzip -c \--quantMode TranscriptomeSAM GeneCounts \--genomeDir /public/home/wangxuan/data/RNAref/human \--readFilesIn ${i} ${j} \--outFileNamePrefix ${i%_*} \--outSAMtype BAM SortedByCoordinate \--outSAMattributes All

可选步骤:IGV可视化(这里写的乱七八糟)

#!/bin/bash

#SBATCH -p com

#SBATCH -J test

#SBATCH -N 3

#SBATCH -o test.o

#SBATCH -e test.esource /public/apps/anaconda3/bin/activate rnaWorkDIR=/public/home/wangxuan/data/zhang

doit() {WorkDIR=/public/home/wangxuan/data/zhangRawBamDIR=$WorkDIR/bamfile/sortedQCBamDIR=$WorkDIR/qcbamfile ##去重sortBamDIR=$WorkDIR/sortbamfile BedDIR=$WorkDIR/bedfileRawBedgraphDIR=$WorkDIR/bedgraphnormBedgraphDIR=$WorkDIR/normbedgraphbwDIR=$WorkDIR/bwfilehg38=/public/home/wangxuan/data/RNAref/mouse/Mus_musculus.GRCm39.dna.primary_assembly.fa.fai##参考基因组文件SampleID=$1gatk MarkDuplicates \-I $RawBamDIR/${SampleID}Aligned.sortedByCoord.out.bam \-O $QCBamDIR/$SampleID.bam \-M $QCBamDIR/$SampleID.txt \--CREATE_INDEX true \--BARCODE_TAG RXsamtools sort $QCBamDIR/$SampleID.bam -o $sortBamDIR/$SampleID.sorted.bambedtools bamtobed -i $sortBamDIR/$SampleID.sorted.bam > $BedDIR/$SampleID.bedgenomeCoverageBed -bga -split -ibam $sortBamDIR/$SampleID.sorted.bam -g /public/home/wangxuan/data/RNAref/mouse/chrNameLength.txt > $RawBedgraphDIR/$SampleID.bedgraph ##参考基因组染色体长度文件num1=$(samtools view -c $sortBamDIR/$SampleID.sorted.bam)num2=1000000num3=$(echo "scale=10;${num2}/${num1}"|bc)cat $RawBedgraphDIR/$SampleID.bedgraph |awk '{print $1"\t"$2"\t"$3"\t"$4*"'$num3'"}' > $normBedgraphDIR/$SampleID.bedgraph(head -n 1 $normBedgraphDIR/$SampleID.bedgraph && tail -n +2 $normBedgraphDIR/$SampleID.bedgraph | sort -k1,1 -k2,2n | awk '{print $1,$2,$3,$4}' OFS="\t") > $normBedgraphDIR/$SampleID.sort &&bedGraphToBigWig $normBedgraphDIR/$SampleID.sort $hg38 $bwDIR/$SampleID.bw

}

export -f doit

cat $WorkDIR/samplename.txt | parallel -j 5 doit

\1. Bedtools bamtobed to make a bed file from bam

\2. Calculate normalization factor. Literally it is the number of reads divided by 1 million

\3. Bedtools coverage (我不记得是不是这个了,你可以看看bed tools的帮助文档) to convert bed to bedgraph

\4. Use the normalizationrmalization factor to normalize bedgraph. The signal is on the 4th column of bedgraph, you can divide the signals to the factor

\5. Bedgraphtobigwig to convert bedgraph to bigwig

第三步:定量

gtf=$HOME/database/GRCm39.106/Mus_musculus.GRCm39.106.chr.gtf

nohup featureCounts -T 5 -p -t exon -g gene_id -a $gtf \

-o all.id.txt *bam 1>counts.id.log 2>&1 &

数据的下游分析

#人类样本一致性

rm(list=ls())

setwd("E:/")

h97 <- read.table("p1_1108.txt",header = T)#[c(1,7)]

h98 <- read.table("p2_1108.txt",header = T)[c(1,7)]

h100 <- read.table("ty_1109.txt",header = T)[c(1,7)]

h102 <- read.table("ty_1111.txt",header = T)[c(1,7)]h99 <- read.table("tl_1109.txt",header = T)[c(1,7)]

h101 <- read.table("tl_1111.txt",header = T)[c(1,7)]

#table(rownames(h97) ==rownames(h102))

datExpr1 <- data.frame(c(h97,h98[2],h100[2],h102[2]))

colnames(datExpr1) <- c("geneid","P11108","P21108","TY1109","TY1111")datExpr2 <- data.frame(c(h99,h101[2]))

colnames(datExpr2) <- c("geneid","TL1109","TL1111")mtcars<- datExpr1[-1]

library(corrplot)

M = cor(mtcars)

pdf("4samples_corplot.pdf")

corrplot(M, method = 'number',type = 'upper')

dev.off()#鼠差异分析

rm(list=ls())

setwd("E:/华西/程/华大人鼠转录组测序质量评估/鼠/")

m15 <- read.table("wt_102.txt",header = T)[c(1,7)]

m16 <- read.table("wt_104.txt",header = T)[c(1,7)]#P2

m41 <- read.table("wt_105.txt",header = T)[c(1,7)]

m42 <- read.table("wt_106.txt",header = T)[c(1,7)]m44 <- read.table("oza_123.txt",header = T)[c(1,7)]

m45 <- read.table("oza_124.txt",header = T)[c(1,7)]

m46 <- read.table("oza_126.txt",header = T)[c(1,7)]

m47 <- read.table("oza_128.txt",header = T)[c(1,7)]

m48 <- read.table("oza_129.txt",header = T)[c(1,7)]

#table(rownames(m15) ==rownames(m16))

datExpr <- data.frame(c(m15,m16[2],m41[2],m42[2],m44[2],m45[2],m46[2],m47[2],m48[2]))

colnames(datExpr) <- c("ENSEMBL","wt102","wt104","wt105","wt106","oza123","oza124","oza126","oza128","oza129")library(clusterProfiler)

library(org.Mm.eg.db)

library(stringr)

geneID = bitr(datExpr$ENSEMBL, fromType="ENSEMBL", toType="SYMBOL", OrgDb="org.Mm.eg.db")

datExpr <- merge(geneID,datExpr,by="ENSEMBL")

table(duplicated(datExpr$SYMBOL))

datExpr<- datExpr[!duplicated(datExpr$SYMBOL),]

counts <- datExpr[,-c(1,2)]

rownames(counts) <- datExpr$SYMBOL

Group <- factor(c(rep("wt",4),rep("oza",5)))

Sample <- colnames(counts)

datMeta <- data.frame(Sample,Group)library(DESeq2)

dds <- DESeqDataSetFromMatrix(countData = counts, colData = datMeta, design= ~Group)

dds <- dds[ rowSums(counts(dds)) > 1, ]

dds

rld <- rlog(dds)

plotPCA(rld, intgroup=c("Sample","Group"))dds <- DESeq(dds)

res <- results(dds)

head(res)

summary(res)

sigdeg <- subset((data.frame(res)),log2FoldChange>2 | log2FoldChange < -2 & padj< 0.05)

write.csv(sigdeg,"wt_oza_sigdeg.csv")#####################################################

library("edgeR")#这里调用edgeR是为了过滤掉低表达基因,用这个方法过滤后开始用voom标准化

dge <- DGEList(counts=counts)#create a DgEList object using the edgeR package

design<-model.matrix(~Group,data=datMeta)

keep <- filterByExpr(dge, design)#remove rows that consistently have zero or very low counts

dge <- dge[keep,,keep.lib.sizes=FALSE]

dge <- calcNormFactors(dge)#normalization

v <- voom(dge, design, plot=TRUE)

datExpr=v$Elibrary("WGCNA")

sdout <- 2;

normadj <- (0.5+0.5*bicor(datExpr, use='pairwise.complete.obs'))^2#bicor 有效地计算矩阵的双权中相关。

netsummary <- fundamentalNetworkConcepts(normadj); #此函数基于邻接矩阵和可选的节点重要性度量计算基本网络概念

ku <- netsummary$Connectivity;

z.ku <- (ku-mean(ku))/sqrt(var(ku))

outliers <- (z.ku > mean(z.ku)+sdout*sd(z.ku))|(z.ku < mean(z.ku)-sdout*sd(z.ku))

print(paste("There are ",sum(outliers)," outliers samples based on a bicor distance sample network connectivity standard deviation above ",sdout,sep="")); print(colnames(datExpr)[outliers]); print(table(outliers))

pdf("./voom_outlier.pdf",width=10, height=10)

plot(z.ku, col = as.numeric(datMeta$Group), pch=19, main="Outlier detection", ylab="Connectivity (Z)")#PCA图

legend("bottomright",legend=levels(datMeta$Group),text.col=unique(as.numeric(datMeta$Group)))

abline(h=-2, lty=2)

text(z.ku,rownames(datMeta),cex=0.7)

dev.off()

datExpr = datExpr[,!outliers]#表达数据过滤离群值

datMeta = as.data.frame(datMeta[!outliers,])#描述数据过滤离群值

datMeta

##########################

datExpr <- data.frame(c(m16,m41[2],m42[2],m44[2],m45[2],m46[2],m47[2],m48[2]))

colnames(datExpr) <- c("ENSEMBL","wt104","wt105","wt106","oza123","oza124","oza126","oza128","oza129")

本文来自互联网用户投稿,文章观点仅代表作者本人,不代表本站立场,不承担相关法律责任。如若转载,请注明出处。 如若内容造成侵权/违法违规/事实不符,请点击【内容举报】进行投诉反馈!