第二课 词向量简介

》词向量:在很多时候需要把单词转换为数值,单词包含人类表达信息。

》离散表示:One-hot

语料库 John likes to watch movies. Mary likes too.

John also likes to watch football games.

词典 {"John": 1, "likes": 2, "to": 3, "watch": 4, "movies": 5, "also": 6, "football": 7, "games": 8, "Mary": 9, "too": 10}

One-hot表示 John: [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

likes: [0, 1, 0, 0, 0, 0, 0, 0, 0, 0]

too : [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

·词典包含10个单词,每个单词有唯一索引

·在词典中的顺序和在句子中的顺序没有关联

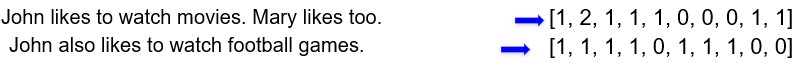

》离散表示:Bag of Words

文档的向量表示可以直接将各词的词向量表示加和

词权重 ( 词在文档中的顺序没有被考虑)

TF-IDF (Term Frequency - Inverse Document Frequency)

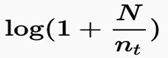

词t的IDF weight

N: 文档总数, nt: 含有词t的文档数

N: 文档总数, nt: 含有词t的文档数

[0.693, 1.386, 0.693, 0.693, 1.099, 0, 0, 0, 0.693, 0.693]

Binary weighting

短文本相似性,Bernoulli Naive Bayes

[1, 1, 1, 1, 1, 0, 0, 0, 1, 1]

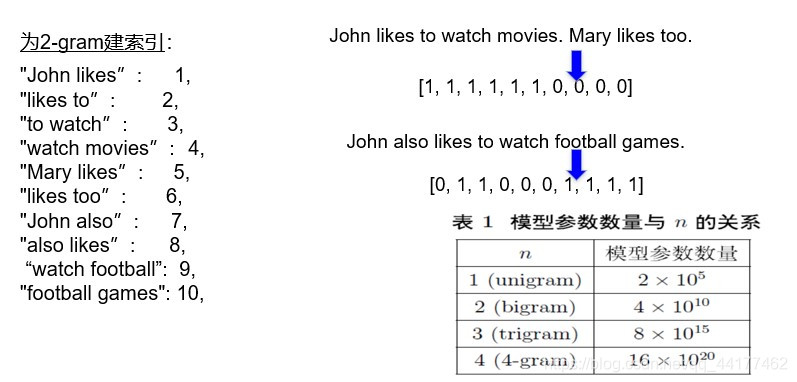

》离散表示:Bi-gram和N-gram

优点:考虑了词的顺序

缺点:词表的膨胀

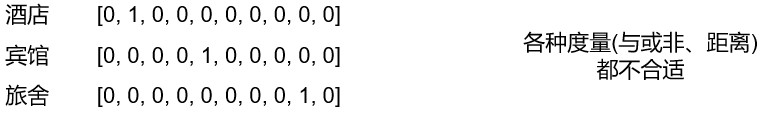

》离散表示的问题

无法平衡词向量之间的关系

太稀疏,很难捕捉文本的含义

·词表维度随着语料库增长膨胀

·n-gram词序列随语料库膨胀更快

·数据稀疏问题

》词编码需要保证词的相似性

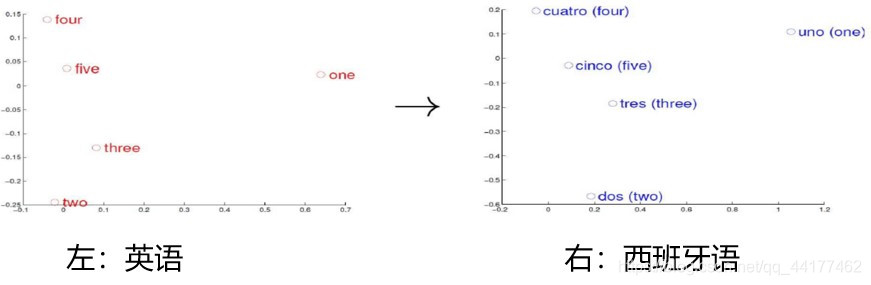

》简单 词/短语 翻译

向量空间分布的相似性

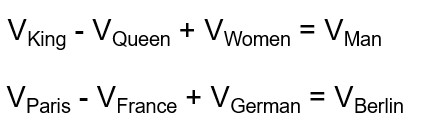

》向量空间子结构

最终目标:词向量表示作为机器学习、特别是深度学习的输入和表示空间

》分布式表示(Distributed representation)

用一个词附近的其他词来表示该词

现代统计自然语言处理中最有创见的想法之一

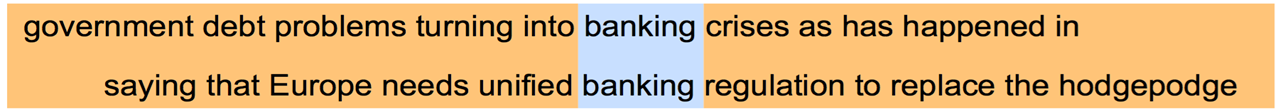

banking附近的词将会代表banking的含义

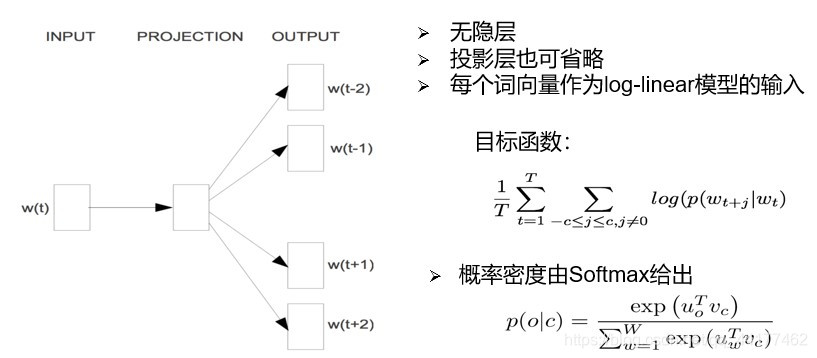

》Word2Vec: Skip-Gram模型

》Skip-gram的损失函数

》Skip-Gram:负例采样

P(w|context(w)): 一个正样本,V-1个负样本,对负样本做采样

》词嵌入可视化: 公司 — CEO、词向、比较级和最高级

》词嵌入效果评估:词类比任务、词相似度任务、作为特征用于CRF实体识别

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.data as tud

from torch.nn.parameter import Parameterfrom collections import Counter

import numpy as np

import random

import mathimport pandas as pd

import scipy

import sklearn

from sklearn.metrics.pairwise import cosine_similarityUSE_CUDA = torch.cuda.is_available()# 为了保证实验结果可以复现,我们经常会把各种random seed固定在某一个值

random.seed(53113)

np.random.seed(53113)

torch.manual_seed(53113)

if USE_CUDA:torch.cuda.manual_seed(53113)# 设定一些超参数K = 100 # number of negative samples

C = 3 # nearby words threshold

NUM_EPOCHS = 2 # The number of epochs of training

MAX_VOCAB_SIZE = 30000 # the vocabulary size

BATCH_SIZE = 128 # the batch size

LEARNING_RATE = 0.2 # the initial learning rate

EMBEDDING_SIZE = 100LOG_FILE = "word-embedding.log"# 定义一个tokenize函数,把一篇文本转化成一个个单词

def word_tokenize(text):return text.split()- 从文本文件中读取所有的文字,通过这些文本创建一个vocabulary

- 由于单词数量可能太大,我们只选取最常见的MAX_VOCAB_SIZE个单词

- 我们添加一个UNK单词表示所有不常见的单词

- 我们需要记录单词到index的mapping,以及index到单词的mapping,单词的count,单词的(normalized) frequency,以及单词总数。

with open("text8.train.txt", "r") as fin:text = fin.read()text = [w for w in word_tokenize(text.lower())]

vocab = dict(Counter(text).most_common(MAX_VOCAB_SIZE-1))

vocab[""] = len(text) - np.sum(list(vocab.values()))

idx_to_word = [word for word in vocab.keys()]

word_to_idx = {word:i for i, word in enumerate(idx_to_word)}word_counts = np.array([count for count in vocab.values()], dtype=np.float32)

word_freqs = word_counts / np.sum(word_counts)

word_freqs = word_freqs ** (3./4.)

word_freqs = word_freqs / np.sum(word_freqs) # 用来做 negative sampling

VOCAB_SIZE = len(idx_to_word)

VOCAB_SIZE

30000实现Dataloader

一个dataloader需要以下内容:

- 把所有text编码成数字,然后用subsampling预处理这些文字。

- 保存vocabulary,单词count,normalized word frequency

- 每个iteration sample一个中心词

- 根据当前的中心词返回context单词

- 根据中心词sample一些negative单词

- 返回单词的counts

这里有一个好的tutorial介绍如何使用PyTorch dataloader. 为了使用dataloader,我们需要定义以下两个function:

__len__function需要返回整个数据集中有多少个item__get__根据给定的index返回一个item

有了dataloader之后,我们可以轻松随机打乱整个数据集,拿到一个batch的数据等等。

class WordEmbeddingDataset(tud.Dataset):def __init__(self, text, word_to_idx, idx_to_word, word_freqs, word_counts):''' text: a list of words, all text from the training datasetword_to_idx: the dictionary from word to idxidx_to_word: idx to word mappingword_freq: the frequency of each wordword_counts: the word counts'''super(WordEmbeddingDataset, self).__init__()self.text_encoded = [word_to_idx.get(t, VOCAB_SIZE-1) for t in text]self.text_encoded = torch.Tensor(self.text_encoded).long()self.word_to_idx = word_to_idxself.idx_to_word = idx_to_wordself.word_freqs = torch.Tensor(word_freqs)self.word_counts = torch.Tensor(word_counts)def __len__(self):''' 返回整个数据集(所有单词)的长度'''return len(self.text_encoded)def __getitem__(self, idx):''' 这个function返回以下数据用于训练- 中心词- 这个单词附近的(positive)单词- 随机采样的K个单词作为negative sample'''center_word = self.text_encoded[idx]pos_indices = list(range(idx-C, idx)) + list(range(idx+1, idx+C+1))pos_indices = [i%len(self.text_encoded) for i in pos_indices]pos_words = self.text_encoded[pos_indices] neg_words = torch.multinomial(self.word_freqs, K * pos_words.shape[0], True)return center_word, pos_words, neg_words

创建dataset和dataloader

dataset = WordEmbeddingDataset(text, word_to_idx, idx_to_word, word_freqs, word_counts)

dataloader = tud.DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=4)

定义PyTorch模型¶

class EmbeddingModel(nn.Module):def __init__(self, vocab_size, embed_size):''' 初始化输出和输出embedding'''super(EmbeddingModel, self).__init__()self.vocab_size = vocab_sizeself.embed_size = embed_sizeinitrange = 0.5 / self.embed_sizeself.out_embed = nn.Embedding(self.vocab_size, self.embed_size, sparse=False)self.out_embed.weight.data.uniform_(-initrange, initrange)self.in_embed = nn.Embedding(self.vocab_size, self.embed_size, sparse=False)self.in_embed.weight.data.uniform_(-initrange, initrange)def forward(self, input_labels, pos_labels, neg_labels):'''input_labels: 中心词, [batch_size]pos_labels: 中心词周围 context window 出现过的单词 [batch_size * (window_size * 2)]neg_labelss: 中心词周围没有出现过的单词,从 negative sampling 得到 [batch_size, (window_size * 2 * K)]return: loss, [batch_size]'''batch_size = input_labels.size(0)input_embedding = self.in_embed(input_labels) # B * embed_sizepos_embedding = self.out_embed(pos_labels) # B * (2*C) * embed_sizeneg_embedding = self.out_embed(neg_labels) # B * (2*C * K) * embed_sizelog_pos = torch.bmm(pos_embedding, input_embedding.unsqueeze(2)).squeeze() # B * (2*C)log_neg = torch.bmm(neg_embedding, -input_embedding.unsqueeze(2)).squeeze() # B * (2*C*K)log_pos = F.logsigmoid(log_pos).sum(1)log_neg = F.logsigmoid(log_neg).sum(1) # batch_sizeloss = log_pos + log_negreturn -lossdef input_embeddings(self):return self.in_embed.weight.data.cpu().numpy()定义一个模型以及把模型移动到GPU

model = EmbeddingModel(VOCAB_SIZE, EMBEDDING_SIZE)

if USE_CUDA:model = model.cuda()

下面是评估模型的代码,以及训练模型的代码

def evaluate(filename, embedding_weights): if filename.endswith(".csv"):data = pd.read_csv(filename, sep=",")else:data = pd.read_csv(filename, sep="\t")human_similarity = []model_similarity = []for i in data.iloc[:, 0:2].index:word1, word2 = data.iloc[i, 0], data.iloc[i, 1]if word1 not in word_to_idx or word2 not in word_to_idx:continueelse:word1_idx, word2_idx = word_to_idx[word1], word_to_idx[word2]word1_embed, word2_embed = embedding_weights[[word1_idx]], embedding_weights[[word2_idx]]model_similarity.append(float(sklearn.metrics.pairwise.cosine_similarity(word1_embed, word2_embed)))human_similarity.append(float(data.iloc[i, 2]))return scipy.stats.spearmanr(human_similarity, model_similarity)# , model_similaritydef find_nearest(word):index = word_to_idx[word]embedding = embedding_weights[index]cos_dis = np.array([scipy.spatial.distance.cosine(e, embedding) for e in embedding_weights])return [idx_to_word[i] for i in cos_dis.argsort()[:10]]

训练模型:

- 模型一般需要训练若干个epoch

- 每个epoch我们都把所有的数据分成若干个batch

- 把每个batch的输入和输出都包装成cuda tensor

- forward pass,通过输入的句子预测每个单词的下一个单词

- 用模型的预测和正确的下一个单词计算cross entropy loss

- 清空模型当前gradient

- backward pass

- 更新模型参数

- 每隔一定的iteration输出模型在当前iteration的loss,以及在验证数据集上做模型的评估

optimizer = torch.optim.SGD(model.parameters(), lr=LEARNING_RATE)

for e in range(NUM_EPOCHS):for i, (input_labels, pos_labels, neg_labels) in enumerate(dataloader):# TODOinput_labels = input_labels.long()pos_labels = pos_labels.long()neg_labels = neg_labels.long()if USE_CUDA:input_labels = input_labels.cuda()pos_labels = pos_labels.cuda()neg_labels = neg_labels.cuda()optimizer.zero_grad()loss = model(input_labels, pos_labels, neg_labels).mean()loss.backward()optimizer.step()if i % 100 == 0:with open(LOG_FILE, "a") as fout:fout.write("epoch: {}, iter: {}, loss: {}\n".format(e, i, loss.item()))print("epoch: {}, iter: {}, loss: {}".format(e, i, loss.item()))if i % 2000 == 0:embedding_weights = model.input_embeddings()sim_simlex = evaluate("simlex-999.txt", embedding_weights)sim_men = evaluate("men.txt", embedding_weights)sim_353 = evaluate("wordsim353.csv", embedding_weights)with open(LOG_FILE, "a") as fout:print("epoch: {}, iteration: {}, simlex-999: {}, men: {}, sim353: {}, nearest to monster: {}\n".format(e, i, sim_simlex, sim_men, sim_353, find_nearest("monster")))fout.write("epoch: {}, iteration: {}, simlex-999: {}, men: {}, sim353: {}, nearest to monster: {}\n".format(e, i, sim_simlex, sim_men, sim_353, find_nearest("monster")))embedding_weights = model.input_embeddings()np.save("embedding-{}".format(EMBEDDING_SIZE), embedding_weights)torch.save(model.state_dict(), "embedding-{}.th".format(EMBEDDING_SIZE))

model.load_state_dict(torch.load("embedding-{}.th".format(EMBEDDING_SIZE)))

在 MEN 和 Simplex-999 数据集上做评估

embedding_weights = model.input_embeddings()

print("simlex-999", evaluate("simlex-999.txt", embedding_weights))

print("men", evaluate("men.txt", embedding_weights))

print("wordsim353", evaluate("wordsim353.csv", embedding_weights))

simlex-999 SpearmanrResult(correlation=0.17251697429101504, pvalue=7.863946056740345e-08)

men SpearmanrResult(correlation=0.1778096817088841, pvalue=7.565661657312768e-20)

wordsim353 SpearmanrResult(correlation=0.27153702278146635, pvalue=8.842165885381714e-07)

寻找nearest neighbors

for word in ["good", "fresh", "monster", "green", "like", "america", "chicago", "work", "computer", "language"]:print(word, find_nearest(word))

单词之间的关系

man_idx = word_to_idx["man"]

king_idx = word_to_idx["king"]

woman_idx = word_to_idx["woman"]

embedding = embedding_weights[woman_idx] - embedding_weights[man_idx] + embedding_weights[king_idx]

cos_dis = np.array([scipy.spatial.distance.cosine(e, embedding) for e in embedding_weights])

for i in cos_dis.argsort()[:20]:print(idx_to_word[i])

king

henry

charles

pope

queen

iii

prince

elizabeth

alexander

constantine

edward

son

iv

louis

emperor

mary

james

joseph

frederick

francis

本文来自互联网用户投稿,文章观点仅代表作者本人,不代表本站立场,不承担相关法律责任。如若转载,请注明出处。 如若内容造成侵权/违法违规/事实不符,请点击【内容举报】进行投诉反馈!