Scrapy: General-Purpose Random Download Delay, IP Proxy, and User-Agent Rotation

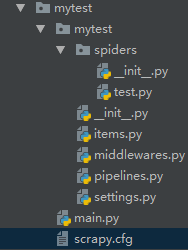

- Directory structure

- main.py file

```python
# -*- coding:utf-8 -*-
from scrapy import cmdline

# Equivalent to running `scrapy crawl test` from the project root
cmdline.execute('scrapy crawl test'.split())
```

settings.py file

```python
# -*- coding: utf-8 -*-
BOT_NAME = 'mytest'

SPIDER_MODULES = ['mytest.spiders']
NEWSPIDER_MODULE = 'mytest.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'mytest (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Random download delay: upper bound in seconds, read by RandomDelayMiddleware
RANDOM_DELAY = 2

DOWNLOADER_MIDDLEWARES = {
    # 'mytest.middlewares.MytestDownloaderMiddleware': 543,
    'mytest.middlewares.RandomDelayMiddleware': 100,
    'mytest.middlewares.UserAgentMiddleware': 100,
    # 'mytest.middlewares.ProxyMiddleware': 100,
}

# Proxy IP pool
PROXIES = [
    'http://47.94.230.42:9999',
    'http://117.87.177.58:9000',
    'http://125.73.220.18:49128',
    'http://117.191.11.72:8080',
]

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
# ITEM_PIPELINES = {
#     'mytest.pipelines.MytestPipeline': 300,
# }
```
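The `RANDOM_DELAY` name above is a custom setting that only this project's middleware reads. For comparison, Scrapy also has built-in settings for a randomized delay. The following is a minimal sketch of that alternative, not part of the original project; the 2-second base value is an assumption carried over from `RANDOM_DELAY` for illustration.

```python
# settings.py fragment (alternative sketch, not the original author's configuration)

# Base delay in seconds between requests to the same site
DOWNLOAD_DELAY = 2

# When True (Scrapy's default), the actual wait is a random value
# between 0.5 * DOWNLOAD_DELAY and 1.5 * DOWNLOAD_DELAY
RANDOMIZE_DOWNLOAD_DELAY = True
```

With these two settings the wait is scheduled by Scrapy's downloader rather than by calling `time.sleep()` in a middleware, so a custom delay middleware may not be needed at all.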

pipelines.py file

```python
# -*- coding: utf-8 -*-
import pymysql


class MytestPipeline(object):
    def open_spider(self, spider):
        # Open one database connection when the spider starts
        self.conn = pymysql.connect(host='192.168.186.128', user='root',
                                    password='root', db='python', charset='utf8')

    def process_item(self, item, spider):
        print('hahahahahahahhah')
        csl = self.conn.cursor()
        count = csl.execute('select title,comment from goods where comment<=5')
        # Print the number of rows returned by the query
        print("Found %d rows:" % count)
        for i in range(count):
            # Fetch and print one result row at a time
            result = csl.fetchone()
            print(result)
        # Close the cursor object
        csl.close()
        return item

    def close_spider(self, spider):
        # Close the connection when the spider finishes
        self.conn.close()
```

middlewares.py file

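```python
# -*- coding: utf-8 -*-
import logging
import random
import time

from fake_useragent import UserAgent
from scrapy import signals


class UserAgentMiddleware(object):
    """Set a random User-Agent on requests that do not already have one."""

    def __init__(self):
        # Build the UserAgent helper once instead of on every request
        self.ua = UserAgent()

    def process_request(self, request, spider):
        request.headers.setdefault(b'User-Agent', self.ua.random)


class RandomDelayMiddleware(object):
    """Sleep for a random whole number of seconds before each request."""

    def __init__(self, delay):
        self.delay = delay

    @classmethod
    def from_crawler(cls, crawler):
        # Read the setting from crawler.settings, as ProxyMiddleware does
        delay = crawler.settings.get("RANDOM_DELAY", 10)
        if not isinstance(delay, int):
            raise ValueError("RANDOM_DELAY must be an int")
        return cls(delay)

    def process_request(self, request, spider):
        delay = random.randint(0, self.delay)
        logging.debug("### random delay: %s s ###" % delay)
        # Note: time.sleep() blocks Scrapy's event loop while it waits
        time.sleep(delay)


class ProxyMiddleware(object):
    """Set a proxy."""

    def __init__(self, ip):
        self.ip = ip

    @classmethod
    def from_crawler(cls, crawler):
        return cls(ip=crawler.settings.get('PROXIES'))

    def process_request(self, request, spider):
        try:
            request.meta['proxy'] = random.choice(self.ip)
        except (IndexError, TypeError):
            # Empty or missing PROXIES: send the request without a proxy
            pass
```

test.py file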

```python
# -*- coding: utf-8 -*-
import scrapy

from mytest.items import MytestItem


class TestSpider(scrapy.Spider):
    name = 'test'
    # allowed_domains = ['test.com']
    # start_urls = ['https://www.baidu.com/']

    # Test the IP proxy
    def start_requests(self):
        url = 'http://httpbin.org/get'
        for i in range(1):
            yield scrapy.Request(url=url, callback=self.parse, dont_filter=True)

    def parse(self, response):
        item = MytestItem()
        item['name'] = response.text
        # print(response.text)
        yield item
        # print(response.request.headers)
```
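The spider stores the raw body returned by `http://httpbin.org/get`. That endpoint echoes the request back as JSON, so the body can be inspected to see whether the proxy and the random User-Agent took effect. The sketch below is one possible way to do that; the helper name `summarize_httpbin` and the sample body are hypothetical and not part of the original project.

```python
import json


def summarize_httpbin(body_text):
    """Extract the fields that show which IP and User-Agent the server saw."""
    data = json.loads(body_text)
    return {
        # "origin" is the client IP as seen by httpbin (the proxy's, if one was used)
        "origin": data.get("origin"),
        # httpbin echoes the request headers under "headers"
        "user_agent": data.get("headers", {}).get("User-Agent"),
    }


# Hypothetical sample body in the shape that httpbin.org/get returns
sample = (
    '{"args": {}, '
    '"headers": {"Host": "httpbin.org", "User-Agent": "Mozilla/5.0 (example)"}, '
    '"origin": "203.0.113.7", '
    '"url": "http://httpbin.org/get"}'
)

summary = summarize_httpbin(sample)
print(summary)
```

Inside `parse`, the same helper could be called as `summarize_httpbin(response.text)`. If `origin` matches an entry in `PROXIES` and `user_agent` changes between runs, both middlewares are likely working.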