Crawling WeChat Articles, Improved (20.12.15) + MySQL

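Contents

- 1. Target URL
- 2. Overall approach
  - 1. Enter a keyword and fetch the results page
  - 2. Collect the links to all articles on that page
  - 3. Follow each link, parse the article, and extract the data
- 3. Implementation
  - 3.1 Setting up the proxy pool
  - 3.2 Fetching the page
  - 3.3 Getting the article links
  - 3.4 Requesting the page behind each article link
  - 3.5 Parsing the page and extracting the data
  - 3.6 Saving to MySQL
    - 3.6.1 Designing the table
    - 3.6.2 Code for saving to the database
- 4. Results
- Full code
- References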

1. Target URL

https://weixin.sogou.com/

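2. Overall approach

1. Enter a keyword and fetch the results page

2. Collect the links to all articles on that page

3. Follow each link, parse the article, and extract the data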

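3. Implementation

3.1 Setting up the proxy pool

When the site detects abnormal traffic it asks for a CAPTCHA, so a proxy pool is needed.

Code: https://github.com/Python3WebSpider/ProxyPool

The proxy pool is a Flask application. Running run.py starts collecting IP addresses and serves a currently usable one at http://localhost:5555/random; calling that endpoint returns a working proxy address.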

base_url = 'https://weixin.sogou.com/weixin?'
proxy_pool_url = 'http://127.0.0.1:5555/random'
proxy = None  # no proxy is used by default
max_count = 5  # upper limit on the number of attempts

def get_proxy():
    # Ask the local proxy pool for one usable proxy address
    try:
        response = requests.get(proxy_pool_url)
        if response.status_code == 200:
            return response.text
        return None
    except ConnectionError:
        return None

Note: start the proxy pool's run.py before running the final code.
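The /random endpoint returns a bare host:port string, while requests expects a mapping from URL scheme to proxy URL and only applies the entry whose key matches the scheme of the requested URL. As a minimal sketch, the helper below builds a mapping that covers both schemes, which matters here because the search URL is https. The name build_proxies is hypothetical (it belongs to neither ProxyPool nor requests), and the sketch assumes the proxy itself is reached over plain HTTP.

```python
def build_proxies(proxy):
    """Turn a 'host:port' string into a requests-style proxies mapping.

    Returns None when no proxy is available, so the result can be passed
    straight to requests.get(..., proxies=...).
    """
    if not proxy:
        return None
    proxy = proxy.strip()  # the response body may carry stray whitespace
    proxy_url = 'http://' + proxy  # assumption: the proxy speaks plain HTTP
    return {'http': proxy_url, 'https': proxy_url}


print(build_proxies('127.0.0.1:8080'))
```

Passing None as proxies tells requests to connect directly, so the same call works with or without a proxy.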

3.2 Fetching the page

ua = UserAgent().random

headers = {'Cookie': 'SUV=00BC42EFDA11E2615BD9501783FF7490; CXID=62F139BEE160D023DCA77FFE46DF91D4; SUID=61E211DA4D238B0A5BDAB0B900055D85; ad=Yd1L5yllll2tbusclllllVeEkmUlllllT1Xywkllll9llllllZtll5@@@@@@@@@@; SNUID=A60850E83832BB84FAA2B6F438762A9E; IPLOC=CN4400; ld=Nlllllllll2tPpd8lllllVh9bTGlllllTLk@6yllll9llllljklll5@@@@@@@@@@; ABTEST=0|1552183166|v1; weixinIndexVisited=1; sct=1; ppinf=5|1552189565|1553399165|dHJ1c3Q6MToxfGNsaWVudGlkOjQ6MjAxN3x1bmlxbmFtZTo4OnRyaWFuZ2xlfGNydDoxMDoxNTUyMTg5NTY1fHJlZm5pY2s6ODp0cmlhbmdsZXx1c2VyaWQ6NDQ6bzl0Mmx1UHBWaElMOWYtYjBhNTNmWEEyY0RRWUB3ZWl4aW4uc29odS5jb218; pprdig=eKbU5eBV3EJe0dTpD9TJ9zQaC2Sq7rMxdIk7_8L7Auw0WcJRpE-AepJO7YGSnxk9K6iItnJuxRuhmAFJChGU84zYiQDMr08dIbTParlp32kHMtVFYV55MNF1rGsvFdPUP9wU-eLjl5bAr77Sahi6mDDozvBYjxOp1kfwkIVfRWA; sgid=12-39650667-AVyEiaH25LM0Xc0oS7saTeFQ; ppmdig=15522139360000003552a8b2e2dcbc238f5f9cc3bc460fd0; JSESSIONID=aaak4O9nDyOCAgPVQKZKw','Host': 'weixin.sogou.com','Upgrade-Insecure-Requests': '1','User-Agent': ua

}

def get_html(url, count=1):
    print('Crawling', url)
    print('Trying Count', count)
    global proxy
    # Give up once the attempt limit is reached, to avoid looping forever
    if count >= max_count:
        print('Tried too many times.')
        return None
    try:
        # No proxy is used by default
        if proxy:
            # requests picks the proxy by URL scheme, and base_url is https
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)
        else:
            response = requests.get(url, allow_redirects=False, headers=headers)
        if response.status_code == 200:
            return response.text
        if response.status_code == 302:
            # The IP address has been blocked, so a proxy is needed
            print('302')
            proxy = get_proxy()
            if proxy:
                print('Using proxy:', proxy)
                # Count this attempt so the limit above still applies
                return get_html(url, count + 1)
            else:
                print('Get proxy failed')
                return None
    except ConnectionError as e:
        print('Error Occurred:', e.args)
        proxy = get_proxy()
        count += 1
        return get_html(url, count)

def get_index(keyword, page):
    data = {
        'query': keyword,
        'type': 2,  # 2 means articles
        'page': page,
        'ie': 'utf8'
    }
    queries = urlencode(data)
    url = base_url + queries
    html = get_html(url)
    return html
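The attempt limit in get_html can also be expressed as a loop rather than recursion. The sketch below is an illustration under stated assumptions, not the code used in this article: fetch is a hypothetical callable that returns a (status_code, text) pair, and Python's built-in ConnectionError stands in for the exception imported from requests.exceptions.

```python
def fetch_with_retries(fetch, url, max_count=5):
    """Call fetch(url) up to max_count times and return the first 200 body.

    fetch is a hypothetical callable returning (status_code, text); in the
    crawler it would wrap requests.get and the proxy switch.
    """
    for attempt in range(1, max_count + 1):
        try:
            status, text = fetch(url)
        except ConnectionError:  # built-in stand-in for the requests exception
            print('Attempt', attempt, 'failed to connect')
            continue
        if status == 200:
            return text
        print('Attempt', attempt, 'got status', status)
    return None


# Stub that is redirected twice (as if blocked) and then succeeds.
responses = iter([(302, ''), (302, ''), (200, '<html>ok</html>')])
print(fetch_with_retries(lambda url: next(responses), 'https://example.com'))
```

Because every attempt passes through the same counter, neither a run of 302 responses nor a run of connection errors can exceed max_count.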

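3.3 Getting the article links

def parse_index(html):
    doc = pq(html)
    # Each search result's title link sits under .txt-box h3
    items = doc('.news-box .news-list li .txt-box h3 a').items()
    for item in items:
        yield item.attr('href')

As the figure above shows, the extracted href is: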

/link?url=dn9a_-gY295K0Rci_xozVXfdMkSQTLW6cwJThYulHEtVjXrGTiVgS-rno93y_JxOpf1C2jxkAw4hVwHUQRJuVVqXa8Fplpd9wyjMtmGjNqxmQWVDvD3PqMhFPcioV9wY4iklZeggZ24EYPR8T9twBPAbFJ6bA24h2frbAB5xqh2U5Ewm_aHbG1stK3oLsSVFDAJhMTb-cqez0OxoEdkhAgp_DnZnbR4J_qpT3VF3fzd3my6TrpazLYN_7_1Bu1d9Vd91YizYiPfvzHZXxeLdyg…&type=2&query=catti&token=55310FC489CC15A27D79CA006DBB23B07DBDB8105FD86BA9

This is not the article's link. Prepending the site prefix gives a new href that redirects to the article.

article_url = 'https://weixin.sogou.com'+article_url

https://weixin.sogou.com/link?url=dn9a_-gY295K0Rci_xozVXfdMkSQTLW6cwJThYulHEtVjXrGTiVgS-rno93y_JxOpf1C2jxkAw4hVwHUQRJuVVqXa8Fplpd9wyjMtmGjNqxmQWVDvD3PqMhFPcioV9wY4iklZeggZ24EYPR8T9twBPAbFJ6bA24h2frbAB5xqh2U5Ewm_aHbG1stK3oLsSVFDAJhMTb-cqez0OxoEdkhAgp_DnZnbR4J_qpT3VF3fzd3my6TrpazLYN_7_1Bu1d9Vd91YizYiPfvzHZXxeLdyg…&type=2&query=catti&token=55310FC489CC15A27D79CA006DBB23B07DBDB8105FD86BA9.
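The prefixing step above can also be done with the standard library's urljoin, which resolves a relative href against a base URL and leaves an already absolute URL unchanged. This is a minimal sketch: absolute_link is a hypothetical helper name, and the href in the example is a shortened made-up value rather than a real Sogou link.

```python
from urllib.parse import urljoin

BASE = 'https://weixin.sogou.com'


def absolute_link(href):
    """Resolve an href taken from the results page against the site root."""
    return urljoin(BASE, href)


# Shortened, made-up href in the same shape as the one shown above
print(absolute_link('/link?url=abc&type=2&query=catti'))
```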

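3.4 Requesting the page behind each article link

Following the pattern of section 3.2:

def get_detail_1(url, count=1):
    global proxy
    # Same attempt limit as get_html, so connection errors cannot recurse forever
    if count >= max_count:
        print('Tried too many times.')
        return None
    try:
        if proxy:
            # requests picks the proxy by URL scheme, and this URL is https
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)
        else:
            response = requests.get(url, allow_redirects=False, headers=headers)
        if response.status_code == 200:
            return response.text
        if response.status_code == 302:
            # The IP address has been blocked, so a proxy is needed
            print('302')
            proxy = get_proxy()
            if proxy:
                print('Using proxy:', proxy)
                return get_detail_1(url, count + 1)
            else:
                print('Get proxy failed')
                return None
    except ConnectionError as e:
        print('Error Occurred:', e.args)
        proxy = get_proxy()
        count += 1
        return get_detail_1(url, count)

However, running this returns:

This is not the result we expected. The article's real link is: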

https://mp.weixin.qq.com/s?src=11&timestamp=1608009750&ver=2767&signature=hNnJS112pZJgNeG1sbq0oJCq5Q927in07vW9Gss5AFquqYwYrWN5WwVRf6uuS9KO31czphm*wULpMKQK51gZVDygs08MaRrqIgw-l19AEW-jV7nhfMylyvxnhoakJ3lJ&new=1

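This is exactly what you get by concatenating the url fragments shown in the output above, which means the URL obtained in section 3.3 is not the article's real URL. The real URL has to be extracted from the returned page:

def find_true_url(html):
    n_url = ''
    # Each fragment appears in the page as: url += '...';
    parts = re.findall("url.*?'(.*?)';", html)
    for i in parts:
        n_url += i
    # str.replace returns a new string, so the result must be assigned
    n_url = n_url.replace("@", "")
    return n_url

Result: http://mp.weixin.qq.com/s?src=11&timestamp=1608020034&ver=2767&signature=hNnJS112pZJgNeG1sbq0oJCq5Q927in07vW9Gss5AFoCxjwmrTO9a1Z9F75auubN6AFgG4SvjCPH27KDHtlF3Zi7MouGxKDlSjNH7EELYkGodtygaeqCTLQeN4ovCr&new=1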
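To make the extraction step concrete, here is a small self-contained sketch that runs the same regular expression on a hand-written page fragment. SAMPLE_HTML is a made-up stand-in for the intermediate page (the real page's script may differ in detail), and extract_real_url is a hypothetical name used so the example stands on its own.

```python
import re

# Hypothetical stand-in for the intermediate page: the real URL is split
# into string fragments that a script appends one by one.
SAMPLE_HTML = """
<script>
var url = '';
url += 'https://mp.weixin.qq.c';
url += 'om/s?src=11&timest';
url += 'amp=0&ver=1&signa@ture=abc';
url.replace("@", "");
window.location.replace(url)
</script>
"""


def extract_real_url(html):
    """Join every quoted fragment assigned to url and drop '@' characters."""
    parts = re.findall(r"url.*?'(.*?)';", html)
    return ''.join(parts).replace('@', '')


print(extract_real_url(SAMPLE_HTML))
```

The pattern only matches lines where url is followed by a single-quoted string ending in ';', so the two replace calls in the sample script contribute nothing to the result.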

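Only now do we have the article's real URL, which is then used to request the page again.

Note: the requests-based approach above is not used here, because the HTML it returns is missing the publication time ("publish_time"). Presumably that value is loaded by JavaScript, so Selenium is used to fetch the HTML instead.

def get_detail_2(url):
    options = webdriver.ChromeOptions()
    # Do not load images, to speed up page loads
    options.add_experimental_option("prefs", {"profile.managed_default_content_settings.images": 2})
    # Run headless, without opening a browser window
    options.add_argument('--headless')
    # The chrome_options keyword is deprecated in favor of options
    driver = webdriver.Chrome(options=options)
    driver.get(url)
    driver.implicitly_wait(20)
    html = driver.page_source
    # quit() ends the whole browser session, not just the current window
    driver.quit()
    return html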

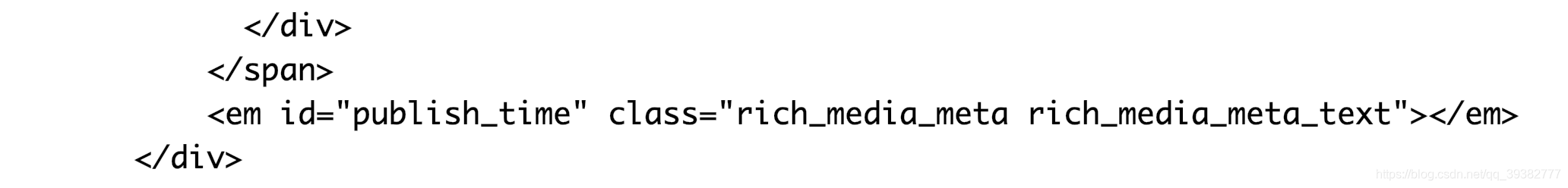

3.5 Parsing the page and extracting the data

The page is parsed with PyQuery; the selectors come from inspecting the article's structure.

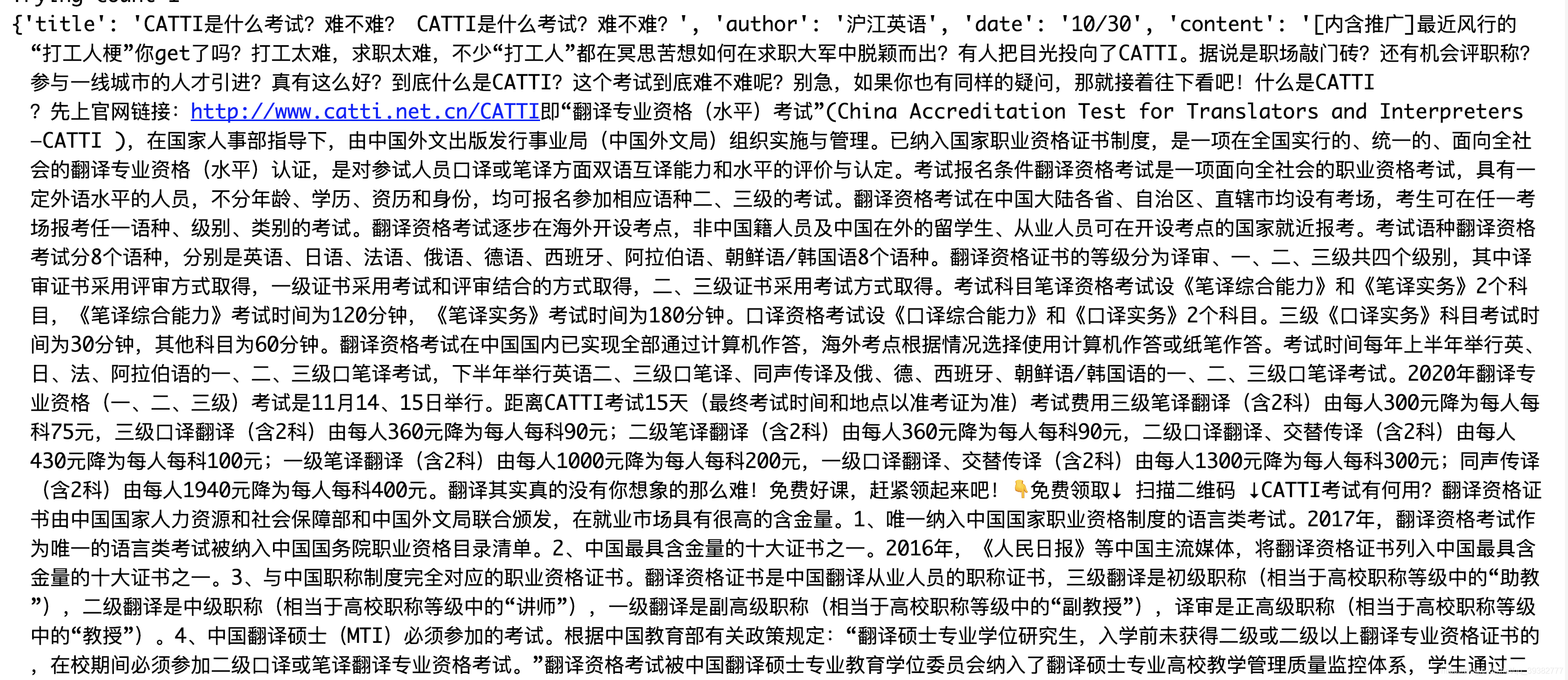

from pyquery import PyQuery as pq

def parse_detail(html):
    doc = pq(html)
    title = doc('.rich_media_title').text()
    content = doc('.rich_media_content').text().replace("\n", "")
    date = doc('#publish_time').text()
    name = doc('#js_name').text()
    return {
        'title': title,
        'author': name,
        'date': date,
        'content': content,
    }

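3.6 Saving to MySQL

3.6.1 Designing the table

Create the database: weixin

Create the table: data

Note 1: Article bodies are too large for varchar, so the content column is defined as text.

Note 2: The scraped content contains emoji and similar symbols, which cause an error when saved to MySQL: InternalError: (1366, u"Incorrect string value: '\xF0\x9F\x90\ . The fix is to set the encoding to utf8mb4 by running the following statement in the database (replace data with your own table name):

alter table data convert to character set utf8mb4 collate utf8mb4_bin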
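The reason behind Note 2 is that emoji lie outside the Basic Multilingual Plane and take 4 bytes in UTF-8, while MySQL's older utf8 character set stores at most 3 bytes per character. The sketch below illustrates this; needs_utf8mb4 is a hypothetical helper, and the dog emoji is just an example whose encoding starts with the same bytes as the error message above.

```python
def needs_utf8mb4(text):
    """Return True if text has a character outside the Basic Multilingual Plane.

    Such characters need 4 bytes in UTF-8, which MySQL's 3-byte utf8
    character set cannot store.
    """
    return any(ord(ch) > 0xFFFF for ch in text)


emoji = '\U0001F436'  # dog face, chosen only as an example
print(emoji.encode('utf-8'))           # b'\xf0\x9f\x90\xb6'
print(needs_utf8mb4('title ' + emoji))  # True
print(needs_utf8mb4('catti'))           # False
```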

Note 3: The character encoding must also be set when connecting to the database: charset='utf8mb4'
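Putting the notes in this section together, one possible table definition is sketched below. Only the table name and the four column names (title, author, date, content) come from this article; the id column, the varchar lengths, and storing date as a string are assumptions made for illustration. create_table is a hypothetical helper that works with any DB-API cursor, such as the one pymysql returns.

```python
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS `data` (
    `id` INT UNSIGNED NOT NULL AUTO_INCREMENT,  -- assumed surrogate key
    `title` VARCHAR(255),                       -- assumed length
    `author` VARCHAR(255),                      -- assumed length
    `date` VARCHAR(64),                         -- kept as scraped text
    `content` TEXT,                             -- too large for varchar
    PRIMARY KEY (`id`)
) DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_bin
"""


def create_table(cursor):
    """Run the table definition on a DB-API cursor."""
    cursor.execute(CREATE_TABLE_SQL)
```

If an article is longer than the 65,535 bytes a TEXT column can hold, MEDIUMTEXT is the next size up.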

3.6.2 Code for saving to the database

def get_conn():
    # Replace your_password with the real password
    conn = pymysql.connect(host="127.0.0.1",
                           user="root",
                           password="your_password",
                           database="weixin",
                           charset='utf8mb4')
    cursor = conn.cursor()
    return conn, cursor

def close_conn(conn, cursor):
    cursor.close()
    conn.commit()
    conn.close()

def save_to_mysql(result):
    conn, cursor = get_conn()
    if result is not None:
        data = [result.get('title'), result.get('author'), result.get('date'), result.get('content')]
        # Parameterized query: the driver escapes the values
        sql = "insert into data(title, author, date, content) values(%s, %s, %s, %s)"
        cursor.execute(sql, (data[0], data[1], data[2], data[3]))
    close_conn(conn, cursor)

4. Results

The first 2 pages of results were crawled for this demonstration:

Full code

import re

from urllib.parse import urlencode

from requests.exceptions import ConnectionError

import requests

from pyquery import PyQuery as pq

from selenium import webdriver

from fake_useragent import UserAgent

import pymysql

base_url = 'https://weixin.sogou.com/weixin?'

ua = UserAgent().random

headers = {'Cookie': 'SUV=00BC42EFDA11E2615BD9501783FF7490; CXID=62F139BEE160D023DCA77FFE46DF91D4; SUID=61E211DA4D238B0A5BDAB0B900055D85; ad=Yd1L5yllll2tbusclllllVeEkmUlllllT1Xywkllll9llllllZtll5@@@@@@@@@@; SNUID=A60850E83832BB84FAA2B6F438762A9E; IPLOC=CN4400; ld=Nlllllllll2tPpd8lllllVh9bTGlllllTLk@6yllll9llllljklll5@@@@@@@@@@; ABTEST=0|1552183166|v1; weixinIndexVisited=1; sct=1; ppinf=5|1552189565|1553399165|dHJ1c3Q6MToxfGNsaWVudGlkOjQ6MjAxN3x1bmlxbmFtZTo4OnRyaWFuZ2xlfGNydDoxMDoxNTUyMTg5NTY1fHJlZm5pY2s6ODp0cmlhbmdsZXx1c2VyaWQ6NDQ6bzl0Mmx1UHBWaElMOWYtYjBhNTNmWEEyY0RRWUB3ZWl4aW4uc29odS5jb218; pprdig=eKbU5eBV3EJe0dTpD9TJ9zQaC2Sq7rMxdIk7_8L7Auw0WcJRpE-AepJO7YGSnxk9K6iItnJuxRuhmAFJChGU84zYiQDMr08dIbTParlp32kHMtVFYV55MNF1rGsvFdPUP9wU-eLjl5bAr77Sahi6mDDozvBYjxOp1kfwkIVfRWA; sgid=12-39650667-AVyEiaH25LM0Xc0oS7saTeFQ; ppmdig=15522139360000003552a8b2e2dcbc238f5f9cc3bc460fd0; JSESSIONID=aaak4O9nDyOCAgPVQKZKw','Host': 'weixin.sogou.com','Upgrade-Insecure-Requests': '1','User-Agent': ua

}

proxy_pool_url = 'http://127.0.0.1:5555/random'

proxy = None

max_count = 5

def get_proxy():
    # Ask the local proxy pool for one usable proxy address
    try:
        response = requests.get(proxy_pool_url)
        if response.status_code == 200:
            return response.text
        return None
    except ConnectionError:
        return None

def get_html(url, count=1):
    global proxy
    # Give up once the attempt limit is reached
    if count >= max_count:
        print("Tried too many times.")
        return None
    try:
        if proxy:
            # requests picks the proxy by URL scheme, and base_url is https
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)
        else:
            response = requests.get(url, allow_redirects=False, headers=headers)
        if response.status_code == 200:
            return response.text
        if response.status_code == 302:
            # The IP address has been blocked, so a proxy is needed
            print('302')
            proxy = get_proxy()
            if proxy:
                print("Using proxy:", proxy)
                return get_html(url, count + 1)
            else:
                print("Get proxy failed")
                return None
    except ConnectionError as e:
        print('Error Occurred:', e.args)
        proxy = get_proxy()
        count += 1
        return get_html(url, count)

def get_index(keyword, page):
    data = {
        'query': keyword,
        'type': 2,  # 2 means articles
        'page': page,
        'ie': 'utf8'
    }
    queries = urlencode(data)
    url = base_url + queries
    html = get_html(url)
    return html

def parse_index(html):
    doc = pq(html)
    items = doc('.news-box .news-list li .txt-box h3 a').items()
    for item in items:
        yield item.attr('href')

def find_true_url(html):
    n_url = ''
    parts = re.findall("url.*?'(.*?)';", html)
    for i in parts:
        n_url += i
    # str.replace returns a new string, so the result must be assigned
    n_url = n_url.replace("@", "")
    return n_url

def get_detail_1(url, count=1):
    global proxy
    # Same attempt limit as get_html
    if count >= max_count:
        print("Tried too many times.")
        return None
    try:
        if proxy:
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)
        else:
            response = requests.get(url, allow_redirects=False, headers=headers)
        if response.status_code == 200:
            return response.text
        if response.status_code == 302:
            # The IP address has been blocked, so a proxy is needed
            print('302')
            proxy = get_proxy()
            if proxy:
                print("Using proxy:", proxy)
                return get_detail_1(url, count + 1)
            else:
                print("Get proxy failed")
                return None
    except ConnectionError as e:
        print('Error Occurred:', e.args)
        proxy = get_proxy()
        count += 1
        return get_detail_1(url, count)

# The HTML fetched with requests is missing the element with id publish_time,
# presumably because it is loaded by JavaScript, so Selenium is used instead
def get_detail_2(url):
    options = webdriver.ChromeOptions()
    # Do not load images, to speed up page loads
    options.add_experimental_option("prefs", {"profile.managed_default_content_settings.images": 2})
    # Run headless, without opening a browser window
    options.add_argument('--headless')
    driver = webdriver.Chrome(options=options)
    driver.get(url)
    driver.implicitly_wait(20)
    html = driver.page_source
    driver.quit()
    return html

def parse_detail(html):
    doc = pq(html)
    title = doc('.rich_media_title').text()
    content = doc('.rich_media_content').text().replace("\n", "")
    date = doc('#publish_time').text()
    name = doc('#js_name').text()
    return {
        'title': title,
        'author': name,
        'date': date,
        'content': content,
    }

def get_conn():
    # Replace your_password with the real password
    conn = pymysql.connect(host="127.0.0.1",
                           user="root",
                           password="your_password",
                           database="weixin",
                           charset='utf8mb4')
    cursor = conn.cursor()
    return conn, cursor

def close_conn(conn, cursor):
    cursor.close()
    conn.commit()
    conn.close()

def save_to_mysql(result):
    conn, cursor = get_conn()
    if result is not None:
        data = [result.get('title'), result.get('author'), result.get('date'), result.get('content')]
        sql = "insert into data(title, author, date, content) values(%s, %s, %s, %s)"
        cursor.execute(sql, (data[0], data[1], data[2], data[3]))
    close_conn(conn, cursor)

def main():
    for page in range(1, 3):
        html = get_index('catti', page)
        if html:
            article_urls = parse_index(html)
            for article_url in article_urls:
                article_url = 'https://weixin.sogou.com' + article_url
                article_html = get_detail_1(article_url)
                # Skip this article if the intermediate page could not be fetched
                if not article_html:
                    continue
                a = find_true_url(article_html)
                b = get_detail_2(a)
                if b:
                    article_data = parse_detail(b)
                    save_to_mysql(article_data)
                    print(article_data)
                    print("==========================================================")

if __name__ == '__main__':
    main()

References

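[1] Crawling Sogou WeChat articles with Python 3.

[2] Python crawler for Sogou WeChat articles (proxy pool + a new fix for the redirect link problem).

[3] Introduction to and practice of Python web crawlers (strongly recommended for beginners), including a full set of videos, code, and course materials.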
