Python Scraper: Downloading 优品PPT (ypppt.com) Templates (requests + BeautifulSoup + fake_useragent + os)

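It's been a while since I posted anything, so here's a quick scraper post. This one is fairly simple, so Python beginners can copy, paste, and play with it. Just make sure you install the first three imported libraries (requests, fake_useragent and bs4, plus lxml for the parser). Without further ado, here's the code: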

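```python
import os

import requests
from fake_useragent import UserAgent  # Random User-Agent library, quite handy
# HTML parsing library. It uses the lxml parser, so lxml must be installed.
# You could also use XPath from lxml instead, but I haven't learned it, so BeautifulSoup it is.
from bs4 import BeautifulSoup


class APP:  # Simple use of a Python class; you don't have to write it this way, I just did it for practice
    def __init__(self, key):
        # Search URL for the category keyword entered by the user
        self.url = "https://www.ypppt.com/p/search.php?kwtype=1&q={}".format(key)
        # Random User-Agent so the requests look like they come from a normal browser
        self.headers = {'User-Agent': str(UserAgent().random)}
        # Folder the templates are saved into
        self.path = "./优品PPT/"

    def Path(self):
        # Create the download folder if it does not exist yet
        if not os.path.exists(self.path):
            os.makedirs(self.path)

    def get_pages(self):
        # Fetch the search results page and visit every template in the result list
        req = requests.get(self.url, headers=self.headers)
        req.encoding = "utf-8"
        soup = BeautifulSoup(req.text, "lxml")
        urls = soup.find("ul", class_="posts clear").find_all("li")
        for url in urls:
            url_details = "https://www.ypppt.com{}".format(url.a["href"])
            name = url.find("a", class_="p-title").text
            self.details(url_details, name)

    def details(self, url, name):
        # Template detail page: follow its "download" button to the download page
        req = requests.get(url, headers=self.headers)
        soup = BeautifulSoup(req.text, "lxml")
        url = "https://www.ypppt.com{}".format(soup.find("div", class_="button").a["href"])
        req = requests.get(url, headers=self.headers)
        soup = BeautifulSoup(req.text, "lxml")
        # The first link in the download list points to the actual file
        down_url = soup.find("ul", class_="down clear").li.a["href"]
        # Append the extension (e.g. ".zip") taken from the last 4 characters of the URL
        name = name + down_url[-4:]
        self.save(down_url, name)

    def save(self, url, name):
        path = self.path + name
        if not os.path.exists(path):
            # Only download files that are not already on disk
            print("Downloading {}".format(name))
            response = requests.get(url, headers=self.headers)
            with open(path, "wb") as fp:
                fp.write(response.content)
        else:
            print("{} already exists, skipping...".format(name))

    def main(self):
        self.Path()
        self.get_pages()


if __name__ == "__main__":
    key = input("Enter a PPT category:\n")
    a = APP(key)
    a.main()
    print("Download complete!!!")
```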
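In `details`, the file name is built as `name + down_url[-4:]`, which assumes the extension is exactly four characters (`.zip`, `.rar`). A `.7z` file or a download URL with a query string would give a wrong name, and a title containing `/` or `:` cannot be saved as-is. A minimal sketch of a more defensive alternative using only the standard library follows. The helper names `absolute_url` and `build_filename` are hypothetical (not part of the original script), and replacing Windows-reserved characters with `_` is an assumed sanitizing rule.

```python
import os
import re
from urllib.parse import urljoin, urlparse

# Base URL taken from the original script; relative hrefs are joined onto it.
BASE_URL = "https://www.ypppt.com"


def absolute_url(href: str) -> str:
    """Turn a relative href such as '/p/123.html' into a full URL.

    Hypothetical helper; absolute hrefs are returned unchanged by urljoin.
    """
    return urljoin(BASE_URL, href)


def build_filename(title: str, download_url: str) -> str:
    """Build a local file name from a template title and its download URL.

    Hypothetical helper. The extension comes from the URL path
    (e.g. '.zip', '.rar', '.7z'), so query strings are ignored and
    extensions of any length work.
    """
    _, ext = os.path.splitext(urlparse(download_url).path)
    # Replace characters that are not allowed in Windows file names (assumed rule).
    safe_title = re.sub(r'[\\/:*?"<>|]', "_", title).strip()
    return safe_title + ext


if __name__ == "__main__":
    print(absolute_url("/p/123.html"))
    print(build_filename("A/B: test", "http://x/y.7z"))
```

Inside the class, `details` could then call `self.save(down_url, build_filename(name, down_url))`, and `get_pages` could use `absolute_url(url.a["href"])` instead of the string formatting.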

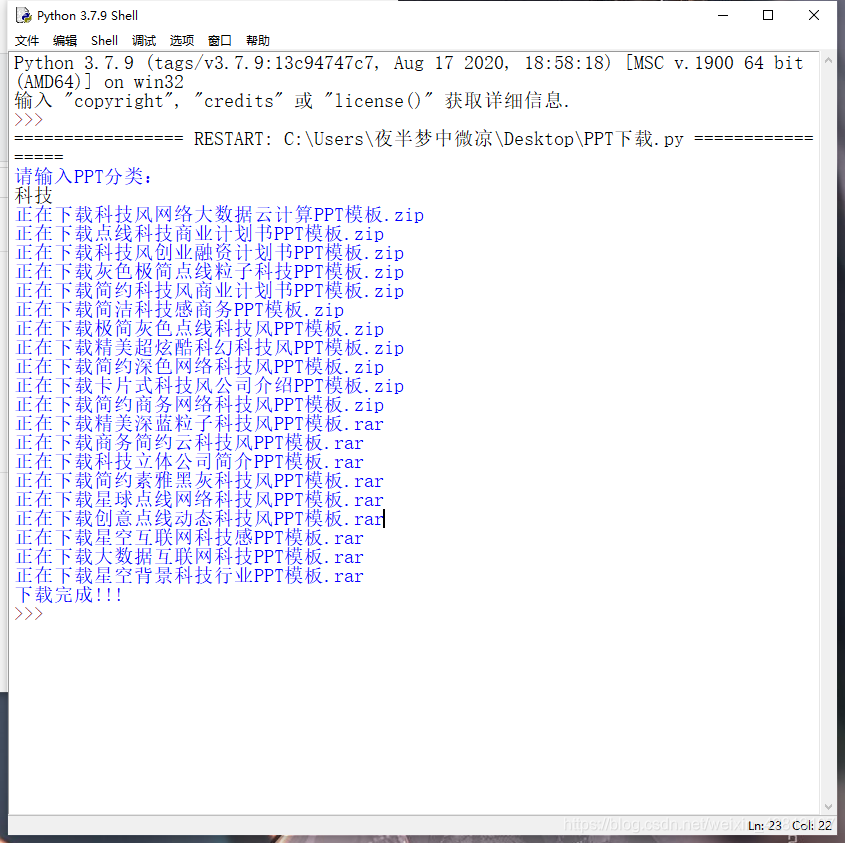

运行效果如下图:

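That's all for comments. If anything is unclear, feel free to send me a private message; I was honestly too lazy to write more.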
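If the script stops with a `ModuleNotFoundError`, one of the libraries mentioned at the start is probably missing. The short sketch below is not part of the original post. It uses `importlib.util.find_spec` to look for each library without importing it, and maps the import names to the package names published on PyPI (`bs4` is installed as `beautifulsoup4`, `fake_useragent` as `fake-useragent`). The function name `missing_packages` is a hypothetical helper.

```python
import importlib.util

# Import names used by the scraper, mapped to the package names used with pip.
REQUIRED = {
    "requests": "requests",
    "fake_useragent": "fake-useragent",
    "bs4": "beautifulsoup4",
    "lxml": "lxml",
}


def missing_packages(required: dict) -> list:
    """Return the pip package names whose top-level module cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All dependencies are installed.")
```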
