Introduction to Common k8s Resources

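Contents

- 5. Common k8s resources
- Pod
- Pod controllers
- Name
- Namespace
- Label
- Label selectors
- Service
- Ingress
- 5.1 Creating a Pod resource
- 5.2 ReplicationController resource
- 5.2.1 Creating an rc
- 5.2.2 Rolling upgrade of an rc
- 5.3 service resource
- 5.4 deployment resource
- 5.5 Deploying tomcat+mysql
- 5.6 Deploying wordpress on k8s
- 5.7 Deploying Zabbix on k8s
- 5.8 Deploying zabbix on k8s, improved version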

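5. Common k8s resources

Pod

- A Pod is the smallest logical unit (atomic unit) that can be run in K8S
- One Pod can run multiple containers, which share the UTS, NET and IPC namespaces
- Think of a Pod as a pea pod, with each container in the same Pod being one of the peas
- Running multiple containers in one Pod is also called the sidecar (SideCar) pattern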

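Pod controllers

- A Pod controller is a template for starting Pods. It ensures that Pods started in K8S always run as expected (replica count, lifecycle, health checks, ...)
- K8S provides many Pod controllers; the commonly used ones are:

– Deployment (deployments)
– DaemonSet (one copy on every compute node)
– ReplicaSet (not normally used directly to provide services)
– StatefulSet (Pod controller for stateful applications)
– Job (manages one-off tasks)
– CronJob (manages scheduled tasks)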

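Name

- K8S uses "resources" to define every logical concept (function), so each resource should have its own name
- A resource carries configuration such as the API version (apiVersion), kind (kind), metadata (metadata), specification (spec) and status (status)
- The name is normally defined in the resource's metadata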

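Namespace

- As projects, people and cluster size grow, a way to isolate the resources inside K8S is needed; this is the namespace
- A namespace can be thought of as a virtual cluster group inside K8S
- Resources in different namespaces may share a name; resources of the same kind in the same namespace may not
- Sensible use of namespaces lets cluster administrators classify, manage and browse the services delivered to K8S more easily
- The namespaces that exist by default in K8S are: default, kube-system, kube-public
- When querying a specific resource in K8S, specify its namespace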

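Label

- Labels are a characteristic k8s management mechanism that makes it easy to classify and manage resource objects.
- One label can apply to many resources, and one resource can carry many labels; the relationship is many-to-many.
- Giving a resource several labels allows it to be managed along different dimensions.
- A label has the form key=value
- Similar to labels, there are also annotations (annotations)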

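Label selectors

- Once resources are labeled, a label selector can be used to filter on specific labels
- There are currently two kinds of label selector: equality-based (equal, not equal) and set-based (in, not in, exists)
- Many resources support embedded label selector fields:

–matchLabels

–matchExpressions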

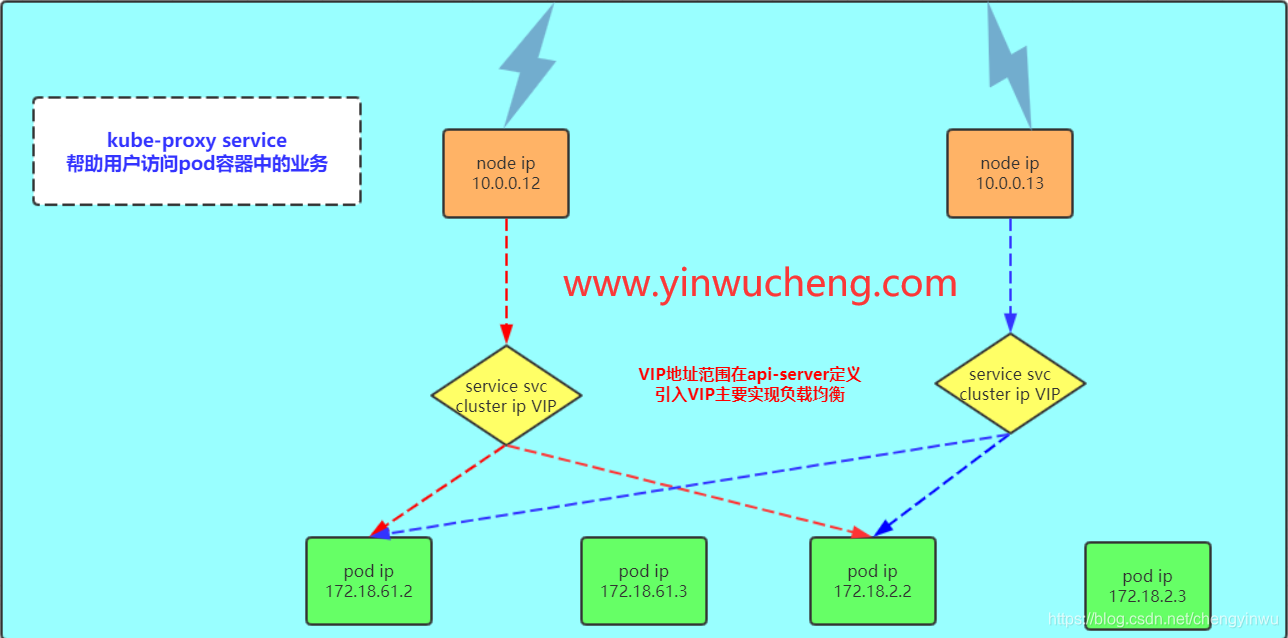
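The two selector styles above can be illustrated with a small Python sketch. This is not the Kubernetes implementation, only a minimal model of how `matchLabels` and `matchExpressions` filter labeled resources; the function name `matches` and the sample pod labels are made up for the illustration.

```python
def matches(labels, match_labels=None, match_expressions=None):
    """Return True if a resource's labels satisfy the selector (illustrative model)."""
    # Equality-based part: every key=value pair must be present on the resource.
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    # Set-based part: In / NotIn / Exists / DoesNotExist.
    for expr in match_expressions or []:
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and labels.get(key) in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


# Hypothetical pods and their labels.
pods = {
    "nginx": {"app": "web"},
    "nginx-9b36r": {"app": "myweb"},
    "test": {"app": "web", "tier": "frontend"},
}

by_equality = sorted(n for n, l in pods.items() if matches(l, match_labels={"app": "web"}))
by_set = sorted(
    n for n, l in pods.items()
    if matches(l, match_expressions=[{"key": "app", "operator": "In", "values": ["myweb", "web"]},
                                     {"key": "tier", "operator": "DoesNotExist"}])
)
print(by_equality)
print(by_set)
```

All conditions in a selector are combined with AND, which is why the second query drops the pod that carries a `tier` label.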

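Service

- In K8S every Pod is assigned its own IP address, but that address disappears when the Pod is destroyed
- The Service is the core concept that solves this problem
- A Service can be seen as the external access interface for a group of Pods that provide the same service
- Which Pods a Service applies to is defined by a label selector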

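Ingress

- Ingress is the interface through which applications in a K8S cluster are exposed externally; it works at layer 7 of the OSI reference model
- A Service can only schedule L4 traffic, in the form ip+port
- Ingress can schedule traffic for different business domains and different URL paths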

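Core components

- Configuration store → etcd service
- Master (control) node
  ~ kube-apiserver service
  ~ kube-controller-manager service
  ~ kube-scheduler service
- Compute (node) node
  ~ kubelet service
  ~ kube-proxy service
- CLI client
  ~ kubectl
- Core add-ons
  ~ CNI network plugin → flannel/calico
  ~ Service discovery plugin → coredns
  ~ Service exposure plugin → traefik
  ~ GUI management plugin → Dashboard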

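5.1 Creating a Pod resource

The pod is the smallest resource unit.

Any k8s resource can be defined by a yml manifest file.

Main parts of a k8s yaml file:

apiVersion: v1    API version

kind: Pod    resource type

metadata:    metadata, attributes

spec:    detailed specification

On the master node:

1. Create a directory to hold the pod files

mkdir -p k8s_yaml/pod && cd k8s_yaml/

2. Write the yaml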

[root@k8s-master k8s_yaml]# cat k8s_pod.yml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80

Note:

vim /etc/kubernetes/apiserver

Remove ServiceAccount (presumably from the admission-control list in that file), then restart the apiserver

systemctl restart kube-apiserver.service

3. Create the resource

[root@k8s-master pod]# kubectl create -f k8s_pod.yml

4. View the resource

[root@k8s-master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 52s

Check which node the pod was scheduled to

[root@k8s-master pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 0/1 ContainerCreating 0 1m <none> 10.0.0.13

5. View the resource description

[root@k8s-master pod]# kubectl describe pod nginx

Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

The fix is described below.

6. On the node:

Upload pod-infrastructure-latest.tar.gz and the nginx image archive to k8s-node2

[root@k8s-node-2 ~]# docker load -i pod-infrastructure-latest.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.11:5000/pod-infrastructure:latest

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/pod-infrastructure:latest

[root@k8s-node-2 ~]# docker load -i docker_nginx1.13.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/nginx:1.13 10.0.0.11:5000/nginx:1.13

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/nginx:1.13

=====================================================================

On the master node:

kubectl describe pod nginx    # view the description and status

kubectl get pod

======================================================================

7. On both node1 and node2:

7.1. Change the image address

vim /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/pod-infrastructure:latest"

7.2. Restart the service

systemctl restart kubelet.service

8. Verify on the master node:

[root@k8s-master k8s_yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

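nginx 1/1 Running 0 3m 172.18.92.2 10.0.0.13

Why does k8s need to start two containers when one pod resource is created?

Business container: nginx

Infrastructure container: pod (the pod is the smallest resource unit in k8s). It provides customized functionality so that k8s can implement its built-in advanced features.

===============================================================================================

Pod resource: consists of at least two containers, the pod infrastructure container plus the business containers (at most 1+4 according to these notes)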

Pod manifest 2:

[root@k8s-master pod]# vim k8s_pod3.yml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
  - name: busybox
    image: 10.0.0.11:5000/busybox:latest
    command: ["sleep","1000"]

[root@k8s-master pod]# kubectl create -f k8s_pod3.yml

pod "test" created

[root@k8s-master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 54m

test 2/2 Running 0 30s

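5.2 ReplicationController resource

What an rc does: it keeps the specified number of pods alive at all times. An rc is associated with its pods through a label selector (selector).

Common commands for k8s resources:

kubectl create -f xxx.yaml    create a resource

kubectl get pod|rc|node    list resources

kubectl describe pod nginx    show the details of a specific resource (the main troubleshooting tool)

kubectl delete pod nginx    or    kubectl delete -f xxx.yaml    delete a resource, identified either by type + name or by its yaml file

kubectl edit pod nginx    modify a resource's attributes and configuration

kubectl get pod -o wide --show-labels    show label information

kubectl get rc -o wide    show which label an rc uses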

5.2.1 Creating an rc

[root@k8s-master k8s_yaml]# mkdir rc

[root@k8s-master k8s_yaml]# cd rc/

[root@k8s-master rc]# vim k8s_rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5            # 5 pods
  selector:
    app: myweb
  template:              # pod template
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

[root@k8s-master rc]# kubectl create -f k8s_rc.yml

replicationcontroller "nginx" created

[root@k8s-master rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 5 4s

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 31m 172.18.7.2 10.0.0.13

nginx-9b36r 1/1 Running 0 59s 172.18.7.4 10.0.0.13

nginx-jt31n 1/1 Running 0 59s 172.18.81.3 10.0.0.12

nginx-lhzgt 1/1 Running 0 59s 172.18.7.5 10.0.0.13

nginx-v8mzm 1/1 Running 0 59s 172.18.81.4 10.0.0.12

nginx-vcn83 1/1 Running 0 59s 172.18.81.5 10.0.0.12

nginx2 1/1 Running 0 11m 172.18.81.2 10.0.0.12

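test 2/2 Running 0 8m 172.18.7.3 10.0.0.13

Simulate a failure of node2:

[root@k8s-node-2 ~]# systemctl stop kubelet.service

[root@k8s-master rc]# kubectl get nodes

NAME STATUS AGE

10.0.0.12 Ready 9h

10.0.0.13 NotReady 9h

At this point the master has detected that node2 has failed. k8s keeps retrying node2 and evicts the pods of the failed node to the other node. It is called eviction, but what actually happens is that new pods are started; this is exactly the job of the rc.

[root@k8s-master rc]# kubectl delete node 10.0.0.13

node "10.0.0.13" deleted

Once the master deletes node2, the pods are quickly moved to the other node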

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-jt31n 1/1 Running 0 5m 172.18.81.3 10.0.0.12

nginx-ml7j3 1/1 Running 0 14s 172.18.81.7 10.0.0.12 # newly started pod

nginx-v8mzm 1/1 Running 0 5m 172.18.81.4 10.0.0.12

nginx-vcn83 1/1 Running 0 5m 172.18.81.5 10.0.0.12

nginx-vkgmv 1/1 Running 0 14s 172.18.81.6 10.0.0.12 # newly started pod

nginx2 1/1 Running 0 16m 172.18.81.2 10.0.0.12

Start node2 again

[root@k8s-node-2 ~]# systemctl start kubelet.service

[root@k8s-master rc]# kubectl get nodes

NAME STATUS AGE

10.0.0.12 Ready 9h

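10.0.0.13 Ready 49s

Pods created from now on are placed on node2 first, until the two nodes are balanced; if the nodes have different hardware, the better-provisioned one is preferred. If you remove a container on a node, it is restarted automatically: the kubelet has its own protection mechanism, which is one of its strengths.

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

[root@k8s-master rc]# kubectl get rc -o wide

Summary:

The kubelet only monitors the docker containers on its own machine; if a pod's container is removed locally, it starts a new container.

In the k8s cluster, when the number of pods drops (for example because the kubelet on a node goes down), the controller-manager starts new pods (through the api-server, with the scheduler choosing the node).

An rc and its pods are associated through the label selector.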
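The behavior summarized above (count the pods that match the selector, then create or remove pods until the count equals `replicas`) can be sketched as a small reconcile function. This is only an illustrative model and not how the controller-manager is implemented; the function name `reconcile`, the pod dictionaries and the generated pod names are assumptions made for the example.

```python
import itertools


def reconcile(pods, selector, replicas, name_prefix, counter):
    """One pass of an rc-style control loop: return the pod list after reconciliation."""
    matching = [p for p in pods
                if all(p["labels"].get(k) == v for k, v in selector.items())]
    others = [p for p in pods if p not in matching]
    # Too few matching pods: create replacements from the template labels.
    while len(matching) < replicas:
        matching.append({"name": f"{name_prefix}-{next(counter)}",
                         "labels": dict(selector),
                         "node": None})  # placement is the scheduler's job
    # Too many matching pods: drop the surplus.
    matching = matching[:replicas]
    return others + matching


selector = {"app": "myweb"}
pods = [
    {"name": "nginx-9b36r", "labels": {"app": "myweb"}, "node": "10.0.0.13"},
    {"name": "nginx-jt31n", "labels": {"app": "myweb"}, "node": "10.0.0.12"},
    {"name": "nginx-lhzgt", "labels": {"app": "myweb"}, "node": "10.0.0.13"},
    {"name": "nginx-v8mzm", "labels": {"app": "myweb"}, "node": "10.0.0.12"},
    {"name": "nginx-vcn83", "labels": {"app": "myweb"}, "node": "10.0.0.12"},
    {"name": "nginx2", "labels": {"app": "web"}, "node": "10.0.0.12"},
]

# Simulate deleting node 10.0.0.13: its pods disappear from the cluster state.
survivors = [p for p in pods if p["node"] != "10.0.0.13"]
after = reconcile(survivors, selector, replicas=5, name_prefix="nginx",
                  counter=itertools.count(1))
managed = [p for p in after if p["labels"].get("app") == "myweb"]
print(len(survivors), len(after), len(managed))
```

The pod labeled `app: web` is left alone because it does not match the selector, which mirrors how the standalone pods in the listings above survive rc operations.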

5.2.2 Rolling upgrade of an rc

0. Prerequisite: upload the nginx 1.15 image on a node: docker load -i ---> docker tag ---> docker push

[root@k8s-node-2 ~]# docker load -i docker_nginx1.15.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/nginx:latest 10.0.0.11:5000/nginx:1.15

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/nginx:1.15

=================================================================

1. Write the yml file for the upgraded version

[root@k8s-master rc]# cat k8s_rc2.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2
spec:
  replicas: 5                # 5 replicas
  selector:
    app: myweb2              # label selector
  template:
    metadata:
      labels:
        app: myweb2          # label
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.15   # version
        ports:
        - containerPort: 80

2. Perform the rolling upgrade and verify:

[root@k8s-master rc]# kubectl rolling-update nginx -f k8s_rc2.yml --update-period=5s

Created nginx2

Scaling up nginx2 from 0 to 5, scaling down nginx from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginx2 up to 1

Scaling nginx down to 4

Scaling nginx2 up to 2

Scaling nginx down to 3

Scaling nginx2 up to 3

Scaling nginx down to 2

Scaling nginx2 up to 4

Scaling nginx down to 1

Scaling nginx2 up to 5

Scaling nginx down to 0

Update succeeded. Deleting nginx

replicationcontroller "nginx" rolling updated to "nginx2"

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 19m 172.18.7.2 10.0.0.13

nginx2 1/1 Running 0 38m 172.18.81.2 10.0.0.12

nginx2-0xhz7 1/1 Running 0 1m 172.18.81.7 10.0.0.12

nginx2-8psw5 1/1 Running 0 1m 172.18.7.5 10.0.0.13

nginx2-lqw6t 1/1 Running 0 56s 172.18.81.3 10.0.0.12

nginx2-w7jpn 1/1 Running 0 1m 172.18.7.3 10.0.0.13

nginx2-xntt8 1/1 Running 0 1m 172.18.7.4 10.0.0.13

[root@k8s-master rc]# curl -I 172.18.7.3

HTTP/1.1 200 OK

Server: nginx/1.15.5 # upgraded to 1.15

Date: Mon, 27 Jan 2020 10:50:00 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

3. Roll back and verify. Rollback relies mainly on the yaml file

[root@k8s-master rc]# kubectl rolling-update nginx2 -f k8s_rc.yml --update-period=2s

Created nginx

Scaling up nginx from 0 to 5, scaling down nginx2 from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginx up to 1

Scaling nginx2 down to 4

Scaling nginx up to 2

Scaling nginx2 down to 3

Scaling nginx up to 3

Scaling nginx2 down to 2

Scaling nginx up to 4

Scaling nginx2 down to 1

Scaling nginx up to 5

Scaling nginx2 down to 0

Update succeeded. Deleting nginx2

replicationcontroller "nginx2" rolling updated to "nginx"

[root@k8s-master rc]# kubectl get pod -o wide

[root@k8s-master rc]# curl -I 172.18.81.5

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 27 Jan 2020 10:52:05 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

Summary:

--update-period=2s

If the update period is not specified, the default is 1 minute.

Rollback relies mainly on the yaml file.
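The alternating "scale up one, scale down one" pattern in the logs above can be reproduced with a short Python sketch. It only models the message sequence and the pod count, assuming both controllers end up swapping the same number of replicas one pod at a time; the function name `rolling_update_steps` is made up, and the real command also waits `--update-period` between steps.

```python
def rolling_update_steps(old, new, replicas):
    """Return the scale messages and the peak pod count of a one-by-one rolling update."""
    steps = []
    peak = replicas
    old_count, new_count = replicas, 0
    for _ in range(replicas):
        new_count += 1                      # start one pod of the new rc first
        steps.append(f"Scaling {new} up to {new_count}")
        peak = max(peak, old_count + new_count)
        old_count -= 1                      # then remove one pod of the old rc
        steps.append(f"Scaling {old} down to {old_count}")
    return steps, peak


steps, peak = rolling_update_steps("nginx", "nginx2", 5)
for line in steps:
    print(line)
print("peak pods:", peak)
```

With 5 replicas the peak is 6 pods, which corresponds to the "keep 5 pods available, don't exceed 6 pods" line printed by the upgrade.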

5.3 service resource

service: svc for short; it exposes ports for pods

1. Create a service

[root@k8s-master k8s_yaml]# mkdir svc

[root@k8s-master k8s_yaml]# cd svc/

[root@k8s-master svc]# vim k8s_svc.yml

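apiVersion: v1
kind: Service
metadata:
  name: myweb            # name of the resource
spec:
  type: NodePort         # the alternative is ClusterIP
  ports:
  - port: 80             # port on the clusterIP (VIP)
    nodePort: 30000      # node port, on the host
    targetPort: 80       # pod port, in the container
  selector:
    app: myweb           # label to associate with

2. Create the svc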

[root@k8s-master svc]# kubectl create -f k8s_svc.yml

service "myweb" created

3. View it

[root@k8s-master svc]# kubectl get service -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 3h <none>

myweb 10.254.153.203 <nodes> 80:30000/TCP 50s app=myweb

[root@k8s-master svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.153.203

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.18.7.5:80,172.18.7.6:80,172.18.81.3:80 + 2 more...

Session Affinity: None

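No events.

kubectl scale rc nginx --replicas=2    dynamically adjusts the replica count

4. Enter the pods and modify the nginx page to demonstrate load balancing and automatic discovery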

[root@k8s-master svc]# kubectl exec -it nginx-0r0s9 /bin/bash

root@nginx-0r0s9:/# cd /usr/share/nginx/html/

root@nginx-0r0s9:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-0r0s9:/usr/share/nginx/html# echo 'web01...' >index.html

root@nginx-0r0s9:/usr/share/nginx/html# exit

exit

[root@k8s-master svc]# kubectl exec -it nginx-11zqw /bin/bash

root@nginx-11zqw:/# echo 'web02' > /usr/share/nginx/html/index.html

root@nginx-11zqw:/# exit

exit

[root@k8s-master svc]# kubectl exec -it nginx-4f8wz /bin/bash

root@nginx-4f8wz:/# echo 'web03' > /usr/share/nginx/html/index.html

root@nginx-4f8wz:/# exit

exit

5. Test from node1 and node2

[root@k8s-node-2 ~]# curl http://10.0.0.12:30000

web03

[root@k8s-node-2 ~]# curl http://10.0.0.12:30000

web01...

[root@k8s-node-2 ~]# curl http://10.0.0.12:30000

web02

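Load balancing is implemented by kube-proxy

6. Change the nodePort range. By default only ports 30000-32767 may be exposed as node ports; the change below widens the range to 3000-50000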

[root@k8s-master svc]# kubectl create -f k8s_svc2.yml

The Service "myweb2" is invalid: spec.ports[0].nodePort: Invalid value: 30000: provided port is already allocated

Solution:

vim /etc/kubernetes/apiserver    add this as the last line

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@k8s-master svc]# systemctl restart kube-apiserver.service

[root@k8s-master svc]# kubectl create -f k8s_svc2.yml

service "myweb2" created

7. Create a service resource from the command line; a random port is mapped to the specified container port

[root@k8s-master svc]# kubectl expose rc nginx --port=80 --target-port=80 --type=NodePort

service "nginx" exposed

[root@k8s-master svc]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 4h <none>

myweb 10.254.153.203 <nodes> 80:30000/TCP 25m app=myweb

myweb2 10.254.210.174 <nodes> 80:3000/TCP 2m app=myweb

nginx 10.254.52.144 <nodes> 80:3949/TCP 1m app=myweb

[root@k8s-master svc]# kubectl delete svc nginx2

8. In older versions a service uses iptables for load balancing by default; from k8s 1.8 on, lvs is recommended (layer-4 load balancing at the transport layer: tcp, udp)

8.1 Service load balancing:

- iptables by default, poor performance
- lvs recommended from version 1.8 on, good performance

8.2 The three kinds of IP in kubernetes

- node ip    # config file: /etc/kubernetes/apiserver
- vip    # config file: /etc/kubernetes/apiserver
- pod ip    # flannel network segment defined in etcd

==========================================================================================

View everything

kubectl get all -o wide

If the network has problems:

systemctl restart flanneld docker kubelet kube-proxy

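5.4 deployment resource

After a rolling upgrade of an rc, access to the service is interrupted, which is why k8s introduced the deployment resource.

Service interruption

1. Before the upgrade, curl works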

[root@k8s-master svc]# curl -I 10.0.0.12:30000

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 27 Jan 2020 12:57:13 GMT

Content-Type: text/html

Content-Length: 6

Last-Modified: Mon, 27 Jan 2020 12:19:45 GMT

Connection: keep-alive

ETag: "5e2ed561-6"

Accept-Ranges: bytes

2. Perform the upgrade

[root@k8s-master rc]# kubectl rolling-update nginx -f k8s_rc2.yml --update-period=3s

Created nginx2

Scaling up nginx2 from 0 to 5, scaling down nginx from 3 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginx2 up to 3

Scaling nginx down to 2

Scaling nginx2 up to 4

Scaling nginx down to 1

Scaling nginx2 up to 5

Scaling nginx down to 0

Update succeeded. Deleting nginx

replicationcontroller "nginx" rolling updated to "nginx2"

3. Access to the service is interrupted

[root@k8s-master rc]# curl -I 10.0.0.12:30000

^C

4. Inspect

[root@k8s-master rc]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

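rc/nginx2 5 5 5 2m myweb 10.0.0.11:5000/nginx:1.15 app=myweb2

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 4h <none>

svc/myweb 10.254.153.203 <nodes> 80:30000/TCP 51m app=myweb

Pain point:

The labels no longer match. The rc upgrade changed the pod label (app=myweb2), but the service keeps its selector (app=myweb), so the service is left with no pods behind it and access is interrupted.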
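The mismatch can be made concrete with a minimal Python sketch that computes which pod IPs a service selector would pick up before and after the rc upgrade. It is only a model of the selection rule; the function name `endpoints` is made up and the IP-to-label maps are sample values in the style of the listings above.

```python
def endpoints(service_selector, pods):
    """Return the sorted pod IPs whose labels satisfy the service selector."""
    return sorted(ip for ip, labels in pods.items()
                  if all(labels.get(k) == v for k, v in service_selector.items()))


svc_selector = {"app": "myweb"}

# Pods of rc "nginx" before the upgrade, pods of rc "nginx2" afterwards.
before = {"172.18.7.5": {"app": "myweb"}, "172.18.81.3": {"app": "myweb"}}
after = {"172.18.7.3": {"app": "myweb2"}, "172.18.81.7": {"app": "myweb2"}}

print(endpoints(svc_selector, before))
print(endpoints(svc_selector, after))
```

After the upgrade the selector matches nothing, so requests to the node port have no backend to reach.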

Solution:

1. Write a deployment

[root@k8s-master deployment]# cat k8s_deploy.yml

apiVersion: extensions/v1beta1     # extensions API group
kind: Deployment                   # resource type
metadata:                          # resource attributes
  name: nginx-deployment           # name of the resource
spec:
  replicas: 3                      # replica count
  minReadySeconds: 60              # rolling upgrade interval
  template:                        # pod template
    metadata:
      labels:
        app: nginx                 # label on the pods
    spec:
      containers:
      - name: nginx                # container name
        image: 10.0.0.11:5000/nginx:1.13   # image the container uses
        ports:
        - containerPort: 80        # port the container exposes
        resources:                 # resource limits
          limits:                  # maximum
            cpu: 100m              # cpu time slice
          requests:                # minimum
            cpu: 100m

2. Create the deployment

[root@k8s-master deploy]# kubectl create -f k8s_deploy.yml

3. View all resources

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 0 5s

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/nginx2 5 5 5 11m myweb 10.0.0.11:5000/nginx:1.15 app=myweb2

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 4h <none>

svc/myweb 10.254.153.203 <nodes> 80:30000/TCP 59m app=myweb

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-2807576163 3 3 3 5s nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx 1/1 Running 0 2h 172.18.7.2 10.0.0.13

po/nginx-deployment-2807576163-4vccn 1/1 Running 0 5s 172.18.7.6 10.0.0.13

po/nginx-deployment-2807576163-5qwxf 1/1 Running 0 5s 172.18.81.4 10.0.0.12

po/nginx-deployment-2807576163-kmw9m 1/1 Running 0 5s 172.18.7.5 10.0.0.13

po/nginx2-1cx68 1/1 Running 0 11m 172.18.81.5 10.0.0.12

po/nginx2-41ppf 1/1 Running 0 11m 172.18.7.4 10.0.0.13

po/nginx2-9j6g4 1/1 Running 0 10m 172.18.81.3 10.0.0.12

po/nginx2-ftqt0 1/1 Running 0 11m 172.18.81.2 10.0.0.12

po/nginx2-w9twq 1/1 Running 0 11m 172.18.7.3 10.0.0.13

4. Create an access port

[root@k8s-master deploy]# kubectl expose deployment nginx-deployment --port=80 --target-port=80 --type=NodePort

5. View the port

[root@k8s-master deployment]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 4h <none>

myweb 10.254.153.203 <nodes> 80:30000/TCP 1h app=myweb

nginx-deployment 10.254.230.84 <nodes> 80:39576/TCP 1m app=nginx

6. Test

[root@k8s-master deployment]# curl -I 10.0.0.12:39576

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 27 Jan 2020 13:12:53 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

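Accept-Ranges: bytes

7. Modify the deployment resource to upgrade

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment    # change the image version in the editor, then verify directly

curl the address and port again to see whether the version has been upgraded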

[root@k8s-master deployment]# curl -I 10.0.0.12:39576

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 27 Jan 2020 13:16:40 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

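Accept-Ranges: bytes

8. Edit the deployment and adjust two parameters

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment

.........

spec:
  minReadySeconds: 60      # a new pod must stay ready for 60s before it counts as available, which paces the rolling upgrade; adjust this
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  strategy:
    rollingUpdate:         # with replicas 3 and the values below, the pod count stays between 2 and 5 during the upgrade
      maxSurge: 2          # at most 2 extra pods may be started during the rolling upgrade; adjust this
      maxUnavailable: 1    # maximum number of unavailable pods allowed during the rolling upgrade
    type: RollingUpdate

.........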
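How `maxSurge` and `maxUnavailable` bound the pod count during a rolling upgrade can be checked with a few lines of Python. This is a simplified sketch that assumes both values are given as absolute integers (percentages, which Kubernetes also accepts, are not handled); the function name `rollout_bounds` is made up for the example.

```python
def rollout_bounds(replicas, max_surge, max_unavailable):
    """Return (minimum available pods, maximum total pods) during a rolling upgrade."""
    min_available = replicas - max_unavailable
    max_total = replicas + max_surge
    return min_available, max_total


# Values from the spec above: replicas 3, maxSurge 2, maxUnavailable 1.
print(rollout_bounds(3, 2, 1))
# With maxSurge 1 instead, the upper bound drops to 4.
print(rollout_bounds(3, 1, 1))
```

A larger `maxSurge` speeds up the rollout at the cost of temporarily using more resources, while a larger `maxUnavailable` trades capacity during the rollout for speed.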

9. Adjust the replica count

[root@k8s-master deploy]# kubectl scale deployment nginx-deployment --replicas=6

10. Change the version to nginx:1.17

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment    # upload nginx 1.17 on node2 beforehand: docker load -i ---> docker tag ---> docker push

The pods are replaced roughly every 60 seconds

11. Check

[root@k8s-master deployment]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 3h 172.18.7.2 10.0.0.13

nginx-deployment-3221239399-7j2cq 1/1 Running 0 5m 172.18.7.8 10.0.0.13

nginx-deployment-3221239399-fgm9m 1/1 Running 0 1m 172.18.7.6 10.0.0.13

nginx-deployment-3221239399-fzlrr 1/1 Running 0 2m 172.18.81.7 10.0.0.12

nginx-deployment-3221239399-m7vpj 1/1 Running 0 5m 172.18.7.9 10.0.0.13

nginx-deployment-3221239399-pt8r3 1/1 Running 0 2m 172.18.81.4 10.0.0.12

nginx-deployment-3221239399-xxpqr 1/1 Running 0 5m 172.18.81.8 10.0.0.12

nginx2-1cx68 1/1 Running 0 34m 172.18.81.5 10.0.0.12

nginx2-41ppf 1/1 Running 0 34m 172.18.7.4 10.0.0.13

nginx2-9j6g4 1/1 Running 0 34m 172.18.81.3 10.0.0.12

nginx2-ftqt0 1/1 Running 0 34m 172.18.81.2 10.0.0.12

nginx2-w9twq 1/1 Running 0 34m 172.18.7.3 10.0.0.13

12. View the rs

[root@k8s-master deployment]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-2807576163 0 0 0 27m

nginx-deployment-3014407781 0 0 0 19m

nginx-deployment-3221239399 6 6 6 8m

[root@k8s-master deploy]# kubectl delete rs nginx-deployment-3428071017

replicaset "nginx-deployment-3428071017" deleted

============================================================================

13. Upgrading and rolling back a deployment

1. Create the deployment resource from the command line; version information can be recorded, which makes rollback easier

kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2. Upgrade the version from the command line

kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

3. View all historical revisions of the deployment

kubectl rollout history deployment nginx

4. Roll the deployment back to the previous revision

kubectl rollout undo deployment nginx

5. Roll the deployment back to a specific revision

kubectl rollout undo deployment nginx --to-revision=2

=======================================================================================

6. View the deployment's revisions

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

7. Test the deployment's revision history

[root@k8s-master deploy]# kubectl delete -f k8s_deploy.yml

deployment "nginx-deployment" deleted

[root@k8s-master deploy]# kubectl create -f k8s_deploy.yml --record

deployment "nginx-deployment" created

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1 kubectl create -f k8s_deploy.yml --record

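The command that was executed is visible, but this is not how we work day to day

==========================================================================================

14. Another method. The approach above can record version information, but it is not a good fit for upgrades

[root@k8s-master deploy]# kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx" created

14.1 View the resource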

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

14.2 Expose the port

[root@k8s-master deploy]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

service "nginx" exposed

[root@k8s-master deploy]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 1d

myweb 10.254.215.251 <nodes> 80:30000/TCP 2h

nginx 10.254.45.223 <nodes> 80:11111/TCP 17s

nginx-deployment 10.254.9.183 <nodes> 80:8416/TCP 32m

14.3 Test access

[root@k8s-master deploy]# curl -I 10.0.0.12:11111

HTTP/1.1 200 OK

Server: nginx/1.13.12

14.4 Upgrade the version

[root@k8s-master deploy]# kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

deployment "nginx" image updated

14.5 Check whether the upgrade was recorded

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

14.6 Upgrade to 1.17

[root@k8s-master deploy]# kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.17

deployment "nginx" image updated

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

3 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.17

14.7 Roll back: by default rollout undo only goes back to the previous revision

[root@k8s-master deploy]# kubectl rollout undo deployment nginx

deployment "nginx" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

3 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.17

4 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

============================================================================================

[root@k8s-master deploy]# kubectl rollout undo deployment nginx

deployment "nginx" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

4 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

5 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.17

=============================================================================================

14.8 Roll back to a specific revision, for example 1.13: just append --to-revision= followed by the revision you want to roll back to

[root@k8s-master deploy]# kubectl rollout undo deployment nginx --to-revision=1

deployment "nginx" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

4 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

5 kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.17

6 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

=============================================================================================
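The renumbering visible in the history listings above (the revision that is rolled back to disappears and comes back under a new, highest number) can be modeled in a few lines of Python. This is a sketch of the observed behavior only, not of the deployment controller's code; the function name `undo` and the dict representation are assumptions, and the case of undoing to the revision that is already current is not modeled.

```python
def undo(history, to_revision=None):
    """Model 'kubectl rollout undo': move the target revision to a new highest number."""
    revisions = sorted(history)
    if to_revision is None:
        to_revision = revisions[-2]        # default target: the previous revision
    image = history.pop(to_revision)
    history[revisions[-1] + 1] = image     # re-record it as the newest revision
    return history


history = {1: "nginx:1.13", 2: "nginx:1.15", 3: "nginx:1.17"}

step1 = dict(undo(history))                 # undo to previous
step2 = dict(undo(history))                 # undo again
step3 = dict(undo(history, to_revision=1))  # undo to a specific revision
print(step1)
print(step2)
print(step3)
```

Two plain undos in a row therefore toggle between the two most recent images, which is why a specific `--to-revision` is needed to reach anything older.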

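15. deployment compared with rc

A deployment upgrade does not interrupt access to the service

A deployment upgrade does not depend on a config file

A deployment upgrade can specify the upgrade interval

A deployment creates an rs (rs: ReplicaSet; rc: ReplicationController)

16. How are rs and rc related?

rs: the new-generation replica controller

rc: the replica controller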

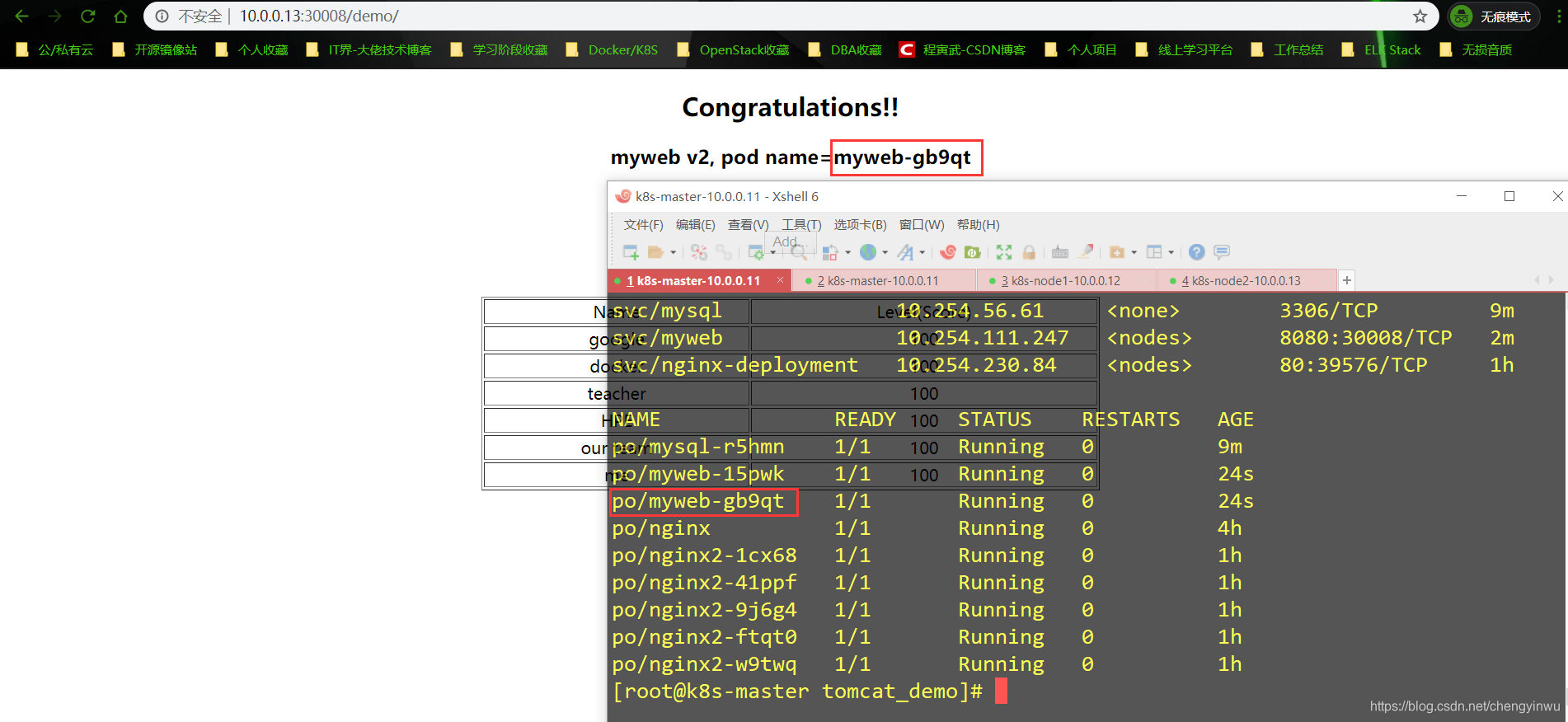

5.5 Deploying tomcat+mysql

In k8s, containers access each other through the VIP address!

0. Upload the mysql and tomcat images in advance

[root@k8s-node-2 ~]# docker load -i docker-mysql-5.7.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/mysql:5.7 10.0.0.11:5000/mysql:5.7

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/mysql:5.7

[root@k8s-node-2 ~]# docker load -i tomcat-app-v2.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.11:5000/tomcat-app:v2

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/tomcat-app:v2

=================================================================

1. Upload the tomcat yml files and delete the config files that contain pv

[root@k8s-master tomcat_demo]# rm -rf *pv*

2. The mysql-rc config file:

[root@k8s-master tomcat_demo]# cat mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

3. The mysql-svc config file

[root@k8s-master tomcat_demo]# cat mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql

4. Create mysql-rc and mysql-svc

[root@k8s-master tomcat_demo]# kubectl create -f mysql-svc.yml

[root@k8s-master tomcat_demo]# kubectl create -f mysql-rc.yml

[root@k8s-master tomcat_demo]# kubectl get rc

NAME DESIRED CURRENT READY AGE

mysql 1 1 1 4s

nginx2 5 5 5 19h

5. Note the mysql IP address so that tomcat can connect to it

[root@k8s-master tomcat_demo]# kubectl get svc

mysql 10.254.221.29 <none> 3306/TCP 57s

6. Configure tomcat-rc

[root@k8s-master tomcat_demo]# cat tomcat-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 2
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.254.221.29'    # fill in the mysql service IP address
        - name: MYSQL_SERVICE_PORT
          value: '3306'

7. Configure tomcat-svc

[root@k8s-master tomcat_demo]# cat tomcat-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30008
  selector:
    app: myweb

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-rc.yml

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-svc.yml

Error from server (AlreadyExists): error when creating "tomcat-svc.yml": services "myweb" already exists

If this error appears, it indicates a name conflict (a service called myweb already exists); delete it first and then create it again

[root@k8s-master tomcat_demo]# kubectl delete -f tomcat-svc.yml

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-svc.yml

8. View all resources

[root@k8s-master tomcat_demo]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 9m

rc/myweb 2 2 2 24s

rc/nginx2 5 5 5 1h

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 6h

svc/mysql 10.254.56.61 <none> 3306/TCP 9m

svc/myweb 10.254.111.247 <nodes> 8080:30008/TCP 2m

svc/nginx-deployment 10.254.230.84 <nodes> 80:39576/TCP 1h

NAME READY STATUS RESTARTS AGE

po/mysql-r5hmn 1/1 Running 0 9m

po/myweb-15pwk 1/1 Running 0 24s

po/myweb-gb9qt 1/1 Running 0 24s

po/nginx 1/1 Running 0 4h

po/nginx2-1cx68 1/1 Running 0 1h

po/nginx2-41ppf 1/1 Running 0 1h

po/nginx2-9j6g4 1/1 Running 0 1h

po/nginx2-ftqt0 1/1 Running 0 1h

po/nginx2-w9twq 1/1 Running 0 1h

9. Finally, test access through the web interface

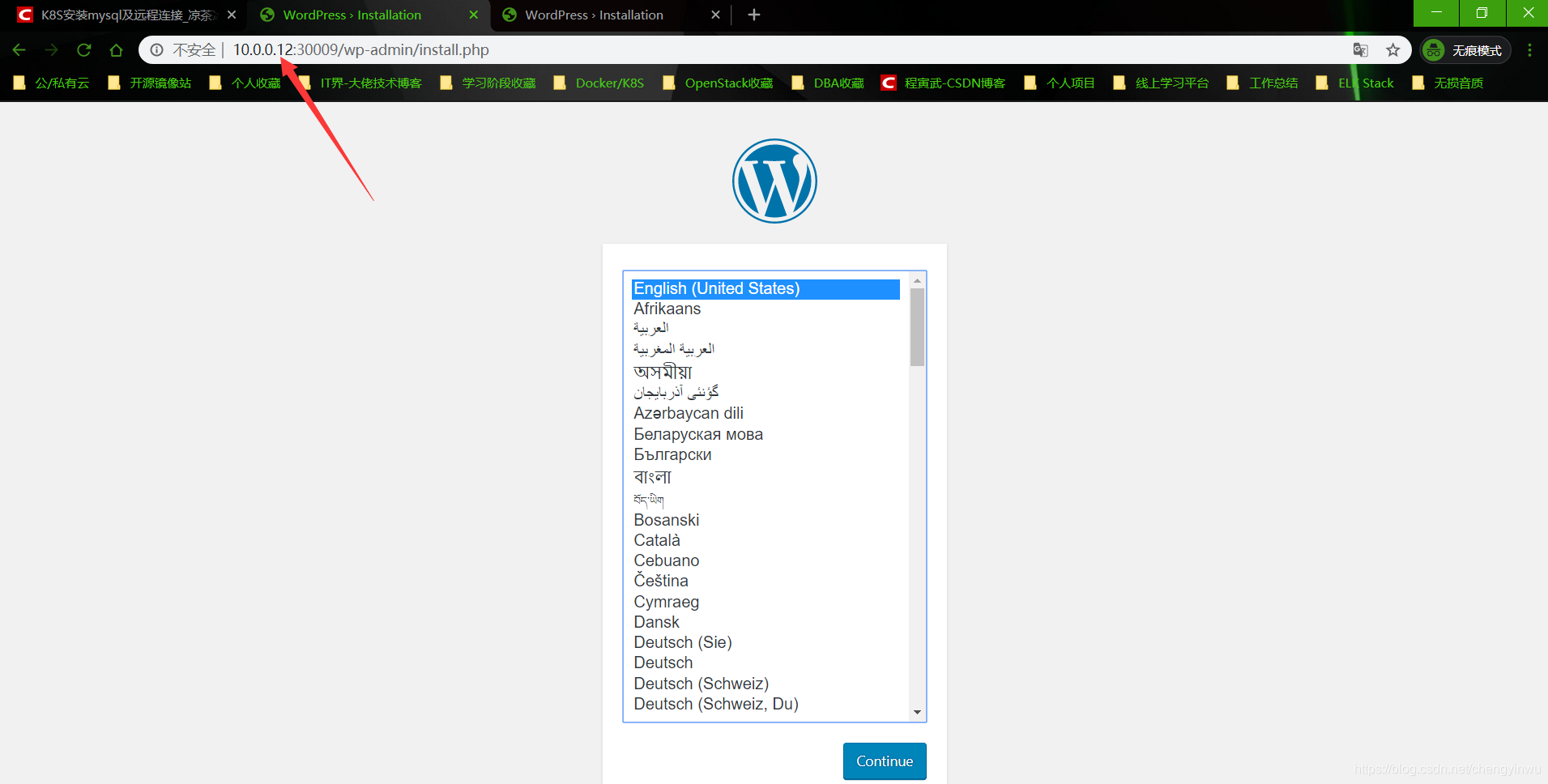

5.6 Deploying wordpress on k8s

[root@k8s-master k8s_yaml]# cp -a tomcat_demo wordpress

[root@k8s-master k8s_yaml]# cd wordpress/

1. Configure the database

[root@k8s-master wordpress]# cat mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql-wp
spec:
  replicas: 1
  selector:
    app: mysql-wp
  template:
    metadata:
      labels:
        app: mysql-wp
    spec:
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: 'somewordpress'
        - name: MYSQL_DATABASE
          value: 'wordpress'
        - name: MYSQL_USER
          value: 'wordpress'
        - name: MYSQL_PASSWORD
          value: 'wordpress'

2. Write mysql-svc

[root@k8s-master wordpress]# cat mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql-wp
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql-wp

3. Write wordpress-rc

[root@k8s-master wordpress]# cat wordpress-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: wordpress
spec:
  replicas: 2
  selector:
    app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: 10.0.0.11:5000/wordpress:latest
        ports:
        - containerPort: 80
        env:
        - name: WORDPRESS_DB_HOST
          value: 'mysql-wp'
        - name: WORDPRESS_DB_USER
          value: 'wordpress'
        - name: WORDPRESS_DB_PASSWORD
          value: 'wordpress'

4. Write wordpress-svc

[root@k8s-master wordpress]# cat wordpress-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: wordpress
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30009
  selector:
    app: wordpress

5. Create the wordpress resources one by one

[root@k8s-master wordpress]# kubectl create -f mysql-rc.yml

[root@k8s-master wordpress]# kubectl create -f mysql-svc.yml

[root@k8s-master wordpress]# kubectl create -f wordpress-svc.yml

[root@k8s-master wordpress]# kubectl create -f wordpress-rc.yml

6. View all resources

[root@k8s-master wordpress]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql-wp 1 1 1 9m

rc/nginx2 5 5 5 2h

rc/wordpress 2 2 2 1m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 6h

svc/mysql-wp 10.254.84.20 <none> 3306/TCP 9m

svc/nginx-deployment 10.254.230.84 <nodes> 80:39576/TCP 2h

svc/wordpress 10.254.182.104 <nodes> 80:30009/TCP 37s

NAME READY STATUS RESTARTS AGE

po/mysql-wp-7mdrm 1/1 Running 0 9m

po/nginx 1/1 Running 0 4h

po/nginx2-1cx68 1/1 Running 0 2h

po/nginx2-41ppf 1/1 Running 0 2h

po/nginx2-9j6g4 1/1 Running 0 2h

po/nginx2-ftqt0 1/1 Running 0 2h

po/nginx2-w9twq 1/1 Running 0 2h

po/wordpress-6ztxf 1/1 Running 2 1m

po/wordpress-m73sv 1/1 Running 1 1m

7. Test by accessing the web page

5.7 Deploying Zabbix on k8s

Upload the required images in advance

1. Write mysql-rc

[root@k8s-master zabbix]# cat mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql-server
spec:
  replicas: 1
  selector:
    app: mysql-server
  template:
    metadata:
      labels:
        app: mysql-server
    spec:
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        args:
        - --character-set-server=utf8
        - --collation-server=utf8_bin
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'

2. Write mysql-svc

[root@k8s-master zabbix]# cat mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql-server
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql-server

3. Write zabbix_java-rc

[root@k8s-master zabbix]# cat zabbix_java-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: zabbix-java
spec:
  replicas: 1
  selector:
    app: zabbix-java
  template:
    metadata:
      labels:
        app: zabbix-java
    spec:
      containers:
      - name: mysql        # container name carried over from the mysql manifest; any valid name works
        image: 10.0.0.11:5000/zabbix-java-gateway:latest
        ports:
        - containerPort: 10052

4. Write zabbix-java-svc

[root@k8s-master zabbix]# cat zabbix_java-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: zabbix-java
spec:
  ports:
  - port: 10052
    targetPort: 10052
  selector:
    app: zabbix-java

5. Write zabbix_server-rc

[root@k8s-master zabbix]# cat zabbix_server-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: zabbix-server
spec:
  replicas: 1
  selector:
    app: zabbix-server
  template:
    metadata:
      labels:
        app: zabbix-server
    spec:
      containers:
      - name: zabbix-server
        image: 10.0.0.11:5000/zabbix-server-mysql:latest
        ports:
        - containerPort: 10051
        env:
        - name: DB_SERVER_HOST
          value: '10.254.10.53'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'
        - name: ZBX_JAVAGATEWAY
          value: 'zabbix-java'    # must match the name of the zabbix-java service defined above

6. Write zabbix_server-svc

[root@k8s-master zabbix]# cat zabbix_server-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: zabbix-server
spec:
  type: NodePort
  ports:
  - port: 10051
    nodePort: 30010
  selector:
    app: zabbix-server

7. Write zabbix_web-rc

[root@k8s-master zabbix]# cat zabbix_web-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: zabbix-web
spec:
  replicas: 1
  selector:
    app: zabbix-web
  template:
    metadata:
      labels:
        app: zabbix-web
    spec:
      containers:
      - name: zabbix-web
        image: 10.0.0.11:5000/zabbix-web-nginx-mysql:latest
        ports:
        - containerPort: 80
        env:
        - name: DB_SERVER_HOST
          value: '10.254.10.53'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'

8. Write zabbix_web-svc

[root@k8s-master zabbix]# cat zabbix_web-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: zabbix-web
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30011
  selector:
    app: zabbix-web

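5.8 Deploying zabbix on k8s, improved version

Rework the zabbix deployment into mysql + (zabbix-java-gateway && zabbix-server && zabbix-web), with the latter three running in a single pod

1. Write the mysql-rc config file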

[root@k8s-master zabbix2]# cat mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  namespace: zabbix
  name: mysql-server
spec:
  replicas: 1
  selector:
    app: mysql-server
  template:
    metadata:
      labels:
        app: mysql-server
    spec:
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        args:
        - --character-set-server=utf8
        - --collation-server=utf8_bin
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'

2. Write the mysql-svc config file

[root@k8s-master zabbix2]# cat mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  namespace: zabbix
  name: mysql-server
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql-server

3. The combined service pod (very important)

[root@k8s-master zabbix2]# cat zabbix_server-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  namespace: zabbix
  name: zabbix-server
spec:
  replicas: 1
  selector:
    app: zabbix-server
  template:
    metadata:
      labels:
        app: zabbix-server
    spec:
      nodeName: 10.0.0.13
      containers:
      ### java-gateway
      - name: zabbix-java-gateway
        image: 10.0.0.11:5000/zabbix-java-gateway:latest
        # imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 10052
      ### zabbix-server
      - name: zabbix-server
        image: 10.0.0.11:5000/zabbix-server-mysql:latest
        # imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 10051
        env:
        - name: DB_SERVER_HOST
          value: 'mysql-server'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'
        - name: ZBX_JAVAGATEWAY
          value: '127.0.0.1'
      ### zabbix-web
      - name: zabbix-web
        image: 10.0.0.11:5000/zabbix-web-nginx-mysql:latest
        # imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        env:
        - name: DB_SERVER_HOST
          value: 'mysql-server'
        - name: MYSQL_DATABASE
          value: 'zabbix'
        - name: MYSQL_USER
          value: 'zabbix'
        - name: MYSQL_PASSWORD
          value: 'zabbix_pwd'
        - name: MYSQL_ROOT_PASSWORD
          value: 'root_pwd'

4. Write the zabbix_server-svc config file

[root@k8s-master zabbix2]# cat zabbix_server-svc.yml

apiVersion: v1
kind: Service
metadata:
  namespace: zabbix
  name: zabbix-server
spec:
  type: NodePort
  ports:
  - port: 10051
    nodePort: 30010
  selector:
    app: zabbix-server

5. Write the zabbix_web config file

[root@k8s-master zabbix2]# cat zabbix_web-svc.yml

apiVersion: v1
kind: Service
metadata:
  namespace: zabbix
  name: zabbix-web
spec:
  type: NodePort
  ports:
  - port: 80
  selector:
    app: zabbix-server

6. Because this version relies on the DNS service, and the architecture has been optimized (one pod carrying three containers), create everything in one go (the zabbix namespace has to exist first), then log in through the web page and test
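Since this version reaches mysql by service name (`DB_SERVER_HOST: 'mysql-server'`) instead of a fixed cluster IP, it helps to see which DNS names a service is normally reachable under. The Python sketch below only builds the conventional names; it performs no lookup, the function name `service_dns_names` is made up, and `cluster.local` is an assumption (it is the usual default cluster domain, but a cluster may be configured differently).

```python
def service_dns_names(name, namespace, cluster_domain="cluster.local"):
    """Return the conventional DNS names of a service, from shortest to fully qualified."""
    return [
        name,                                            # resolves only inside the same namespace
        f"{name}.{namespace}",                           # resolves from other namespaces too
        f"{name}.{namespace}.svc.{cluster_domain}",      # fully qualified name
    ]


for dns_name in service_dns_names("mysql-server", "zabbix"):
    print(dns_name)
```

The short name works here because the zabbix pod and the mysql-server service live in the same `zabbix` namespace; from another namespace one of the longer forms would be needed.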
